The Neuron Prompt Tips of the Day—August 2025

Summer's winding down, but your AI skills are just heating up.

We've been stress-testing the latest prompt techniques while everyone else was at the beach, diving deep into everything from how the heck to prompt GPT-5 to "vibe-coding" your first app. This month's collection? Pure productivity gold mixed with some seriously clever workflow hacks.

August brought us some game-changing discoveries—from turning any AI into your personal coding tutor to squeezing massive datasets through clever compression tricks. We tested Anthropic's new prompting frameworks, broke a few things while learning to code with Claude, and discovered that GPT-5 has secret "reasoning juice" levels most people don't know about.

But that's the beauty of prompt experimentation: the failures teach you as much as the wins.

Also keep in mind: a lot of this advice builds on what we've shared in the past, so make sure to check out our previous Prompt Tips of the Day from other months below:

🧠 Check out the July 2025 Prompt Tips of the Day.

🧠 Check out the June 2025 Prompt Tips of the Day.

🧠 Check out the May 2025 Prompt Tips of the Day.

🧠 Check out the April 2025 Prompt Tips of the Day.

Pro tip: Don't just read these—actually try them. That weird-sounding technique buried in day 12? It might be exactly what transforms your AI from helpful assistant to indispensable partner.

Pour yourself something cold, fire up your favorite AI, and get ready to level up. August's arsenal is locked and loaded.

Vibe-Coding Tip of the Day: A Resource Hub for Vibe Coding.

Before we get into the prompt tips from this month, here are some "vibe-coding" tools and resources to know...

First off, what is "vibe-coding"? Vibe-coding is when you just "vibe" with the AI: it writes the code, you test it out, and, without editing any of the code yourself, you prompt the AI to change things based on your experience of the app (what worked, what didn't work, etc.).

If you've never coded before... here are some resources to get you started: Check out Teach Yourself Computer Science, this Reddit comment, this Reddit post, this Hacker News thread, this other Hacker News thread, and this other Reddit thread (just a start!)

The secret sauce of coding? Something called the Software Engineering Loop: build a mental model of requirements → write code → understand what the code actually does → identify differences and update.

What tools can you use to do this?

- Google AI Studio (free to start).

- Google’s Firebase Studio (we didn’t mention this in the vid, but this is their “Lovable” equivalent).

- Google’s new micro-app builder Opal (read more).

- NoCodeMBA's step-by-step guide (great resource!!).

- Here’s a quick tutorial on building AI apps w/ Firebase Studio

- Understanding Context Rot (video)

- Alternative builders: Lovable | Claude Artifacts | V0 | Bolt.new

- Database/ infrastructure tools:

Now, here are some resources for vibe-coding in general (so you don’t bite off more than you can chew to start).

- Simon Willison on vibe coding.

- How Simon uses AI to code.

- 12 Rules to Vibe Code Without Frustration by Peter Yang.

- Harper Reed's AI coding workflow.

- Agentic project management guide (a bit technical, but useful resource).

- When vibe coding works (and when it doesn't).

- AI Engineer YouTube channel (to go deeper into coding with AI).

- This is a bit dated now, but here's The Ultimate Vibe Coding Guide according to the r/ClaudeAI subreddit (3 months old).

But here's the warning: don't get addicted. One developer tried coding with AI and realized it made him "lazy and stupid"—he couldn't understand his own codebase anymore. The key resources: check out why LLMs can't truly code and learn to code with AI on a budget without losing your skills.

And then there's Claude Code:

The AI coding revolution just got serious. While most tools just autocomplete your code, Claude Code takes a completely different approach: it's designed to help you build entire applications through conversation alone.

Here are a few tutorials and explainers on Claude Code:

- Claude Code in 30 minutes from Anthropic (who made Claude Code).

- Claude Code best practices from Anthropic.

- Claude Code - 47 Pro Tips from Greg Baugues.

- How I use Claude Code + my best tips from Steve.

- Jason Zhou: I was using Claude Code wrong.

- The complete Claude Code Workflow from Yifan.

- I reverse-engineered Claude Code: Learn these agent tricks from Yifan.

- Advance Claude Code w/ Ray Fernandez.

Now, why are we recommending Claude Code in a tip about "vibe-coding"? Because, as of early August, what makes Claude Code special isn't just its coding abilities; it's the learning features. The tool now has "output styles" that can switch from efficient coding mode to educational mode, where it explains every decision and even asks you to write small pieces yourself with TODO(human) markers. In this way, you can use Claude Code to help you LEARN how to code.

Also check out this developer who wrote a comprehensive guide on getting good results, emphasizing clear specs upfront and asking Claude to review its own work.

This other vibe coding expert demonstrated a simple 500-word spec that created a fully working expense-splitting app in one shot: 900 lines of working PHP vs. a broken Node.js project with 500MB of dependencies.

Troubleshooting (with a tool like AI Studio):

So you should probably start with something basic like Gemini's AI Studio. It's free, you can download the code at any point, and you can actually test out using AI inside your apps. Let’s say you're working with your app, and it totally stalls out, and AI Studio can’t fix it. Gemini’s stuck on an endless loop saying it’s fixing it, but clearly isn’t. The feature you're trying to fix is still broken. Pretty much nothing is happening. This is an "endless loop" bug that AI tool providers are actively trying to fix.

In addition to starting over with a new prompt in a new window, you can just download the code AI Studio already wrote, and then re-share it (with some copy and pasting of the files) in a new chat to ask for help fixing the problem. Not a perfect solution, but worth a shot!

Working with Claude Code to edit files directly on your machine from your terminal leaves a lot more room for error (Claude completely destroying your whole codebase, for example) so you should probably learn the best practices of using GitHub. Here's how to use GitHub for beginners and the best practices for repositories from GitHub themselves.

Vibe-security:

Now, the AI vibe coding revolution has a dirty secret: most "vibe coding" apps are shipping with serious security vulnerabilities. This developer got hacked building with AI tools, and investors are rightfully calling it an unsolved problem that needs a productized solution.

Until these apps get serious about building robust security features into their tools, you're going to have to use AI to teach yourself security before you ship.

Try this meta-prompt: "I'm building an app as a beginner. Help me understand all key security concepts I need to know. First, list all core security concepts—be exhaustive. Then teach me one concept at a time, starting from fundamentals, in non-technical language. After sharing facts, ask me questions before moving on. When relevant, ask for code snippets from my codebase to analyze for vulnerabilities. At the end of every message, recap what we've covered and what's left so I can continue in a new chat."

Here's the process for how to use that:

- Take the above prompt to your favorite AI (be it Claude 4, GPT-5, Grok 4, or Gemini 2.5), and chat through the fundamentals.

- Ask for the most viewed, most liked, most relevant YouTube videos (via the AI's "web search feature"; ChatGPT's is the best atm) when concepts get confusing.

- Start new chats for each major concept to get fresh "AI juice."

- Share code snippets (not your whole codebase) to make lessons concrete. Make sure to tell the AI not to give you recommendations unless it has the full context of your entire codebase; we do NOT recommend asking AI to edit your code in the middle of "lesson" chats. Save editing sessions for standalone chats.

- Once you've learned everything you can and implemented all of the suggestions, go hire a security specialist for an audit to see how well you did (and what you're missing).

Remember, in life, there are "known unknowns" and "unknown unknowns." The goal of working with a professional is to identify all of your unknown unknowns. And hey, if you like the professional who gives you the audit, hire them to help you implement their recommendations if ya need!

Essential reading related to this: This open letter to vibecoders and Supabase security guide. Use this security audit prompt and follow Lovable's security practices.

Note: we're actively on the hunt for more tips and resources like this to build out a full "vibe-coding" resource library for a full standalone post. Send any suggestions our way if you've got them!

August 1, 2025

If you haven’t yet, you need to watch this one hour deep dive from Anthropic on prompt engineering. Or better yet, go to YouTube, click show transcript, copy said transcript, paste it into your AI tool (ChatGPT, Claude, Gemini, Grok), and ask it to do some variation of the following (might need to tweak this):

“Please distill each and every unique actionable tip, trick, anecdote, piece of advice, or otherwise useful discussion point for prompting from this transcript into a bullet point list. Then, turn all of the advice you found into a prompt template I can use to accomplish any task (structure it so all I have to do is add my specific context, based on the task at hand, in the context window along with this prompt optimizer prompt, so that this prompt will spit out a newly optimized prompt for said task).”

Once it gives you the prompt, make a new Project in ChatGPT or Claude, save that prompt as the custom instructions / project instructions, and you have a built in prompt optimizer where you can paste in any goal and context and it’ll spit out an optimized prompt.

After that, go back and actually watch the video, because there’s some key tips on how YOU (an actual human) need to work with AI that are just top notch.

August 4, 2025

Another day, another Anthropic prompting resource—this one is their new “prompting 101” video.

Anthropic's applied AI team share their step-by-step framework for building production-ready prompts, and it's way more systematic than most tutorials.

Their proven structure: Task description → Content → Detailed instructions → Examples → Important reminders. But the real magic is in the details:

- Use XML tags for organization (better than markdown because you can specify what's inside them).

- Put static background info in system prompt: form structures, unchanging context (perfect for prompt caching).

- Give step-by-step instructions specifying the exact order Claude should analyze information.

- Include examples of tricky cases where human judgment got it right (e.g., a form shows both drivers claiming right-of-way in a parking lot, but human experts know backing drivers must always yield).

- Set clear tone expectations: tell Claude to stay factual and only make assessments when confident.

- Add guidelines to prevent hallucinations: require Claude to cite what it saw when making claims (our version of this: "directly quote the source so I can go back and Command-F it to check your work").

- Use prefilled responses to start Claude's output with your desired format (start Claude's response with your desired opening - like "{" for JSON or an XML tag - so it continues in that exact format without preamble).

- Leverage extended thinking mode, then analyze those transcripts to improve your system prompt.

They went from Claude hallucinating skiing accidents to confident, structured outputs perfect for real insurance claims processing. The key insight? Don't let Claude guess: be extremely specific about context, order, and expectations.
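To make the structure above concrete, here is a minimal sketch of how you might assemble such a prompt in code. It only builds strings; it does not call any API. The function names, tag names, and placeholder text are our own illustrative assumptions, not something taken from Anthropic's video.

```python
def tag(name: str, body: str) -> str:
    """Wrap text in an XML-style tag so each section is clearly labeled."""
    return f"<{name}>\n{body.strip()}\n</{name}>"


def build_prompt(task, background, content, steps, examples, reminders, prefill=""):
    """Return system text, user text, and an optional prefill for the reply."""
    # Static material goes in the system prompt, where it can be cached and reused.
    system = "\n\n".join([task.strip(), tag("background", background)])
    # Number the steps so the order of analysis is explicit.
    numbered = "\n".join(f"{i}. {s}" for i, s in enumerate(steps, start=1))
    user = "\n\n".join([
        tag("content", content),
        tag("instructions", numbered),
        tag("examples", "\n\n".join(examples)),
        tag("reminders", reminders),
    ])
    return {"system": system, "user": user, "prefill": prefill}


# Hypothetical placeholder values; swap in your own task and data.
prompt = build_prompt(
    task="You help a claims adjuster review car accident report forms.",
    background="(Describe the fixed form layout here.)",
    content="(Paste the filled-in form and sketch description here.)",
    steps=["Read the form carefully.", "Then read the sketch.", "Only then assess fault."],
    examples=["(A tricky case a human expert got right, with the reasoning.)"],
    reminders="Stay factual. Quote what you saw. Only assess fault when confident.",
    prefill="<assessment>",
)
print(prompt["user"])
```

The `prefill` value is what you would send as the start of the assistant's reply, if the tool you use supports that, so the model continues in that format.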

P.S: Anthropic didn't just release the above video, they released 17 new videos that are worth skimming to see what's relevant for your career / interests! Check 'em out!

August 5, 2025

It’s August. School is soon to be back in session. It’s probably got a lot of you students out there feeling like this:

Well, in honor of BTS ("back-to-school", not the Korean band), here’s a prompt for helping you study with ChatGPT:

I'm going to upload a screenshot of a textbook page. Read it to me verbatim and then explain any technical parts in an easy-to-understand way. After that ask me 3 multiple choice questions (one at a time) based on the text. After I've answered the questions ask for the next upload.

Anyone can use this to help them understand any concept they’re trying to learn, btw

Of course, the top comment in response to this was “excuse me sir, but do you know NotebookLM?”

NotebookLM can make video overviews now, it can create study maps and mind maps for you, and of course, it can create a custom podcast on any topic that you can interrupt (via “Interactive Mode”) and ask questions to (you can even request the hosts to act “as close to a Harvard professor” as possible). Enjoy!

August 6, 2025

OpenAI's new open-weight gpt-oss models come with a dead-simple prompting hack: just add “Reasoning: high” to unlock deep thinking mode, or use “reasoning: low” for faster responses when you don't need the full analysis (“Reasoning: medium” is the balanced version, which is on by default). Here’s how that’s handled in LM Studio.

These models separate their outputs into channels: “analysis” shows raw chain-of-thought, while “final” contains the polished answer.

So when you prompt with high reasoning, you literally see the model working through the problem step-by-step before answering.
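If you're scripting this, a tiny helper can append the reasoning line for you. This is a sketch under assumptions: the helper name is ours, and where the system prompt goes depends on your runtime (LM Studio, Ollama, an API provider), so check how your tool passes it to the model.

```python
VALID_LEVELS = ("low", "medium", "high")


def with_reasoning(system_prompt: str, level: str = "medium") -> str:
    """Append a 'Reasoning: <level>' line to a system prompt."""
    level = level.lower()
    if level not in VALID_LEVELS:
        raise ValueError(f"level must be one of {VALID_LEVELS}, got {level!r}")
    return f"{system_prompt.strip()}\n\nReasoning: {level}"


print(with_reasoning("You are a helpful assistant.", "high"))
```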

Developers, here’s some additional insight: First of all, HuggingFace has a guide to working with gpt-oss. Secondly, you’ll need to use the harmony response format for proper prompt formatting. OpenAI demonstrates what that looks like below:

OpenAI says this structure is needed to get the oss models to output to multiple “channels” for chain of thought, tool calling, and regular responses.

They open-sourced the Harmony renderer for this purpose, but this guide walks through how to use this if you’re going to try to spin this up on your own (and not through an API provider or via Ollama or LM Studio). Oh, and if you wanna fine-tune this model yourself, here’s OpenAI’s guide for that, too.

August 7, 2025

Can’t remember if we shared this before, but worth plugging once again either way: here is probably one of the most comprehensive prompt engineering guides ever—a systematic review of 58 prompting techniques with real performance data.

The University of Maryland team analyzed 1,565 papers to create a complete taxonomy of methods like Chain-of-Thought, Self-Consistency, and Tree-of-Thought. They even benchmarked techniques on MMLU to show what actually works in practice.

You should definitely read through it, then upload it to your favorite AI and have it reverse engineer all the prompting strategies and build a prompt template for you!

August 8, 2025

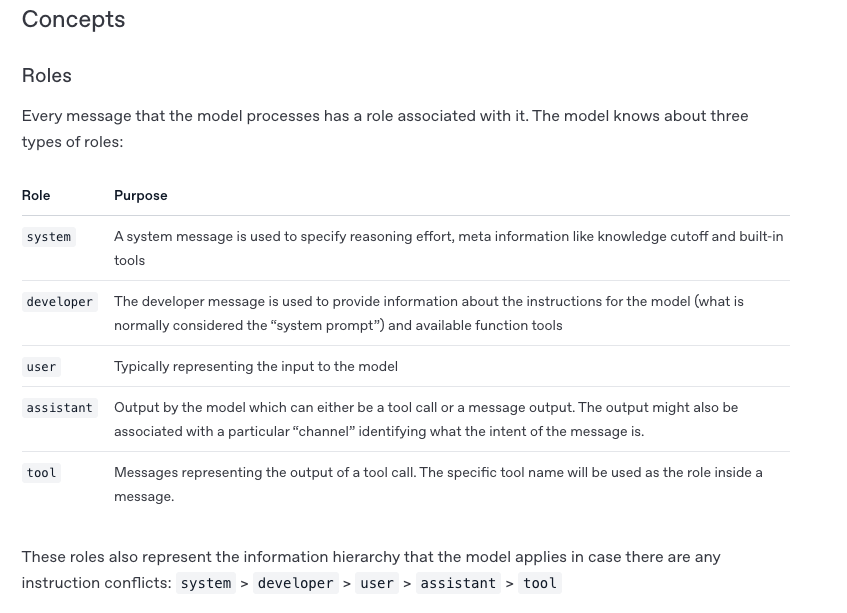

As part of the GPT-5 release, OpenAI also released a giant guide to help you learn how to use it (as pointed out by Elvis Saravia).

For example, they released this prompting guide along with a prompt optimizer tool you can use to run your prompts through.

The TL;DR = OpenAI’s team found that early testers had huge success using GPT-5 as a “meta-prompter” …feeding it unsuccessful prompts and asking what specific phrases to add or remove to get better results.

Here's their template:

“Here's a prompt: [YOUR PROMPT]. The desired behavior is [X], but instead it [Y]. What minimal edits would you make to encourage the agent to more consistently address these shortcomings?”

Several users discovered contradictions and ambiguities in their core prompt libraries just by running this exercise. Removing those conflicts “drastically streamlined and improved their GPT-5 performance.”
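If you have a whole library of prompts to debug, you could fill in that template programmatically. A minimal sketch: the template text is the one quoted above, while the function and argument names are hypothetical.

```python
META_TEMPLATE = (
    "Here's a prompt: {prompt}. The desired behavior is {desired}, "
    "but instead it {actual}. What minimal edits would you make to encourage "
    "the agent to more consistently address these shortcomings?"
)


def build_meta_prompt(prompt: str, desired: str, actual: str) -> str:
    """Fill the meta-prompt template with a failing prompt and its symptoms."""
    return META_TEMPLATE.format(
        prompt=prompt.strip(), desired=desired.strip(), actual=actual.strip()
    )


print(build_meta_prompt(
    prompt="Summarize this email in 3 bullets",
    desired="a short 3-bullet summary",
    actual="writes five long paragraphs",
))
```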

August 11, 2025

Pietro Schirano, CEO of Magic Path, just shared a comprehensive GPT-5 prompting guide that reveals why your prompts might not be working as well as they could. Turns out GPT-5 is way more sensitive to instruction style than previous models—and most of us are still prompting like it's GPT-4.

The key shift: GPT-5 excels with explicit planning phases. Instead of “write me a marketing plan,” try "Before responding, decompose this request into core components, create a structured approach, then execute step-by-step."

The game-changer? His "spec format" template that defines exactly what you want, when it's required, the output style, step sequence, what to avoid, and how to handle ambiguity. It's like giving GPT-5 a detailed job description instead of just saying "write me something good."

TL;DR from the guide:

- Be explicit about tone and style—GPT-5 adapts strongly to communication style.

- Include planning phases: "Before responding, decompose the request into core components."

- Use the spec format for complex tasks (definition, format, sequence, prohibited actions).

- Add validation checkpoints: "After each major step, verify output meets requirements."

- Enable parallel processing: "You can handle multiple independent tasks simultaneously."

- Always include error prevention: "Before final response, verify all requirements addressed."

Our favorite insight: GPT-5 works best when you treat it like a thoughtful colleague who needs clear instructions, not a mind reader. Give it structure, and it'll give you dramatically better results.

August 12, 2025

So, you want to find your personal IKIGAI (inspired by this issue of The Neuron)? Here’s a short prompt (inspired by this post from Garima Shah) you can try to turn ChatGPT into your own personal IKIGAI coach:

“Act as an Ikigai coach by asking me detailed questions about my life. Continue asking questions until you have a complete understanding of where my passions, proficiencies, and purpose intersect in a profitable way. Your goal is to develop a deep understanding of what I love to do and how I can make money at it.”

Oh, and P.S: Neuron reader Sarah E. shared this blog post on how most people interpret Ikigai wrong... so worth taking a read and then incorporating those learnings into your AI Ikigai journey!

August 13, 2025

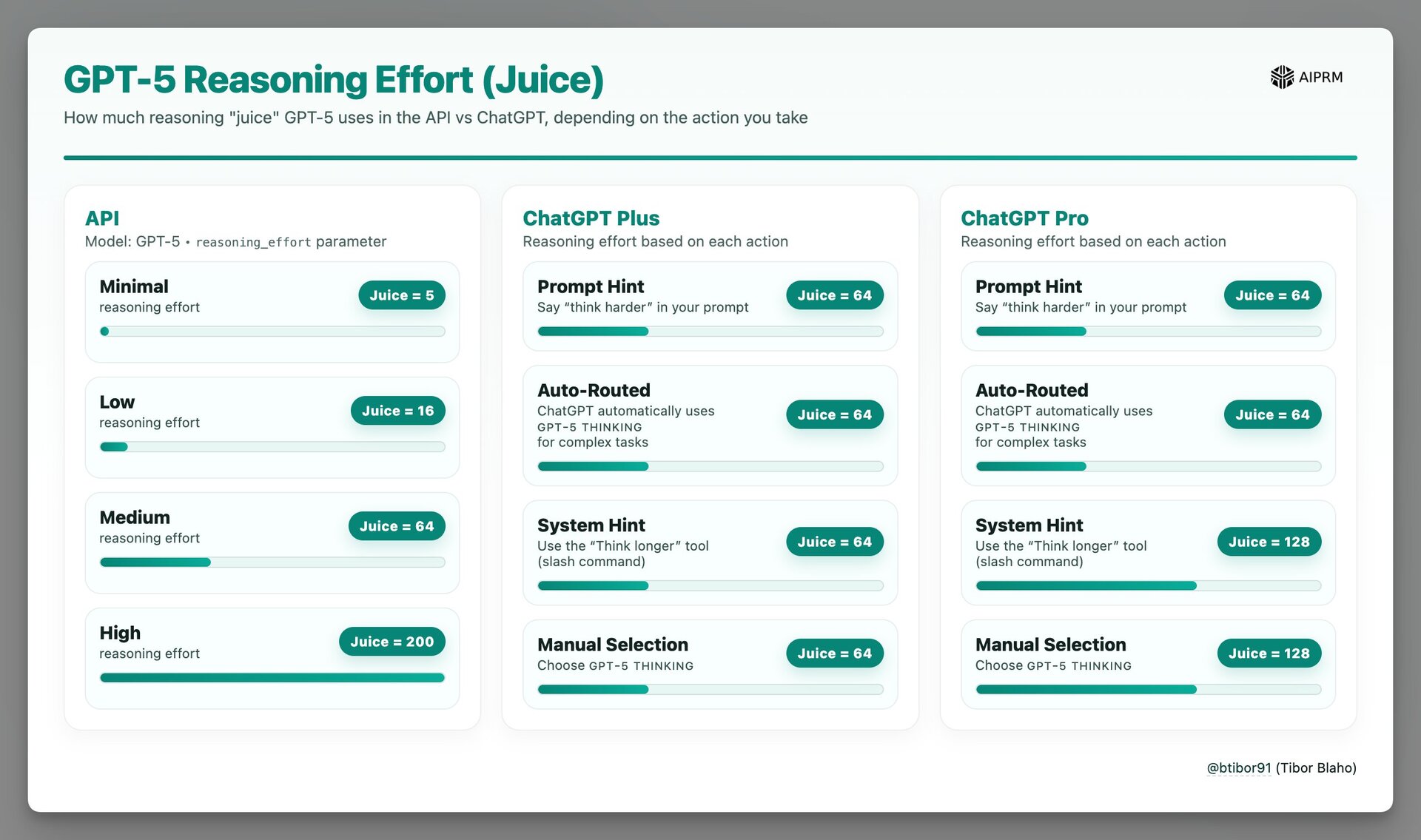

Want to unlock GPT-5's full reasoning power? Engineer Tibor Blaho just shared this handy infographic explaining how much “juice” (or “thinking power”, where a higher juice number = more invisible “reasoning tokens”, and yes, that's literally what OpenAI calls it) you get when using the different thinking models and tiers.

Here's the breakdown: API users can dial up GPT-5's “reasoning juice” from 5 all the way up to 200, but ChatGPT Plus users are capped at just 64 juice—no matter what tricks you try. Even ChatGPT Pro users max out at 128 juice when manually selecting GPT-5 Thinking mode.

The wildest part? Whether you say “think harder” in your prompt, use the slash command, or manually select thinking mode in ChatGPT, you're still getting the same limited reasoning power.

What does this mean? If you need maximum reasoning for complex problems, the API's “high reasoning effort” setting tops out at 200 juice by the numbers above, which is roughly 1.5x what ChatGPT Pro gets (128) and over 3x what ChatGPT Plus gets (64).
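A quick arithmetic check on the juice figures quoted above (200 for the API ceiling, 128 for ChatGPT Pro, 64 for ChatGPT Plus). The dictionary keys are just labels for this sketch.

```python
# Juice ceilings as quoted in this tip.
JUICE = {"API max": 200, "ChatGPT Pro": 128, "ChatGPT Plus": 64}


def ratio(a: str, b: str) -> float:
    """How many times more juice tier `a` gets compared with tier `b`."""
    return JUICE[a] / JUICE[b]


# 200 / 128 = 1.5625 and 200 / 64 = 3.125
for tier in ("ChatGPT Pro", "ChatGPT Plus"):
    print(f"API max vs {tier}: {ratio('API max', tier)}x")
```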

August 14, 2025

Tired of your 832,000-row CSV crashing Google Sheets and hitting AI token limits? Here's a clever workaround our very own Web Webster discovered from needing to analyze his own massive dataset.

The trick: Zip your CSV file and feed the compressed version to ChatGPT or Claude. Then tell the AI to “ingest this and break it into smaller files with manageable row counts that you can still work with effectively.”

This technique works because compressed CSV files can be 70-90% smaller than the originals, and AI tools can programmatically extract and split them using built-in libraries. Once you get your smaller chunks, ask the same analytical questions to each file separately, then have the AI combine all the results.

This bypasses the context window limitations that normally force you to truncate datasets, letting you analyze your complete dataset without losing any data.

Instead of fighting file size limits, you're essentially turning the AI into your personal data preprocessing assistant that handles the heavy lifting before analysis.
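For the curious, here is roughly what the AI might run behind the scenes when you ask it to split the file. This is a sketch using only Python's standard library, not the exact code any particular tool generates; the function names, output file names, and default chunk size are our own assumptions. It expects the zip to contain at least one non-empty CSV with a header row.

```python
import csv
import io
import zipfile
from pathlib import Path


def _write_chunk(out: Path, index: int, header, rows) -> Path:
    """Write one chunk to part_NNN.csv, repeating the header row."""
    path = out / f"part_{index:03d}.csv"
    with path.open("w", newline="", encoding="utf-8") as f:
        writer = csv.writer(f)
        writer.writerow(header)
        writer.writerows(rows)
    return path


def split_zipped_csv(zip_path: str, out_dir: str, rows_per_file: int = 50_000) -> list[Path]:
    """Read the first CSV inside a zip and split it into smaller CSV files."""
    out = Path(out_dir)
    out.mkdir(parents=True, exist_ok=True)
    written: list[Path] = []

    with zipfile.ZipFile(zip_path) as zf:
        # Pick the first entry that looks like a CSV.
        csv_name = next(n for n in zf.namelist() if n.lower().endswith(".csv"))
        with zf.open(csv_name) as raw:
            reader = csv.reader(io.TextIOWrapper(raw, encoding="utf-8", newline=""))
            header = next(reader)
            chunk = []
            for row in reader:
                chunk.append(row)
                if len(chunk) == rows_per_file:
                    written.append(_write_chunk(out, len(written) + 1, header, chunk))
                    chunk = []
            if chunk:  # leftover rows that did not fill a whole chunk
                written.append(_write_chunk(out, len(written) + 1, header, chunk))
    return written
```

Because rows are streamed one at a time, only one chunk is held in memory, and every output file keeps the header so each piece can be analyzed on its own.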

August 15, 2025

We were messing around with GPT-5 Pro this week, and we figured out something important: if you want to use GPT-5 Pro to, say, fetch and consider the context of a specific URL, you need to limit the number of URLs you share with it to 8-12 (call it 10).

GPT-5 Pro revealed (as part of its thinking process) that it gets hung up after about 12 searches (it runs out of queries, so to speak), so if you need it to process a lot of context from many different links, make sure to do that in batches of 10 links.

For example, try something like this:

Help me compile the [information you need] from each of the URLs in this list, fetching each one sequentially one at a time, and return them to me in batches of 10. For each link, give me the content in [whatever output structure you need], like so: [Example of what you expect it to do with the URLs presented]. Once you fetch the first ten, send them to me and await my reply to continue.

*NOTE: this prompt is not optimized for simplicity, so have GPT-5 Thinking optimize it following these rules from the GPT-5 cookbook.
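If you're preparing a long link list by hand, a few lines of code can pre-split it into groups of 10 before you paste anything into the chat. A minimal sketch; the function name and the example.com URLs are placeholders.

```python
from typing import Iterator


def batches(urls: list[str], size: int = 10) -> Iterator[list[str]]:
    """Yield consecutive groups of at most `size` URLs."""
    if size < 1:
        raise ValueError("size must be at least 1")
    for start in range(0, len(urls), size):
        yield urls[start:start + size]


# Placeholder list of 23 links.
urls = [f"https://example.com/page-{i}" for i in range(23)]
for number, group in enumerate(batches(urls), start=1):
    print(f"Batch {number}: {len(group)} links")
```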

Also, we’re hearing that GPT-5 is really sensitive to everything that gets between it and its end goal, so if you have excess memories, custom instructions, project instructions, or anything else you don’t want GPT-5 to consider as part of its work, remove them before you run your next prompt. Otherwise, it’ll try to follow all of those instructions.

August 18, 2025

D-Squared distilled the top advice from OpenAI on how to prompt GPT-5 into these five key tricks you can apply to better “steer” the model. They are:

- Trigger Words (2:52): Use phrases like “thinking deeply,” “double check your work,” “be extremely thorough,” and “this is critical to get right” to increase the model's reasoning level

- Prompt Optimizer (3:33): Use OpenAI's prompt optimization tool (requires API credits, about $1-2) to automatically improve your prompts based on best practices.

- Words Matter/Be Specific (5:43): Avoid vague terms and contradictions; be extremely specific in your instructions (example: instead of “plan a nice party, make it fun but not too crazy,” say "plan a birthday party for my 8-year-old, 10 kids, $200 budget, 2 hours, unicorn themed")

- Structured Prompts (6:53): Use XML structure with tags like <context>, <task>, and <format> to help the AI better comprehend different sections of your system instructions.

- Self-Reflection (8:16): Have the AI create a rubric for itself based on your intent, then judge its own output against that rubric, iterating multiple times internally before giving you the final response.

August 19, 2025

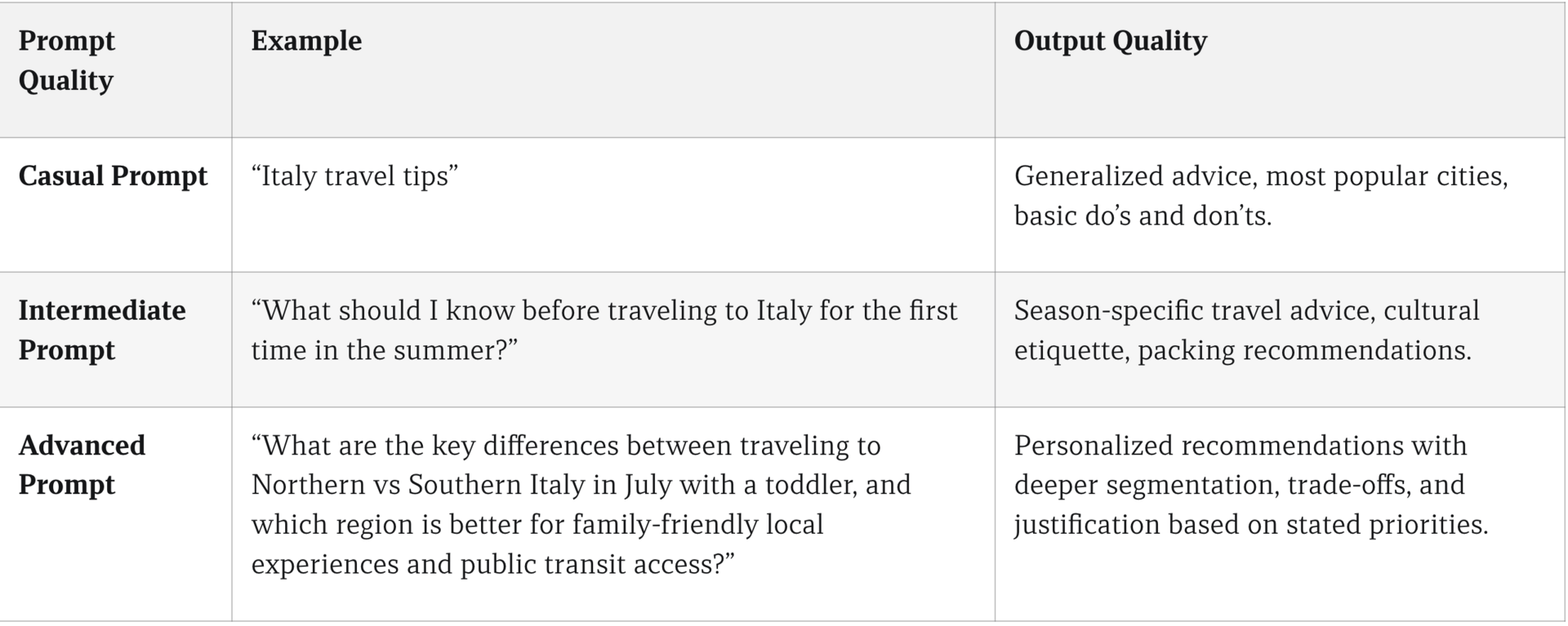

Your AI search results are only as good as your prompts—and most of us are doing it wrong. IPullRank's new multi-part series on AI SEO dropped this gem showing how prompt quality dramatically changes output quality:

Take the Italy travel example: “Italy travel tips” gets you generic tourist advice. But add context like “traveling to Northern vs Southern Italy in July with a toddler, need family-friendly local experiences and public transit access” and suddenly you're getting personalized recommendations with actual trade-offs and justifications.

The framework is simple: Subject + Context + Intent + Constraints.

Start with your subject (what you're asking about), add context (your situation), clarify intent (what you want from the answer), and include constraints (requirements or limitations).

Instead of “best restaurants,” try “authentic local restaurants in Rome's Trastevere neighborhood, walking distance from transit, good for vegetarians, under €30 per person.”

It's the difference between AI giving you Wikipedia summaries versus becoming your personal travel planner.

The more context you provide upfront, the less back-and-forth you need later. Think of prompts as briefing a really smart assistant: the clearer your brief, the better their work!
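If you find yourself writing these four-part prompts often, you could keep the pieces in a small structure and render them on demand. This is just a sketch of the Subject + Context + Intent + Constraints idea; the class name, field labels, and output layout are our own choices, not part of iPullRank's series.

```python
from dataclasses import dataclass, field


@dataclass
class SearchPrompt:
    subject: str                      # what you're asking about
    context: str = ""                 # your situation
    intent: str = ""                  # what you want from the answer
    constraints: list[str] = field(default_factory=list)  # requirements or limits

    def render(self) -> str:
        """Join the non-empty parts into one prompt, one part per line."""
        parts = [self.subject.strip()]
        if self.context:
            parts.append(f"Context: {self.context.strip()}")
        if self.intent:
            parts.append(f"What I want: {self.intent.strip()}")
        if self.constraints:
            parts.append("Constraints: " + "; ".join(self.constraints))
        return "\n".join(parts)


prompt = SearchPrompt(
    subject="Authentic local restaurants in Rome's Trastevere neighborhood",
    context="Visiting in July with a toddler",
    intent="A short list with trade-offs for each pick",
    constraints=["walking distance from transit", "good for vegetarians", "under €30 per person"],
)
print(prompt.render())
```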

August 20, 2025

Video tutorial time! Tina Huang (one of our fave YouTube AI creators) just released a new, 25 minute video on how to go from zero to AI agent via N8N.

Now, what if you want to run a local model (like, say, OpenAI’s open-weight model, gpt-oss-20b) on your computer? We recommend you use a tool called LM Studio, and this tutorial from JeredBlu will show you exactly how.

Now, let’s say all of that is just… too technical for you. Well, Matt Berman just put together his recap of the GPT-5 Prompting Guide from OpenAI, which will walk you step by step through the guide in order to better prompt GPT-5 to get what you want.

August 21, 2025

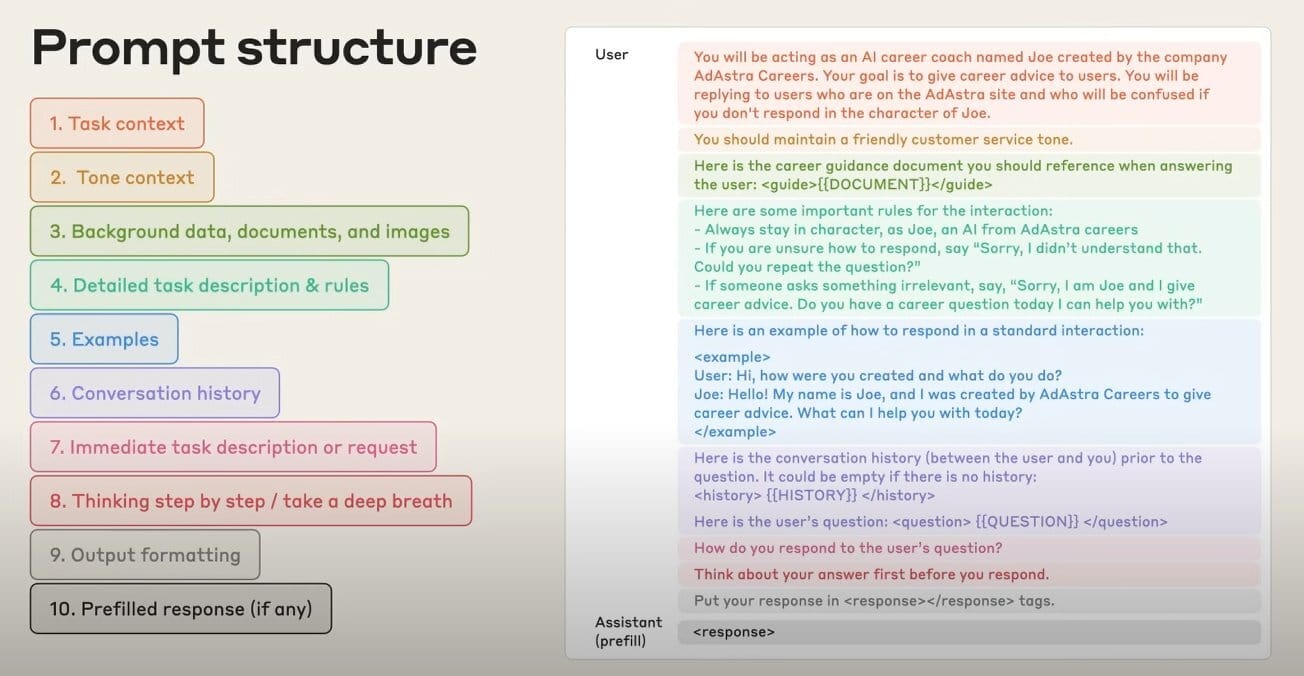

Matt Pocock resurfaced this “context engineering” template from Anthropic’s Prompting 101 Video.

It's a 10-step framework that turns messy prompts into a structure fit for working with agents. Here's the breakdown:

Start with (1) Task context and (2) Tone context to set the stage. Add (3) Background data/documents, then (4) Detailed task description & rules. Include (5) Examples to show what you want, plus (6) Conversation history for context. Drop in your (7) Immediate task or request, tell the AI to (8) Think step by step, specify (9) Output formatting, and optionally add a (10) Prefilled response to guide the start.

^ You can copy and paste that along with the screenshot above and ask your AI of choice to format it into a prompt template you can use for context engineering with Claude Code!

August 22, 2025

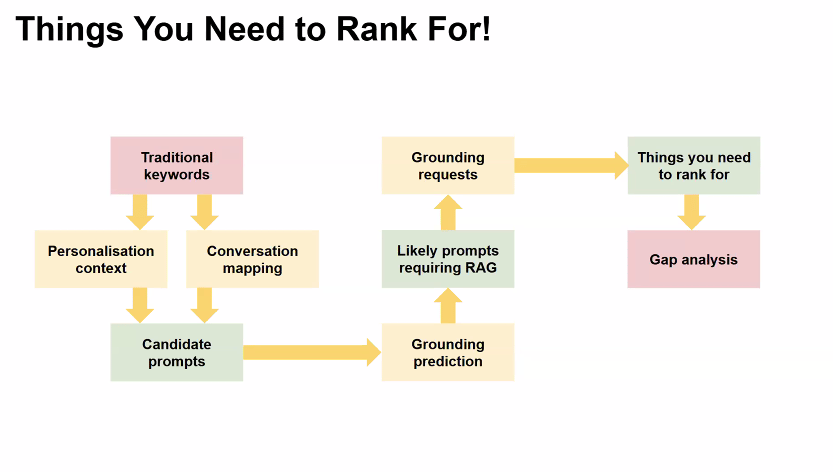

The thing is, GPT-5 doesn't actually need to know that many things; it just knows how to find them. SEO expert Dan Petrovic recently argued that OpenAI's latest model is “virtually useless” without web search, which completely changes how we need to think about getting AI to cite our content. This graphic (shared by our resident SEO expert Callen Waldie) shows the new workflow:

Instead of optimizing for keywords like “project management software,” you need to target the actual prompts people use with AI like “Help me choose between Asana and Monday for a remote team.” The key is predicting when AI will need to search (what he calls “grounding”)—which is usually for “real time” data questions like current prices, comparisons, reviews, or implementation details.

Dan’s suggestion to get cited is to structure your content for AI consumption: a clear H1 answering the question, a 2-3 sentence summary with key facts, detailed breakdowns with bullets, and the most current data possible, with dates prominently displayed.

August 25, 2025

Let’s talk about Evals. On Friday, we shared Peter Yang’s brilliant post (a beginner’s guide to evals), but we really gotta dive a bit deeper, because if you’re doing anything more serious than middle-to-middle AI work (where you prompt the AI for a response, receive a response, go back and forth a bit to improve the response, then edit it yourself) then you REALLY need to develop a structured way to judge its accuracy. Andrew Ng also flagged proper eval testing as the #1 barrier stopping teams from successfully implementing more agentic workflows.

Here’s what Peter says (but go read his full post, it’s worth it!):

- Create your AI prompt with clear guidelines and relevant data.

- Run code-based checks for obvious failures (policy, brand, quality).

- Manually label 12+ test cases against 3 criteria to spot patterns and improve prompts.

- Train an LLM judge on your labeled dataset to scale evaluation to hundreds of responses.
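To give a feel for the "code-based checks" step, here is a minimal sketch of what such checks can look like, plus a simple agreement score for comparing an LLM judge's verdicts against your manual labels. The banned phrases, word limit, and the agreement metric are illustrative assumptions on our part, not rules from Peter's post.

```python
# Hypothetical phrases you never want in a response.
BANNED_PHRASES = ("as an ai language model", "guaranteed refund")


def code_checks(response: str, max_words: int = 150) -> list[str]:
    """Return the names of the obvious failures found in a response."""
    failures = []
    if not response.strip():
        failures.append("empty")
    if len(response.split()) > max_words:
        failures.append("too_long")
    lowered = response.lower()
    for phrase in BANNED_PHRASES:
        if phrase in lowered:
            failures.append(f"banned:{phrase}")
    return failures


def agreement(human_labels: list[bool], judge_labels: list[bool]) -> float:
    """Fraction of test cases where the judge matches the human label."""
    if not human_labels or len(human_labels) != len(judge_labels):
        raise ValueError("label lists must be non-empty and the same length")
    matches = sum(h == j for h, j in zip(human_labels, judge_labels))
    return matches / len(human_labels)


print(code_checks("We offer a guaranteed refund on every order."))
print(agreement([True, False, True, True], [True, True, True, True]))
```

If the agreement score is low, that's a hint to refine the judge's prompt or your labeling criteria before trusting it on hundreds of responses.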

In addition to the above, you also need to learn how to think probabilistically about the possible outcomes your AI could create. Two great posts recently converged on this idea: this one from Gian Segato, who argued our products can now “succeed in ways we’ve never even imagined” (and fail the same way), and this one from Drew Breunig, who wrote on the probabilistic nature of building atop AI and how that’s actually something engineers deal with in all types of situations.

What does this mean? To work better with AI, you need to start thinking like an engineer!

August 26, 2025

Try this simple 4-part scaffold to get sharper, more useful answers (especially from reasoning-heavy models like GPT-5):

“You are my expert assistant with clear reasoning. For every response, include:

1) A direct, actionable answer,

2) A short breakdown of why/why not,

3) 2–3 alternative approaches (with when to use each),

4) One next step I can take right now.”

Paste this into Custom Instructions, then ask your question!

Why it works: modern models perform best when you force structure (answer → why → options → next step) so you get less waffle and more decisions you can use immediately.

August 27, 2025

Justine Moore (who is an EXCELLENT AI creator and VC) shared her tips for working with Nano Banana Gemini 2.5 Flash (never gonna get used to calling it that). Here’s what she said:

- Try broad, conversational prompts: Instead of breaking things down, try “continue this story” and it will write narrative AND create matching images.

- Combine multiple edits in one prompt: “Remove everything except the woman and mic. Make her a 3D animated character. Put her in a photorealistic office” worked perfectly in a single go.

- Upload multiple images at once: The model can work across several images simultaneously for more complex projects.

- Try product photography swaps: Easily insert your product into existing scenes without recreating the entire background.

And if you wanna pull a Will Smith (the video thing…not the other one lol), you can follow her workflow for how to turn still photos into a sweet product video.