We're probably seeing GPT-5 get assembled in real time

A handful of mysterious new AI models just showed up on the crowdsourced AI benchmarking platform LM Arena, and the internet is convinced we’re getting a live preview of OpenAI’s GPT-5.

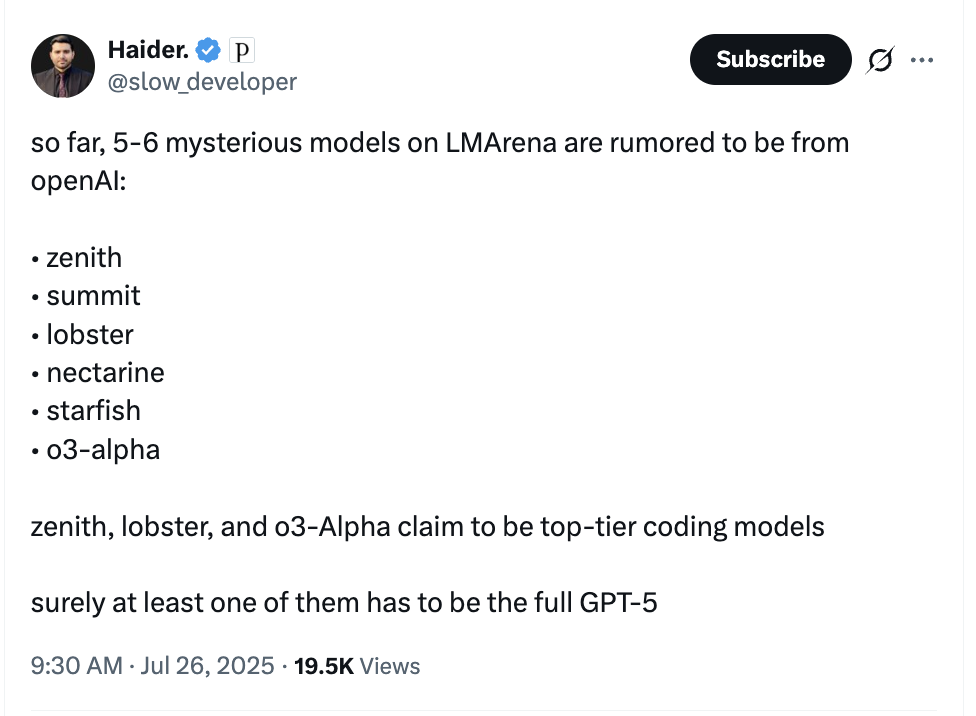

AI sleuths have identified at least six anonymous models (Zenith, Summit, Lobster, Nectarine, Starfish, and o3-alpha) that reportedly outperform nearly every other known model. The prevailing theory is that these are different versions of GPT-5 being secretly (or should we say not-so-secretly) tested before their official release. According to The Verge, which first reported the news along with a potential August launch date, OpenAI plans to launch GPT-5 alongside mini and nano versions that will also be available through its API.

Here's the rumored breakdown of the lineup:

- Zenith: The top-tier, peak performance model. Think of this as the full GPT-5.

- Summit: A general-purpose model that excels at complex reasoning and coding.

- Lobster: A potential “GPT-5 mini” that’s smaller and faster.

- Starfish: One of two likely candidates for OpenAI’s upcoming open-source model.

- Nectarine: Less impressive than the others on the list; its name doesn't fit the others' naming pattern, so it might not even be an OpenAI model.

- o3-alpha: A coding-specific variant.

We've also seen reports that Microsoft has been preparing Copilot for GPT-5.

Early impressions of these models have been striking. AI researcher Ethan Mollick gave Summit a wild prompt: "create something I can paste into p5js that will startle me with its cleverness in creating something that invokes the control panel of a starship in the distant future."

Here's what has been reported so far:

According to The Information, GPT-5 is specifically designed to crush coding tasks. We're talking about the nitty-gritty, practical stuff developers actually need: refactoring massive codebases, debugging complex systems, and handling the kind of real-world programming that makes engineers pull their hair out.

The performance claims are wild. Head-to-head tests reportedly show GPT-5 outperforming Claude Sonnet 4 at coding (which, if you know Claude's reputation among developers, is saying something). Whether it'll be cheaper than Grok 4 or offer a better user experience than Claude Code remains to be seen, but according to people who have benchmarked these models in the arena, the raw coding horsepower seems legit:

- Zenith and Summit are supposedly good at creative writing (another, more esoteric example).

- o3-alpha is very good at code; Starfish seems less so.

- Summit can make pretty compelling SVG animations (compare that with Grok 4).

- Zenith is supposedly the smartest (but again, going off vibes atm).

- Summit can build a Twitch-like front-end website, and it feels better than Lobster at coding, though vibes-wise it seems less capable than o3-alpha.

- And now these models have left the arena, which may mean OpenAI's testing is done.

According to Ethan Mollick, Summit spit out a fully interactive starship control panel with over 2,300 lines of code that worked perfectly on the first try, complete with a warp drive, shields, and voice commands. Mollick shared both the code from his Summit demo and the resulting page.

Others have posted coding examples from two common informal AI tests: a pelican riding a bicycle and a ball bouncing inside a spinning hexagon.

As The Information reports, GPT-5 is a hybrid reasoning model where you control how long it "thinks" before answering. Think of it like a dimmer switch for the model's reasoning: crank it up for complex problems, dial it down for quick tasks. It's also designed to excel at browser automation, hard sciences, and creative writing (the last of which the arena results for Summit and Zenith seem to support).

The Information also reported that OpenAI plans to use this architecture all the way through GPT-8. Yes, GPT-8 is apparently already on their roadmap. (Does this mean they're skipping 6 and 7, or do they have a master plan stretching years into the future? Your guess is as good as ours.)

Why this is happening: Many believe these aren't just six separate models, but a "mixture-of-experts" (MoE) that will be combined to create the final, ultra-powerful GPT-5. One expert might excel at Python, another at poetic prose, and a third at logical reasoning. The models on LM Arena are believed to be these individual experts, being fine-tuned on real-world data before being integrated into a cohesive whole.

By testing them in public, OpenAI gets a firehose of real-world data to see which "experts" are best at coding, reasoning, or creative tasks before making the final move and bundling them together. It’s a brilliant, if sneaky, way to build and validate a frontier model in the open. We are literally watching the sausage get made, and it’s fascinating.
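For readers unfamiliar with the term, here is a minimal sketch of how a textbook mixture-of-experts layer routes an input. In the standard formulation, the "experts" are sub-networks inside a single model, and a learned router (the gate) sends each input to only a few of them, blending their outputs by the router's scores. This is a generic illustration, not OpenAI's architecture; the toy experts, the gate weights, the `moe_forward` name, and the top-k value are all hypothetical.

```python
import math
from typing import Callable, List, Sequence, Tuple

# A toy "expert": any function mapping a vector to a vector of the same size.
# Hypothetical stand-ins for specialized sub-networks; not OpenAI's design.
Expert = Callable[[List[float]], List[float]]


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax over a short list of scores."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(
    x: List[float],
    experts: Sequence[Expert],
    gate_weights: Sequence[Sequence[float]],
    k: int = 2,
) -> Tuple[List[float], List[int]]:
    """Generic top-k mixture-of-experts routing (hypothetical illustration).

    Each row of gate_weights scores one expert via a dot product with x.
    Only the k highest-scoring experts run; their outputs are blended
    using softmax weights computed over those k scores.
    """
    logits = [sum(w * xi for w, xi in zip(row, x)) for row in gate_weights]
    chosen = sorted(range(len(experts)), key=lambda i: logits[i], reverse=True)[:k]
    weights = softmax([logits[i] for i in chosen])

    out = [0.0] * len(x)
    for weight, i in zip(weights, chosen):
        y = experts[i](x)  # experts that were not chosen are never evaluated
        out = [o + weight * yi for o, yi in zip(out, y)]
    return out, chosen


# Three toy experts.
experts: List[Expert] = [
    lambda v: [2 * a for a in v],  # expert 0: doubles the input
    lambda v: [-a for a in v],     # expert 1: negates the input
    lambda v: [a + 1 for a in v],  # expert 2: adds one
]
gate = [[1.0, 0.0], [0.0, 0.0], [0.0, 1.0]]  # hypothetical router weights

output, used = moe_forward([1.0, 2.0], experts, gate, k=2)
print(used)  # [2, 0]: expert 1 is skipped entirely
print(output)
```

The key design choice is sparsity: only k experts run per input, which is where MoE's efficiency claims come from. Whether the arena models map onto anything like this remains speculation.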

As far as launch day goes...

Another, more speculative rumor suggests this could be like the 12 days of Shipmas, but in July. Unless OpenAI kicks things off today (Monday, July 28) and ships new releases all week, that seems unlikely and is probably just hype.

We originally heard end of July, then as early as August, and now we're hearing July 31. So either the answer is "all of the above" (via a week-long release schedule, as in the speculation above), or, most likely, Thursday at the earliest, since OpenAI likes to release its big stuff on Thursdays (hence the term ThursdAI).

However, the "official" date leaked to The Verge was August, which could mean as soon as Friday (August 1) or weeks from now.

Build in Public, but make it AI

Testing anonymous models on LM Arena a few days (or weeks) before a big release is quite common. This public "beta test" allows OpenAI to gather an immense volume of diverse, real-world data, identifying edge cases, biases, and performance quirks at a scale that internal testing could never replicate.

However, it also comes with minor hiccups. One developer noted that Summit repeatedly ignored instructions to use vanilla JavaScript, defaulting to React instead. This was later revealed to be an artifact of the test environment itself: WebDev Arena's system prompt explicitly instructs models to act as expert React engineers, even offering a "$1 million tip" for a job well done. It's a humorous reminder that even in these advanced systems, the system prompt still rules.

The secrecy surrounding the models is palpable. Shortly after they appeared, WebDev Arena removed its public code inspection tools, preventing users from digging into the website's source for clues about the models' origins. It suggested that while OpenAI wants public feedback, it doesn't want to give the game away entirely.

What This Means for the Future

The implications of this "live assembly" are significant. First, it suggests the era of monolithic AI models may be ending (or is at least paused), replaced by more complex, modular systems. An MoE approach allows for greater specialization and efficiency, potentially leading to more powerful and less costly AI.

Second, it changes the nature of AI releases. Instead of a single, dramatic launch, we may see a more iterative process where components are tested and refined in the open. The release of GPT-5, rumored for as early as August 2025, may feel less like a sudden unveiling and more like the formal debut of a system we've already met.

This has the potential to feel very underwhelming, in the same way that the launch of ChatGPT Agent felt kinda underwhelming, even though it's a fundamentally better product than any of its individual parts (except Deep Research, which remains the best "agentic" tool ever released at this point).

While the AI community watches with bated breath, some, like the notable critic Gary Marcus, urge caution against runaway hype. For some cold water, read Marcus’s piece “What to expect when you’re expecting GPT-5” (props on the name, Marcus).

But it's hard not to be excited. We are, for the first time, getting a front-row seat to the construction of a frontier AI model. The question is no longer if GPT-5 is coming, but what final form it will take when all these powerful, mysterious pieces are finally put together.