At its Day 2 MAX keynote, Adobe painted a clear picture of its future: AI is your new creative partner. To prove it, they brought out YouTube phenoms Brandon Baum and Mark Rober, along with Superman director James Gunn, to talk shop.

Below, we recap the key events from Day 2 of Adobe MAX, starting with the keynote.

Here's the TL;DR; read on below for more.

On the second day of its annual MAX conference, Adobe drove home a message that has been echoing through the creative industry for the past year: AI is not a replacement, but a partner. The keynote stage featured a powerful trio of modern storytellers—YouTube creator Brandon Baum, science educator and viral video wizard Mark Rober, and Hollywood director James Gunn—who each offered a unique perspective on a shared theme: technology is a powerful tool, but human instinct, hard work, and the timeless art of storytelling remain paramount.

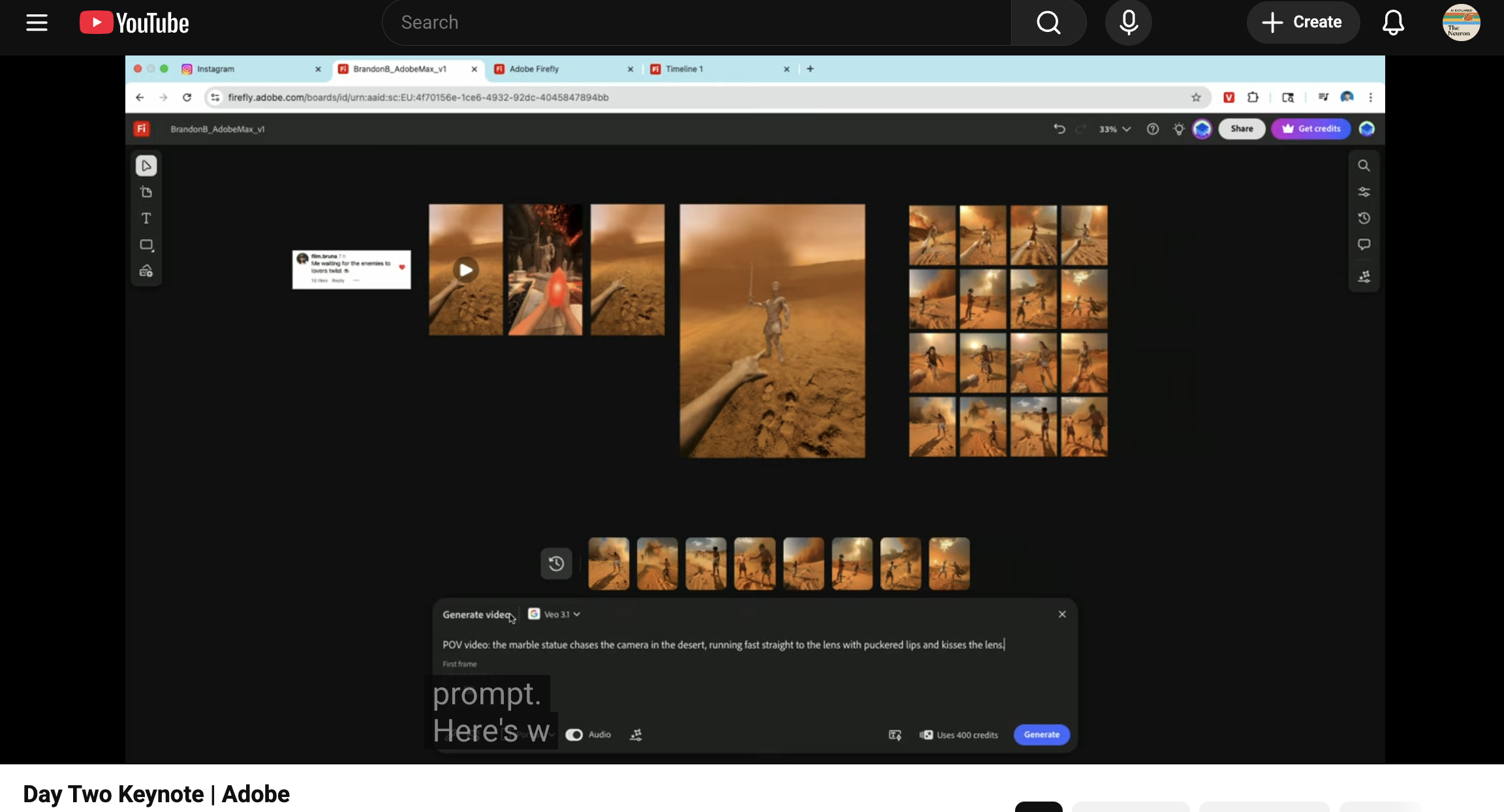

While the celebrity talks were inspiring, the real headline was Brandon Baum’s live demo of a fully integrated, AI-powered video workflow that lets you go from a simple idea to a finished scene—complete with a custom score—in minutes.

Here’s the quick step-by-step workflow he demoed on stage:

- Ideation: Started with Firefly Boards, their new visual mood board, to collect video frames and reference images for an alternate ending to a short film.

- Image Gen: Used a text prompt (“POV: a marble statue puckers its lips, running toward the camera through a deer storm”) combined with a reference image to create a new keyframe.

- Video Gen: Turned that static image into a moving clip using Veo 3.1 with a single click inside Boards.

- Audio Gen: Generated a custom score in Firefly Soundtrack by prompting it with the vibe: “enemies to lovers story arc, high energy, fast tempo.”

- Editing: Dropped the new clips into the Firefly Video Editor and used AI to generate a seamless, professional-looking transition between two completely different shots.

This ability to "fail faster to stumble onto gold quicker," as Baum put it, is a massive unlock for creators.

But tools are just one piece of the puzzle. Mark Rober and James Gunn spent their time on stage reminding everyone that storytelling is still a human endeavor. Their advice was simple but powerful:

- Mark Rober: To make something go viral, you have to create a "visceral response." Facts and specs don't move people; stories do. He used this principle to raise over $40M for clean water and help recruit engineers for a life-saving drone company with his Zipline video.

- James Gunn: The biggest enemy of creativity is judging your own work as you create it. His advice? "Be bored and do bad things." Give yourself the freedom to create poorly, because that's where true creativity lies.

What to do: For creators, this integrated toolset is a call to experiment more freely. Use AI to handle the tedious parts of storyboarding, asset creation, and transitions so you can focus on the core narrative. For marketers and leaders, take Rober’s advice to heart: ditch the spec sheets in your next campaign and find the human story that creates a visceral connection. No matter your field, Gunn's philosophy is a reminder that the path to great work involves finishing projects, even the messy ones.

The All-in-One AI Workflow: A Live Demo

The most tangible vision of Adobe's AI-powered future came from Brandon Baum, who conducted a stunning live demonstration of an integrated creative workflow. His goal was to create an alternate ending for a short film on the fly, inspired by comments from his audience. What followed was a masterclass in speed and iteration, all powered by Adobe Firefly.

The process began with Firefly Boards, a digital mood board where Baum collected snapshots from his existing video, character references, and inspirational images. This became his creative canvas. From there, he used a text prompt—"POV: a marble statue puckers its lips, running toward the camera through a deer storm"—combined with a reference image from his board to generate a striking new keyframe.

With a click, that static image was transformed into a dynamic video clip using Google’s Veo 3.1 model, integrated directly into the workflow. But a scene needs sound. Baum navigated to Firefly Soundtrack and, instead of searching a stock library, he simply typed in the feeling he wanted: "enemies to lovers story arc, high energy, fast tempo." Within seconds, an original, fitting score was generated.

The final, and perhaps most impressive, step took place in the new Firefly Video Editor. Baum placed his original footage and the newly generated AI clip on the timeline. To bridge the jarring cut between them, he used an AI transition tool. By setting the last frame of the first clip and the first frame of the second as parameters, he prompted the AI to generate a seamless, slow-motion transition that elegantly blended the two disparate scenes.

This entire sequence, from ideation to a rendered scene with a custom score, showcased a future where creators can "fail faster to stumble onto gold quicker," as Baum aptly put it. The barrier to experimentation is dissolving, allowing storytellers to test wild ideas in minutes, not days. However, he concluded with a crucial reminder: "Tools don't make stories. Storytellers do."

Mark Rober: Engineering Virality with Visceral Stories

Mark Rober, a former NASA engineer whose YouTube channel boasts over 73 million subscribers, took the stage to deconstruct the anatomy of a viral video. His core philosophy is "hiding the vegetables"—making people learn about science and engineering by wrapping it in irresistibly entertaining content.

According to Rober, every viral video, regardless of its subject, shares one common trait: it elicits a visceral response. It makes you feel something powerful—amazement, laughter, anger, inspiration. He argued that facts, figures, and technical specs fail to connect with audiences because "facts don't create a shift in the heart."

He contended this isn't just a marketing trick; it's rooted in human evolution. We are hardwired to respond to stories because they were our ancestors' primary method for transferring critical information and fostering the large-scale cooperation that defines our species. "What is a country," he posited, "if not a story we all agree on?"

Rober provided two powerful examples. First, the #teamwater fundraiser, which raised an astounding $41.6 million by telling a simple, emotional story that inspired millions to give small amounts. Second, his viral video about Zipline, a company using autonomous drones to deliver life-saving medical supplies in Rwanda. The story, which focused on the human impact and the engineers behind the tech, garnered over 40 million views and directly led to Zipline hiring more than 20 new employees who were inspired by the video. His parting advice was a call to action: leverage our innate response to storytelling to inspire action and make a meaningful impact.

James Gunn: The Hard Truths of Creativity and Craft

Closing the keynote was a candid conversation between Adobe's Jason Levine and James Gunn, the celebrated director of Guardians of the Galaxy and the new head of DC Studios. Gunn offered a refreshingly honest and pragmatic take on the creative process. He dismissed the cliché "follow your dreams," suggesting a more introspective approach: find the intersection of what you love doing and what you are truly good at, which requires brutal self-honesty.

For Gunn, success is built on two mundane but non-negotiable truths: hard work and finishing what you start. He recalled a pivotal moment in his career when he decided to simply complete his projects, regardless of their perceived perfection. That choice, he said, changed his life completely.

His most profound advice was aimed directly at the internal struggles of creators. "The enemy of any creativity is to judge it," he stated. He urged the audience to embrace making "bad work" and creating "shit" to silence the inner critic and achieve a state of pure, present-moment creativity.

When asked about technology, Gunn was agnostic. Whether it's a practical effect or a digital one, the only question that matters is, "What works best for the story?" He values meticulous planning and understanding the unique "program" of each actor he works with to capture the best performance. He explained his iconic use of 70s AM pop in Guardians of the Galaxy as a narrative tool—a way to ground a fantastical space opera in a familiar, emotional reality for the audience.

Ultimately, Gunn's message, echoed by his father's advice to "do what you love and the rest will follow," was a powerful reminder that while tools evolve, the foundational elements of a successful creative career—self-awareness, relentless work ethic, and authentic passion—are timeless.

Together, the three speakers painted a holistic picture of the modern creative landscape: one where groundbreaking AI tools are unlocking unprecedented possibilities, but only in the hands of storytellers who understand the unchanging, deeply human art of connection.

Inside Adobe's Secret Lab: A Breakdown of 10 "Sneaks" That Will Reshape Creative Work

Later in the day on October 29, Adobe hosted its signature "Sneaks" session, which each year offers a tantalizing glimpse of the experimental projects brewing within the company's research and development labs. While there's no guarantee these technologies will become official features, they serve as a powerful roadmap for where the creative world is heading. This year's lineup was a tour de force in AI-driven simplification, with ten projects aimed at making impossibly complex tasks astonishingly simple.

The theme this year was clear: make ridiculously complex tasks feel as easy as a single click or a simple prompt. One project even brought Photoshop-level editing to video.

Here are the most jaw-dropping projects:

- Trace Erase + Frame Forward: This was the showstopper. Imagine removing a car from a video. You’d normally have to painstakingly rotoscope it out, frame by frame. With this project, you just use the "Trace Erase" tool on the first frame to remove the car, its shadow, and its reflection from the street. Then you click "Propagate," and the AI removes it from the entire video. It took seconds.

- Project Light Touch: This tool lets you relight a photo after you've taken it. You can soften harsh shadows or—get this—add entirely new, virtual lights into the scene. The demo showed them dropping a glowing green light inside a witch's cauldron, and the entire room realistically lit up.

- Project Clean Take: Your audio editing nightmare is over. This tool lets you edit dialogue by editing a transcript. You can change a single word ("fourth" to "fifth"), or even change the speaker's emotion from "bored" to "confident." It can also isolate and remove annoying background noise, like a passing train or unlicensed music.

- Project Surface Selection: A new Photoshop tool that finally understands reflections. You can select just the surface of a car and change its color without messing up any of the shiny reflections, making color adjustments look hyper-realistic.

While these are just R&D projects, they signal a massive shift. The next generation of creative work won't be about mastering tedious technical skills like masking, rotoscoping, or audio mixing. It will be about taste, direction, and having a clear vision. The AI will handle the "how," leaving you to focus on the "what" and "why." Time to start practicing your prompting skills.

Below we dive into each project in depth based on the demos we were shown at Adobe MAX.

Project Motion Map: AI-Powered Vector Animation

Project Motion Map aims to automate the tedious work of vector animation (17:43). Presenter Mohit Ghoul showed how an AI "motion map agent" analyzes an illustration layer by layer, assigns logical motion to each part, and writes the animation code line by line. In a demo, a "Meal Dash" promo was animated with a single click, making eyes blink and text rotate. The tool can also be interactive; with a simple prompt like "eyes follow the scooter," the character's eyes were animated to track a moving object. The most advanced feature showcased the AI's semantic understanding: it analyzed a multi-layered illustration of a chess game and animated a logical sequence of moves, correctly handling all the pieces and their shadows.

Project Clean Take: Transcript-Based Audio and Dialogue Editing

Presented by Lee Brimelow, Project Clean Take (25:36) reimagines audio editing by tying it to a transcript. For dialogue, it can fix common issues like "uptalk" (upward inflection) or regenerate a single spoken word, changing "fourth" to "fifth." It can even alter the emotion of the delivery, transforming a bored-sounding voiceover into a confident one, or even a whisper. The tool also uses source separation technology to break audio into distinct tracks for speech, ambience, music, and reverb. This allows a user to surgically mute a jarring background noise (like a drawbridge), remove unlicensed music, and even find a licensed replacement from Adobe Stock. A "Match Acoustics" feature ensures any new or altered audio sounds like it was recorded in the original environment.
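To make the source-separation idea a bit more concrete, here is a minimal Python sketch of what muting one separated track and remixing the rest amounts to. This is not Adobe's implementation: the stem names, the sample values, and the `remix` helper are all hypothetical, and the sketch assumes a separation step (not shown) has already produced equal-length tracks.

```python
# Hypothetical sketch: remix separated stems with per-stem gains.
# Assumes a separation step (not shown) already split the audio into
# equal-length tracks; names and sample values are illustrative only.

def remix(stems, gains):
    """Sum stems sample by sample, scaling each by its gain (default 1.0)."""
    length = len(next(iter(stems.values())))
    if any(len(track) != length for track in stems.values()):
        raise ValueError("all stems must have the same length")
    mixed = [0.0] * length
    for name, track in stems.items():
        gain = gains.get(name, 1.0)
        for i, sample in enumerate(track):
            mixed[i] += gain * sample
    return mixed

stems = {
    "speech":   [0.5, 0.25, 0.0, -0.25],
    "ambience": [0.125, 0.125, 0.125, 0.125],  # stand-in for the drawbridge noise
    "music":    [0.0, 0.25, 0.0, -0.25],       # stand-in for unlicensed music
}

# Mute the ambience and the music; the dialogue passes through unchanged.
clean = remix(stems, {"ambience": 0.0, "music": 0.0})
print(clean)
```

Once the audio is split into stems, "surgically muting" a sound is just a gain of zero on one track, which is why the separation step is the hard part and the editing step feels trivial.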

Project Surface Selection: The Ultimate Selection Tool for Photoshop

Presented by Valentin Deschaintre, Project Surface Selection introduces a new Photoshop tool designed to solve the age-old problem of selecting complex surfaces with reflections (35:35). Where tools like Color Range would select unwanted highlights, Surface Selection intelligently identifies just the physical surface of an object. In one demo, the tool perfectly selected a car's body, allowing its color to be changed while flawlessly preserving all the original reflections and lighting. In a more complex example, it selected the intricate pattern of vines on a brick wall, creating a detailed grayscale mask that allowed a logo to be placed realistically behind the vines, complete with a drop shadow.
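For readers curious how a grayscale mask lets a logo sit "behind" the vines, the blend itself can be sketched in a few lines of Python. This is a generic mask-compositing formula, not Adobe's method; the `composite_behind` helper and the tiny 2x2 single-channel images are made up for illustration, and the drop shadow is left out.

```python
# Hypothetical sketch: use a grayscale mask to place a logo "behind" vines.
# Mask value 1.0 = vine pixel (keep the photo), 0.0 = bare wall (show the logo).
# Pixel values are single-channel floats in [0, 1]; all data is made up.

def composite_behind(photo, logo, mask):
    """Blend per pixel: mask * photo + (1 - mask) * logo."""
    return [
        [m * p + (1.0 - m) * lg for p, lg, m in zip(photo_row, logo_row, mask_row)]
        for photo_row, logo_row, mask_row in zip(photo, logo, mask)
    ]

photo = [[0.25, 0.25], [0.5, 0.5]]   # original wall-and-vines image
logo = [[1.0, 1.0], [1.0, 1.0]]      # solid white logo covering the frame
mask = [[1.0, 0.0], [0.5, 0.0]]      # 0.5 marks a soft vine edge

result = composite_behind(photo, logo, mask)
print(result)
```

Intermediate gray values in the mask are what keep thin stems and leaf edges from looking cut out, which is why a detailed grayscale mask matters more than a hard binary selection.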

Project New Depths: Transforming 2D Photos into 3D Scenes

Using a technology called Gaussian splats, Project New Depths converts a single 2D photograph into a fully navigable 3D scene (44:33). Presenter Elie Michel took a photo of an old tractor and was able to rotate the entire world's perspective, move flowers from the background to the foreground, and use selection tools to change the tractor's color. The tool can even split elements, like a fountain, into their own layer to be independently scaled, rotated, or animated to bounce around. This powerful technology runs both locally and in a web browser.

Project Light Touch: Relighting Photos After the Fact

Project Light Touch (presented by Zhixin Shu) gives photographers control over lighting long after a picture has been taken (50:16). It can detect existing light sources and let you adjust their intensity or diffusion, for example, to soften a harsh shadow on a person's face. Its most powerful feature, Spatial Lighting, lets you add entirely new virtual lights into the 3D space of the photo. In the demo, the presenter dropped a virtual point light inside a witch's cauldron, which then cast a realistic, colored glow across the entire scene. The light could be moved anywhere, with shadows and highlights updating in real time. Host Jessica Williams was so impressed she ran across the stage to try it herself, ultimately placing the light directly in the witch's cauldron. Did we mention you can change the color of the light? Because that's a thing.

Project Scene It: Precise, Controllable 3D Scene Generation

Project Scene It offers a new paradigm for generating 3D scenes (57:12). Instead of relying solely on complex text prompts, a user can place basic 3D shapes (primitives) into a scene to act as placeholders. By tagging these shapes with simple prompts—for instance, tagging a sphere with "hibiscus flowers"—the AI generates the desired objects within those exact boundaries. This method provides precise control over the final composition, camera angle, and asset orientation. The tool can also alter the global lighting to change the mood, for example, from day to night.

P.S. This project went so well that Adobe offered intern Oindrila Saha (who presented it) a job afterward!

Project Trace Erase: Object Removal That Erases Everything

Lingzhi Shang presented Trace Erase, which removes an object and its entire environmental footprint (1:07:48). An evolution of Content-Aware Fill, it eliminates not just the object but also its shadow, its reflection, and even the light it was casting on its surroundings. The demos were stunning: it removed a car from a wet street along with its reflection in the water; it erased a person and their footsteps from a sandy beach; and it completely removed a jet ski and its entire wake from the surface of the water, all with a single click.

In the demo, Shang shared that a task like removing a lamp and its light source would typically be a 20-to-30-layer project; with Trace Erase, it was done in one click.

Project Frame Forward: Bringing Photoshop Editing Power to Video

Presented by Jui-hsien Wang, Frame Forward leverages tools like Trace Erase and Generative Fill and applies them to video (1:15:32). The workflow is simple: edit the first frame of a video clip as if it were a photo, then hit a "propagate" button. The AI analyzes the edit and intelligently applies it to the rest of the video. In a demo, Trace Erase removed a drifting car and its smoke from the first frame of a drone shot; propagating the edit removed the car from the entire clip, a task that would have taken a VFX artist hours. In another example, Generative Fill was used to add a puddle to a frame, and the AI propagated it through the video with realistic physics.

Project Sound Stager: An AI Chat Agent for Sound Design

Presented by Oriol Nieto, Project Sound Stager introduces "Sound Chat," an AI agent that analyzes a video's content, emotional themes, location, and subtext to automatically suggest and add sound effects (1:23:38). It populates a full multi-track timeline with sounds for everything from alarms to backpacks. Crucially, the user retains full control. The timeline is completely editable, and you can chat with the AI to refine the soundscape with commands like "replace with school ambience" or "add car sound during the car scene."

Even though there were plenty of options to choose from, Oriol kept picking sounds that sounded a bit, what's the word... demonic? Host Jessica Williams playfully teased Nieto, saying "every sound effect you choose is hell on earth." Pretty funny segment.

Project TurnStyle: Perfecting Perspective for Generated Assets

Zhiqin Chen, who presented Project Turntable last year, shared TurnStyle, a new project that builds on Turntable's foundation. The TL;DR is that it solves the common issue of a generated asset not matching the perspective of its background image (1:29:22). When an object is added to a scene, TurnStyle creates a 3D proxy of it. The user can then freely rotate this proxy to find the exact right angle and viewpoint. Once the proxy is positioned correctly, the tool upscales the asset to high resolution and uses image harmonization to blend its lighting and color so it looks like a natural part of the scene. The demo showed this being used not only on full characters but also to isolate and rotate just a bird's head on a character's body while maintaining a consistent style.

Together, these ten "sneaks" paint a clear picture of Adobe's vision: a future where the friction between a creative idea and its execution is almost entirely eliminated, empowering storytellers to focus on what truly matters—the story itself.