Adobe came to play, y'all.

At Adobe MAX 2025 in Los Angeles, the company dropped an entire creative AI ecosystem that touches every single part of the creative workflow. In our opinion, these new features aren't about replacing creators; they're about empowering them with superpowers they can actually control.

Here's the TL;DR: Adobe's new plan is to put an AI co-pilot in every single app.

Adobe unveiled a complete reinvention of its creative suite. The new guiding principle? Turn every app into a conversation, where you can just tell Photoshop, Premiere, or Express what you want to do.

This all starts with AI Assistants, which are being embedded across Adobe’s ecosystem. The new Photoshop AI Assistant lives in a side panel and can perform complex, multi-step tasks like "select the main subject, put it on a new layer, and replace the background with a futuristic cityscape." It understands layers, edits non-destructively, and can even offer suggestions to improve your work.

Here’s the big picture: Adobe is building a central AI brain called Firefly that connects everything. And it’s not just for images anymore.

- Firefly Video: A new web-based video editor lets you generate clips, create custom transitions, and add AI-generated sound effects directly to a timeline.

- Firefly Audio: You can now generate royalty-free background music and AI voiceovers with a prompt, then use "Enhance Speech" to clean it up like a pro.

- Custom Models: This is a huge one. You can now train a private Firefly model on your own work. Just upload 10-30 of your images, and it will learn to generate new content in your unique style.

For example, in a live demo, Adobe showcased how a user could start with a blank timeline, generate video clips from text prompts, use an existing frame as a compositional reference for the next shot, and create custom, AI-generated transitions between them. This is paired with Firefly Audio, which includes a Generate Soundtrack tool for creating royalty-free music and a Generate Speech feature for AI voiceovers.

They then demo'd the "Enhance Speech" function, which allows for studio-quality audio cleanup, letting users edit dialogue and background noise as separate tracks. We saw in real time how it stripped out the noise of the entire Peacock Theater audience chatting behind the speaker, transforming the clip into podcast-friendly dialogue. Pretty awesome.

But it's not just Adobe's AI anymore. They’re opening up the platform, integrating top-tier models from competitors directly into their apps. With one Creative Cloud subscription, you’ll have access to Google’s Imagen and Veo, Runway, Pika, and Ideogram. Need to upscale a blurry photo? Photoshop’s new Generative Upscale is powered by tech from Topaz to push images to 4K.

What this means for you: The era of hunting through menus and memorizing keyboard shortcuts is fading. The new workflow is conversational.

- For professionals, the game-changer is Firefly Custom Models. Start training one now to create a consistent, on-brand look for all your assets.

- For everyday creators, the AI Assistants in Photoshop and Express will drastically speed up your workflow.

- The best place to start is the Photoshop AI Assistant (currently in private beta), which offers a powerful glimpse into the future of creative software—a future where you're less of a button-pusher and more of a creative director.

Our take: The message was crystal clear to us. Adobe is in an existential fight with competitors closing in from all sides, from Canva to Figma to Midjourney to Runway to upstart video editors and AI models alike. Their response: embed AI directly into the tools creators already use, in the features they desperately want, to make their lives easier. However, Adobe's features differ from its competitors' in one critical way: maximum flexibility and control.

Adobe already has the platform creators use, with maximum control. They just needed to embed AI at the layer level inside the technology to make it maximally efficient, and it looks like they've done that.

Below, we dive into everything announced and our impressions of it all. Let's go!

The Firefly Ecosystem

First off, CEO Shantanu Narayen took the stage not to announce incremental features, but to outline a complete reinvention of the creative process, centered entirely around artificial intelligence. The message was clear: the future of creativity is conversational, collaborative, and powered by a multitude of AI models. Adobe’s plan is to put an AI co-pilot inside every app, transforming users from operators into creative directors.

This vision is built on four pillars (Express, Firefly, GenStudio, and Acrobat) and is designed to infuse AI into every stage of the workflow, from initial ideation to final delivery. At the heart of this transformation is Firefly, which has evolved far beyond a simple image generator into a comprehensive, AI-powered creative destination. Adobe positioned Firefly as a "search engine for the imagination," a one-stop shop for AI-native creation, and their new feature suite delivered on that idea.

The Model Marketplace: Your AI, Your Choice

Adobe has been in the process of opening its ecosystem to competitor models. The company’s new philosophy is built on choice, offering a "three-model" approach: Adobe's native Firefly models, partner models from industry leaders, and your own custom-trained models.

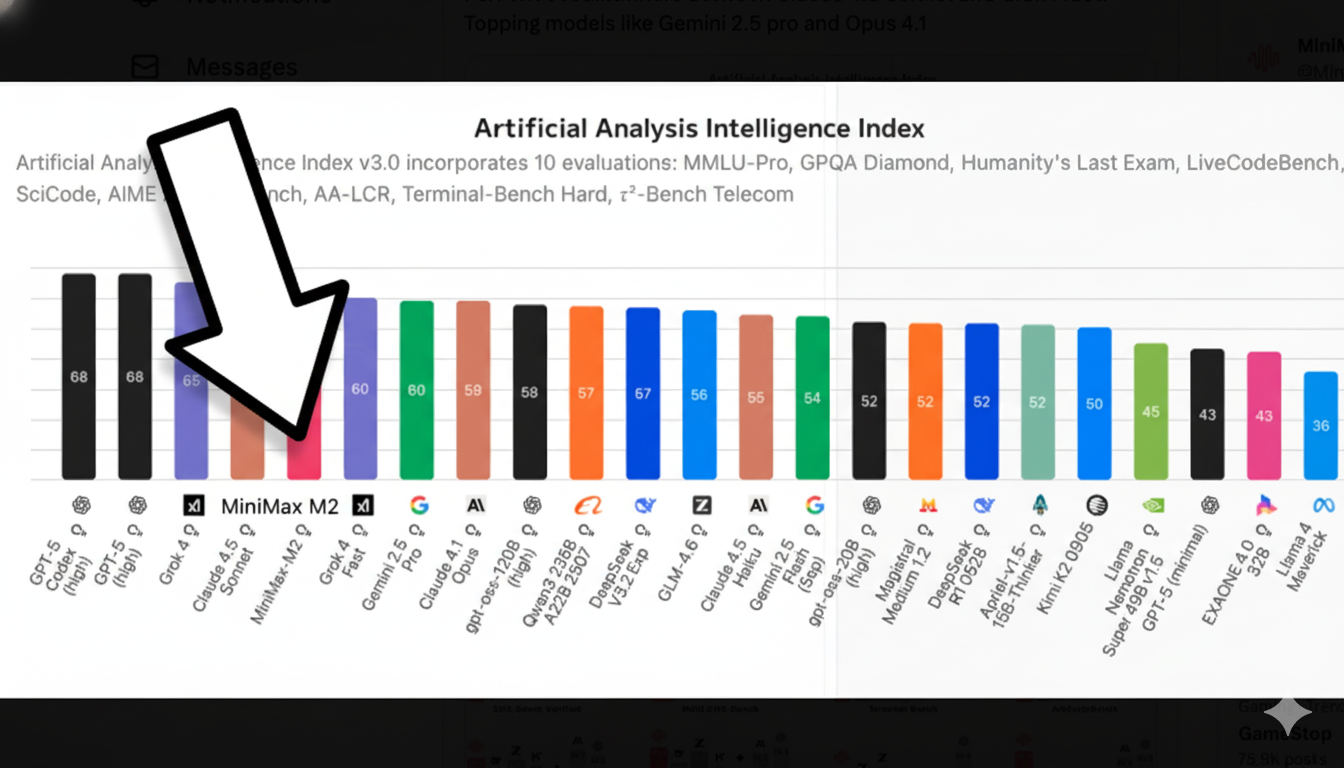

In a way, Adobe just turned Firefly into an AI model marketplace. Want to use Google's Gemini 2.5 Flash Image (Nano Banana) for better scene and character coherence? Go for it. Need FLUX.1 Kontext from Black Forest Labs? It's there. Prefer OpenAI's approach? That works too.

This is huge. Instead of being locked into one AI's quirks, you can pick the right model for each specific task. And through December 1st? Unlimited generations with all models for Creative Cloud Pro subscribers.

This means a single Creative Cloud subscription now serves as a gateway to a suite of best-in-class AI tools. The integration list is a who's who of generative AI:

- Image Generation: Google's Gemini and Imagen, BFL's FLUX.1, and Ideogram.

- Video Generation: Google's Veo, Runway, and Pika.

- Voice Synthesis: ElevenLabs.

- Image Upscaling: Topaz Labs.

This integration is already bearing fruit in flagship products. Photoshop’s new Generative Upscale feature, for instance, leverages Topaz's renowned technology to intelligently enlarge low-resolution images to pristine 4K quality. This multi-model strategy positions Adobe not just as a toolmaker, but as the central hub for professional-grade AI, abstracting away the complexity of managing multiple subscriptions and APIs.

The complete list of partner models now includes:

- Google: Gemini, Veo (video), Imagen (image).

- OpenAI: Their latest models integrated directly.

- Runway: Video generation specialists.

- Luma AI: 3D and video models.

- ElevenLabs: Voice synthesis (those crystal-clear AI voices).

- Topaz Labs: The upscaling wizards (turn potato photos into 4K).

- Black Forest Labs: FLUX models for precision generation.

- Moonvalley: Creator-friendly video models (new project, very cool!).

Custom Models: Your Style, Your Rules

Perhaps the most impactful new feature for individual creators is Firefly Custom Models. Acknowledging that personal style is paramount, Adobe now allows users to train their own private, secure models. By uploading as few as 10 to 30 of their own images or assets, creators can tune Firefly to replicate their unique aesthetic, ensuring consistency across all generated content.

Here's where it gets personal. Firefly Custom Models (private beta) let you train AI on your own style. Drop in 10-30 of your images, illustrations, or designs, and boom—you've got an AI that generates in YOUR aesthetic. For brands, this means consistent visual identity at scale. For artists, it means AI that actually respects their unique style.

Even bigger for enterprises: Firefly Foundry. This lets companies build private, proprietary models trained only on their assets. Coca-Cola can have an AI that only knows Coke's visual language. Nike can have one that breathes swooshes. All private, all secure, all on-brand.

Firefly Image Model 5 and the Era of Choice

The platform is powered by the new Firefly Image Model 5, a significant leap forward in quality. Now generating images at a native 4-megapixel resolution, it delivers sharper, more photorealistic results with vast improvements in rendering complex human anatomy and generating accurate, legible text within images.

Another big piece of news was Firefly Image Model 5, Adobe's most advanced image generation model yet. Native 4MP resolution (that's 4 megapixels without upscaling), photorealistic detail that actually looks real, and here's the kicker—you can edit images just by describing what you want changed. "Move the tree," "swap the sky," "add a cup of coffee"—Firefly gets it.

But Adobe's biggest power move? They're not forcing you to use only their models.

The Conversational Canvas: AI Assistants Everywhere

The most profound change to the user experience is the introduction of conversational AI assistants across the product line. The goal is to merge the simplicity of natural language with the precision of direct manipulation tools.

Specifically, Adobe introduced AI Assistants across Express, Firefly, and Photoshop. These are agentic systems that can actually do multi-step tasks.

The Photoshop AI Assistant, now in private beta, exemplifies this new paradigm. Housed in a side panel, it can understand and execute complex, multi-step commands. A user can type, "Rename all my layers based on the content of each," and the assistant will analyze the image and perform the task instantly. It understands the structure of a Photoshop document, performing non-destructive edits on separate layers and offering creative suggestions.

Photoshop AI Assistant can:

- Rename all your layers based on their content (finally!).

- Suggest improvements to your composition.

- Execute complex multi-step edits from a single prompt.

- Switch between direct manipulation and conversational editing.

Similarly, the Adobe Express AI Assistant provides contextual control over designs, understanding the individual elements of a project and applying a series of edits from a single prompt. It understands design principles, too. Tell it what you want, and it creates layouts that actually make sense, applying color theory, typography, and composition.

Looking ahead, Adobe previewed Project Moonlight, a cross-app agent that acts like an intelligent social media manager. Moonlight can connect to a user's Instagram analytics, identify top-performing content themes, and suggest new post ideas. A user could then ask it to find the best photos from a recent shoot in Lightroom, apply a specific preset, and draft a formatted caption for three different social platforms—all through a single conversational interface.

Moonlight is definitely the most ambitious project, and the demo was a little confusing, in our opinion, because the functionality and integrations weren't 100% there yet. But it shows the potential of cross-app integration to brainstorm and publish posts directly from a single UI. An AI assistant that works across ALL Adobe apps and even connects to your social channels would be amazing. In the demo, it could analyze your Instagram performance, suggest content based on what's working, and coordinate your entire creative workflow. Even better would be if it could post for you, too!

The Video Revolution: Full Production in Your Browser

Adobe dropped a bomb with their new web-based Firefly video editor (private beta). This isn't just another AI video generator—it's a full multitrack timeline editor that happens to have AI superpowers built in.

You can:

- Generate new clips directly in the timeline.

- Mix your shot footage with AI-generated content.

- Add AI voiceovers with Generate Speech (multiple languages, natural pacing).

- Create custom soundtracks with Generate Soundtrack that automatically sync to your cuts.

- Edit via transcript (text-based editing is finally here).

- Apply style presets like claymation, anime, or 2D animation.

The UI/UX philosophy here is brilliant: AI handles what you want it to handle, then gets out of the way so you can take over. This is the editing paradigm we've been waiting for.

Supercharging the Classics: AI in Photoshop, Premiere, and Lightroom

Adobe is also deeply embedding this new AI technology into its flagship desktop applications.

In Photoshop, beyond the AI Assistant and Generative Upscale, the new Harmonize feature automatically matches the color, tone, and lighting of a newly added object to its background, making complex composites look realistic with a single click.

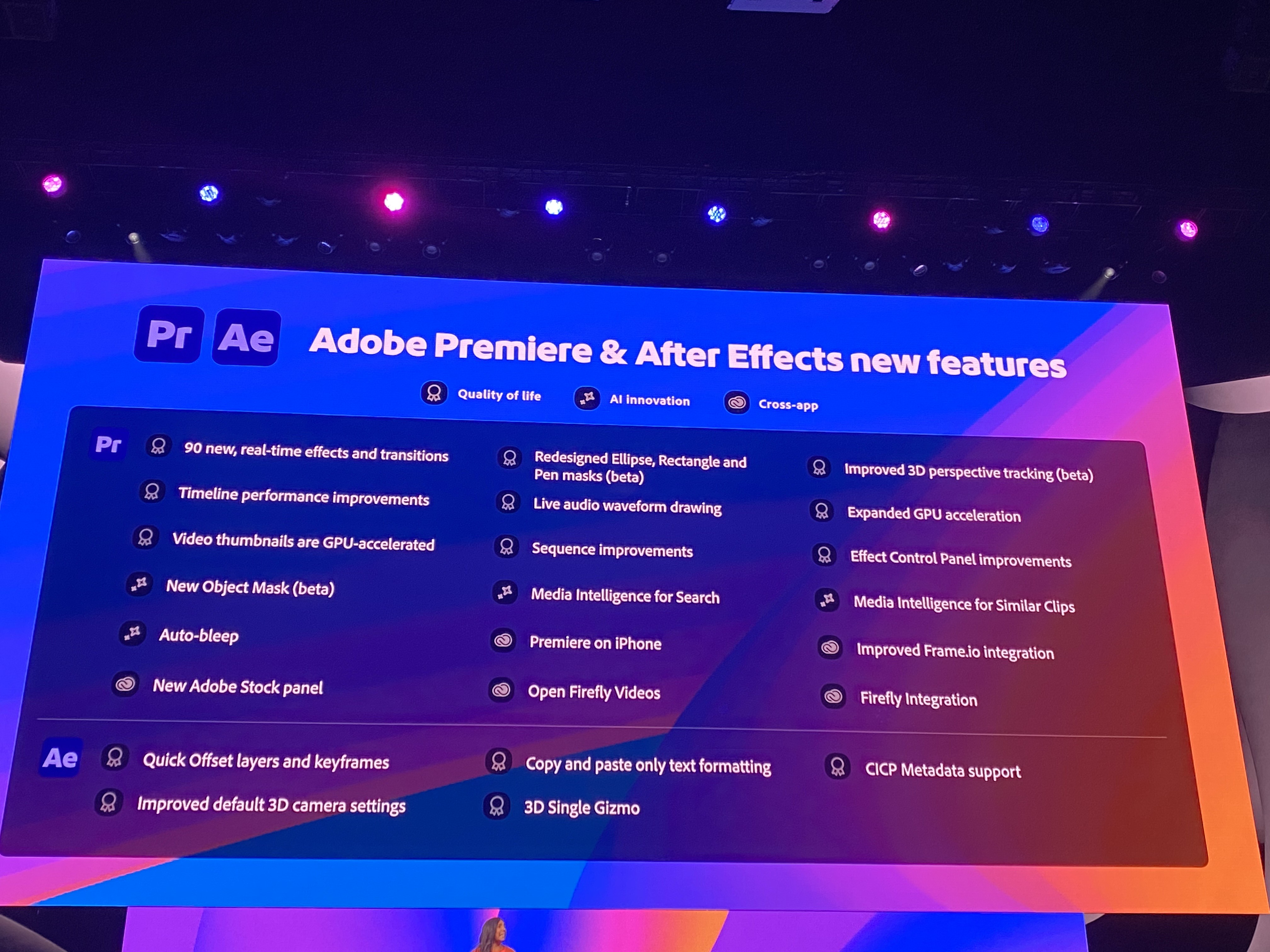

For video editors, Premiere Pro introduces an AI Object Mask, a powerful tool that automatically identifies and isolates people or objects in a video, effectively eliminating the tedious process of manual rotoscoping. This is complemented by faster new vector masking tools with 3D perspective tracking.

Lightroom is getting a major workflow enhancement with Assisted Culling. For photographers who return from a shoot with thousands of photos, this feature uses AI to analyze the entire batch and surface the best shots based on focus, composition, sharpness, and subject angle, dramatically reducing culling time. It also adds intelligent tools for removing dust spots and unwanted reflections.

Illustrator also got a ton of cool new features, including better snapping, an enhanced font browser, and Turntable, which lets you draw a vector image once and turn it to any angle you like while keeping it consistent (lots of cool use cases here).

The Little Things That Add Up

Put all of those features in a list, and it's clear that Adobe's secret weapon might be the small features that solve real annoyances:

- Generative Layer Naming: AI automatically names your Photoshop layers based on what's in them.

- Generative Upscale with Topaz: Turn ancient low-res photos into 4K masterpieces.

- Harmonize in Photoshop: One-click realistic compositing that matches lighting and color.

- Assisted Culling in Lightroom: AI finds your best shots from thousands of photos.

- AI Object Mask in Premiere: Goodbye manual rotoscoping, hello automatic object isolation.

- Dust Removal in Lightroom: Detects and removes sensor dust across entire catalogs.

- Reflection Removal: Slider-based control to remove (or keep) window reflections.

YouTube Partnership: Shorts Get Serious

Adobe partnered with YouTube to bring Premiere editing directly to YouTube Shorts. A new "Create for YouTube Shorts" space in Premiere mobile lets creators edit and publish directly to Shorts with exclusive effects and templates. One-tap publishing. No export hassles.

For the Enterprise: Scaling Content with GenStudio

Adobe is extending these capabilities to its enterprise customers with Adobe GenStudio, a platform designed for creating personalized content at scale. Here, the concept of custom models is elevated with Firefly Foundry, a service where Adobe's own researchers and engineers work directly with brands to train secure, proprietary models on their entire product catalogs and brand assets.

For businesses, GenStudio got massive upgrades:

- Firefly Creative Production: Edit thousands of images at once (batch background replacement, color grading, cropping).

- Direct integrations with Amazon Ads, Google Marketing Platform, TikTok, LinkedIn.

- Performance marketing optimization built in.

- Brand-safe, rights-cleared content at scale.

Furthermore, Firefly Creative Production for Enterprise introduces a powerful, node-based interface similar to ComfyUI, allowing businesses to build automated workflows for repetitive tasks like replacing backgrounds on thousands of product shots or resizing a campaign for dozens of different platforms.

With a promotional offer of unlimited Firefly generations through December 1, 2025, Adobe is aggressively pushing creators of all levels—from individual artists to global marketing teams—to embrace this new AI-powered future. The message from MAX 2025 is that the blank canvas is no longer empty; it’s now an intelligent, conversational partner ready to create.

The Bottom Line

In our opinion, Adobe fundamentally reimagined how AI should work in creative software. Instead of AI as a separate thing you go to, it's woven into everything you already do. Instead of one-size-fits-all AI, you get to choose your model. Instead of AI replacing your workflow, it amplifies it. This is what genAI for creators should have always been, y'all.

The small touches—like being able to use Topaz upscaling directly in Photoshop, or having AI name your layers—show Adobe actually understands what drives creators crazy. The big swings—like custom models and the video editor—show they're not ceding ground to anyone. Your move, AI upstarts.

Most importantly, the UI/UX philosophy throughout is spot-on: AI assists where you want assistance, then hands back control. You're never fighting the AI or working around it. You're working with it.