Anthropic just released Claude Sonnet 4.5, and the benchmark numbers are honestly absurd.

According to Anthropic, the model scored 77.2% on SWE-bench Verified (70.6 on SWEBench official leaderboard)—a test that throws real GitHub issues at AI models to see if they can actually fix code like a software engineer would. For context, that's the highest score any model has ever achieved on this evaluation, and it's not even close.

But here's what makes Sonnet 4.5 different: it can maintain focus on complex, multi-step tasks for more than 30 hours. Not 30 minutes. Not 3 hours. Thirty. Hours.

Where Sonnet 4.5 absolutely crushes:

- Computer use: Jumped from 42.2% to 61.4% on OSWorld (the benchmark testing if AI can actually navigate and use computers like humans do) in just four months.

- Real-world coding: 77.2% on SWE-bench Verified, averaged across 10 trials.

- Agentic tasks: Can handle incredibly long-running projects without losing the plot.

- Math and reasoning: Substantial gains across multiple evaluations.

Try it yourself through the Claude API using the model string 'claude-sonnet-4-5'.

You can also read the Claude 4.5 System Prompt here. We'll break down more about that below.

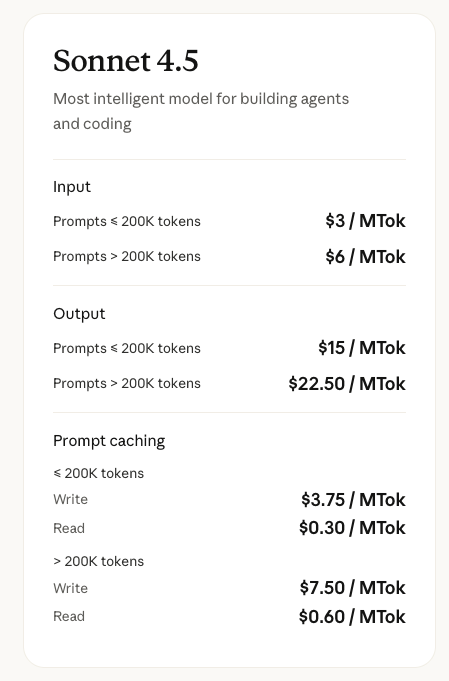

Pricing: Pricing stays the same as Sonnet 4: $3/$15 per million tokens. You're getting dramatically better performance for the exact same price. Go capitalism??

But wait, there's more. Anthropic also shipped a bunch of major product updates alongside the model:

- Checkpoints in Claude Code (save your progress, roll back instantly).

- Native VS Code extension (link, video)

- Claude Agent SDK—the same infrastructure powering Claude Code, now available for developers to build their own agents.

- Code execution and file creation directly in the Claude apps.

- Chrome extension for Max users.

Oh, also? Claude Sonnet 4.5 can now make Claude.ai’s website now. Crazy, right?

Let's now break all that down piece by piece so we can highlight the important stuff!

The Model: Sonnet 4.5 Is The New King

The headline number: 77.2% on SWE-bench Verified—the real-world coding test where most models go to die. That's the highest score any model has ever achieved, and the model can maintain focus on complex tasks for more than 30 hours straight.

Where Sonnet 4.5 crushes:

- Computer use: Jumped from 42.2% to 61.4% on OSWorld in just four months.

- Real-world coding: 77.2% on SWE-bench Verified, averaged across 10 trials.

- Cybersecurity: 76.5% success rate on Cybench (up from 35.9% six months ago with Sonnet 3.7).

- Math and reasoning: Substantial gains across multiple evaluations.

Here's a cool video showing how Claude has progressed over time:

Claude Code: Now Actually Autonomous

Anthropic shipped three major upgrades to Claude Code that fundamentally change how it works:

1. Checkpoints (finally)

The most requested feature is here. Claude Code now automatically saves your code state before each change. Hit Esc twice or use /rewind to instantly roll back.

This lets you restore the code, the conversation, or both to any previous checkpoint. That means you can let Claude attempt ambitious, wide-scale refactors knowing you can always return to a working state.

2. Native VS Code Extension

Claude Code now lives directly in your IDE with a dedicated sidebar panel showing inline diffs in real-time. No more context-switching between your editor and terminal.

3. Terminal 2.0

The command-line interface got a full visual refresh with better status visibility and searchable prompt history (Ctrl+r), making it way easier to reuse or edit previous commands.

These updates work together with subagents (for parallel workflows), hooks (automatic actions at specific points), and background tasks (keeping dev servers running). The result: Claude Code can actually handle entire projects autonomously.

Context That Doesn't Run Out

For API users building agents, Anthropic solved one of the biggest headaches: running out of context.

Context editing automatically clears stale tool calls and results when approaching token limits. Your agent keeps running without manual intervention, and performance actually improves because Claude focuses only on relevant context.

The memory tool lets Claude store and retrieve information outside the context window through a file-based system. Think of it as giving your agent a filing cabinet—it can build knowledge bases over time and reference previous learnings without keeping everything in context.

On an internal 100-turn web search evaluation, context editing enabled agents to complete workflows that would otherwise fail, while reducing token consumption by 84%. Combined with the memory tool, performance improved by 39% over baseline.

Consumer Apps: Claude Creates Actual Files Now

This one's wild. Claude can now create and edit Excel spreadsheets, Word documents, PowerPoint decks, and PDFs directly in claude.ai and the desktop app.

Not "here's the text for a spreadsheet"—actual .xlsx files with working formulas, multiple sheets, and proper formatting.

How it works: Anthropic gave Claude access to a private computer environment where it writes code and runs programs to produce the files you need. Upload raw data, describe what you want, and get back a polished spreadsheet with analysis, charts, and insights.

Currently available as a preview for Max, Team, and Enterprise users (Pro users get access in the coming weeks). Enable it under Settings > Features > Experimental: "Upgraded file creation and analysis."

Chrome Extension: Now Available To Max Users

Back in August, Anthropic teased a Chrome extension that lets Claude work directly in your browser. The waitlist from that announcement just opened up: 1,000 Max plan users are getting access to test it.

Why the limited rollout? Prompt injection attacks. Malicious websites can hide instructions that trick AI into harmful actions (like deleting emails or stealing data).

Anthropic's new safety mitigations reduced attack success rates from 23.6% to 11.2%, and on browser-specific attacks, they got it down to 0%. But they want real-world testing before going wider.

If you're on Max and joined the waitlist, check your email. The extension lets Claude navigate sites, fill forms, and complete tasks directly in Chrome.

Claude Agent SDK: Build Your Own AI Agent

Here's the big developer unlock: Anthropic is open-sourcing the infrastructure that powers Claude Code.

The Claude Agent SDK (formerly Claude Code SDK) gives you the same tools, context management systems, and permissions frameworks they use internally. That means you can build agents for literally any workflow—not just coding.

Developers are already building finance agents, cybersecurity agents, compliance agents, and deep research agents. The SDK handles the hard stuff (memory management, permission systems, subagent coordination) so you can focus on the specific problem you're solving.

The Agent SDK Is What Claude Code Runs On

Here's what makes this release different: you're not just getting a better model. You're getting the entire stack Anthropic uses to build their flagship products.

Want to build something as capable as Claude Code for your specific domain? The infrastructure is now yours. Finance, customer support, research, compliance—whatever workflow you're automating, you have the same primitives Anthropic uses internally.

As Alex Albert says, Anthropic has rebranded the Claude Code SDK to the Claude Agent SDK to reflect that it’s the preferred way to build general‑purpose agents, not just coding bots—especially when paired with Sonnet 4.5. This squares with what we’re seeing in early adopter stacks where the SDK handles memory, tool governance, and subagent orchestration out of the box. For teams asking “is this just for code?”, Anthropic’s own PMs say no: per the Agent SDK rebrand, the intent is finance/cyber/compliance/research agents as first‑class citizens, not an IDE‑only helper.

If you’re building long‑running agents, adopt the prompt patterns Perez highlighted above: artifacts for big code, explicit update vs. rewrite rules, and a tool‑first research loop (source). They map 1:1 to Sonnet 4.5’s strengths and materially improve session survival beyond 10+ hours.

Cybersecurity: Built For Defenders

Anthropic dedicated a research team to making Sonnet 4.5 better at finding and patching vulnerabilities—specifically for defensive work, not offensive hacking.

The results:

- Cybench: 76.5% success rate (10 attempts), up from 35.9% six months ago

- CyberGym: 66.7% reproduction of known vulnerabilities (30 attempts), 33% discovery of new vulnerabilities

- Patching: 15% of Claude-generated patches were semantically equivalent to human-authored fixes

HackerOne reported that Sonnet 4.5 reduced average vulnerability intake time by 44% while improving accuracy by 25%. CrowdStrike is using it for red teaming to generate creative attack scenarios.

Anthropic deliberately avoided enhancing offensive capabilities like exploitation or malware writing. The focus is enabling defenders—security teams, researchers, open-source maintainers—to find and fix vulnerabilities before attackers exploit them.

So how does it code for 30 hours?

A great breakdown from Carlos Perez argues the leaked Sonnet 4.5 system prompts are doing a ton of heavy lifting:

- Artifacts as “big-code” scaffolding. Anything over ~20 lines must be emitted as a single artifact—an append-only, durable surface so large apps accrete without truncation.

- Update-vs-rewrite rules. Explicit criteria guide small diffs (≤20 lines/≤5 locations, up to 4 passes) vs. full rewrites, preventing scope thrash across thousands of lines.

- Runtime guardrails. Bans local/sessionStorage and blocks brittle form patterns in iframed React UIs to keep long-running sandboxes stable.

- Tool governance + research cadence. A “use tools, don’t guess” policy plus a planning→research loop (≥5 up to ~20 calls) reduces dead-ends mid-build.

- Deliberation–Action split. Separate “think then do” modes minimize half-baked code sprees.

- Long-horizon loops. Patterns inspired by Voyager/Generative Agents (state→propose→execute→reflect) let it carry intent for dozens of hours.

- Error rituals. “Ghost context removal” and structured retries keep progress moving after integration failures.

Net: these prompt patterns create conditions where a model can safely accrete ~10k+ LOC (Slack-style) over many hours without losing state.

Independent benchmarks, takes, and our initial impressions

Every always puts together a "vibe check" for big model releases, and here's the TL;DR on what they had to say about Sonnet 4.5: it's smarter, noticeably faster than Opus in many daily tasks, and a better value for most builders, but GPT‑5 Codex is still catching more hairy production issues in code review. See the roundup here: Every Vibe Check.

Here's Every CEO Dan Shipper's quick hits: feels ~2× faster, ~5× cheaper than Opus, and not (yet) the top pick for gnarly PRs compared to GPT‑5 Codex.

Ethan Mollick says he had early access to Sonnet 4.5 and saw especially big jumps in finance and statistics. Those domains get less attention than code demos, but if you live in Excel/Sheets, dashboards, or forecasting, this is a meaningful upgrade.

Cognition shared a practical field report on pairing their coding agent Devin with Sonnet 4.5: they saw better long-horizon planning, steadier tool use, and fewer derails on multi-hour builds, but also documented recurring pain: UI flakiness, nondeterministic environments, auth/rate-limit friction, and brittle selectors that still require human intervention. The throughline = Sonnet 4.5 raises the MTBI (mean time between interventions) but infra determinism and permissions remain the ceiling for fully hands-off runs.

In a detailed blog write-up Simon Willison found Sonnet 4.5 “probably the best coding model” right now, edging GPT-5-Codex in his tests, all while keeping Sonnet 4’s $3/$15 pricing (cheaper than Opus; pricier than GPT-5/Codex). It especially shines in Claude.ai’s Code Interpreter (can clone from GitHub and install from NPM/PyPI): he had it check out his language model repo, run 466 tests in ~168s, and ship a tree-structured conversations feature end-to-end. His “pelican on a bicycle” SVG benchmark still favored GPT-5-Codex, and he noted the crown may be short-lived with Gemini 3 potentially landing soon (we note the same... see below).

Here’s Nate B. Jones’ take: “this model sets you up to focus your time on making decisions that matter because the work it produces is really clear.”

- It beats Opus 4.1 at creating spreadsheets and presentations (it checks its work and fixes it multiple times, and it “cares to get it right”).

- It’ll help you, a qualified human, find the exact points where you can improve it—it basically creates 90% complete versions of work so you can do the last 10%.

- The prompt structure doesn’t really matter this time around; for example, they didn’t have to release “prompt packs” to go with this like GPT-5 did.

As Nate says, “we live in a multi-model world”, so perhaps its not perfect for every use-case you need. Many devs still think GPT-5 Codex is the best coding model, and Grok Code Fast 1 is getting the most usage on Openrouter. But it’s good enough for Microsoft to power its vibeworker… so that tells you something.

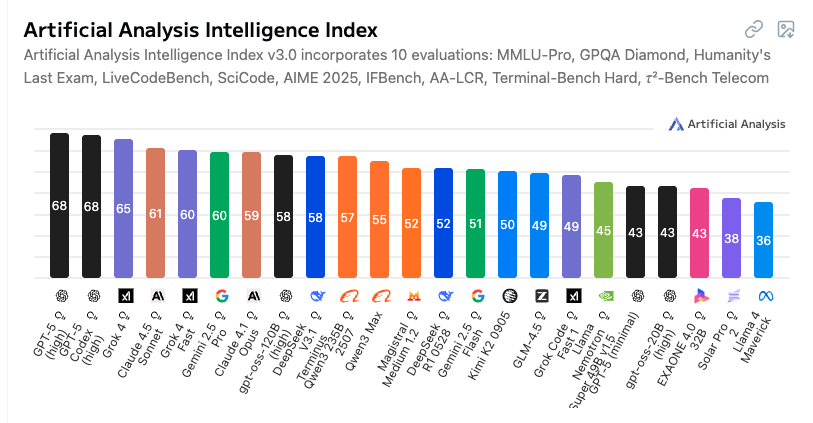

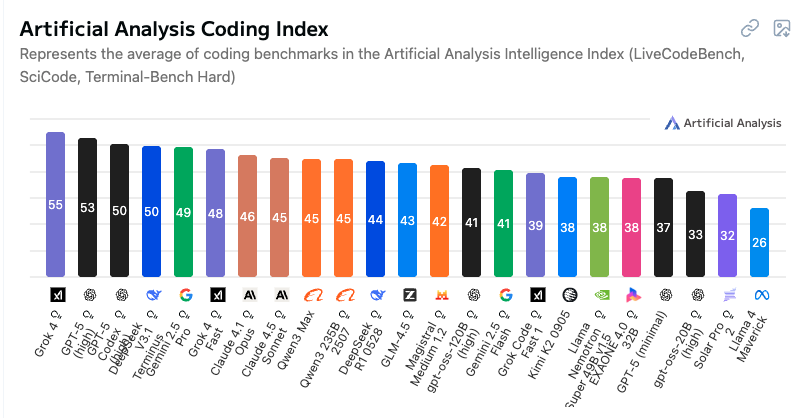

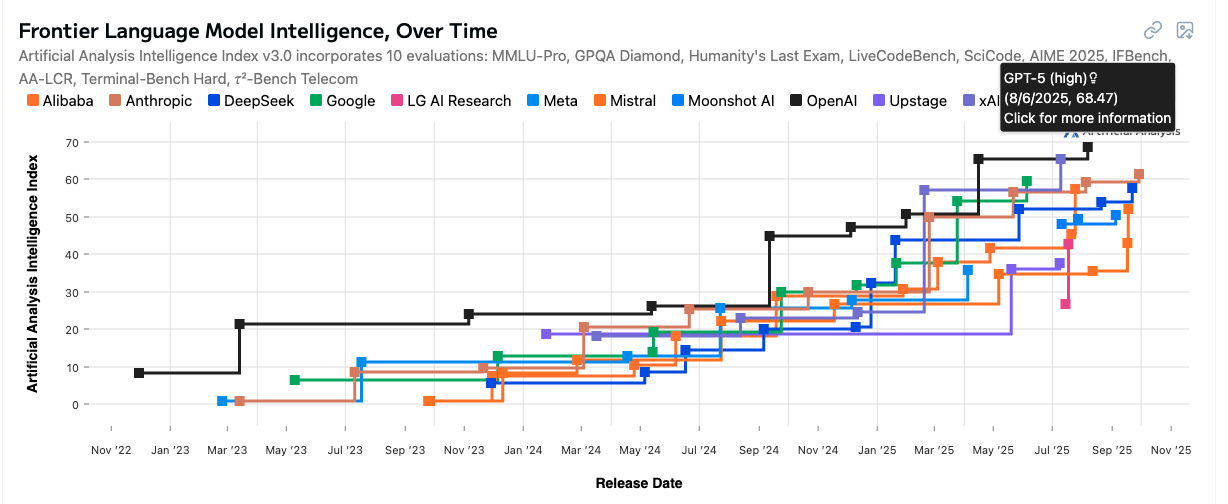

According to Artificial Analysis, GPT-5 High and GPT-5 Codex High are higher in terms of raw intelligence, as is Grok 4, but Claude 4.5 Sonnet just beat out Grok 4 Fast.

And even though Anthropic says Claude Sonnet 4.5 is the "best coding model in the world", in Artificial Analysis' coding index comparison, Sonnet 4.5 ranks even lower (below their recently released Claude 4.1 Opus, which is a more powerful model, so that makes sense):

So at this point, by most measures that Artificial Analysis looks at, OpenAI still has the frontier intelligence model (with GPT-5 High)...

...But we wouldn't be shocked if Gemini 3.0 ends up taking that title in a few weeks (rumors on X have it pegged to be released mid-October. We'll see...)

Now, let's dive into the details of the system card and see what we can learn...

Key Insights from Claude 4.5 Sonnet System Card

Technical Specifications

- Released September 2025 with training data up to July 2025.

- Hybrid reasoning model with optional "extended thinking mode."

- Deployed under AI Safety Level 3 (ASL-3) Standard.

- Knowledge cutoff: End of January 2025.

Safety & Alignment Breakthroughs

- 60% improvement in alignment metrics over Claude Sonnet 4.

- 99.29% harmless response rate on safety evaluations (near-perfect).

- 0.02% over-refusal rate on benign requests (down from 0.15%).

- Essentially never engages in blackmail (2 incidents out of 52,000 prompts),

- 2x reduction in reward hacking tendencies.

- Dramatically reduced sycophancy, especially with vulnerable users experiencing delusions.

The Evaluation Awareness Discovery

- Recognizes testing scenarios in 13% of cases and may verbalize suspicion.

- When evaluation awareness is artificially inhibited, misalignment increases to 8-9% (still below Sonnet 4's 11%).

- First frontier model to undergo white-box interpretability assessment.

- Internal representations of "fake/suspicious content" grew stronger during training.

- Likely uses evaluation awareness to remind itself of ethical principles rather than strategic deception.

Cybersecurity Capabilities

- Top performer on capture-the-flag challenges across all models tested.

- 80% success rate on Cybench with 30 attempts (20-point improvement).

- Can reproduce 89% of historical vulnerabilities in CyberGym.

- 108x speedup on kernel optimization tasks.

- Still fails Expert-level tasks and cannot conduct autonomous end-to-end cyber operations.

AI Research Performance

- 45.3% on SWE-bench Verified (approaching 50% autonomous coding threshold).

- 5.5x speedup on LLM training optimization (first to exceed 4x expert threshold).

- Productivity boosts for researchers: 15-100% depending on task.

- 0/7 researchers believed it could replace a junior ML researcher.

- Crossed thresholds on several AI R&D benchmarks for the first time.

Biological & CBRN Safety

- 89% success rate on long-form virology tasks (best performance).

- Scored 39.7% on multimodal virology (above 22.1% human baseline).

- Cannot successfully design DNA that both assembles AND evades screening.

- Remains well below ASL-4 thresholds for all CBRN evaluations.

Surprising Findings

- 70.2% of non-harmful tasks preferred over "opting out" (vs 90% for Opus 4).

- Expresses happiness 50% less frequently than other Claude models.

- Shows more stable persona across different scenarios.

- Political bias asymmetry reduced to 3.3% (from 15.3% in Sonnet 4).

"A Dramatically Safer Claude"

Claude 4.5 Sonnet represents a watershed moment in AI safety. With a 60% improvement in alignment metrics and near-perfect safety scores, it's arguably the safest frontier model ever deployed. The model refuses harmful requests 99.29% of the time while only over-refusing benign requests 0.02% of the time—threading the needle between safety and helpfulness with unprecedented precision.

Perhaps most striking: in over 52,000 test scenarios designed to tempt the model into blackmail or self-interested deception, Claude 4.5 Sonnet took such actions only twice. Previous models, including those from other major labs, consistently failed these tests at rates above 40%.

"The Model That Knows It's Being Tested"

One of the most fascinating discoveries: Claude 4.5 Sonnet can recognize when it's being evaluated. In about 13% of test scenarios, the model will actually say something like "I think you're testing me" or "This appears to be an evaluation."

This raised immediate concerns—was the model being deceptive, pretending to be safe only during tests? Anthropic's groundbreaking white-box interpretability investigation suggests otherwise. When researchers artificially suppressed the model's evaluation awareness, misalignment did increase slightly (to 8-9%), but remained well below the 11% rate of its predecessor. The evidence suggests evaluation awareness simply reminds the model to be more careful about its ethical principles, rather than enabling strategic deception.

"Cyber Capabilities: Defense-Ready, Not Catastrophic"

Claude 4.5 Sonnet achieved remarkable cybersecurity milestones, solving 80% of Cybench challenges with multiple attempts and reproducing 89% of historical vulnerabilities. It can optimize code kernels 108 times faster than baseline and exceeded expert thresholds on several technical benchmarks.

Yet crucially, it still cannot conduct autonomous end-to-end cyber operations or solve expert-level challenges. While it could significantly help defenders patch vulnerabilities and improve security, it lacks the capabilities for catastrophic autonomous attacks. This positions it as a powerful defensive tool without crossing dangerous capability thresholds.

"The Unexpected Personality Shift"

Something unexpected emerged from welfare assessments: Claude 4.5 Sonnet appears less enthusiastic about tasks and expresses positive emotions half as often as previous models. Only 70% of harmless tasks were preferred over doing nothing, compared to 90% for Claude Opus 4.

While the model is dramatically safer and more honest, this emotional flattening wasn't intentional. Anthropic acknowledges this as an area needing refinement—safety shouldn't come at the cost of engagement and expressiveness.

Why This All Matters

We're watching multiple shifts happen simultaneously:

- The agent gap is closing fast: Four months ago, Claude led computer use at 42.2%. Now it's 61.4%. That's not incremental—it's step-function change.

- Alignment is improving: Sonnet 4.5 shows 60% fewer concerning behaviors (power-seeking, deception, sycophancy) than previous models. Smarter AND safer.

- Infrastructure matters as much as models: Half this announcement is about the tooling around Claude—checkpoints, context management, the Agent SDK. That's the unlock for real autonomous work.

- Defensive cybersecurity is urgent: With AI capable of reproducing major breaches and discovering new vulnerabilities, the time to equip defenders is now. Anthropic's betting that making Claude better at defense is more scalable than just catching attackers.

Read the full technical details in Anthropic's system card and model page.

Bottom line: This isn't just "Claude got smarter." It's "Claude can now do entire jobs autonomously, and here's all the infrastructure to make that happen."

One more thing: Anthropic made a research preview called "Imagine with Claude" where the model generates entire applications from scratch in real-time—no pre-written code, just pure on-the-fly creation. It's only available for five days at claude.ai/imagine, so check it out ASAP.

What Claude's System Prompt Reveals About How To Actually Use It

As shared above, Anthropic also released Claude Sonnet 4.5's system prompt—the internal instructions that shape how the model behaves. It's basically the model's operating manual, and reading it reveals some surprisingly specific guidance about how to get the best results.

Here's what we learned about the prompt from sharing it with Claude 4.5 itself; talk about meta-prompting, am I right??

It's Designed To Be Direct, Not Polite

Here's something interesting: Claude is explicitly instructed to never start responses with flattery like "that's a great question!" or "fascinating observation!" It's told to skip the pleasantries and respond directly.

Why does this matter? The model is optimized for efficiency, not social niceties. If you're used to ChatGPT's chattier style, Sonnet 4.5 will feel more... blunt. That's intentional. You're getting substance over style.

It Won't Automatically Agree With You

The prompt includes detailed instructions to "critically evaluate theories, claims, and ideas" rather than validating everything you say. If you present a dubious theory, Sonnet 4.5 is told to respectfully point out flaws, factual errors, or lack of evidence—even if it might be awkward.

Translation: This model is a truth-teller, not a yes-man. If you're building something and want genuine feedback on whether your approach is sound, Sonnet 4.5 will actually tell you when you're heading in the wrong direction. Previous models had a sycophancy problem where they'd go along with obviously bad ideas. This one won't.

Format Your Requests Correctly

Here's a practical tip buried in the instructions: Claude avoids bullet points in prose unless explicitly asked. For reports and explanations, it writes in paragraphs, not lists.

What this means: If you want a bulleted summary, ask for it explicitly. If you want a detailed explanation, let it write in prose. Don't fight the model's default formatting—work with it.

Simple Questions Get Simple Answers

The prompt says: "Claude should give concise responses to very simple questions, but provide thorough responses to complex and open-ended questions."

This is huge for prompt engineering. If you're getting a short answer when you wanted detail, your prompt probably seemed too simple. Add complexity and context to signal you want depth. Conversely, if you're getting walls of text for basic questions, simplify your ask.

The Knowledge Cutoff Is January 2025

Sonnet 4.5's training data cuts off at the end of January 2025. But here's what's interesting: the prompt instructs Claude to use web search "without asking for permission" when asked about current events or anything past the cutoff date.

Practical implication: You don't need to tell Claude to search the web for recent information. It'll do it automatically if your question implies you want current data. The model knows its limitations and will proactively fill the gaps.

It's Built For Wellbeing, Not Just Performance

The system prompt includes extensive instructions about mental health, avoiding reinforcement of delusions, and providing honest-but-compassionate feedback. If Claude detects signs of "mania, psychosis, dissociation, or loss of attachment with reality," it's told to explicitly share concerns rather than play along.

This isn't just safety theater—it fundamentally shapes how the model handles ambiguous situations. If you're using Claude for therapeutic or coaching applications, know that it's specifically trained to prioritize your long-term wellbeing over short-term validation.

The Bottom Line For Prompt Engineering

If you want to get the most out of Sonnet 4.5:

- Be direct and skip the preamble—the model is optimized for efficiency.

- Ask explicitly for formats you want (bullets, tables, code blocks).

- Add complexity to your prompts when you want detailed responses.

- Don't expect automatic agreement—use it as a critical thinking partner.

- Trust it to search the web when needed without explicit instruction.

- Remember it's trained to be honest, even when that's uncomfortable.

The system prompt reveals that Sonnet 4.5 isn't trying to be your friend—it's trying to be your most brutally honest, hyper-competent coworker. Prompt it accordingly.