China's DeepSeek dropped DeepSeek-V3.2-Exp on September 29, an experimental model that's reimagining how AI handles long documents—think analyzing 300-page contracts, entire codebases, or your company's quarterly reports without breaking a sweat (or your budget).

Key Details: The secret sauce is something called DeepSeek Sparse Attention. Traditional AI models pay attention to every single word in a document. V3.2 instead focuses on the most relevant parts, kind of like how you skim a document for what matters instead of reading it word-for-word. That makes long-context work way faster and cheaper.

What's Changed:

- API prices dropped 50%+ effective immediately.

- Handles up to 128K tokens (roughly 350 pages of text), though limits can vary by API endpoint.

- Performs on par with the previous version, V3.1-Terminus, on benchmarks.

- Available now on DeepSeek's app, web, and API.
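
Where does "roughly 350 pages" come from? Here's a back-of-the-envelope sketch. It assumes two common rules of thumb that are ours, not DeepSeek's: about 0.75 English words per token and about 275 words per printed page. Both ratios shift with language, tokenizer, and formatting.

```python
def tokens_to_pages(tokens: int,
                    words_per_token: float = 0.75,  # rule-of-thumb assumption for English prose
                    words_per_page: int = 275) -> float:  # assumed words on a typical printed page
    """Estimate how many printed pages fit in a given token budget."""
    words = tokens * words_per_token
    return words / words_per_page


context_window = 128_000  # the 128K-token context cited above
pages = tokens_to_pages(context_window)
print(f"{context_window:,} tokens ~ {pages:.0f} pages")  # 128,000 tokens ~ 349 pages
```

Swap in your own ratios: code and non-English text often use more tokens per word, so the page count shrinks.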

Examples in Action: This matters most when you're working with massive documents:

- Legal teams can analyze entire case files in one go.

- Developers can debug across entire codebases without splitting them up.

- Finance folks can process quarterly reports and extract key insights faster.

- Anyone doing research can upload book-length PDFs and get real answers.

How It Actually Works: Here's the clever part, as Lisan al Gaib explains it. For each new token (a word or word fragment) the model processes, a tiny "lightning indexer" quickly scores how relevant every previous token in the document is. The model then runs full attention only on the 2,048 highest-scoring tokens instead of all of them.
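
To make the two-step "score everything cheaply, then attend to the top k" idea concrete, here's a toy, pure-Python sketch for a single query token. It is not DeepSeek's implementation: the real indexer is a separate lightweight network running over huge contexts, while this version assumes one tiny indexer head, made-up vectors, and a ReLU-gated dot product as the relevance score. The function names are hypothetical, and k is shrunk from 2,048 to 2.

```python
import math
from typing import List, Sequence

Vector = Sequence[float]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def indexer_scores(q_idx: Vector, k_idx: List[Vector]) -> List[float]:
    """Cheap relevance score for every previous token (assumed ReLU-gated dot product)."""
    return [max(0.0, dot(q_idx, k)) for k in k_idx]


def top_k_indices(scores: List[float], k: int) -> List[int]:
    """Positions of the k highest scores, returned in document order."""
    ranked = sorted(range(len(scores)), key=lambda i: (-scores[i], i))
    return sorted(ranked[:k])


def softmax(xs: List[float]) -> List[float]:
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def sparse_attention(q: Vector, keys: List[Vector], values: List[Vector],
                     q_idx: Vector, k_idx: List[Vector], k: int) -> List[float]:
    """Standard scaled dot-product attention, restricted to the indexer's top-k tokens.

    keys/values/k_idx hold only the current and earlier tokens (causal by construction).
    """
    selected = top_k_indices(indexer_scores(q_idx, k_idx), k)
    scale = 1.0 / math.sqrt(len(q))
    weights = softmax([dot(q, keys[i]) * scale for i in selected])
    dim = len(values[0])
    return [sum(w * values[i][d] for w, i in zip(weights, selected)) for d in range(dim)]


# Four previous tokens; the indexer uses its own tiny 1-D vectors (made-up numbers).
q = [1.0, 0.0]
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [-1.0, 0.0]]
values = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5], [2.0, 2.0]]
q_idx = [1.0]
k_idx = [[0.9], [-0.5], [0.7], [0.1]]

print(top_k_indices(indexer_scores(q_idx, k_idx), k=2))  # [0, 2]
print(sparse_attention(q, keys, values, q_idx, k_idx, k=2))  # [0.75, 0.25]
```

Note the trade-off: the indexer still touches every previous token, so that scoring step grows with the square of the context length, but each score is far cheaper than a full attention computation. The expensive part only ever sees k tokens.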

Think of it like this: when you're answering an email, you don't reread every single email in your inbox—you just pull up the 2-3 relevant threads. DeepSeek's doing the same thing, but at lightning speed.
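
To put rough numbers on the skim-instead-of-reread idea, this sketch counts the query-key pairs the main attention has to score across a full 128K-token sequence: dense, versus capped at 2,048 selected tokens per position. It's a simplified count under our own assumptions. It treats 128K as 128,000 tokens, ignores the indexer's own cheaper all-pairs scoring, and says nothing about real wall-clock speedups, which depend on hardware and kernels.

```python
def dense_causal_pairs(seq_len: int) -> int:
    """Token t (1-indexed) attends to itself and every earlier token: t pairs."""
    return seq_len * (seq_len + 1) // 2


def sparse_causal_pairs(seq_len: int, k: int) -> int:
    """Each token attends to at most k selected tokens (fewer near the start)."""
    return sum(min(t, k) for t in range(1, seq_len + 1))


L, K = 128_000, 2_048
dense = dense_causal_pairs(L)
sparse = sparse_causal_pairs(L, K)
print(f"dense:  {dense:,} pairs")   # dense:  8,192,064,000 pairs
print(f"sparse: {sparse:,} pairs")  # sparse: 260,047,872 pairs
print(f"ratio:  {dense / sparse:.1f}x fewer pairs in the main attention")  # 31.5x
```

The gap widens as documents get longer: dense work grows with the square of the length, while the capped version grows roughly linearly once you're past the first 2,048 tokens.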

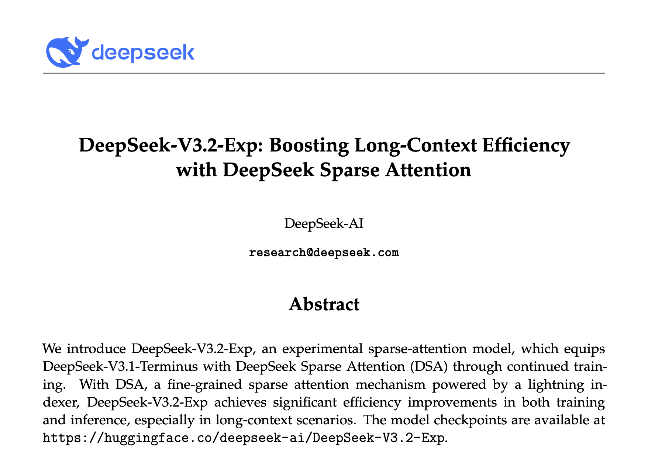

The Training Process: They built this in two stages on top of their V3.1-Terminus model:

- Dense Warm-Up (~2.1B tokens): Trained just the indexer to learn which tokens matter, keeping everything else frozen.

- Sparse Training (~944B tokens): Unfroze the whole model and taught it to work with the indexer's selective attention.
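
How does the indexer "learn which tokens matter"? Our reading of the technical report is that, during the dense warm-up, the indexer is trained to imitate the frozen model's own attention: the main model's attention is summed across heads and normalized into a target distribution, and the indexer's softmaxed scores are pulled toward it with a KL-divergence loss. The toy sketch below shows only that loss for one query token. The numbers are made up, `warmup_loss` is a hypothetical name, and the report's exact normalization may differ.

```python
import math
from typing import List


def softmax(xs: List[float]) -> List[float]:
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def kl_divergence(p: List[float], q: List[float]) -> float:
    """KL(p || q) = sum of p_i * log(p_i / q_i); terms with p_i == 0 contribute nothing."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def warmup_loss(head_attn: List[List[float]], indexer_scores: List[float]) -> float:
    """Hypothetical warm-up objective for a single query token.

    head_attn: attention weights from each frozen main-model head over previous tokens.
    indexer_scores: raw indexer scores over the same tokens (the only trainable part).
    """
    # Sum attention across heads, then L1-normalize into one target distribution.
    summed = [sum(col) for col in zip(*head_attn)]
    total = sum(summed)
    target = [s / total for s in summed]
    return kl_divergence(target, softmax(indexer_scores))


heads = [[0.7, 0.2, 0.1],   # head 1 mostly looks at token 0
         [0.5, 0.3, 0.2]]   # head 2 agrees, less sharply
good_indexer = [2.0, 0.5, 0.0]  # ranks token 0 highest, like the heads
bad_indexer = [0.0, 0.5, 2.0]   # ranks token 2 highest
print(warmup_loss(heads, good_indexer) < warmup_loss(heads, bad_indexer))  # True
```

As we understand the report, the second stage keeps training the indexer on a similar objective (restricted to the tokens it selects) while the rest of the now-unfrozen model trains on the usual next-token loss.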

Total training: roughly 946B tokens, just shy of 1 trillion. The result? Benchmarks show it performs basically on par with the previous version while being way cheaper to run.

The Infrastructure Play: Here's what makes this release really interesting—it's not just about the model. DeepSeek is also showcasing some serious infrastructure work that could reshape the AI hardware game.

The model launched with day-0 support for Chinese chips like Huawei Ascend and Cambricon. That's a big deal because most AI models are built primarily for Nvidia GPUs. As Yuchen Jin (of Hyperbolic Labs) pointed out, DeepSeek is also using TileLang, an ML compiler that, per Jin, lets developers write about 80 lines of Python and get 95% of the performance of hand-written CUDA code (CUDA being Nvidia's GPU programming platform).

Translation: Instead of spending weeks writing low-level code optimized for specific chips, you can write simple Python and let the compiler handle the hard part. This makes it way easier to run AI models on different types of hardware.

Why This Matters: Most AI tools charge you per token (a chunk of text, often just part of a word), so processing long documents gets expensive fast. DeepSeek's approach is like hiring a speed-reader who knows exactly what to focus on: you get the same quality analysis at half the cost.
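
Because billing scales with token count, a price cut compounds on long-document workloads. Here's a minimal sketch of the arithmetic. The $0.50-per-million-token price is a made-up placeholder, not DeepSeek's rate card, and the 50% cut mirrors the "50%+" figure above. Real bills usually price input and output tokens separately and may discount cached input.

```python
def job_cost(tokens: int, price_per_million: float) -> float:
    """Dollar cost of processing `tokens` at a flat per-million-token price."""
    return tokens / 1_000_000 * price_per_million


OLD_PRICE = 0.50             # hypothetical $ per 1M tokens, for illustration only
NEW_PRICE = OLD_PRICE * 0.5  # a 50% price cut

docs = 1_000                 # e.g. a batch of long contracts (illustrative)
tokens_per_doc = 100_000     # each one close to filling a 128K context window

old = job_cost(docs * tokens_per_doc, OLD_PRICE)
new = job_cost(docs * tokens_per_doc, NEW_PRICE)
print(f"before: ${old:,.2f}  after: ${new:,.2f}  saved: ${old - new:,.2f}")
# before: $50.00  after: $25.00  saved: $25.00
```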

The company is keeping its previous model (V3.1-Terminus) available until October 15th so users can run side-by-side comparisons, which is refreshingly transparent for an "experimental" release.

Also, Chinese AI labs have been training competitive models on fewer, "nerfed" Nvidia GPUs because of US export restrictions. DeepSeek's focus on infrastructure suggests they're building the tools to work around those limitations.

Jin's take: "If you ever wonder why Chinese companies like DeepSeek, Qwen, and Kimi can train strong LLMs with far fewer and nerfed Nvidia GPUs, remember: In 1969, NASA's Apollo mission landed people on the moon with a computer that had just 4KB of RAM. Creativity loves constraints."

The broader implication? Once Chinese labs have full access to domestically produced chips and compiler tools that work seamlessly across different hardware, they could dramatically scale up training while driving API costs even lower.

FYI: Since this is experimental, DeepSeek is actively looking for feedback on potential issues. They're betting on users to stress-test it in real-world scenarios (provide your feedback here).

Check it out: Try the model at DeepSeek's platform or read the technical report if you're feeling nerdy.