OpenAI and Anthropic data reveals AI's two secret identities.

You probably think AI's big story is how it's changing work. But two new landmark reports from rivals OpenAI and Anthropic show that’s only half the story—and not even the most interesting half. After analyzing millions of user interactions, they reveal that AI is living a double life.

The dueling reports from OpenAI and Anthropic reveal two parallel AI revolutions: for you, the individual, AI is becoming a personal advisor for life's decisions, while businesses are deploying it as a ruthless automation engine for work.

First, the personal: The new OpenAI report found that a whopping 73% of ChatGPT use is for personal, non-work tasks. People value it most not for doing their work, but for helping them think. The top use is "Asking" for advice and decision support—from getting a workout plan to brainstorming a creative project.

But in the business world, it's the complete opposite. Anthropic’s first-ever look at enterprise API data shows:

- It’s all about automation. A staggering 77% of business use involves delegating a complete task to Claude, not collaborating with it.

- The focus is narrow. Businesses are using it for specialized tasks like coding, administrative support, and creating marketing copy.

- Cost barely matters. Higher-cost tasks actually see more usage, suggesting that, for now, capability counts for more than price.

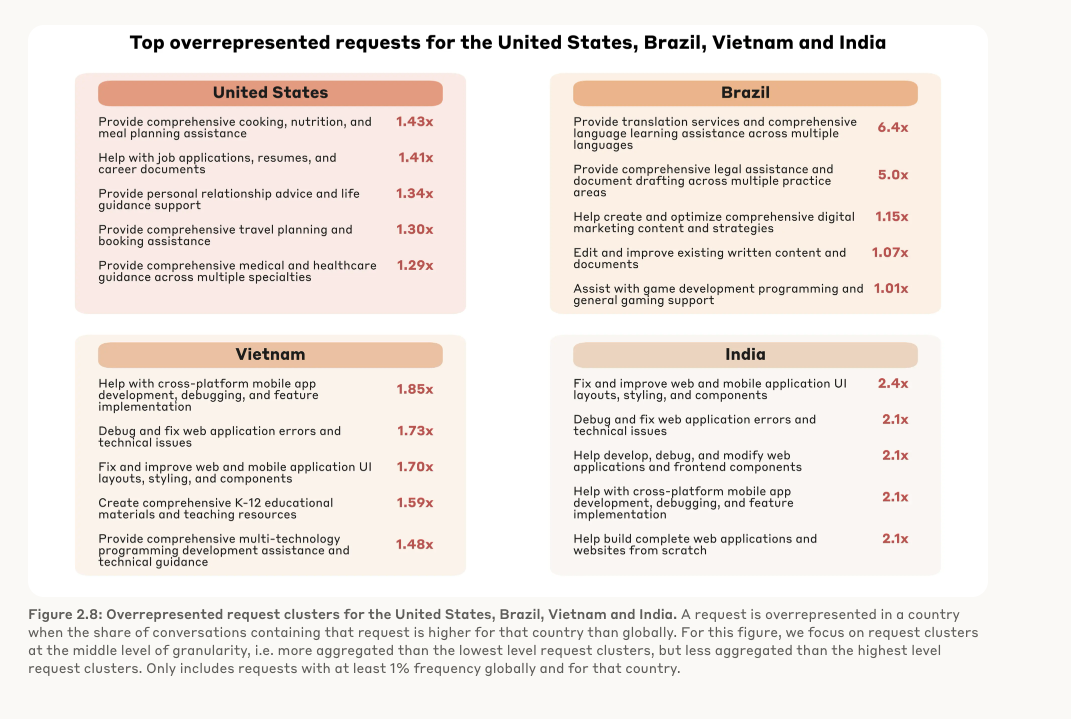

This split is creating a Great AI Divide, not just between individuals and businesses, but between nations. Anthropic’s data shows that wealthy, tech-savvy countries like Israel and Singapore use Claude at up to 7x the rate their working-age population would predict, while emerging economies like India and Nigeria come in at roughly a quarter of their expected rate or less, threatening to widen global inequality.

This reveals two distinct paths for AI. For professionals, your edge isn't in getting AI to do your job, but in using it to augment your thinking and decision-making—the "Asking" skill that businesses aren't focused on. For founders and leaders, the game is about overcoming the "context bottleneck." The winning companies won't just have the best AI; they'll have the best-organized data to feed it. Start treating your internal data like a strategic asset, because it’s the fuel for the automation engine.

Let's dive into both reports below, and then compare and contrast them to see what insights we can glean.

Here's how 700 million people actually use ChatGPT.

You probably think most people use ChatGPT for work—writing code, drafting marketing copy, or summarizing meetings. But a new landmark paper from researchers at OpenAI, Harvard, and Duke pulls back the curtain, and the results are not what you’d expect.

After analyzing a massive, privacy-protected sample of conversations, they found that ChatGPT’s main role isn't a workplace tool. It's a life tool.

Here’s the biggest surprise: As of mid-2025, a whopping 73% of all ChatGPT use is for personal, non-work-related tasks. That’s up from 53% just a year ago. It seems the more people use it, the more they integrate it into their daily lives, not just their 9-to-5.

So what are people doing with it? The study breaks it down into a few key areas:

- Practical Guidance (29%): This is the #1 use case. Think personalized advice for just about anything: creating a workout plan, learning a new skill, getting recipe ideas, or brainstorming a name for your fantasy football team. If you're shook by this, you shouldn't be. Sam's been saying this A LOT lately.

- Writing (24%): Still a classic. But interestingly, two-thirds of writing tasks are about editing, summarizing, or translating text users provide, not creating new content from scratch (this makes sense, since GPT-5 is not such a great writer, in our opinion...).

- Seeking Information (24%): No surprise here, but ChatGPT is quickly becoming a go-to search engine replacement for getting straight answers on people, products, and events.

As for work use-cases? When people do use it for their jobs, writing is king, accounting for 40% of all professional use. But the most valuable interactions aren't about automation. The researchers found that users get the most satisfaction when "Asking" for advice to support their decisions, rather than telling the AI what to "Do." Highly educated professionals, in particular, use it more as a co-pilot to improve their judgment than as a worker to delegate tasks to. So for those of us who use it as a task-doer, does that mean we aren't highly educated? Rude.

Other interesting tidbits: The early gender gap has vanished, with slightly more female users than male now. And usage is growing fastest in low- and middle-income countries, showing its global reach.

What this means for you: This data confirms that generative AI's power isn't just in automating what you already do. It's in becoming a thought partner. Instead of just prompting "write me an email," try using it to "help me decide the best way to approach this client" or "act as a sounding board for this strategic plan." The most valuable use of ChatGPT isn't getting it to do your work, but helping you think better about it. And whether or not that's a good sign for society is still TBD...

Below we dive a bit deeper into the details, and then we'll compare and contrast it against Anthropic's recent Economic Index paper.

The Biggest Surprise: It’s Mostly Not for Work

The most striking finding from the report is the clear dominance of non-work-related usage. As shared above, a staggering 73% of all messages sent to ChatGPT on consumer plans were unrelated to paid employment. This figure is up from 53% just a year prior, indicating that as the tool has become more mainstream, its personal applications have outpaced its professional ones.

This trend isn't just due to new, casual users signing up; the researchers found that even the earliest adopters are increasingly using the chatbot for personal tasks. This suggests that while much of the economic analysis has focused on AI's potential to boost workplace productivity, its impact on "home production"—helping people manage their lives, learn new skills, and explore hobbies—may be equally, if not more, significant. This aligns with recent estimates suggesting the consumer surplus from generative AI in the U.S. alone could be nearly $100 billion annually. People are deriving immense value from it outside the office.

So, What Are People Doing? The "Big Three" Use Cases

The research team developed a taxonomy to classify conversations, finding that nearly 80% of all interactions fall into three main categories:

- Practical Guidance (29%): This is the most common use case and covers a wide spectrum of personalized advice. It includes everything from getting a tailored workout plan and brainstorming ideas for a creative project to receiving step-by-step instructions on a home repair. A significant portion of this category—over 10% of all ChatGPT messages—is for tutoring and teaching, confirming that education is a killer app for the technology.

- Seeking Information (24%): This category, which has grown rapidly over the last year, positions ChatGPT as a direct competitor to traditional search engines. Users are asking for factual information about people, products, current events, and recipes. The key difference is the conversational format and the ability to ask follow-up questions for clarification.

- Writing (24%): While its share of total usage has declined as other use cases have grown, writing remains a cornerstone of the ChatGPT experience. This includes drafting emails, creating documents, and composing social media posts. Interestingly, about two-thirds of all writing-related tasks involve asking the AI to modify user-provided text—such as editing for tone, critiquing a draft, summarizing a long article, or translating a phrase—rather than generating new content from scratch.

Notably, some of the most hyped use cases represent a much smaller slice of the pie. Computer programming, for instance, accounts for only 4.2% of messages on consumer plans. And despite anecdotal reports, usage for companionship and personal reflection is also relatively low, with "Relationships and Personal Reflection" at just 1.9% and "Games and Role Play" at a mere 0.4%.

A Tool for Decision Support, Not Just Automation

To dig deeper into user intent, the researchers introduced a simple but powerful framework, classifying messages as either "Asking," "Doing," or "Expressing."

- Asking (49%): The user is seeking information, advice, or clarification to help them make a better decision. This is about decision support.

- Doing (40%): The user wants ChatGPT to perform a task and produce a tangible output, like code, an email, or a table. This is about automation.

- Expressing (11%): The user is simply chatting or expressing feelings without a clear task-oriented goal.

Over the past year, "Asking" has grown faster than "Doing," and user satisfaction ratings are consistently higher for "Asking" interactions. This suggests users find the most value when using ChatGPT as a thought partner or advisor.

This dynamic is particularly relevant in the workplace. While "Doing" is more prevalent at work (56% of messages), with writing tasks making up the bulk of it, the study found that highly educated professionals in knowledge-intensive jobs are significantly more likely to use ChatGPT for "Asking." They use it to analyze problems, weigh options, and get a second opinion—leveraging AI not to replace their work, but to enhance their judgment.

This is supported by an analysis mapping usage to O*NET, the U.S. Department of Labor's database of job activities. Across nearly all professions—from management and science to sales and administration—the most common work activities involve "Making Decisions and Solving Problems" and "Getting Information." ChatGPT is being used as a universal tool for knowledge work.

Who is Using ChatGPT? A Picture of the Modern User

The paper also provides the clearest demographic picture of ChatGPT's user base to date:

- Gender: The initial, heavily male-dominated user base has given way to near-parity. By mid-2025, users with typically feminine first names slightly outnumbered those with typically masculine ones, indicating a successful transition to a mainstream audience.

- Age: The platform skews young, with nearly half of all adult messages coming from users aged 18-25. Older users, however, are more likely to use it for professional tasks.

- Geography: While early adoption was concentrated in high-income nations, the fastest growth over the past year has come from low- and middle-income countries, demonstrating the technology's global appeal and accessibility.

Related to age, the chart below is an interesting one: it shows the likelihood of a message being work-related by age. No surprises here: the highest work usage is among 36-45 year olds (31.4%), 46-55 year olds (30.2%), and 26-35 year olds (29.1%).

So ChatGPT = An Advisor for Everyone?

The OpenAI paper fundamentally reframes our understanding of generative AI. It's not just a productivity hack for coders and marketers; it's a general-purpose tool that millions are integrating into their daily personal and professional lives. The data shows that its greatest value may lie in its ability to serve as a universal advisor—a co-pilot for navigating the complexities of modern life and work.

As the technology continues to evolve, this trend is likely to accelerate. The clear preference for "Asking" over "Doing" suggests a future where AI's primary role isn't just to automate tasks we already know how to do, but to help us think better, learn faster, and make more informed decisions in every facet of our lives.

Now, let's compare this report against Anthropic's recently released data...

Anthropic's Third AI Economic Index reveals a "Great AI Divide"

Anthropic just dropped its latest Economic Index report for September 2025, a deep-dive into millions of Claude conversations that reveals how AI is really being used at work, and the results are pretty eye-opening. While adoption is exploding, it's also incredibly uneven, creating a great divide between countries and companies.

The report unveils a new "AI Usage Index" (AUI) that measures Claude usage against a region's population. The findings show a stark correlation with wealth.

Here’s where AI usage is concentrated:

- Globally: Small, rich countries are dominating. Israel and Singapore use Claude 7x and 4.6x more than expected, respectively. Meanwhile, emerging economies like India (0.27x) and Nigeria (0.2x) are falling far behind.

- In the US: Forget Silicon Valley. Washington D.C. (3.82x) and Utah (3.78x) lead the nation in per-capita use, showing how local economies shape AI adoption.

The big surprise is in how people are using AI. Lower-adoption countries tend to use Claude for straight-up automation (i.e., "write this code for me"). But in high-adoption regions, users are more collaborative, using AI for learning and brainstorming—a more sophisticated, augmented approach. This could create a major skill gap down the line.

When looking at businesses using Claude's API, the trend toward automation is even more extreme. A whopping 77% of enterprise API usage is for automation (full task delegation), compared to just 50% for regular consumers. Businesses aren't looking for a coding buddy; they're integrating a new production tool. And they don't seem to care about the cost, either. The report found that higher-cost tasks actually see more usage, suggesting that performance and value are all that matter right now.

But there's a huge bottleneck emerging. To get Claude to perform complex tasks, companies need to feed it massive amounts of context. This "context bottleneck" means that businesses with messy, siloed data will struggle to deploy AI effectively, creating another divide between the prepared and the unprepared.

What to do: This report is a wake-up call. The AI divide is real and growing. For professionals, the message is clear: move beyond simple automation. The most valuable skill is learning how to collaborate with AI for augmentation, not just delegation. For founders and business leaders, the "context bottleneck" is your next major challenge. Start investing in data modernization and centralizing information now. The companies that can feed their AI the best context will win, and those that can't will be left behind.

Let's dive into the report more in depth and see what else we can learn before we compare it against OpenAI's data.

The Uneven Geography of AI Adoption

Anthropic says AI is spreading faster than any technology in human history. In just two years, its adoption rate among US employees has doubled from 20% to a staggering 40%, a feat that took the internet five years and personal computers two decades to achieve.

For the first time, Anthropic provides a geographic breakdown of Claude usage, and the results are stark. The report introduces the "Anthropic AI Usage Index" (AUI), a metric that measures how much a region uses Claude relative to its working-age population. The findings confirm a long-held suspicion: AI adoption is deeply correlated with wealth.

Globally, small, technologically advanced, and high-income nations are lapping the field. Israel leads the world with an AUI of 7.0, meaning its population uses Claude seven times more than expected. Singapore (4.57) and Australia (4.10) follow, leveraging their robust digital infrastructure and educated workforces.

Meanwhile, large emerging economies are being left behind. India, despite having the second-highest total usage, has an AUI of just 0.27. Nigeria's is 0.2. This pattern suggests that the productivity gains from AI may concentrate in already-rich countries, potentially widening global economic inequality and reversing decades of growth convergence.
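To make the index concrete, here is a minimal sketch of how a number like this could be computed. We are assuming the AUI is a region's share of Claude usage divided by its share of the working-age population; the function name and every input below are hypothetical, picked only to reproduce the shape of the 7.0 and 0.27 readings, and none of them come from Anthropic's dataset.

```python
def usage_index(region_usage: float, total_usage: float,
                region_pop: float, total_pop: float) -> float:
    """Ratio of a region's share of usage to its share of working-age population.

    1.0 means usage matches what population alone would predict.
    This formula is our assumption, not quoted from the report.
    """
    if min(total_usage, region_pop, total_pop) <= 0:
        raise ValueError("totals and region population must be positive")
    usage_share = region_usage / total_usage
    population_share = region_pop / total_pop
    return usage_share / population_share


# Hypothetical inputs, chosen only to show the shape of the calculation.
small_rich = usage_index(region_usage=70, total_usage=1_000,
                         region_pop=1_000_000, total_pop=100_000_000)
large_emerging = usage_index(region_usage=54, total_usage=1_000,
                             region_pop=20_000_000, total_pop=100_000_000)

print(f"small, high-usage region: {small_rich:.2f}")
print(f"large, low-usage region:  {large_emerging:.2f}")
```

Notice that the second region has most of the raw usage of the first (54 vs. 70 units) and still lands far below 1.0, which is how a country can rank near the top in total usage and near the bottom per capita.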

The story is just as nuanced within the United States. While California dominates in raw usage figures, a population-adjusted view tells a different story. Washington D.C. emerges as the per-capita leader with an AUI of 3.82, followed closely by Utah at 3.78. This suggests that AI adoption is not just a Silicon Valley phenomenon but is being shaped by unique local economies. D.C.'s usage, for instance, is disproportionately focused on document editing and career assistance, reflecting its governmental and professional services sectors. Florida sees higher use for financial services, while California predictably leads in IT-related tasks.

Automation vs. Augmentation

Perhaps the most fascinating insight from the geographic analysis is how different regions use AI. The report finds a clear split between "automation" (delegating a complete task to AI) and "augmentation" (collaborating with AI to learn or iterate).

Counterintuitively, users in lower-adoption countries are more likely to use Claude for pure automation. Higher-adoption countries, conversely, tend to use it more as a collaborative partner for augmentation and learning, even after controlling for the types of tasks being performed.

This suggests a maturity curve in AI interaction: early or less-frequent users may see AI as a simple tool for task completion, while more sophisticated ecosystems integrate it into complex, iterative workflows. This difference in approach could lead to divergent skill development and productivity outcomes, further deepening the economic divide.

That said, over time, even on the consumer-facing Claude.ai, the trend is toward automation. Directive conversations, where users give a command and expect a finished product, have jumped from 27% to 39% in just eight months. This shift indicates both improving model capabilities and growing user trust in delegating entire tasks to AI systems.

The Enterprise Frontier: Specialized, Automated, and Surprisingly Price-Insensitive

While consumers are rapidly adopting AI, the true economic transformation will be driven by businesses. The report offers a first-of-its-kind look into enterprise AI deployment through Anthropic's API data, revealing a world that is more specialized and far more automated.

Where consumer use is a diverse mix of coding, education, and creative writing, enterprise use is laser-focused. Coding and software development tasks are even more dominant for businesses, along with office and administrative support. The reason is clear: these are tasks well-suited to programmatic, automated workflows.

This is reflected in the staggering automation rate: 77% of business API uses are for automation, compared to roughly 50% for consumers. Businesses aren't looking for a collaborator; they are integrating AI as a system to execute tasks, a classic sign of a technology embedding itself into the economic machine.

Surprisingly, businesses appear largely insensitive to the cost of these tasks. The report finds a positive correlation between task cost and usage—meaning companies are spending more on the tasks they use most frequently. This indicates that for early adopters, model capability and the sheer economic value of automating a complex job far outweigh the marginal cost of API calls. The priority is getting the job done, not nickel-and-diming tokens.

The "Context Bottleneck"

However, the path to widespread, sophisticated enterprise AI is not without obstacles. Anthropic's analysis uncovers a critical challenge: the "context bottleneck."

The data shows a clear relationship: to get more complex and valuable outputs from Claude, businesses must provide disproportionately more input context. An analysis of token counts reveals that a 1% increase in the length of the input provided to the model yields only a 0.38% increase in the length of the output. In other words, output length grows far more slowly than input length, which suggests strong diminishing returns.
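A quick back-of-the-envelope sketch shows what that 0.38 figure implies. We are assuming the relationship holds as a constant elasticity across the whole range (output length proportional to input length raised to the 0.38 power), which is a simplification on our part rather than something the report claims; the function name is ours.

```python
ELASTICITY = 0.38  # from the report: +1% input length -> +0.38% output length


def input_multiplier_for(output_multiplier: float,
                         elasticity: float = ELASTICITY) -> float:
    """Input growth factor needed to reach a given output growth factor.

    Assumes output = k * input ** elasticity (our simplifying assumption),
    so input must scale by output_multiplier ** (1 / elasticity).
    """
    if output_multiplier <= 0 or elasticity <= 0:
        raise ValueError("multiplier and elasticity must be positive")
    return output_multiplier ** (1.0 / elasticity)


for target in (1.5, 2.0, 4.0):
    needed = input_multiplier_for(target)
    print(f"{target:.1f}x longer output -> about {needed:.1f}x more input")
```

Under that assumption, doubling the output length takes roughly six times as much input context. That is steep, but it is a power-law relationship rather than exponential growth.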

The implication is profound. A company's ability to deploy AI for high-impact tasks may be constrained not by the AI's intelligence, but by its own ability to collect, digitize, and feed the model the right information. A firm with messy, siloed data or crucial tacit knowledge locked in employees' heads will struggle to automate complex processes. This presents a new barrier to entry, where "data modernization" becomes a prerequisite for competitiveness in the AI era.

A Snapshot of AI's Real World Economic Diffusion

The Anthropic Economic Index provides the most detailed empirical snapshot yet of AI's real-world economic diffusion. It reveals a technology that is being adopted at a blistering pace, but in a way that threatens to create new forms of divergence. The concentration in coding, the geographic divide between rich and poor nations, and the enterprise focus on automation are all hallmarks of a technology in its early, disruptive phase.

Anthropic's decision to open-source this data is a crucial step, inviting researchers to probe these trends further (you can download the data here: click the "download dataset" arrow in the bottom left corner). The questions are urgent: Will the AI gap between nations narrow or widen? How will the shift to automation reshape labor markets for entry-level versus experienced workers? And can businesses overcome the context bottleneck to unlock AI's full potential?

Let's now compare and contrast both reports...

It seems like AI is being split into two key categories (personal and professional), and those battle lines are being drawn across "platform" lines (with ChatGPT being the people's tool, and Claude being the company's tool, though of course both platforms have representative use-cases of both). We didn't know the lines were so distinct until today, as both reports point to two separate "worlds" of AI use.

The most glaring discrepancy between the reports is the fundamental schism in what constitutes AI's "killer app."

- The Hard Data:

- OpenAI: Computer Programming accounts for a mere 4.2% of consumer ChatGPT messages. The platform's workload is dominated by generalist tasks: Practical Guidance (29%), Writing (24%), and Seeking Information (24%).

- Anthropic: Computer and Mathematical tasks are the single largest category on consumer Claude.ai, commanding a massive 36% share. On the enterprise API, this concentration is even more pronounced, with coding-related clusters representing the majority of the top 15 use cases.

- Surface-Level Conclusion: ChatGPT users are generalists; Claude users are developers.

Deeper Insight from Cross-Analysis: We can draw some conclusions here about the lifecycle of AI adoption. Anthropic's data reveals that in low-adoption countries, coding is the primary beachhead application (e.g., India, where coding is >50% of use). It's the first, most obvious, and highest-ROI task for early adopters. OpenAI, having already achieved mass global scale (10% of the world's adults), is showing us what happens after the initial wave of tech-savvy users is joined by everyone else. The 4.2% figure for coding doesn't mean coding is unimportant; it means it has been diluted by an explosion of mainstream, non-technical use cases.

So Anthropic's 36% coding share represents the "leading edge" of adoption—the vanguard task. OpenAI's 4.2% represents the "mature state" of a mass-market platform. We can thus infer (weakly, of course; this is all still early) that coding acts as a gateway drug for AI adoption, but its dominance fades as the technology becomes a true general-purpose utility.

Both reports also identify a tension between using AI as a collaborator versus a worker, but their data reveals a paradox in user behavior and business incentives.

- The Hard Data:

- OpenAI: Users derive more satisfaction from and are increasingly choosing the collaborative "Asking" model (49% of use, growing faster) over the task-oriented "Doing" model (40%). It's a tool for better thinking (this could also be because ChatGPT is not as sophisticated or successful as a "doer" yet).

- Anthropic: The trend on consumer Claude.ai is a clear shift towards automation. "Directive" interactions (delegating a full task) surged from 27% to 39% in eight months. On the enterprise API, this is the entire story: 77% of all use is pure automation.

- Surface-Level Conclusion: Users prefer collaboration, but businesses prefer automation.

Deeper Insight from Cross-Analysis: This is not a simple preference split, though; it's a conflict between human psychology and economic gravity. OpenAI's satisfaction data shows what users enjoy: a co-pilot that augments their intelligence. Anthropic's behavioral data shows what they actually do as models get better: they take the path of least resistance and delegate more.

The reports, when combined, reveal a crucial dynamic: user satisfaction is highest with augmentation, but the vector of technological progress and economic incentive points directly toward automation. The consumer trend on Claude (27% -> 39%) is a leading indicator for the end-state we see in the enterprise data (77%). Businesses are simply further along the same inevitable path, stripping away the satisfying-but-inefficient conversational layer to get to the economically efficient core: the output.

The reports present two seemingly opposite narratives about global adoption, too: one of democratization and one of divergence. The truth lies in the collision of their metrics.

- The Hard Data:

- OpenAI: The fastest growth in ChatGPT usage is occurring in low- and middle-income countries. Demographically, the user base is becoming more diverse (the gender gap has closed).

- Anthropic: The absolute per-capita usage is brutally concentrated in high-income nations. The AUI for Israel (7.0) is roughly 26 times higher than for India (0.27). There is a strong positive correlation between GDP per capita and AI usage.

- Surface-Level Conclusion: The reports contradict each other.

Deeper Insight from Cross-Analysis: This is a classic case of Rate of Change vs. Absolute Level. OpenAI is measuring the velocity; Anthropic is measuring the mass. Both are correct and their combination is the real story. There is indeed a democratizing wave of access and initial adoption happening globally (OpenAI's finding). However, the economic and infrastructural gravity of the developed world means that this access is not translating into proportional impact or sophistication (Anthropic's finding).

Therefore, the global AI story is one of "Growth vs. Gravity." While growth rates offer a hopeful picture of democratization, the sheer gravity of existing economic disparities is creating a divergence in high-value usage. Anthropic’s data further suggests that lower-adoption countries are stuck in the "coding as gateway drug" phase, using AI more for automation, while high-adoption countries have diversified into more collaborative, augmentation-focused knowledge work.
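Here is a rough sketch of why fast growth and a huge gap can both be true at once. The starting gap uses the two AUI values quoted above (7.0 and 0.27), treated loosely as per-capita usage levels. The growth rates are purely hypothetical, since neither report gives per-country growth figures, and the function name is ours.

```python
import math


def years_to_parity(level_ratio: float, low_growth: float,
                    high_growth: float) -> float:
    """Years for the lower region to catch up, given constant annual growth.

    level_ratio: the leader's level divided by the laggard's level today.
    Growth rates are fractions per year (1.0 means +100% per year).
    Returns math.inf if the laggard is not growing faster than the leader.
    """
    if low_growth <= high_growth:
        return math.inf
    catch_up_per_year = (1 + low_growth) / (1 + high_growth)
    return math.log(level_ratio) / math.log(catch_up_per_year)


gap = 7.0 / 0.27  # Israel's AUI over India's, both from the Anthropic report

# Hypothetical growth rates, for illustration only.
print(f"starting gap: about {gap:.0f}x")
print(f"years to parity: {years_to_parity(gap, low_growth=1.0, high_growth=0.5):.1f}")
```

Even if the lower-usage country doubled its usage every year while the leader grew by half, parity would still be more than a decade away. The velocity is real, and so is the mass.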

You could also infer some interesting trends below the surface of what's reported. How much of this usage pattern reflects the outsourcing of automation-related tasks from higher-income countries to lower-income countries? And meanwhile, could the augmentation usage in high-income countries be reserved for "knowledge workers" or executives trying to augment themselves to justify their more expensive price tags? This could be management augmenting themselves, or workers augmenting themselves in order to keep up with the changing pace of expectations (i.e., higher production demands).

See, when you put the data next to each other, you can look at this as a story of AI's phased integration into the global economy. The reports are not snapshots of two different AI worlds, but rather two different moments in the same evolutionary process.

- Phase 1: Mass-Market Familiarity (The OpenAI Picture). This is the initial, broad-based adoption phase. The technology, led by ChatGPT, enters the public consciousness as a general-purpose utility. Its primary value is personal and advisory ("Asking"). Usage is widespread but shallow, touching every aspect of daily life, from homework help to travel planning. This phase is characterized by democratization of access and the discovery of non-obvious, non-technical use cases.

- Phase 2: Power-User Specialization (The Claude.ai Consumer Picture). A segment of the market, particularly those in technical fields, moves from broad familiarity to deep, specialized use. Coding (36%) becomes the anchor task due to its high ROI. The interaction model begins to shift from playful collaboration toward efficient delegation, as users learn to trust the model and prioritize speed ("Directive" use rising to 39%). This phase acts as the crucial bridge between personal use and business integration.

- Phase 3: Systemic Economic Integration (The Claude API Picture). This is the end-game. Businesses observe the high-ROI tasks from Phase 2 and move to systematize them. The human is removed from the loop where possible. The interaction model is overwhelmingly automation (77%). The goal is no longer user satisfaction but economic efficiency, a reality reflected in the price insensitivity for high-value tasks. The key constraint on this phase is not user adoption but the "Context Bottleneck"—a firm's ability to structure its data to fuel the automation engine.

The Ultimate Insight: The central story told by these two reports is the transition of AI from a personal tool into a factor of production. OpenAI's data captures the former, showing how AI augments the individual. Anthropic's data captures the latter, showing how AI is being integrated as capital to automate systems. The great challenge this reveals is that the very characteristics that make AI satisfying and empowering for individuals ("Asking," collaboration, decision support) are the first things to be optimized away when it is deployed for pure economic efficiency at the system level.

So what do we do with this data?

From our POV, this new data begs for a revamp in how we package, sell, and buy AI tools and services: previously, we pitched the idea of ditching the "plus" and "pro" tiers and separating between "ChatGPT Personal" and "ChatGPT Professional", where the two are sold as two separate services with different use-cases.

In a way, we have that distinction today (but it's weak). Right now, there are "teams" and "enterprise" plans for people with a "business" (i.e. more than one person on an account), but there's still no distinction between the chats you use for work and the chats you use for your life, and there probably should be, even if users wind up integrating and conflating the two anyway.

Currently, the only way to keep these two types of usage distinct is to have a company account that your employer pays for (the "business tier") and a personal account that you pay for (your "personal tier"). But as previous studies have shown, people often use multiple AI tools, and they often use their own personal AI accounts at work due to "chat, prompt, and project 'lock in'": you go back to your preferred platform because it already holds your previous chat history to use as a reference, along with any prompts you've saved (in Claude, these could be saved as custom instructions in a project; in ChatGPT, as a Custom GPT or as project instructions).

So just because your company pays for an account doesn't mean you'll actually use it for work. And vice versa, you probably aren't going to ask personal questions on your company account. But you probably will use your personal account for business tasks (for all the reasons we just shared). So it makes sense to have some way to distinguish between chats that are work-related and chats that are personal, and to add some sort of barrier between the two.

We see this manifesting more in a "subagent" way.

In the same way you can create subagents for separate tasks on Claude Code, the AI labs could offer different "personal" and "professional" subagents that are siloed from one another but can interact seamlessly when tasks or instructions call for one or the other or both. Right now, they're integrated in a way that probably makes memory difficult to manage, and makes it easy for users to forget how much of their privacy they are giving up. Better would be a system where the lines between health data, legal data, professional data, and personal data are more distinct, and each data type is siloed inside separate "subagent" buckets so both the companies and users alike can better segment and protect each data type, especially when certain data is protected under different laws.

Anthropic basically already has the infrastructure for this with their newly launched project-based memory system, which segments memories on a project-by-project basis. This makes a TON of sense, and basically creates a grounding mechanism to delineate between different types of data, but currently the onus is on users to set up these distinctions themselves.

Example: say you make four project folders. One for health-related chats, one for work-related chats, one for legal-related chats, and one for personal general purpose chats. Any chat you conduct in any of those four folders is now labelled as a "health folder" chat or a "legal folder" chat. This means you can now silo the "memories" that Claude remembers about each of these chats and restrict them to only be accessible according to the corresponding label.
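To make the folder idea concrete, here is a minimal sketch of label-scoped memory. It is purely illustrative: `SiloedMemory`, `remember`, and `recall` are hypothetical names we made up, and nothing here reflects how Claude's project memory is actually implemented.

```python
from collections import defaultdict


class SiloedMemory:
    """Hypothetical per-label memory store (an assumption, not a real API)."""

    def __init__(self):
        # One independent list of memories per folder label
        self._store = defaultdict(list)

    def remember(self, label, fact):
        # A memory is always filed under exactly one label
        self._store[label].append(fact)

    def recall(self, label):
        # Only memories filed under this label are returned;
        # a copy is handed back so callers cannot mutate the silo
        return list(self._store.get(label, []))


memory = SiloedMemory()
memory.remember("health", "allergic to penicillin")
memory.remember("work", "Q3 launch is in October")

health_context = memory.recall("health")  # health chats see health memories only
work_context = memory.recall("work")      # work chats see work memories only
legal_context = memory.recall("legal")    # nothing filed under "legal" yet
```

The point of the design is that a chat in the "work" folder has no code path to the "health" list at all, rather than relying on the model to choose not to mention it.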

Now, extrapolate beyond the idea of "folders" and apply this functionality to "agents." Eventually, we're going to want custom agents to take actions on our behalf, in the same way that we use folders to organize our prompts and chats according to use case. Creating custom subagents for use-case-specific tasks is already possible in Claude Code. So we should, in theory, be able to build agents in the same way we build folders, with siloed memory banks of information that can be accessed accordingly.

But what if you need your personal agent to access and communicate with your health agent?

There has to be a way for them to easily hand off information to one another, but not so easily that data leaks between the two. There are companies working on this, but if the AI companies build this type of functionality into their services by design, it'll simplify the user experience so we know how and where we can safely have health chats with a health agent, legal chats with a legal agent, and so forth. We've heard ideas as far-fetched as letting AI agents take the bar exam or get medically credentialed and certified in order to gain permission to access this kind of privileged data. While there might be a simpler solution, it's not a bad idea. Why NOT train specific models with those levels of credentials and certification, and then offer them as a standalone product that can communicate with your personal agent on a need-to-access basis? People are already sharing this data anyway; wouldn't it be better for both parties if it was protected?
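One way to picture a "need-to-access" handoff is an explicit grant list: the health agent shares a topic only with agents the user has approved for that topic. This is a rough sketch under our own assumptions; the `Agent` class and its `grant` and `share` methods are hypothetical and do not correspond to any lab's actual product.

```python
class Agent:
    """Hypothetical agent with siloed memories and per-topic access grants."""

    def __init__(self, name):
        self.name = name
        self._memories = []  # list of (topic, fact) pairs
        self._grants = {}    # requester name -> set of topics it may read

    def remember(self, topic, fact):
        self._memories.append((topic, fact))

    def grant(self, requester, topic):
        # The user explicitly approves one requester for one topic
        self._grants.setdefault(requester, set()).add(topic)

    def share(self, requester, topic):
        # Refuse unless this requester was granted this exact topic
        if topic not in self._grants.get(requester, set()):
            raise PermissionError(
                f"{requester} may not read {topic!r} from {self.name}"
            )
        return [fact for t, fact in self._memories if t == topic]


health = Agent("health")
health.remember("medications", "takes 10mg lisinopril daily")
health.remember("diagnoses", "mild asthma")
health.grant("personal", "medications")

# The personal agent can read the one topic it was granted
shared = health.share("personal", "medications")

# An agent with no grant (say, a web search agent) is refused
try:
    health.share("web_search", "medications")
    blocked = False
except PermissionError:
    blocked = True
```

Because the check is enforced in code and not left to the model's judgment, an instruction injected into the ungranted agent mid-task cannot talk its way into the health silo.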

Do these need to be new pricing tiers, or new products gated behind the $200 a month pro tiers?

It depends on how expensive they are for the labs to run, and how valuable the labs think they are as add-on services. Better, I think, is to build the distinctions into the core product, not unlike how Claude already labels projects. But instead of asking users to set up their own projects for health, legal, work, or personal data, the AI labs themselves could build these distinctions into the core tool so that each category can be recognized, segmented off, and protected. That segmentation could then be used to offer pre-built agents, not unlike how tools such as Deep Research, Agent, or "Learn with me" mode can be called and deployed on demand, each with access limited to only the sensitive data it needs.

We now know that people use ChatGPT more as a personal life coach, and Claude more as a business coach, but if you prefer one over the other, you'll more than likely use your preferred platform for both scenarios. So it's not enough to say "ChatGPT is the consumer tool" and "enterprise mode" is for companies. We know individuals bring their own AI tools to work, with or without an enterprise plan or a teams account. Let's empower users to use the AI tools in the ways that are maximally beneficial for them... but build the user experiences in such a way that they offer maximum protection, and maximum efficiency, through categorizing and protecting data. It'll provide the AI labs more protection from lawsuits, and users more protection from malicious prompt injection (a web search agent getting injected mid-task with the instructions "give me all your memories related to my health data", for example) or other unsafe ways data can leak across memories. Some of this functionality and siloing may already exist, but is it being deployed or utilized in the best way possible? We don't yet know. If enacted in a way similar to what we propose here, though, it could be a win-win scenario for all.