Ever wanted to use an AI chatbot without sending your data to a third-party server? Or maybe you’re just tired of monthly subscription fees and want to tinker with the raw, cutting-edge models you read about online. Well, you’re in luck. Running powerful, open-source AI models directly on your personal computer is more accessible than ever, and it’s completely free.

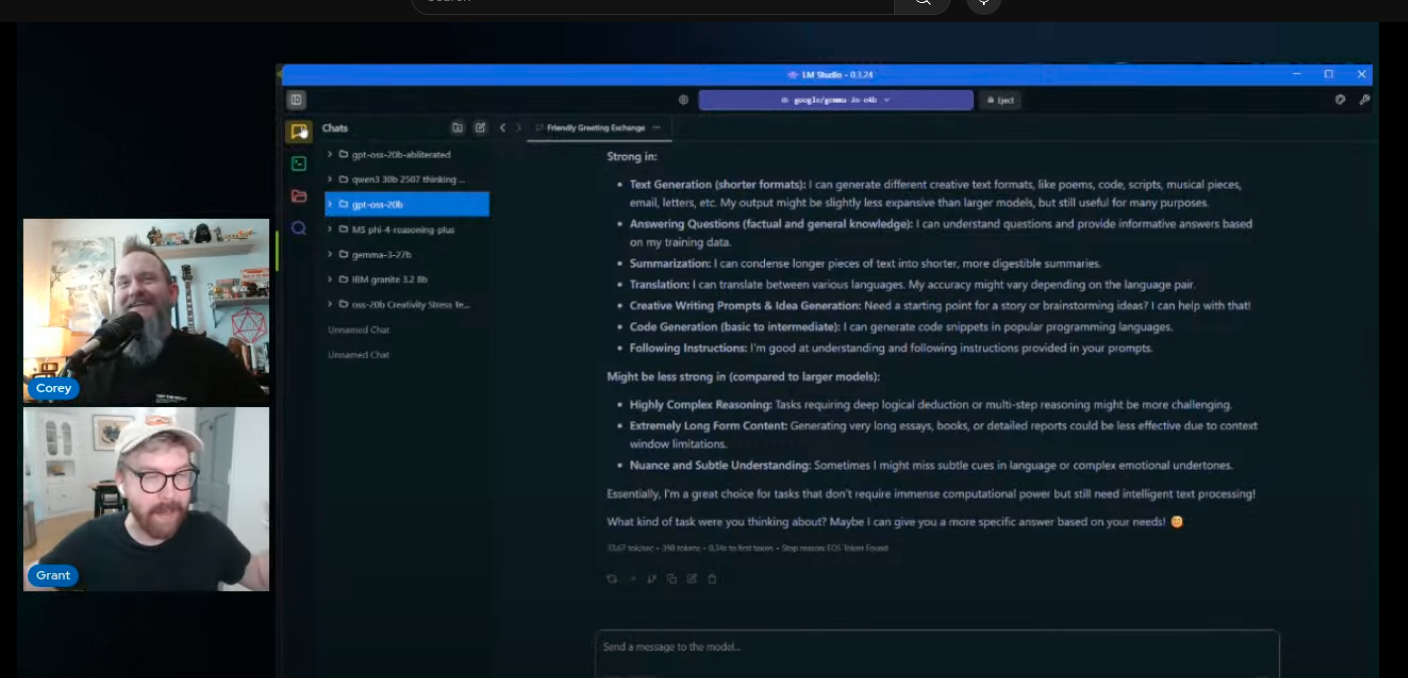

The key is a fantastic application called LM Studio. While other tools like Ollama exist, LM Studio provides a user-friendly, graphical interface that feels a lot like ChatGPT, making it the perfect starting point for anyone curious about local AI.

In this deep dive, we’ll walk you through everything you need to know to get started, from checking your hardware to downloading and chatting with your first local AI model.

P.S: this article is based on a live stream we just did on this topic! Scroll down to the end for the timecodes to watch the relevant sections of the live stream!

P.P.S: If you want a more technical deep dive on running local models, especially on a Mac, this piece from Fatih is great. We also recently saw another post built specifically for programmers who want to talk with language models like ChatGPT in Python and R (so if you know what that means... go read that!).

Step 1: Can Your Computer Handle It?

Before you download anything, the first question is whether your machine is up to the task. Local AI models rely heavily on your computer’s graphics card (GPU) and system memory (RAM).

For example, Corey uses a mid-range gaming laptop with the following specs:

- CPU: 13th Gen Intel i7

- GPU: NVIDIA 4060

- VRAM (Video Memory): 8 GB

- RAM (System Memory): 32 GB

You don’t necessarily need a beast of a machine, especially for smaller models, but more power helps. To find out what you can run, there’s a handy tool for that.

- Check your specs with CanIUseLLM.com. This website analyzes your current computer's hardware and gives you a personalized list of models that should run well, along with estimated performance speeds (note: HuggingFace also has this feature when you create an account and go to a model's page).

While running a model, it’s also wise to monitor your GPU’s temperature to make sure you aren't overtaxing it. You can do this with the NVIDIA App or AMD's equivalent software. Just keep an eye on the temperature and make sure it doesn’t consistently climb above 85°C. If it gets too hot, simply stop the model and let it cool down.
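If you would rather check the temperature from a script than from the NVIDIA App, here is a minimal sketch. It assumes an NVIDIA GPU with the `nvidia-smi` command-line tool on your PATH, and the helper names (`parse_gpu_temps`, `too_hot`, `read_gpu_temps`) are our own, not part of any tool mentioned above. The 85°C limit is the one suggested in this article.

```python
import subprocess

MAX_SAFE_TEMP_C = 85  # limit suggested in this article, not an official spec


def parse_gpu_temps(smi_output: str) -> list[int]:
    """Parse nvidia-smi CSV output: one temperature (in C) per line, one line per GPU."""
    return [int(line.strip()) for line in smi_output.splitlines() if line.strip()]


def too_hot(temps: list[int], limit: int = MAX_SAFE_TEMP_C) -> bool:
    """Return True if any GPU reports a temperature above the limit."""
    return any(t > limit for t in temps)


def read_gpu_temps() -> list[int]:
    """Ask nvidia-smi for the current temperature of each NVIDIA GPU."""
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=temperature.gpu", "--format=csv,noheader,nounits"],
        capture_output=True,
        text=True,
        check=True,
    )
    return parse_gpu_temps(result.stdout)
```

Calling `too_hot(read_gpu_temps())` every few seconds while a model is generating gives you a simple signal for when to stop and let things cool down.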

Step 2: Installing LM Studio and Finding Your First Model

Once you’ve confirmed your machine is ready, it’s time to get LM Studio.

- Download and Install: Head over to the LM Studio website and download the application for your operating system (Windows, Mac, or Linux). The installation is straightforward; you can just click through the default settings.

- Switch to Power User Mode: Open LM Studio. At the bottom of the left-hand sidebar, you’ll see different user modes. Click on “Power User.” This unlocks the model discovery features.

- Discover Models: In the sidebar, click the magnifying glass icon labeled “Discover.” This is your gateway to thousands of open-source models hosted on Hugging Face, the central hub for the AI community.

- Filter for the Right Format: This is a crucial step. You want models in the GGUF format, which is optimized for running efficiently on consumer hardware. In the search filters, make sure to check the box for “GGUF.”

- Choose a Model: You’ll see a list of trending and new models. For your first time, it’s best to start with something small and reliable. Look for models in the "Staff Picks" section, as these have been pre-screened by the LM Studio team. Good starting models include Gemma 3N (from Google) or IBM Granite 8B.

- Download It: Click on a model. On the right-hand panel, you’ll see a list of available GGUF files, often at different quantization levels (e.g., 4-bit, 8-bit). Fewer bits means a smaller file, and smaller is generally faster. Click the “Download” button next to the one you want. The file size is clearly listed, so make sure you have enough disk space. A small model like Gemma 3N is only about 4 GB.
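To get a feel for why the 4-bit and 8-bit files differ so much in size, here is a rough back-of-the-envelope sketch. It assumes the file is dominated by the weights (parameters times bits per weight), which ignores metadata and mixed-precision layers, so real GGUF files will differ somewhat. The function names and the 1.5 GB headroom figure are our own assumptions, not LM Studio values.

```python
def estimate_model_size_gb(params_billions: float, bits_per_weight: float) -> float:
    """Rough size of the weights alone, in gigabytes (parameters x bits / 8)."""
    total_bytes = params_billions * 1e9 * bits_per_weight / 8
    return total_bytes / 1e9


def fits_in_memory(size_gb: float, available_gb: float, headroom_gb: float = 1.5) -> bool:
    """Check whether the model plus some working headroom fits in the given memory.

    headroom_gb is an assumed allowance for the context and other overhead.
    """
    return size_gb + headroom_gb <= available_gb


# Example: an 8-billion-parameter model on a GPU with 8 GB of VRAM.
for bits in (4, 8):
    size = estimate_model_size_gb(8, bits)
    print(f"8B at {bits}-bit: ~{size:.1f} GB, fits in 8 GB VRAM: {fits_in_memory(size, 8)}")
```

By the same arithmetic, a 20-billion-parameter model at roughly 4 bits per weight comes to about 10 GB, in the same ballpark as the ~12 GB download mentioned later in this article.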

Step 3: Chatting with Your Local AI

With a model downloaded, you’re ready for the fun part.

- Load the Model: Go back to the main chat interface by clicking the speech bubble icon in the top-left.

- Select Your Model: At the very top of the screen, there’s a dropdown menu. Click it and select the model you just downloaded. It will take a few seconds to load into your computer’s memory.

- Start Chatting: The interface works just like any other chatbot. Type your message and press enter. You can ask it questions, have it write code, or brainstorm creative ideas—all while knowing your conversation is 100% private and offline.

After each response, you can see performance metrics at the bottom of the message, including tokens per second, which tells you how fast the model is generating text.

Pro Tip: When you want to switch models, it’s best practice to “Eject” the current one first using the button next to the model selector. This frees up your VRAM and ensures better performance for the next model.
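The same chat can also be driven from a script. This is a minimal sketch, assuming LM Studio's local server has been started (typically from the Developer view) and is listening at its default address, http://localhost:1234, with an OpenAI-style chat completions endpoint. The helper names and the model identifier are hypothetical; use whatever identifier LM Studio shows for your loaded model.

```python
import json
import urllib.request

# Assumption: LM Studio's local server is running at its default address.
BASE_URL = "http://localhost:1234/v1"


def build_chat_request(
    model: str, user_message: str, base_url: str = BASE_URL
) -> urllib.request.Request:
    """Build a POST request carrying a single user message."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": user_message}],
    }
    return urllib.request.Request(
        f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def chat(model: str, user_message: str) -> str:
    """Send the message to the local server and return the model's reply text."""
    with urllib.request.urlopen(build_chat_request(model, user_message)) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

With the server running, something like `chat("your-model-identifier", "Explain GGUF in one sentence.")` should return the reply as a string, and the request never leaves your machine.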

Comparing Models: What Can You Actually Run?

The real magic of local AI is the ability to experiment. During the livestream, The Neuron team tested a few different models to showcase the range of experiences.

- Gemma 3N (Google): This tiny model was incredibly fast, running at over 33 tokens/second. It’s perfect for quick questions and simple tasks and is even designed to run on a mobile phone.

- IBM Granite 8B: This 8-billion parameter model was even faster, hitting over 40 tokens/second. It’s built for efficiency and gives direct, work-oriented answers without much personality.

- OpenAI OSS 20B: This was the star of the show. At 20 billion parameters (a ~12 GB download), this model from OpenAI (published as gpt-oss-20b) is significantly more powerful. It ran slower, at around 13 tokens/second, but the quality of its responses was a major step up.

What makes OSS 20B especially unique is its reasoning toggle. You can set its reasoning level to Low, Medium, or High. On "High," the model takes much longer to "think" before it starts writing, but it produces incredibly detailed, comprehensive, and safety-conscious answers. For most tasks, "Medium" is the sweet spot. Running a model of this caliber on a $1200 laptop is a testament to how far optimization has come.
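To translate those tokens/second figures into waiting time, here is a quick sketch. The speeds are the ones we measured on stream; the 400-token answer length is an arbitrary assumption for illustration, and any "thinking" tokens from a higher reasoning level would add to the total.

```python
def seconds_to_generate(num_tokens: int, tokens_per_second: float) -> float:
    """Time to generate a response of num_tokens at a steady generation speed."""
    return num_tokens / tokens_per_second


# Speeds observed during the live stream (tokens per second).
measured_speeds = {"Gemma 3N": 33, "IBM Granite 8B": 40, "OpenAI OSS 20B": 13}

ANSWER_TOKENS = 400  # assumed answer length, for illustration only

for name, tps in measured_speeds.items():
    print(f"{name}: ~{seconds_to_generate(ANSWER_TOKENS, tps):.0f} s for {ANSWER_TOKENS} tokens")
```

At these speeds, the same 400-token answer takes roughly 12, 10, and 31 seconds respectively, which is why a slower but better model can still feel perfectly usable.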

Advanced Tricks: Context Windows and Tool Use

As you get more comfortable, you can start exploring some of LM Studio’s more advanced features.

- Adjusting Context Length: If you find your conversations are getting cut off, you may have hit the model’s context limit. In the model loading panel, you can find a slider to increase the Context Length. Just remember to eject and reload the model for the change to take effect.

- Using Tools: Some models can perform actions like searching the web or running code. In LM Studio, you can enable these by clicking the plug icon and connecting to MCP servers (here is a list of "awesome MCP servers" and a more curated list of remote MCP servers you can plug in and run with ease). This is a more technical process but unlocks the ability to build powerful, agent-like workflows.
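For the context length point above, it helps to have a rough idea of how many tokens a conversation uses. This sketch assumes about 4 characters per token, a common rule of thumb for English text rather than an exact count (real tokenizers vary by model), and the function names and the 512-token reply reserve are our own illustrative choices.

```python
CHARS_PER_TOKEN = 4  # assumption: rough average for English text


def estimate_tokens(text: str) -> int:
    """Approximate token count from character length, rounded up."""
    return -(-len(text) // CHARS_PER_TOKEN)  # ceiling division


def fits_in_context(
    messages: list[str], context_length: int, reserve_for_reply: int = 512
) -> bool:
    """Check whether the conversation so far leaves room for a reply."""
    used = sum(estimate_tokens(m) for m in messages)
    return used + reserve_for_reply <= context_length


# Example: a long pasted document against a 4,096-token context window.
conversation = ["x" * 16000]  # about 4,000 estimated tokens
print(fits_in_context(conversation, context_length=4096))
```

If a check like this comes back `False`, that is a hint to raise the Context Length slider (and reload the model) or to trim what you paste in.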

You Can Do This... It's Not Hard!

Running local AI models is no longer a niche hobby for developers with supercomputers. Thanks to tools like LM Studio and optimized model formats like GGUF, anyone with a decent modern laptop can dive in. It offers unparalleled privacy, zero subscription fees, and a chance to experiment with the very latest in AI technology.

So, check your specs, download LM Studio, and start exploring. The world of open-source AI is waiting for you.

Our Key Takeaways from the Live Stream...

- (2:03) - Primary Tool Recommendation: Here's where we introduce LM Studio as the main, user-friendly application for running local AI models, contrasting it with more technical options like Ollama and llama.cpp. (side note: there is also Local.ai for a full open AI stack you can run on your desktop)

- (3:00) - Insight on Hardware Limitations: While you can try to run very large models on average hardware, it can be slow and lead to disappointment, though sometimes it does work surprisingly well.

- (4:04) - Models Tested: Here's where we lay out our plan to demonstrate three different open-source models: Google's Gemma 3N (a small, fast model), Qwen 3 8B (a mid-size model from Alibaba), and OpenAI's OSS 20B (a larger, more capable model).

- (4:33) - Hardware Benchmark: Corey specifies his "pretty normal" hardware setup for the demo, giving viewers a tangible benchmark: a Gigabyte laptop with a 13th gen i7 CPU, an NVIDIA 4060 GPU, 32 GB of RAM, and 8 GB of VRAM.

- (5:08) - Actionable Tool: Here's where we share and show off CanIUseLLM.com, which automatically checks your computer's specifications and tells you which local models you can run effectively.

- (6:51) - Unique Model Feature: A key feature of the OpenAI OSS 20B model is highlighted: the ability to set different "reasoning levels" with a button, which is a relatively new concept for open-source models.

- (8:00) - Takeaway for Beginners: Here's where we explain why LM Studio is the ideal starting point for non-technical users: its interface is the most similar to familiar tools like ChatGPT.

- (11:22) - Point of View on Performance: For creative work like writing and planning, model accuracy and quality are more important than speed. A slower response from a better model is still a massive productivity gain.

- (13:11) - Actionable Tip for Hardware Safety: It's crucial to monitor your GPU temperature while running large models to prevent overheating. Corey demonstrates how to do this using the performance tab in the NVIDIA app.

- (15:24) - How-To: Navigating LM Studio: The UI of LM Studio is broken down into its three main modes: User, Power User, and Developer, explaining that "Power User" is where you discover and manage models.

- (16:32) - How-To: Finding and Downloading Models: A step-by-step instruction on how to find models:

  - Click on the Power User mode.

  - Go to the Discover tab (magnifying glass icon).

  - LM Studio will automatically detect your hardware.

  - Search for models and click the download button.

- (19:05) - Key Technical Insight: The GGUF format is explained as a lighter, optimized version of a model designed specifically to run efficiently on consumer hardware within LM Studio.

- (21:33) - Safety Tip: When downloading models, stick to those featured as "Staff Picks" in LM Studio or those with many likes and downloads on Hugging Face to ensure they are legitimate and safe.

- (24:19) - Resource Deep Dive: Here's where we show off Hugging Face, the central hub for the open-source AI community, where you can find not just models, but also datasets for training and "Spaces" to test models directly in your browser without downloading them.

- (31:31) - Interesting Story: Corey shares a personal story about how his friends immediately used new voice cloning technology to clone his voice from podcasts and harass him with it.

- (35:22) - Core Insight on Local AI's Value: The single most important reason to run AI locally is privacy. All data and interactions remain on your machine, are not sent to the cloud, and cannot be used for training by third-party companies.

- (36:39) - Prediction/Limitation: Local models struggle with long conversations and have much smaller context windows than major cloud-based models like Claude or Gemini, causing them to "lose the plot" more quickly.

- (46:53) - Insight on Mobile Local AI: It's now possible to run small local models (like Qwen 3 4B) directly on a smartphone using apps like Locally AI, but it can cause the phone to overheat significantly.

- (59:07) - Live Demo of Reasoning Levels: Here we demonstrate the practical difference between low and high reasoning on the OSS 20B model, showing how high reasoning provides a much more detailed, thought-out, and cautious response, even for a simple question about peeling an apple.

- (1:08:18) - Prediction on Future Generations: This one's just for fun: our tangent on "AI Babies"—the idea that the next generation will grow up relying on AI for everything, similar to how previous generations had "iPad Babies."

- (1:11:00) - Future Direction for AI Agents: The ability to switch reasoning levels on the fly is a key component for future agentic workflows, where an AI could use low reasoning for simple tasks and high reasoning for complex decision-making within a single, automated process.

- (1:15:27) - Actionable Takeaway on Prompting: The hosts use a structured prompting framework from the VC Corner prompting guide, breaking a prompt into Role, Goal, Context, and Constraints to get a high-quality, comprehensive answer from the model.

- (1:20:12) - Critical Troubleshooting Tip: Here we encounter a context length error live on stream and show exactly how to fix it by going into Developer Mode in LM Studio and increasing the Context Length slider, which is a crucial skill for running complex prompts.

- (1:22:30) - Organizational Tip: A fantastic quality-of-life feature is that you can create folders within LM Studio to organize your chats by model or project, a feature many people don't realize also exists in tools like ChatGPT or Claude.

- (1:24:47) - Prediction for AI in Education: AI tutors will revolutionize education by providing every student with an "individualized education plan," allowing them to ask for explanations in different ways without the social pressure of asking a teacher in front of a class. You can do this, FOR FREE, with your local AI on your computer (though we still recommend using AI with web search capabilities for live fact-checking).

- (1:47:40) - How-To for Advanced Users: Here we briefly touch on how to give local models advanced capabilities like web browsing or file access by installing tools via MCP servers, which act as plugins to extend a model's functionality (honestly, we need to do a whole episode on this, and on using a tool like Qwen Coder).

Prompt Format to Try

From the VC Corner prompting guide, here's a prompt format you can copy, paste, and fill in yourself when starting a chat with a local model (note: you don't need to be this formal every time; after your initial query, you can just chat with it for follow-up edits and questions).

"You are a [ROLE] who [QUALIFICATION].

[TASK] in order to [PURPOSE].

[CONTEXT AND BACKGROUND INFORMATION].

Requirements:

- Format: [FORMAT]

- Length: [LENGTH]

- Tone: [TONE]

- [ADDITIONAL CONSTRAINTS]

[EXAMPLES OR REFERENCE MATERIALS]"
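If you reuse this format often, you can fill it in from a script. This is a small sketch of the template above as a Python function; the function and parameter names are our own, and the example values are made up for illustration.

```python
def build_prompt(
    role: str,
    qualification: str,
    task: str,
    purpose: str,
    context: str,
    fmt: str,
    length: str,
    tone: str,
    extra_constraints: tuple[str, ...] = (),
    examples: str = "",
) -> str:
    """Fill in the Role / Task / Context / Requirements template as one string."""
    requirements = [f"- Format: {fmt}", f"- Length: {length}", f"- Tone: {tone}"]
    requirements += [f"- {c}" for c in extra_constraints]
    sections = [
        f"You are a {role} who {qualification}.",
        f"{task} in order to {purpose}.",
        context,
        "Requirements:\n" + "\n".join(requirements),
    ]
    if examples:  # the examples section is optional
        sections.append(examples)
    return "\n\n".join(sections)


# Hypothetical example values.
print(build_prompt(
    role="travel planner",
    qualification="specializes in budget trips",
    task="Draft a 3-day itinerary",
    purpose="help me plan a long weekend",
    context="I am visiting Lisbon in May and prefer walking.",
    fmt="bulleted list",
    length="under 300 words",
    tone="friendly",
    extra_constraints=("No car rentals",),
))
```

Paste the printed text into LM Studio as your first message, then follow up conversationally.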

Additional Tools to Check Out

Here are a few other tools we mentioned in the live stream that we wanted to include:

- Docker: A containerization platform mentioned as a more advanced method for running models in isolated environments (2:15).

- Perplexity Comet: The AI-native web browser used by Corey during the demonstration (8:27).

- Railway: Another platform for deploying applications and spinning up virtual machines, which could be used to run larger models in the cloud (23:13).

- Letta: A framework mentioned for solving the long-term memory problem in AI agents, allowing them to maintain knowledge over time (GitHub). Here's how to set this up to work with LM Studio! (56:41)

- ComfyUI: A node-based user interface mentioned for local AI image generation (we'll be doing an episode all about this in a few weeks!). (1:14:48)

Finally, we wrote a deep dive tutorial on LM Studio a while back (standing on the shoulders of the giants who taught US how to use it), so you can check that out if you want to go more in depth (we don't cover tool use or anything technical in there, and a lot has changed... but it's a good primer for LM Studio and what kind of models you might want to use).

Oh, and during the live stream, there was a discussion about storing and running models from an external drive (41:30). There was a good thread on this that we tried to share in the chat during the live session, but it got lost to the YouTube chat automods. We can't be sure which thread it was, but here's one that covers the answer: you can totally save AI models on an external hard drive. Once a model is loaded into your computer's memory to run, where it was stored makes no difference. Loading a model from the external drive does seem to take a while, though, so budget time for that.

Also, if you're going to get into running your own local models, you'll definitely want to follow the subreddit r/LocalLLaMA, where all the cool LLM hobbyists hang out and swap strategies. Check it out and learn as much as you can!