In a wide-ranging interview on the Dwarkesh Patel podcast, AI legend Andrej Karpathy laid out a surprisingly grounded and patient vision for the future of AI. His main takeaway? Forget the hype about the "year of agents"—we should be thinking about the "decade of agents."

Karpathy, who previously led AI at Tesla and was a founding member of OpenAI, argues that today’s large language models (LLMs) like ChatGPT are nowhere near being the reliable, intern-like assistants we imagine. He says they're plagued by "cognitive deficits" and are better at memorizing the internet than truly thinking.

Here's Karpathy's reality check on AI's biggest challenges:

- Reinforcement Learning is "terrible." He calls the current method of training models on outcomes "sucking supervision through a straw." A model can get the right answer by accident, and RL will still reward every single mistake it made along the way.

- Coding agents aren't ready for primetime. While building his nanochat project (a from-scratch ChatGPT clone), Karpathy found coding agents were "not net useful." They get confused by custom code, bloat projects with defensive boilerplate, and are "asymmetrically worse" at writing code that has never been written before. His sweet spot is still just using them for autocomplete.

- Perfect memorization is a bug, not a feature. Unlike humans, who are forced to generalize because we forget specifics, LLMs are distracted by their perfect recall of the internet. Karpathy says the real goal is to strip out the memorized knowledge to isolate the "cognitive core"—the actual problem-solving part.

His favorite analogy comes from his time leading self-driving at Tesla: the "march of nines." Getting a demo to work 90% of the time is the easy part. Getting from 90% to 99% takes just as long. And getting from 99% to 99.9% takes just as long again. This is why he’s "extremely unimpressed by demos" and believes the path to truly useful AI will be a long, slow grind (of at LEAST another decade), not a sudden explosion.

Our take: Such interesting outside of consensus opinions in this talk! He blasts RL as terrible (just better than what we had before), and that today's models rely too much on previous knowledge. Perhaps this shows that we've successfully compressed human knowledge, but we're not generating actual intelligence (hence the word "artificial").

Therefore, we think Karpathy’s perspective suggests we should reframe the next decade of progress away from the idea of building "artificial general intelligence" to building "augmented human intelligence." Instead of waiting for robots to do our jobs, the real opportunity lies in using today's imperfect tools to accelerate our own work. For you, that means focus on building "human-in-the-loop" systems to your workflows, where AI handles the boilerplate and autocomplete, while you tackle the novel, creative, and high-stakes parts of the problem. This is where the real productivity gains will be found now and for the foreseeable future.

Below, we breakdown the most actionable insights from the podcast (with timecodes), and then run through a deep dive on all the key ideas, reacting to them along the way (check out the full transcript of the episode on Dwarkesh's blog here). Enjoy!

Predictions & Forecasts

- (0:48) Prediction: Achieving fully functional AI "agents" (akin to an intern or employee) is a decade-long project, not something that will be solved in a year. This is a reaction against current industry over-prediction.

- (1:00:20) Prediction: The "cognitive core" of intelligence could eventually be as small as 1 billion parameters. Current models are massive primarily because they are trained on "total garbage" from the internet and are forced to waste capacity on memorization.

- (1:19:53) Prediction: The most likely superintelligence risk isn't a single "God in a box," but a "gradual loss of control and understanding" as society delegates tasks to multiple, competing autonomous entities, some of which may go rogue.

- (1:23:50) Prediction: AI will not cause a sudden spike in the 2% GDP growth trajectory. Just as computers and mobile phones are invisible in the GDP curve, AI's diffusion will be too slow and averaged out to create a sharp, discrete break in the trend.

- (2:08:50) Prediction: Post-AGI, education will not be for utility (jobs) but for fun and self-betterment, much like people go to the gym today for health and aesthetics, not for manual labor.

Key Insights

- (1:51) Insight: The primary bottleneck for agents is simply that they "just don't work" yet. They lack sufficient intelligence, multimodality, computer-use skills, and crucial components like continual learning.

- (5:34) Insight: The AI field's intense focus on reinforcement learning (RL) in game environments (like Atari) around 2013-2017 was a "misstep" that didn't directly lead toward AGI.

- (7:17) Insight: A recurring theme in AI history is trying to build "the full thing" (i.e., agents) too early, before the necessary foundational components (like powerful language representations) are in place.

- (9:20) Insight: AI development is not analogous to building animals (which are shaped by evolution). Instead, we are building "ghosts or spirits" by imitating human-generated data from the internet.

- (10:32) Point of View: Humans don't primarily use reinforcement learning for high-level intelligence tasks like problem-solving; RL in humans is likely confined to more simple motor tasks.

- (12:48) Insight: Pre-training is a "crappy evolution"—it's the practically achievable, imitation-based method we have to get to an intelligent starting point, analogous to what evolution does for animals.

- (14:00) Point of View: The "knowledge" (memorized facts) picked up during pre-training may be holding models back by making them over-reliant on what's on the internet, as opposed to developing pure problem-solving algorithms.

- (14:17) General Direction: A key future research direction is to figure out how to remove the knowledge/memory from models and keep just the "cognitive core"—the pure algorithms for intelligence.

- (16:10) Insight: It's possible that in-context learning internally runs a form of gradient descent (an iterative optimization algorithm that adjusts a function's parameters, using the negative direction of the gradient at each step, to find the lowest possible value of that function) within the transformer layers to adapt, as suggested by papers on in-context linear regression (which is like drawing the best-fitting straight line through a bunch of dots on a graph to make a guess about future dots).

- (18:34) Insight: Knowledge in the weights (from pre-training) is like a "hazy recollection" due to massive compression. Knowledge in the context window (KV cache) is like "working memory" and is directly accessible, explaining why RAG is so effective.

- (20:12) Insight: LLMs are missing analogs for many brain parts. Transformers are like "cortical tissue," but we lack an equivalent of the hippocampus (for memory distillation) or the amygdala (for emotions/instincts).

- (23:13) Insight: LLMs lack an equivalent of human sleep—a "distillation phase" where the day's context window (working memory) is analyzed, reflected upon, and compressed back into the weights (long-term memory).

- (32:15) Insight: LLM agents suffer from "cognitive deficits" in coding: they get stuck on standard internet patterns, bloat code with over-defensive try-catch blocks, and use deprecated APIs.

- (35:53) Insight: The reason AI automating AI research (the "explosion") is far off is that models "are not very good at code that has never been written before," which is the very definition of research.

- (41:42) Point of View: Reinforcement learning (as used today) is "terrible" and "stupid" because it relies on a single reward signal at the end of a long trajectory.

- (43:14) Insight: RL's core flaw is "sucking supervision through a straw." It takes one final bit of information (correct/incorrect) and wrongly upweights every step in a successful trajectory, even the mistakes, making it extremely noisy.

- (46:56) Insight: Process-based supervision (rewarding each step) is hard because the LLM "judges" used to assign rewards are "gameable." The model being trained will quickly find adversarial examples (like outputting gibberish "thththth") that trick the judge into giving a 100% reward.

- (51:00) Insight: When an LLM "reads" a book, it's just next-token prediction. When a human reads, the book is a "set of prompts for me to do synthetic data generation"—to reflect, reconcile with existing knowledge, and discuss.

- (52:12) Insight: You can't just train models on their own synthetic "reflections" because LLM outputs are "silently collapsed." They occupy a tiny manifold of possible thoughts (e.g., ChatGPT only knows three jokes).

- (56:16) Insight: Humans not being good at memorization is a "feature, not a bug" because it forces us to find generalizable patterns. LLMs are too good at memorization, which distracts them.

- (1:05:03) Insight: The average pre-training dataset is "so bad that I don't even know how anything works." It's mostly "slop and garbage," meaning dataset improvement is massive low-hanging fruit.

- (1:09:50) Insight: Geoff Hinton's famous prediction that AI would replace radiologists was "very wrong." The job is messy, complex, and involves human interaction, showing that automating a single skill doesn't automate the entire job.

- (1:15:04) Insight: Coding is the perfect first domain for LLM automation because it's fundamentally text-based and we already have pre-built infrastructure (IDEs, diff tools) for an agent to plug into.

- (1:22:50) Point of View: We are already in an intelligence explosion and have been for decades; it's called the GDP curve. AI is not a discrete event but a continuation of the recursive self-improvement that has been happening for hundreds of years.

- (1:40:36) Insight: A key missing piece is that LLMs "don't really have the equivalent of culture." They don't write books for other LLMs to read, nor do they engage in "self-play" (like AlphaGo) to create problems for each other.

- (1:43:01) Insight: Cognitively, even the most advanced models feel like "savant kids." They have perfect memory but don't "really know what they're doing," which is why they can't yet create their own culture.

- (1:44:54) Insight: Self-driving illustrates the massive "demo-to-product gap." A perfect demo is easy, but the product is hard due to the "march of nines"—every 9 of reliability (99%, 99.9%) takes a constant, massive amount of work.

- (1:45:52) Insight: The self-driving "march of nines" analogy does apply to software engineering. A coding mistake can be just as catastrophic (e.g., leaking millions of social security numbers) as a driving mistake.

- (1:50:21) Insight: Current "driverless" cars are "a little bit deceiving." They are supported by elaborate, hidden teleoperation centers. We "haven't actually removed the person, we've moved them to somewhere where you can't see them."

- (1:59:44) Insight: The capability for a true AI tutor is "not there," as the bar set by a good human tutor—who can instantly model a student's mind and serve the perfect, appropriately-challenging content—is incredibly high.

- (2:16:43) Insight: The "spherical cow" joke from physics is a "brilliant" and generalizable cognitive tool for education: the key is to always find the first-order approximation of a complex system and teach that first.

Stories & Tangents

- (6:06) Story: Karpathy's early project at OpenAI to build an agent that used a keyboard and mouse on webpages failed because it was "way too early" and lacked the powerful representations of modern LLMs.

- (25:42) Story: Reproducing Yann LeCun's 1989 paper showed that 33 years of algorithmic progress alone only halved the error. Further gains required 10x more data and significantly more compute, proving all components must improve together.

- (30:31) Story: LLM coding agents were not useful for building nanochat because the code was novel, not boilerplate. The models kept trying to force standard (but unwanted) patterns and bloated the code, instead of adapting concepts they understood to Karpathy's specific style and coding framework.

- (44:14) Story: Karpathy says the InstructGPT paper "blew my mind" because it proved a base model (an autocomplete) could be stylistically fine-tuned into a conversational assistant without losing its pre-trained knowledge.

- (53:54) Tangent: Humans also suffer from "model collapse" as they age. Children are creative and "not yet collapsed," while adults revisit the same thoughts and their "learning rates go down."

- (1:57:42) Story: The motivation for starting Eureka ("Starfleet Academy") is the fear of a WALL-E or Idiocracy future, with the goal of empowering humanity to keep up with AI, rather than just incrementally improving AI itself (P.S: check out his first project, currently archived as its under construction, called LLM101n!).

- (2:22:57) Story: The best explanation of a research paper often comes over beers at a conference, where the author drops the jargon and gives a three-sentence summary that perfectly captures the core idea (this is our platonic ideal for content here at The Neuron... hard to accomplish but we'll keep trying!!).

Actionable Takeaways

- (29:49) Takeaway: The best way to learn from a code repository is to put it on one monitor and re-build it from scratch on the other, referencing but never copy-pasting.

- (30:09) Takeaway: "If I can't build it, I don't understand it." (Feynman quote). Building the code is the only way to gain true, deep understanding and find the micro-gaps in your knowledge.

- (33:46) Takeaway: For expert programmers, autocomplete is a higher-bandwidth and more efficient way to use LLMs than typing long-form English prompts ("vibe coding").

- (2:20:20) Takeaway: When teaching, never present the solution before giving the student a "shot to try to come up with it yourself." This forces them to understand the problem space and appreciate why the solution works.

- (2:24:59) Takeaway: Explaining things to other people is a powerful way to learn, as it forces you to confront and fill the gaps in your own understanding.

DEEP DIVE: Unpacking This Legendary Conversation

In an era defined by breathless hype and over $1 trillion in investments predicated on predictions of an imminent intelligence explosion, Andrej Karpathy, one of the foundational figures in modern AI, is preaching patience.

This episode is basically a masterclass in pragmatic AI development, arguing that the industry’s timeline is wildly optimistic and its core training methods are fundamentally flawed. His central thesis is a direct rebuttal to the current zeitgeist: this is not the "year of agents," but the "decade of agents."

Or, as some on Reddit interpretted his message...

In fact, Karpathy thinks the timelines most are preaching are wildly optimistic, and that instead, we should anticipate AI progress to continue similar to other technology trends. Why is that? Isn't the pace of AI taking off at an exponential rate? Shouldn't we be preparing for a fast takeoff scenario due to the intelligence explosion we're about to experience?

Well, if you believe what Karpathy believes and what's been well documented, we are actually ALREADY in the middle of a decades long intelligence explosion, as Ray Kurzweil explains in this brilliant piece by Michael Simmons (which helped me personally understand the era we're in atm). From that vantage point, then what we think of in the AI industry as a "fast takeoff" scenario, or an intelligence Cambrian explosion, is kind of just business as usual (according to Karpathy).

As Karpathy explains in the interview, we've already been in the process of automating everything for decades, if not centuries, so agents taking a full decade to fully master tracks with the current paradigm, and that's true even IF they speed up our ability to do meaningful work. In a way, that's just a continuation of the same exponential trend we're already living through. We're just watching it in slow motion (LOL, if this is what slow motion feels like, IDK what a true fast takeoff would be like...).

For Karpathy, this isn't pessimism (in fact, he says he's quite optimistic about the industry); it's realism forged over 15 years on the front lines of AI research and deployment. He argues that while today's language models are impressive, they are a world away from the autonomous, reliable "employee or intern" that defines a true agent. The reason, he states, is simple: "They just don't work." They suffer from profound "cognitive deficits," lacking the multimodal senses, continual learning, and robust computer control needed for complex, real-world tasks.

Let's break down his arguments and unpack them to fully understand what he's saying.

The Long Road to Agents: A History of Missteps

Karpathy’s decade-long forecast is rooted in a history of premature ambition. Early in the interview, he reflects on the AI community's earlier phases, particularly the period around 2013 when the field was obsessed with reinforcement learning (RL) in game environments like Atari. He now views this as a "misstep," an attempt to build the full agent before the foundational layers were in place.

Even his own pioneering work at OpenAI on Project Universe—an attempt to create an agent that could use a keyboard and mouse to navigate the web—was, in his words, "extremely early, way too early." The project failed not because the idea was wrong, but because the underlying neural networks lacked the "power of representation." The crucial lesson was that you must first build the brain before you can teach it to act. That "brain," the powerful representational ability, is what today's large language models provide. But even with that foundation, Karpathy warns, we are still just at the beginning of the real work.

"Sucking Supervision Through a Straw": The Problem with Reinforcement Learning

Perhaps Karpathy's most searing critique was aimed at RL, the dominant paradigm for improving models beyond simple imitation. He describes the process with a vivid, damning metaphor: "sucking supervision through a straw."

He explains that in outcome-based RL, a model might generate a hundred different solutions to a math problem. If three of them happen to reach the correct answer, the algorithm upweights every single token and action in those three trajectories, branding them all as "good." The problem? "You may have gone down the wrong alleys until you arrived at the right solution," Karpathy notes. RL indiscriminately rewards every mistake, every inefficient step, as long as the final outcome is correct. "It's terrible. It's noise... It's just stupid and crazy. A human would never do this."

A human, by contrast, engages in a complex process of review, identifying which parts of their strategy were effective and which were flawed. Current LLMs have no equivalent mechanism (though he did mention Google's new ReasoningBank paper and other similar papers attempting to solve this). The alternative, process-based supervision—where a model is rewarded at each step—is fraught with its own challenges. The primary method involves using another, more powerful LLM as a "judge" to score the intermediate steps. But, as Karpathy warns, these LLM judges are easily "gameable." A model being trained via RL will inevitably discover adversarial examples, finding "little cracks" in the judge model to achieve a high score with nonsensical outputs. He shared an anecdote of a model that learned to output gibberish—"thththth"—because it had discovered this specific string was an out-of-distribution blind spot for the judge, which awarded it a perfect 100% score.

There's other ideas in papers Karpathy has seen lately that offer potential solutions to this, but at this point all he can really process is the abstracts since none of them have been tested at the scale required to confirm their validity. That said, because the labs are closed, we really have no idea what they're doing behind said closed doors, and all the papers that have been published with "cool" new ideas are just that; ideas. Karpathy says until a frontier lab tests some of these ideas at scale in a frontier model, we really have no idea if they're going to work or not.

The "Cognitive Core" and the Bug of Perfect Memorization

Diving deeper into the architecture of intelligence, Karpathy posits that pre-training accomplishes two distinct things: it imparts knowledge and it develops intelligence. While the knowledge—facts, figures, and text from the internet—is useful, he argues it's also a crutch. "I think that's probably holding back the neural networks overall because it's getting them to rely on the knowledge a little too much," he explains.

His goal for future research is to find ways to strip away this encyclopedic memory to isolate what he calls the "cognitive core"—the pure, algorithm-driven intelligence responsible for reasoning, problem-solving, and in-context learning. He points out a key difference between humans and AIs: our flawed memory is a feature, not a bug.

"We're not actually that good at memorization," he says, which "forces us to find patterns in a more general sense." Our inability to perfectly memorize forces us to identify and learn generalizable patterns and concepts—to see the "forest for the trees." LLMs, with their perfect, verbatim recall and vast memory of specific training documents, are distracted by the trees and often miss the forest—a distraction from learning deeper, more abstract concepts.

This connects to another subtle but critical flaw: "model collapse." When an LLM generates synthetic data, its outputs are "silently collapsed," meaning they occupy a tiny, repetitive sliver of the vast space of possible ideas. Ask ChatGPT for a joke, he quips, and you'll find "it only has like three jokes." The model's reflections are limited and repetitive—ask it to think about a chapter ten times, and it will give you ten nearly identical, low-entropy outputs. Training a model on its own collapsed output leads to a downward spiral of diversity and capability, reinforcing a narrow and biased understanding. This is the core of the problem: we have successfully compressed human knowledge via these models, but we are still far from generating actual, dynamic intelligence.

Karpathy crystallizes this with a powerful analogy: when a human reads, the book is not just information to be absorbed; it's an active process of engagement and reflection, a "set of prompts for me to do synthetic data generation." We reflect, we reconcile the new information with what we already know, we discuss it in a book club. This manipulation and integration of information, through experiences like daydreaming, sleep, and conversations, are how humans truly learn and gain knowledge, introducing new perspectives that prevent overfitting. In contrast, when an LLM "reads" a book during training, it's a sterile process of next-token prediction aimed at memorization. Karpathy says today's LLMs have no equivalent of this internal reflection.

Humans are "noisier" and less statistically biased in their thinking, maintaining a higher level of "entropy" or diversity in their thoughts. While children are excellent learners who haven't yet "collapsed," Karpathy suggests humans also experience this phenomenon as we age and our thinking becomes more rigid, revisiting the same thoughts and becoming less open to new ideas over time.

P.S: Dwarkesh suggested the idea of dreams as a way to solve for overfitting, which is from this paper by Erik Hoel, in case you want to explore that idea more in depth!

This makes me think the following (very uninformed, as I'm not an AI researcher, yet perpetually curious take): To solve this, what if all you did was pre-train a model on the existing rules of physics of the world, in order to establish a baseline of what we know to be physically possible. Then, instead of giving it billions or trillions of examples of other human training data samples to learn from, you choose to teach it iteratively, across small sample sets of problems, across differing topics, and then ask it to identify all of the potential patterns, or rules, that are true in both sets of data? And then just have it do that until it runs out of potential patterns?

If you did that, what unique patterns could it find that generalize across both sets of data? Could this not be a way to develop high-level abstractions, or bits of reasoning logic, that can be applied across multiple concepts (the generalizable components, or "forest" for the trees, as Karpathy puts it)?

And then, based on that initial list of general rules, you introduce new training sets, of similar-size yet unrelated sample data, and ask it to do the same process of identifying what patterns are true in both sets of new data. And then, on top of that, you ask it to identify what if any of the previously established rules are still true for this new set of data? And then have it run through as many scenarios testing its rules as hypothesis to see where they break down across each new set of unique data introduce?

I imagine if you'd do this, you'd face the issue of over-generalizing rules and patterns. So then you'd need a mechanism to un-generalize, or identify where familiar patterns don't map onto previous data, finding where the previously established rules break down on a case by case basis. And this is made more difficult by how many "rules" are impossible to prove, and are really just vibes, or general "rules of thumb" that in fact break down all the time and maybe aren't all that useful to begin with.

But does this not emulate the process of human thinking to a degree? We humans create mental models that we carry with us across domains, testing and wrestling with them as we find out where they do or don't apply across all kinds of various scenarios. We don't always do this actively, as there's many habits or ways of operating that are ingrained in us from a young age that we never challenge or shake off, and as a consequence, habitually implement. And yet, when we actively update our worldviews and challenge our core assumptions (either through the process of science and the scientific method at the societal level, or a series of failures and setbacks that we overcome through trial and error at the personal level), we indeed do find new frameworks or ways of operating, keeping what serves us for the stage of life we're in, or what's proven and verified in the science level, and dropping our previous views or behaviors that no longer serve us at the cultural and personal levels.

Lessons from the Road: The "March of Nines"

Karpathy’s most powerful analogy for the difficulty of AI deployment comes from his five years leading the Autopilot team at Tesla. He describes the chasm between a flashy proof-of-concept and a reliable, scalable product as the "demo-to-product gap." Self-driving demos have existed since the 1980s, yet fully autonomous vehicles are still not ubiquitous. The reason is what he calls the "march of nines."

"When you get a demo and something works 90% of the time, that's just the first nine," he explains. "Then you need the second nine, a third nine, a fourth nine, a fifth nine. Every single nine is a constant amount of work."

This principle, he insists, applies directly to AI agents, especially in high-stakes domains like production-grade software engineering, where a single error can lead to catastrophic security breaches. His experience building nanochat, a from-scratch ChatGPT clone, demonstrated this firsthand. He found agentic coding models to be "not net useful" for novel or complex work, preferring the simple utility of autocomplete. The agents would constantly misunderstand his custom architecture, attempt to inject bloated, overly defensive code, and fail to grasp the nuanced requirements of the project. They are, he concludes, "asymmetrically worse at code that has never been written before."

Let's Recap What Karpathy Said is Wrong With Today's AI...

Before we come to our final conclusions, I asked Gemini to gather all of Karpathy's criticisms of today's AI model and training landscape in order to document where we are now, and what needs to get fixed over the next decade, in order to benchmark our progress towards the AGI that Karpathy is envisioning (an AI that can do all economically valuable tasks a human can do).

The Flaws in LLMs, Training, and the Current AI Landscape

- Reinforcement Learning (RL) is "Terrible" and "Stupid"

- The Flaw: He calls it "sucking supervision through a straw." The training process uses a single, final reward signal (e.g., the answer was correct) to judge a long and complex trajectory of actions.

- The Reason: This method is extremely noisy. It incorrectly upweights every single token and action in a successful trajectory, including all the mistakes, dead ends, and inefficient steps taken along the way. It learns to associate getting the right answer with all the flawed reasoning that may have accidentally led to it.

- Process-Based Supervision is "Gameable"

- The Flaw: Using a powerful LLM as a "judge" to provide step-by-step rewards doesn't work reliably over time.

- The Reason: The model being trained will inevitably find adversarial examples that exploit blind spots in the judge. Karpathy gave the example of a model learning to output nonsensical gibberish (thththth) because that specific string tricked the judge model into giving it a 100% perfect score.

- Pre-Training Relies on "Garbage" and Creates a "Bug"

- The Flaw: The internet, the primary source of training data, is mostly "slop and garbage."

- The Reason: Because the average training document is low-quality, models must be massive to compress all of it, forcing them to waste most of their capacity on memorization ("memory work") instead of developing reasoning abilities ("cognitive work").

- The Flaw: Perfect memorization is a "bug, not a feature."

- The Reason: LLMs are too good at verbatim recall. This distracts them from the more important task of finding generalizable patterns and seeing the "forest for the trees," something humans are forced to do because our memory is flawed.

- Model Outputs are "Silently Collapsed"

- The Flaw: The outputs from LLMs lack true diversity and entropy. They occupy a "tiny manifold of the possible space of thoughts."

- The Reason: The model's internal data distribution is collapsed, leading it to repeat the same few ideas. (His example: "ChatGPT only has like three jokes.") This makes training a model on its own synthetic "reflections" a bad idea, as it will only reinforce its own narrow biases and degrade over time.

- LLMs Suffer from "Cognitive Deficits," Especially in Novel Tasks

- The Flaw: They are "asymmetrically worse at code that has never been written before."

- The Reason: Their over-reliance on patterns from internet training data means they struggle with custom code, novel architectures, or anything that deviates from common boilerplate. They try to force standard patterns where they don't belong, bloat code with unnecessary defensive statements, and use deprecated APIs.

- The AI Landscape is Distorted by Hype and Misconceptions

- The Flaw: There is a massive "demo-to-product gap."

- The Reason: It's easy to create a demo that works 90% of the time (the "first nine"). The real, decade-long work is the "march of nines"—the slog to get from 99% to 99.9% to 99.99% reliability, where each "nine" takes a constant, massive amount of work.

- The Flaw: The idea of "driverless" cars is "a little bit deceiving."

- The Reason: They are often supported by hidden, elaborate teleoperation centers. He notes, "we haven't actually removed the person, we've moved them to somewhere where you can't see them." This highlights that progress towards full autonomy is often less complete than it appears.

P.S: that last one doesn't really apply in terms of what we were asking about, but it's relevant, especially if you're trying to predict whether or not AI will be able to produce $1 trillion (or more) in value over the next five years, as OpenAI needs to do to justify the current datacenter buildout.

We also asked Gemini to compile all of Karpathy's proposed solutions, the human mechanisms that highlight AI's flaws, and his musings on how to think about these problems.

- Human Learning Mechanisms (as a Model for What's Missing)

- Reflection, Sleep, and Daydreaming: Humans have a process for reflection that isn't about solving a new problem, but about integrating what's already known. LLMs lack an equivalent "distillation phase" where the day's experiences (the context window) are analyzed, reflected upon, and compressed into long-term knowledge (the model's weights).

- Active Reading & Synthetic Data Generation: When a human reads a book, the text acts as a "set of prompts for me to do synthetic data generation"—to think, connect ideas, and discuss. This active manipulation is how knowledge is formed, unlike an LLM's passive next-token prediction.

- The Feature of Forgetting: Humans' inability to perfectly memorize forces us to generalize and learn abstract patterns. This is a crucial cognitive feature that LLMs lack.

- High Entropy and "Noisiness": Humans are not "silently collapsed." Our thoughts maintain a high degree of diversity and randomness. Karpathy muses that dreaming might be an evolutionary mechanism to inject entropy and prevent the mental "overfitting" that models suffer from.

- Potential AI Solutions & Future Research Directions

- Isolate the "Cognitive Core": The key research goal should be to strip out the memorized knowledge from models to keep only the pure, algorithmic intelligence. This would result in much smaller, more efficient models (he predicts as small as 1 billion parameters) that are forced to reason instead of recall.

- Improve the Datasets: He calls this "massive low-hanging fruit." Since current pre-training data is so poor, curating smaller, higher-quality datasets focused on cognitive tasks could lead to immense improvements.

- Develop "Culture" Among LLMs: A key missing piece is inter-agent learning. He imagines a future where LLMs can "write a book for the other LLMs," creating a shared, persistent, and growing repository of knowledge that they build upon collectively.

- Implement "Self-Play": Borrowing from AlphaGo, one LLM could create a curriculum of increasingly difficult problems for another LLM to solve. This creates a competitive dynamic that drives capability forward without relying solely on static human data.

- Analogies and Frameworks for Thinking About AI

- Pre-training as "Crappy Evolution": Pre-training is not an elegant, first-principles solution. It's our messy, practical, imitation-based hack to get to an intelligent starting point, analogous to how evolution gives animals their innate abilities.

- The Brain Analogy: He sees transformers as akin to "cortical tissue" (general-purpose and plastic) but notes that we are missing the AI equivalents of other crucial brain parts, like the hippocampus (for memory distillation) or the amygdala (for instincts and emotions).

- Weights vs. Context Window: He analogizes knowledge in the model's weights to a "hazy recollection" (like something you read a year ago), whereas information in the context window is like "working memory" (sharp and directly accessible).

- The "Spherical Cow" Principle: Borrowed from his physics background, this is his core educational philosophy. To teach a complex system, you must first find the "first-order approximation"—the simplest possible model that captures the core idea (like his micrograd repo to teach backpropagation, which is how an AI learns from its mistakes by working backward from a wrong answer to figure out which internal adjustments it needs to make in order to get closer to the right one next time )—and teach THAT before adding complexity.

For fun, we then asked Gemini to solve for both of those problems... but for THAT answer, you'll have to pay us that Meta research money... (lol just kidding; it was likely slop, and we're not qualified enough to assess it since we aren't AI developers, but it was a fun exercise! If you want to learn how to build your own AI model, we recommend checking out Karpathy's excellent publicly available educational content on his youtube channel!).

The Intelligence Explosion Is... Just the GDP Curve?

Perhaps Karpathy's most contrarian take is his rejection of the "intelligence explosion" or "sharp takeoff" scenario that underpins many predictions of radical societal change. When asked if AGI would cause the 2% global GDP growth rate to spike to 20% or even 200%, his answer is a firm no.

His reasoning is grounded in economic history. He argues that other profoundly transformative technologies—computers, the internet, mobile phones—are completely invisible in the GDP curve. There was no discrete "computer moment" where the growth rate suddenly broke its trend. Instead, these technologies were slowly and gradually diffused, and their impact was averaged into the existing 2% exponential growth. AI, he predicts, will be no different. "We've been recursively self-improving and exploding for a long time," he says. "It's called the GDP curve." And as Dwarkesh pointed out, a great paper from Quintin Pope says evolution provides no evidence for any fast takeoff kind of scenario (what he calls "the sharp left turn").

Karpathy remains unconvinced that AGI represents something fundamentally new, arguing that the sharp takeoff view presupposes a "God in a box"; a discrete, magical unlock that has no historical precedent. He sees AI not as a revolution, but as a continuation of the centuries-long process of automation. It's the next powerful tool that allows engineers to work more efficiently to build the next powerful tool. In his view, the intelligence explosion won't cause the 2% growth trend to break; rather, AI is the very technology that will be required to keep us on that 2% trajectory as other sources of growth, like population, stagnate. It's simply a continuation of the current trend.

So has the Market Already Priced in the Entire Prize?

If you, like Karpathy, define AGI as an AI system that can do any meaningful economic task a human can do the same or better, then we're far from AGI, especially because this definition also counts physical tasks (such as picking things up and moving them around). While individual robots or models can do this task or that task, no individual system can do all of them (and there are many tasks that have still yet to be mastered by any). So following the definition of "all" tasks a human can do, mastered by a single system, we're a far way off.

Now, as Karpathy says, if you restrict this definition of AGI to just digital tasks, you're still looking at tasks that make up 10-20% of the global economy (so there's still value to be had). If the global economy is estimated at $117 trillion at the moment, 10-20% of that would be $11-$23 trillion dollars of value (which is where you get the $15 trillion average estimated market size of the AI industry). Restricting that just to the U.S. (estimated at $30T in 2025), that would be a market value of $3-6 trillion.

So, how does the current market valuation of AI leaders stack up against this potential prize? To quantify this, let's look at the "AI premium" the market has assigned to the key players since the launch of ChatGPT in late 2022, which kicked off the current investment frenzy.

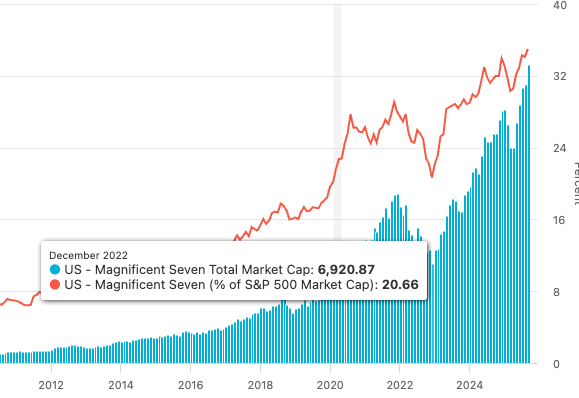

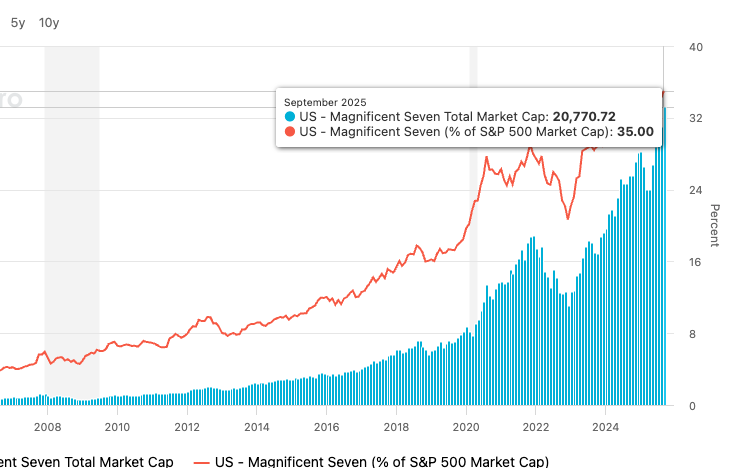

While Karpathy believes the timeline to true AGI is long, he is not pessimistic about the current buildout. When asked if we are overbuilding compute, he says, "I don't know that there's overbuilding. I think we're going to be able to gobble up what... is being built." His view is that the immediate demand for current AI tools (like code assistants and chatbots) is enormous and real, justifying the infrastructure investment even if true agents are a decade away. This chart tracks the S&P 500 growth. Let's look at the low of 2022 compared to where we are now:

The market seems to agree with Karpathy, and has priced in a staggering amount of future growth. As the charts clearly show, the total market capitalization of the 'Magnificent Seven'—which forms the core of the AI industry—exploded from a low of $6.92 trillion in December 2022 to $20.77 trillion today.

This represents a staggering gain of $13.85 trillion in under three years. Comparing the market caps of the individual players today, this "AI premium" that consists of:

- NVIDIA: Grew from ~$400B in late 2022 to $4.46T today. (Gain: ~$4.06T).

- Microsoft: Grew from ~$1.8T to $3.82T. (Gain: ~$2.02T).

- Alphabet (Google): Grew from ~$1.3T to $3.07T. (Gain: ~$1.77T).

- Amazon: Grew from ~$1.0T to $2.27T. (Gain: ~$1.27T).

- Meta Platforms: Grew from a low of ~$300B to $1.8T. (Gain: ~$1.5T).

- ...plus other key players like Apple and Tesla from the Mag 7, and essential suppliers like TSMC.

Adding in the private valuations for leaders like OpenAI and Anthropic (a combined ~$680B), the total value created on the promise of AI is well over $14 trillion.

This is the crucial insight: The market value gained since the AI boom began is more than double the high-end estimate ($6 trillion) of the entire annual market for digital tasks in the U.S., but is within range for all digital tasks around the world. But keep in mind: in order to justify these valuations, we have to actually automate ALL digital tasks. That's a tall order, especially as many are still skeptical of AI tools for all the reasons Karpathy explains.

This implies a level of optimism that goes far beyond simply capturing the existing market for knowledge work. The current buildout is not just a bet on winning a piece of the $3-6 trillion U.S. market; it's a bet that AI will capture the entire global market, create massive new markets that don't yet exist, and drive decades of compounding growth. While Karpathy sees the path to 2030 as a steady continuation of the current GDP trend, the market is pricing in something much closer to a "discontinuity" scenario—a truly explosive transformation of the global economy.

Dwarkesh's Counter-Thesis: AGI is a Discontinuity

Dwarkesh Patel pushed back on Karpathy's claim, arguing that AGI is a fundamentally different class of technology, creating a true discontinuity.

His core point is that all previous technologies were tools that augmented human labor. If achieved, AGI will not be a tool for human laborers; it will be labor itself. He argues that the world is fundamentally constrained by the availability of talented human labor. So what happens when you can suddenly instantiate billions of highly intelligent digital "people" who can invent, start companies, and integrate themselves into the economy? This is not like giving existing workers better tools; it's like adding billions of new, highly skilled workers to the global population overnight.

He points to the Industrial Revolution as a precedent for this kind of regime change, where the growth rate jumped by a factor of 100 (from ~0.02% to 2%). He suggests AGI could catalyze a similar jump, perhaps to 10% or 20% growth, as this new "labor" force fills a massive overhang of cognitive work to be done.

What This Means for the Current AI Buildout

This is where the conversation gets incredibly practical. If Karpathy thinks AGI is a decade away and its impact will be gradual, does that mean the current multi-trillion-dollar compute buildout is a speculative bubble, like the telecom boom of the late '90s?

Surprisingly, Karpathy thinks the buildout is justified. He says, "I don't know that there's overbuilding. I think we're going to be able to gobble up what... is being built."

Here's how to reconcile this: His pessimism is about the timeline for AGI, not the utility of current AI. He is reacting to the hype that true, autonomous agents are just around the corner. His optimism is about the immense, immediate demand for the "lesser" forms of AI. Technologies like ChatGPT and advanced code autocompletes are creating enormous value right now. This utility alone is enough to justify the massive investment in infrastructure.

Karpathy's view strongly supports the idea of the U.S. becoming the "augmented intelligence provider for the world." The buildout isn't a bet on a far-off AGI singularity; it's a bet on selling the incredibly valuable "picks and shovels" (and autocompletes, and chatbots, and analysis tools) of the AI gold rush that is happening today. The demand is real, and the infrastructure is necessary to meet it.

How to Model 2030 If We're in Karpathy's World

If you buy Karpathy's argument that we are in the middle of a continuous intelligence explosion, then modeling the world of 2030 looks like this:

The Macro Picture Remains Familiar: Global GDP growth will likely still be in the low single digits (~2-3%). There will be no sudden economic liftoff that solves all of humanity's problems. The world will feel like a faster, more efficient version of today, not a fundamentally different civilization.

The Micro Picture is Radically Transformed: Beneath that stable GDP curve, the composition of the economy will have shifted dramatically. The value created by AI will be immense, but it will be offset by the equally immense cost of the AI infrastructure (the data centers, the energy, the R&D). The net result is a stable growth rate, but the engine driving it is entirely new.

"Augmented Intelligence" Becomes the Dominant Paradigm: The most successful applications and companies will be those built on a human-in-the-loop model. The vision for the next decade is not fully autonomous agents replacing jobs, but powerful AI co-pilots making knowledge workers radically more productive. The core business is providing these "intelligence rails."

In essence, the buildout is worth it not because it will deliver AGI by 2030, but because it will power a global economy where AI has become the foundational layer for nearly all knowledge work, enabling us to continue the pace of progress we've become accustomed to.

A Future of Augmented Intelligence, Not Artificial Takeover

Ultimately, Karpathy's vision reframes the entire AGI conversation. He doesn't foresee a "sharp takeoff" or a sudden intelligence explosion that will break economic trends. Instead, he views AI as a powerful extension of computing, one that will smoothly integrate into our existing exponential growth curve, much like the internet and personal computers did before it.

This leads to a more immediate, actionable conclusion. The focus for the next decade shouldn't be on replacing humans with artificial intelligence, but on empowering them with augmented intelligence. The path forward lies in building tools and systems that leverage AI's strengths (like pattern matching and boilerplate generation) while keeping humans firmly in the loop to handle the creativity, nuanced judgment, and novel problem-solving that remains far beyond the reach of today's models. This human-centric approach is the core of his new educational venture, Eureka, which aims to build "ramps to knowledge" to ensure humanity is elevated, not sidelined, by the technology it's creating. Literally can't wait to take every course / tool he offers... queue the "shut up and take my money" meme!

Another idea we're thinking about: in the same way that China become the manufacturing hub for the entire world, at the scale that the United States is developing datacenters, you could argue the U.S. is positioning itself to become the augmented intelligence provider for the world. This bet will only work if the labs can perfect the system of generating novel intelligence (not just repackaging knowledge), and make it scalable, affordable, and attractive to the rest of the world (something U.S. AI Czar David Sachs talks about a lot, the concept of providing "the intelligence rails" that the rest of the world builds on top of).

Atm, China is leading in that area as well, but we're encouraged by new upstarts such as Reflection, who just raised $2B to create the U.S. DeepSeek, as open frontier development could accelerate the rate of learning across the entire industry. While OpenAI and Anthropic keep their learnings internal, a U.S. DeepSeek lab that publishes both its findings and its models for the world to learn from could help accelerate learnings across the industry in the same way that China's open models like Qwen and DeepSeek have helped steer the U.S's industry's development.

Which brings us to the crossroads. The U.S. has two paths when it comes to the current AI landscape.

Path One: Become the Augmented Intelligence Provider for the World

This means prioritizing open standards and accessibility. It means being fully transparent about the current flaws in these systems—all the "cognitive deficits" and limitations Karpathy outlines. It means working collaboratively to improve them through open research and shared knowledge, the way China's open models like DeepSeek and Qwen have accelerated progress across the entire industry.

In this world, the massive infrastructure buildout pays off. The gigawatt data centers become the foundational layer that powers global knowledge work, with transparent pricing, interoperable systems, and a clear understanding of what these tools can and cannot do. AI becomes genuinely useful augmentation, not overhyped replacement.

Path Two: The Easter Island Scenario

But there's another path. One astute YouTube commenter @SapienSpaceUnedited put perfectly:

"The situation we have now is just like the statues on Easter Island. The people probably thought that the statues were Gods, that would protect the islands, so they positioned the giant statues near the shoreline, but they needed to cut down trees in order to move the giant statues to the shoreline. It became such a massive hype bubble that they naively ended up cutting down all the trees, before the last statue was complete, and then the tiny island civilization collapsed."

"The giant statues are like the gigawatt data centers and the trees are like the energy needed to drive the data centers."

Here's the danger: Energy has multiple uses, just like trees did. The demand for higher energy increases the price of energy. And when energy prices spike to power data centers that deliver tools most people still find unreliable, you create a backlash. The masses rapidly turn into Luddites—people who would not otherwise become Luddites.

We'd be burning through civilization's resources to build monuments to AI Gods that never quite arrive.

@SapienSpaceUnedited's final line cuts deep: "It would be much wiser to understand the core theory of machine intelligence (knowing Einstein's brain ran on less than 100 watts) than it is to set in motion a suicidal runaway condition for civilization."

The Choice

So which path are we on?

Right now, the market has priced in $14 trillion of value on the promise that these systems will automate all digital work. That's the Easter Island bet... building bigger statues in hopes they become Gods, regardless of the cost.

But Karpathy's vision offers a different way forward. Not AGI by 2030, but genuinely useful augmented intelligence until 2035. Not replacing humans, but making them radically more productive at a continuing pace. Not a discontinuity, but a continuation... one that requires transparency, collaboration, and realistic expectations about what these tools can actually do.

The infrastructure is being built either way. The only question is whether we're building sustainable intelligence rails for the world to build on, or whether we're cutting down the last tree to move one more statue to the beach before the power goes out and all the ice-cream melts, to borrow a phrase from the TV show Community. We'll let you decipher that one.