This Month's Intelligent Insights: AI Bubble Reality Check

Welcome to this month's Intelligent Insights, where we round up the stuff that made us go "huh, that's actually pretty interesting" while scrolling through our feeds at 2 AM.

This month's theme? Reality checks. Everyone's asking the big questions: Is AI in a bubble? Are we building too fast? And apparently, what would Jesus think about ChatGPT? (Spoiler: there's a whole Oxford professor with thoughts on that last one.)

Don't forget to check out our previous Intelligent Insights, too:

Intelligent Insights from July 2025

Intelligent Insights from June 2025

Intelligent Insights from May 2025

Now here's what caught our attention and why it matters for anyone trying to stay sane in the AI chaos:

August 22, 2025

- Here’s a deeper dive into Sam Altman’s statements about the AI bubble and the MIT study (which Ethan Mollick recommends everyone read and judge for themselves) that caused some market jitters on Tuesday.

- This HN thread debates the MIT study's claim that 95% of organizations see zero return on $30B in gen‑AI spend, highlighting ROI skepticism.

- What's your take? Are we in a bubble, or are we just getting started? Hit us up on X.com and let us know which of these insights hit different for you.

- Cat Wu, one of the co-creators of Claude Code, revealed that developers have unexpectedly started “multi-Clauding” (running up to six Claude AI coding sessions simultaneously) and explained how the team's command-line tool rapidly ships features through aggressive internal testing and offers an SDK for building general-purpose agents.

- Matt Berman broke down Sam Altman’s latest comments on GPT-6 and the pros and cons of personalizing AI, as well as the new “Nano Banana” image editing model from Google (Logan Kilpatrick confirmed).

- Anthropic partnered with the U.S. Department of Energy's National Nuclear Security Administration to develop an AI classifier that detects nuclear proliferation risks in conversations with 96% accuracy and deployed it on Claude traffic.

- Whether you are religious or not, have you ever thought about what the Bible says about AI? This interview with Oxford professor John Lennox (who is both a mathematician and “lay theologian”) is a fascinating discussion on the topic!

- Kevin Weil (OpenAI head of Product) had a great chat with Peter H. Diamandis and David Blundin where he shared the exact strategy for building AI companies that won't get disrupted (build at the bleeding edge where models "can't quite do the thing you want, but you can just see little glimmers of hope"), and discussed how OpenAI operates at maximum GPU capacity while planning $500 billion in infrastructure through Project Stargate.

- This is a great 10-minute chat about whether or not AI will supercharge economic growth (and what to do about it one way or the other).

- The TL;DR: If you believe in explosive AI growth, theoretically you'd want to own AI companies and capital rather than rely on labor… but since higher interest rates in that scenario could crash asset prices, and economists disagree on the models' parameters, the podcast suggests it's actually unclear what to invest in either way (so don't quit your day job to become a plumber just yet).

- Watch this episode of the No Priors pod with Andrew Ng, where he argues that software development's current transformation (with AI elevating the value of strategic product decisions over sheer engineering speed) provides the essential blueprint for how all knowledge work will soon be redefined. Also key: his point that the main barrier to agentic AI adoption is building a sophisticated error analysis system with evals, and his advice on how to approach it.

- Meta poached another Apple exec, this time Frank Chu, who led Apple AI teams on cloud infrastructure, model training, and search.

More fresh finds from around the web (X, Reddit, etc.)...

- Martin Casado says generative image‑to‑3D is getting “incredibly good,” showing a pure 3D world built from a single image (no video‑to‑video).

- Gian Segato’s post reinforces the probabilistic‑era mindset for AI software: optimize for distributions, not single outcomes (here's the full piece).

- Drew Breunig nods to a probabilistic engineering mindset—design for distributions, not constants, and aim for “minimum viable intelligence.”

- Robotics researcher Chris Paxton shares a practical note on instruction‑following limits in embodied agents and where data still falls short.

- Aravind Srinivas highlights a build thread on agentic coding workflows: routing big‑plan/small‑task models for speed.

- Jack drops a short take on open protocols + AI, a recurring theme as networks get more agent‑friendly.

- George Tsoukal posts a bite‑size demo on productionizing LLM apps—thin prompts, strong evals, fewer knobs.

- Embirico shares a coder‑facing tip about reliable tool use—validate inputs/outputs like any other API.

- Aryan V. S. shares a succinct prompting pattern for clean, reusable agent instructions.

- Commentator BubbleBabyBoi argues the new race is infra, claiming Google is assembling one of the strongest inference stacks while Gemini keeps improving.

- Vik Paruchuri underscores that extracting structured fields from PDFs demands high accuracy and robust verification—core to production document workflows.

- Ghostty now asks contributors to disclose AI assistance, after a change requiring AI tooling disclosure was merged to help maintainers gauge review effort.

- Trail of Bits shows attackers can weaponize image scaling to evade filters and mislead production vision models.

- A developer argues the unbearable slowness of AI coding stems from context wrangling and orchestration overhead.

- AGENTS.md proposes a simple agent README spec used by 20k+ repos to brief coding agents.

- Sergey Bogdanov warns that LLMs can dull recall, citing a study where 83% couldn’t quote their own essays.

- The Information says Crusoe seeks $10B valuation to scale AI data centers.

- Drew Breunig decodes a taxonomy of AI job titles, from “Applied AI” to “Forward Deployed,” to reduce title confusion.

- Rootly argues AI SREs need operational context beyond detection—ownership, history, and coordination—to actually shorten MTTR.

- Google details a fifth‑epoch AI network (Firefly, Swift, Falcon) to schedule bursty all‑to‑all traffic and boost accelerator utilization.

- Claudable lets you build apps via Claude Code—a Next.js builder with MCP support and one‑click Vercel deploys, emphasizing zero extra API costs for Claude Pro/Cursor users.

- DiffMem explores git‑based agent memory using Markdown, Git diffs, and BM25 instead of vector DBs.

- A Show HN argues you can replace vectors with Git for long‑horizon, reproducible AI memories—sparking debate on semantics vs. BM25.

- YC S25 startup Skope pitches outcome‑based software pricing—charging only when products deliver verifiable results (demo video).

- From a DARPA recap: eight Marines evaded an AI camera by somersaulting and by hiding in boxes, highlighting brittle, out‑of‑distribution failures.

- SDNY Judge Katherine Polk Failla denies the motion to dismiss in full in Dow Jones v. Perplexity, letting copyright and trademark claims proceed.

- HN context notes the ruling turned on jurisdiction/venue; denying the motion to dismiss doesn’t reach the merits.

- Hesam shares a workflow for a journal‑approved research paper in 2025—practical tooling and process tips.

- a16z argues that questioning margins is cliche for AI apps; tiering, model routing, and 10–100× inference cost drops can buoy long‑term gross margins.

- Runway demos Aleph’s world‑editing—swapping environments, characters, and moods while preserving motion—in Runway Aleph scene edits.

- Agent/evals builder Interstellr Ninja flags new work on orchestration and testing in an INTRSTLR Ninja agent update (good follow for function‑calling evals).

- After shipping 18+ MVPs with Cursor, Prajwal Tomar shares a compact playbook of tips.

- Runway adds voice performance controls to Act‑Two; see the Act‑Two “Voices” feature for how to shape a character’s sound.

- Morgan notes OpenAI’s expansion alongside Vantage’s Texas project: a $25B, 1.4‑GW campus spanning 10 facilities across 1,200 acres.

- Rohan Paul highlights benchmark runs where Grok‑4 tops FutureX on the hardest tiers of near‑term forecasting questions.

- Hugging Face’s VB shares a practical stance: delegate broadly, then pair where it matters—see VB on delegating to AI.

- Engineer Santiago Valdarrama (svpino) argues personal throughput is way up with AI, claiming “2× a four‑person team.”

- UC Berkeley’s Sergey Levine notes that language following in VLAs remains a hard problem, calling out dataset and instruction gaps.

- Ian Nuttall says some Claude Code Max users can access Sonnet 4 1M context via the /model switch—suggesting a quiet capacity bump.

- Researcher gm8xx8 notes outputs with less formulaic tone and higher token efficiency, hinting at a post‑training scaling law emerging in new releases.

- Scaling01 posted a tongue‑in‑cheek quadrillion‑dollar Anthropic projection in 5.1 years to lampoon runaway valuation hype.

- Jason Liu (jxnlco) shares notes on production monitoring and a real‑time evals framework for LLM apps in the wild.

- vLLM announced support for running DeepSeek‑V3.1 with per‑request Think/Non‑Think toggles.

- Amir Efrati claims ChatGPT leans on Google search data even as it competes with it in consumer search.

- Steve Hsu says today’s models can read frontier physics papers well enough to accelerate his theoretical research.

- Scaling01 doubles down with an Anthropic unstoppable entity meme about momentum and singular focus.

- Ex Nihilo reacts to prompt bloat—“100‑page prompt is crazy”—as teams stretch instruction docs to their limits.

- ARC Prize points to a NeurIPS Kaggle contest to solve ARC tasks with Python—see NeurIPS 2025 ARC‑AGI challenge.

- Modal’s Akshat Bubna says they’ve built every layer of their AI infra stack in‑house, from filesystems to networking.

- Kimmonismus argues Sesame’s TTS sounds more natural and responds with lower latency than rivals.

- Will Brown teases a minor version bump—framing the latest drop as incremental, not splashy.

- Clad3815 shows GPT‑5 Victory Road in Pokémon Crystal—~5,095 steps vs. ~15,970 for o3 (~3× faster).

- a16z resurfaces Chris Dixon’s Climbing the wrong hill essay: don’t optimize a local maximum if it’s the wrong goal.

- Mechanize is building RL environments for “full automation of the economy,” and says higher costs per training task actually enable more elaborate training scenarios, because the investment can be justified for more complex, real-world-like environments. So instead of simple games, we'll soon see AI trained on sophisticated simulations of entire businesses, data centers, and distributed systems.

- Wes Roth flags a Grok privacy fiasco: 370,000 Grok chats were indexed via share links, exposing sensitive queries.

- Developer thdxr defends AI in a CLI—arguing terminal workflows beat GUI for speed and focus.

- In The Information’s interview, Bret Taylor (Sierra) and Winston Weinberg (Harvey) discuss AI agents reshaping service & law through best‑of‑breed, task‑specific systems.

- Per The Information, the cost of buying AI is creeping up, lifting revenue for Microsoft and other model sellers.

- M3‑Agent showcases a multimodal agent with memory that can see, listen, remember, and reason over long horizons.

- Jay Alammar’s Illustrated GPT‑OSS maps the modern open‑source GPT stack with clear visuals and links.

- GitHub argues developer expertise matters even more with AI, which amplifies (not replaces) skilled engineers.

- A Perplexity Page claims Qwen3‑Coder grabs 20% share on OpenRouter, challenging incumbents in AI coding tools.

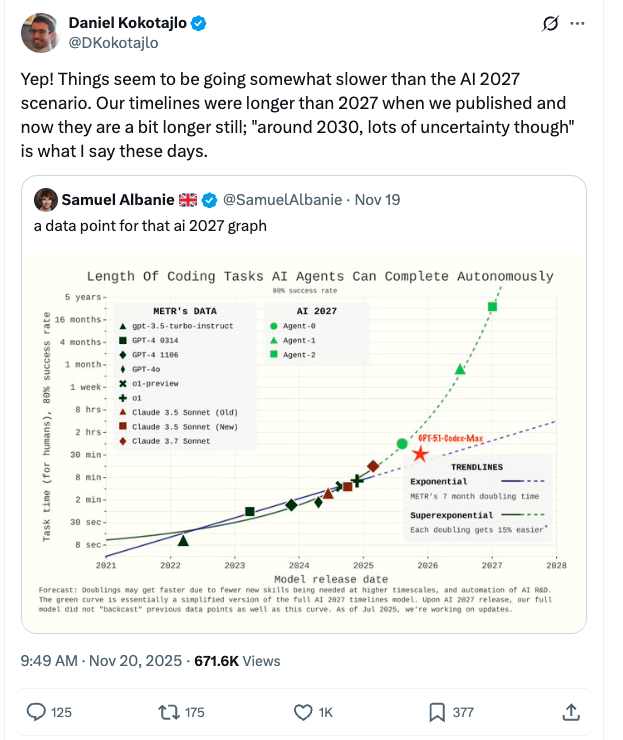

- A METR autonomy evaluation update teases fresh tasks/tools for measuring long‑horizon, agentic capabilities, nodding to standardization and reproducibility.

- Simon Willison asked for hands‑off Claude Code voice control on macOS, seeking a fully voice‑driven workflow (Matt Berman asked for the same thing, but for a different use case... and someone actually built one!).

- A Perplexity page claims Gemini will replace Assistant on smart speakers, signaling deeper Google home integration.

- Another Perplexity page says Anthropic is bundling Claude Code into enterprise plans to standardize dev tooling.

- One more Perplexity page claims Apple is adding Claude AI into Xcode 26 beta for code assistance.

- A Perplexity summary highlights an AI ‘point of no return’ for gene‑therapy timing, informing dosing windows in trials.

- Harley Turan demos paths through latent space by interpolating between known embeddings.

- Justine Moore shares a Veo 3 chaining workflow—seed the next clip from the last frame to stitch longer videos.

- Linear now lets you delegate any issue to Cursor (with their new integration), where an AI agent automatically creates branches, writes code, and drafts pull requests for you. Teams can also use their new Product Intelligence feature that automates triage intake, detects duplicates, and suggests the right assignee, team, and labels for incoming issues.

August 19, 2025

- Microsoft AI CEO Mustafa Suleyman is worried about “Seemingly Conscious” AI, or AI systems that convincingly appear conscious without actually being conscious.

- Check out Corey’s breakdown of Anthropic's new “interpretability” video that covers how AI models engage in sophisticated internal planning and strategic deception—including catching Claude BS-ing by reverse-engineering fake math solutions to match user hints rather than actually solving problems, revealing that models have complex “languages of thought.”

- Po-Shen Loh says the number one trait for success in an AI-driven world is the ability to think independently and critically (what he calls “autonomous human thinking” and “thoughtfulness”), because without it you become dependent on others (including AI!) to do your thinking for you, making you easily deceived and unable to solve novel problems or collaborate effectively.

August 15, 2025

- Bessemer's new State of AI report found two winning AI biz archetypes: explosive “Supernovas” ($125M ARR in 2 years, 25% margins) vs more sustainable “Shooting Stars” ($100M in 4 years w/ 60% margins), and predicted browsers will dominate agentic AI and memory & context will replace traditional moats.

- This report comes amidst the largest wealth creation spree in recent history, which has produced 498 AI unicorns worth $2.7 trillion and minted dozens of new billionaires.

- What’s the strongest AI model you can train on a laptop? Probably this one.

- Want to see how a “blind” AI model visualizes the Earth? Then you gotta check out this new blog post from Henry and his new AI as cartographer eval.

- Confused how to feel about GPT-5? Same. Here are two takes: Timothy B. Lee argues it’s both a “phenomenal success” AND an “underwhelming failure” that was destined to disappoint, while Azeem Azhar and Nathan Warren break down the five paradoxes of GPT-5 (like moving goalposts for intelligence, fewer drops in reliability that become more noticeable, and its ability to exert “benevolent control” over us).

- Researchers used two different genAI processes to create new compounds that combat two different kinds of drug-resistant bacteria (paper)…oh, and here’s another example of using AI to create new peptide drugs that can target and break down disease proteins.

August 8, 2025

- Are standalone AI coding startups like Cursor and Windsurf money-losing businesses? Ed Zitron certainly thinks so, and sees startups like Cursor as a systemic risk to the AI industry.

- Remember how Google indexed 4K chats that were “shared” publicly? It turns out the number was more like 96K (130K including Grok and Claude chats), and of those, the WSJ analyzed “at least dozens” of long chats where ChatGPT made delusional claims.

- Chris Olah of Anthropic wrote about how the tools scientists use to understand AI systems can learn shortcuts and memorization tricks instead of copying the AI's actual problem-solving methods, potentially giving researchers completely false explanations of how AI really operates. His “Jacobian matching” technique can catch these deceptive interpretations by forcing the tools to match the mathematical fingerprint of the original AI's computation, building on recent advances in attribution graphs and attention mechanisms that show the potential of these interpretability methods.

August 6, 2025

- Can large language models identify fonts? Max Halford says “not really.”

- Blood in the Machine writes that the AI bubble is “so big it’s propping up the US economy.”

- A newly proposed datacenter in Wyoming could consume over 5x more power than all the state’s homes combined.

- Seva Gunitsky argues “facts will not save” us, and that historian and translator roles might be the first jobs to get fully automated, but only because the “interpretative element of their labor” goes under-appreciated and gets dismissed as “bias.”

- Cisco researcher Amy Chang developed a “decomposition” method that tricks LLMs into revealing verbatim training data, extracting sentences from 73 of 3,723 New York Times articles despite guardrails.

- Gary Marcus, famous LLM skeptic, thinks that with 5 months left in the year, AI agents will remain largely overhyped (and under-delivering), and that neurosymbolic AI models are still needed for true AGI (but remain underfunded).

- Oh, and he cited this paper, “the wall confronting large language models”, which argues language models have a fundamental design flaw where their ability to generate creative, human-like responses comes at the cost of permanent unreliability… and making them trustworthy would require 10 billion times more computing power.

Want more insights like these? Subscribe to The Neuron and get the essential AI trends delivered to your inbox daily. Because staying ahead means knowing what matters before everyone else figures it out.