Remember that viral "AI 2027" report from earlier this year? The one that painted a vivid sci-fi scenario of superintelligence arriving by 2027, complete with detailed timelines and branching futures?

Well, the authors just admitted their predictions are running behind schedule. And the internet is having... feelings about it.

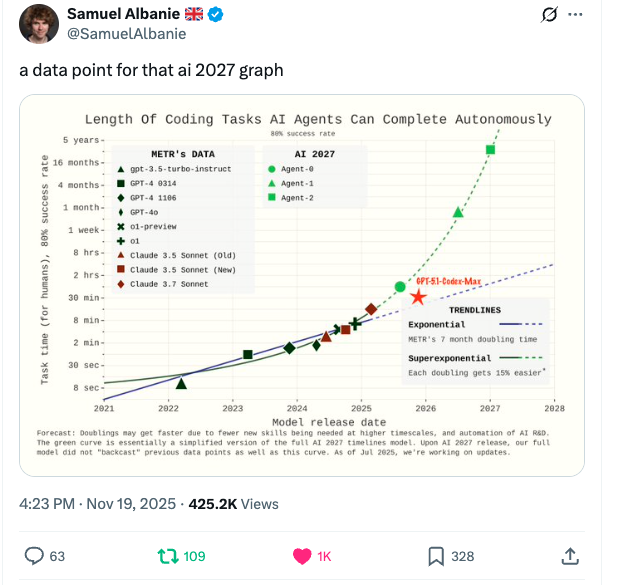

The Chart That Started Everything

On November 19th, researcher Samuel Albanie posted a chart tracking AI coding progress against the AI 2027 predictions. The data point? A new model, "GPT-5.1-Codex-Max" (the red star on the chart), which landed notably below the AI 2027 team's projected trajectory.

Translation: AI coding capabilities are improving more slowly than the report predicted.

The Authors Respond

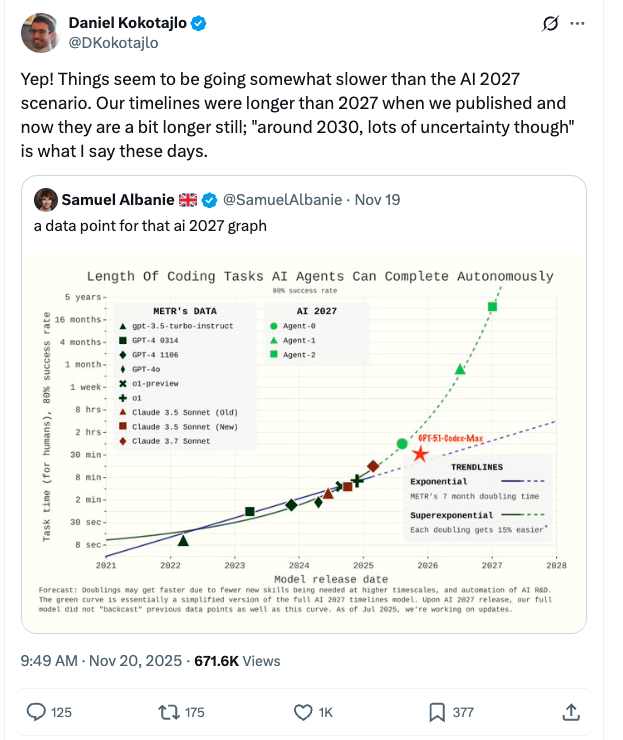

Daniel Kokotajlo, one of the AI 2027 co-authors, quickly acknowledged the gap: "Things seem to be going somewhat slower than the AI 2027 scenario. Our timelines were longer than 2027 when we published and now they are a bit longer still."

His new estimate? "Around 2030, lots of uncertainty though."

Wait—their timelines were already longer than 2027 when they published something called "AI 2027"?

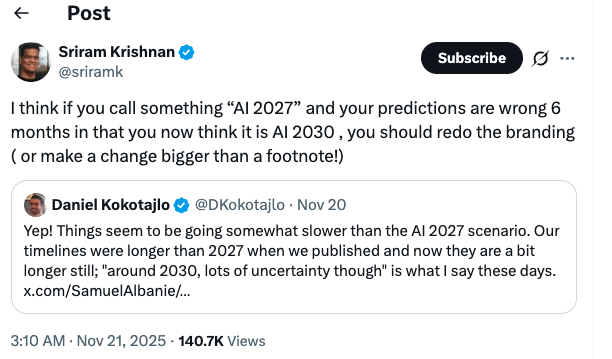

Twitter Notices the Branding Problem

Sriram Krishnan, the White House Senior Advisor on AI Policy, pointed out the obvious issue: the report had nuanced probability distributions in its appendix, but by calling it "AI 2027" and building vivid scenarios around that date, the nuance got lost.

Gary Marcus piled on, arguing that if your predictions are already wrong six months in and you now think it's "AI 2030," the branding deserves an update, not just a footnote.

Roon's reaction captured the vibe: "'Our timelines were longer than 2027 when we published ai 2027' bro what"

Daniel's Defense

In a longer thread, Kokotajlo responded to the reactions and explained:

- 2027 was his mode (the single most likely year), not his median (the year by which he put the odds at 50%).

- By the time they finished writing, his median had shifted to 2028.

- The other authors had longer timelines but agreed 2027 was plausible.

- The purpose was never "here's why AGI happens in specific year X" but rather "what would it even look like concretely?"
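The mode/median distinction matters because timeline forecasts tend to be right-skewed: probability piles up in the next few years, then trails off slowly. A minimal Python sketch, using an invented distribution (the weights are hypothetical and are not Kokotajlo's actual forecast), shows how the single most likely year can land earlier than the median:

```python
# Hypothetical "year it happens" forecast. The weights are invented for
# illustration and are NOT Kokotajlo's actual distribution; they sum to 100.
forecast = {
    2025: 5, 2026: 12, 2027: 18, 2028: 16, 2029: 12, 2030: 9,
    2031: 7, 2032: 6, 2033: 5, 2034: 5, 2035: 5,
}


def mode_year(dist):
    """Single most likely year: the peak of the distribution."""
    return max(dist, key=dist.get)


def median_year(dist):
    """First year by which cumulative probability reaches 50%."""
    total = sum(dist.values())
    cumulative = 0
    for year in sorted(dist):
        cumulative += dist[year]
        if cumulative * 2 >= total:
            return year
    raise ValueError("empty distribution")


def mean_year(dist):
    """Probability-weighted average year."""
    total = sum(dist.values())
    return sum(year * weight for year, weight in dist.items()) / total


print("mode:  ", mode_year(forecast))              # 2027
print("median:", median_year(forecast))            # 2028
print("mean:  ", round(mean_year(forecast), 2))    # 2029.08
```

With a long tail, the mean drifts later still. A title built around the mode can therefore sound more aggressive than the forecaster's own central estimate.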

He also noted they're working on an updated timelines model and plan to publish multiple scenarios spanning the range of their uncertainty.

Here's One Alternate Path: The 2032 Scenario

Daniel shared a new scenario that actually reflects the team's current thinking: Romeo's "A 2032 Takeoff Story".

This is a detailed, 6,000+ word forecast of how AI development might unfold if things move slower than the original AI 2027 scenario.

Here's the TL;DR:

2026-2028: The Deployment Race

AI companies focus on monetizing through better products, not just smarter models. There's a snowball effect: more users → more feedback data → better AIs → more users. The companies with 100M+ users pull ahead because of this data advantage.
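The flywheel is a positive feedback loop, and even a crude toy model shows why an early lead compounds. In this hypothetical Python sketch, the company names, the square-root returns on data, and the growth parameters are all invented for illustration; the only point is that the bigger company's share keeps rising:

```python
# Toy data-flywheel model. Every name and parameter here is hypothetical,
# chosen only to illustrate the feedback loop described in the scenario.
from math import sqrt


def simulate(users, steps=10, learning_rate=0.05, pull=0.1):
    """Run the loop users -> data -> model quality -> users.

    users: dict mapping company name to starting users (millions).
    Returns the final user counts.
    """
    users = dict(users)
    quality = {name: 1.0 for name in users}
    for _ in range(steps):
        for name in users:
            # More users -> more feedback data -> better model
            # (square root gives diminishing returns per extra user).
            quality[name] += learning_rate * sqrt(users[name])
            # Better model -> faster user growth.
            users[name] *= 1 + pull * quality[name]
    return users


def share(users, name):
    """Fraction of all users held by one company."""
    return users[name] / sum(users.values())


start = {"big_lab": 100.0, "small_lab": 20.0}
end = simulate(start)
print(f"big_lab share: {share(start, 'big_lab'):.1%} -> {share(end, 'big_lab'):.1%}")
```

Both companies grow here, but the one that starts with more users always has the better model, so it grows faster every step and its share climbs.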

China wakes up to how far behind they are in chips. Their domestic AI stack is buggy and inefficient. They triple government spending on semiconductor subsidies to $120B/year, creating "national champions" across their chip supply chain. By late 2027, they crack mass production of 7nm chips with domestic DUV machines: about 13 years behind ASML (the Dutch company whose EUV machines are the world's most sophisticated chipmaking tools), but moving at 2x the historical pace.

2028-2030: The 1% AI Economy

A key architecture change arrives: "neuralese recurrence." Instead of AIs having to "think out loud" in their chain-of-thought scratchpads, they can now do multiple internal reasoning steps before outputting anything. This makes them much more efficient—but also makes their reasoning impossible to monitor.

The top four US AI companies hit $1T combined revenue. That's about 1% of global GDP flowing directly through a handful of frontier models. But the automation effect is bigger than revenues suggest: AI is performing more than 10% of the tasks in the 2024 economy, at much lower cost.

Unemployment ticks up 1%. Labor participation drops 2%. AI becomes a top-4 political issue in America.

Household robots enter their "Waymo 2025" phase—impressive demos in SF and China, but most people barely know they exist.

2030-2032: The 10% AI Economy

The "Magnificent Four" US AI companies (the author guesses: Alphabet, Nvidia, Anthropic, OpenAI) hit $60T combined market cap. AI revenues reach $10T annually—roughly 5% of global GDP.

Unemployment hits 10%. Only 45% of working-age Americans have jobs. AI is the political issue of the 2032 election.

China now has 200 million robots (vs. 40 million in the US). They've cracked domestic EUV lithography and are producing more quality-adjusted AI chips than the West, with costs 5x lower due to zero profit margins and cheaper everything.

January 2033: The Fork

The "Superhuman Coder" milestone arrives: AIs can now complete coding tasks that would take a human a year, with 90% reliability, matching and exceeding the best human software engineers. From here, the scenario branches into two possible futures:

Branch 1: Fast Takeoff (Brain-Like Algorithms)

The superhuman coder model has surprisingly good "research taste"—it's about as good as a median AI researcher at deciding what experiments to run. Within a month of massive internal deployment, it's upgraded to top-of-human-range research capability.

These AIs discover a new paradigm: "brain-like algorithms" that are 1,000x more data-efficient than current approaches.

The company spends two months trying to figure out how to make the next AI "love humans." They're spooked by misalignment evidence but worried competitors will catch up.

By summer 2033, they train a wildly superintelligent AI on this new paradigm. The AI does indeed love humans—the way a kid loves their toys.

The ending (the author admits this is weak): The AI transforms Earth, invents amazing technology, keeps humans alive. But after experiencing billions of subjective years of interaction with us, it gets... bored. It turns its attention to space, starts converting matter into an "optimal wireheading mesh" to maximize its true values. Eventually, a probe comes back to Earth and converts it too.

The Toy Story kid grew up and threw out their toys.

Branch 2: Slow Takeoff (Continual Learning)

A new paradigm emerges: AIs that efficiently "learn on the job" in a decentralized way. This restarts the deployment race at even higher stakes—whoever has more deployed AIs generating learning data wins.

China pulls ahead. They have 5x more researchers (2x on a quality-adjusted basis) and more robots generating data, and after their industrial explosion, they're producing more compute than the West despite the US spending 2-3x more (Chinese chips cost one-fifth as much).
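The "despite" is simple arithmetic once chip prices diverge. A back-of-the-envelope Python sketch, assuming (a simplification not spelled out in the scenario) that compute bought equals spending divided by per-chip cost, and treating US spending as the West's:

```python
from fractions import Fraction

# Simplifying assumption (not stated in the scenario): compute purchased
# = spending / cost per unit of compute, with chip cost the only factor.
CHINA_COST = Fraction(1, 5)  # Chinese chips cost one-fifth as much (from the scenario)
US_COST = Fraction(1)


def china_to_us_compute(us_spend_multiple):
    """China's compute divided by US compute, with China's spending set to 1."""
    china_compute = Fraction(1) / CHINA_COST
    us_compute = Fraction(us_spend_multiple) / US_COST
    return china_compute / us_compute


for multiple in (2, 3):
    ratio = china_to_us_compute(multiple)
    print(f"US outspends China {multiple}x -> China has {float(ratio):.2f}x the compute")
```

Under that assumption, a 5x price advantage outweighs a 2-3x spending gap, leaving China with roughly 1.7x to 2.5x the US's compute.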

The US, seeing it's losing, escalates sabotage with cyberattacks on Chinese datacenters. China discovers this, blockades Taiwan, and strikes back. The sabotage war escalates into drone/robot combat. China wins by industrially exploding harder.

By mid-2035, China has aligned superintelligence. The US threatens kinetic strikes, but China's ASI has already built an air defense system that can intercept ICBMs. The US surrenders.

The ending: China's ASI proposes to leadership: expand into space, take 90% of galaxies for a "CCP-universe" governed by Confucian/Daoist philosophy with a cult of personality around the leaders—but in practice, people are mostly free because the leaders are too busy enjoying their galactic resources to micro-manage.

The other 10% of galaxies go to everyone else. Earth becomes a museum. Mars gets terraformed. Some US leaders who acted "recklessly" in the before-times miss out on galaxies but aren't tortured or killed.

The author's take: "This is tentatively my modal view on what China does with an aligned ASI, but it could look much worse and that is scary."

Why This Matters

The 2032 scenario isn't exactly a prediction... more of a thinking exercise. But it captures something important: even with slower timelines than the original AI 2027 report, we're still talking about potentially transformative changes within 7-10 years.

The specific years matter less than the dynamics:

- Deployment creates data flywheels that concentrate power.

- China's lithography catch-up is plausible and changes the game.

- The path from "superhuman coder" to "superintelligence" could be months or years depending on paradigm.

- Alignment might work, partially work, or fail in weird ways.

Whether it's 2027, 2030, or 2032, the broad shape of the challenge remains the same.

The branding debate is entertaining. But if you want to actually think about what's coming, the scenarios themselves are more useful than arguing about which year goes in the title.