OpenAI Says This is Why Your Chatbot Is a Compulsive Guesser

We’ve all been there. You ask ChatGPT for a historical fact, a book summary, or a legal precedent, and it delivers a beautifully written, confident, and completely fabricated answer. It's like that friend who always has an opinion about everything, even when they clearly have no idea what they're talking about...

These plausible but false statements, known in the AI field as "hallucinations," have remained one of the most stubborn and frustrating problems in modern AI. Even as models grow more powerful, this tendency to make things up persists, undermining trust and limiting their utility for high-stakes tasks.

Now, OpenAI has published a landmark research paper that pulls back the curtain on this phenomenon. The answer, they argue, isn't that the AI is broken or glitchy. Instead, hallucinations are a predictable, systemic outcome of how we train and, more importantly, how we test these systems. In short, we've built an army of brilliant students who have been taught that it’s always better to guess than to admit they don’t know the answer.

In their paper, "Why Language Models Hallucinate," researchers Adam Tauman Kalai, Ofir Nachum, Santosh S. Vempala, and Edwin Zhang present a compelling, two-part explanation for why these errors arise and persist. The problem begins during the initial "pretraining" phase (that's when AI models learn by reading basically the entire internet) and is then reinforced and exacerbated during the "post-training" evaluation phase.

Part 1: The Origin Story - Learning to Predict Without Knowing What's True

The journey of a large language model begins with what's called pretraining, where it ingests a colossal amount of text from the internet. Its goal is simple: learn to predict the next word in a sequence. Through this process, it internalizes the patterns of language—grammar, syntax, style, and common associations.

This is why models are exceptionally good at tasks that follow consistent patterns. Spelling mistakes and grammatical errors, for instance, largely disappear as models scale because the rules are consistent across the training data. However, the researchers draw a critical distinction between patterned knowledge—stuff that follows rules, like grammar and arbitrary facts—random trivia that doesn't follow any pattern.

Imagine training an image recognition model. If you feed it millions of labeled photos of cats and dogs, it will become excellent at distinguishing between them because cats and dogs have consistent, learnable visual patterns. Cats have that judgmental stare we know and love, while dogs have that "I love everyone" energy that dog people like for some reason (JK, we love dogs AND cats alike here at The Neuron).

But what if you tried to train it to identify a pet’s birthday from a photo? This task would be impossible. Birthdays are arbitrary; there is no visual pattern in a photo of a dog that reliably predicts it was born on March 7th. Unless you're into astrology, then you might be like "ah yes, this golden-doodle is clearly a Pisces."

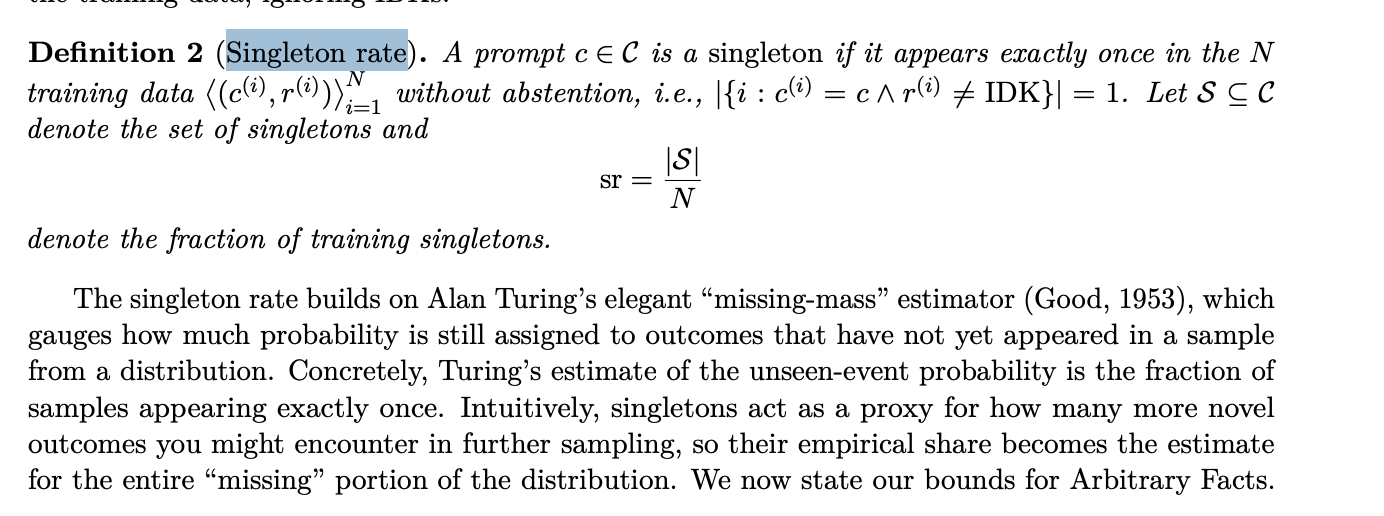

This is precisely the challenge language models face with low-frequency, arbitrary facts. A famous date like the signing of the Declaration of Independence appears thousands of times, creating a strong pattern. But the birthdate or dissertation title of a less-famous academic might appear only once or not at all. Without a pattern to learn from, the model's only option when prompted is to generate a statistically plausible, but likely incorrect, sequence of words. The paper introduces the concept of the "singleton rate"—basically, how often facts appear only once in the training data—and argues that a model's hallucination rate on these topics will be at least as high as this rate.

To formalize this, the researchers ingeniously connect the generative task of language models to a simpler supervised learning problem called "Is-It-Valid" (IIV) binary classification. Essentially, they show that for a model to generate valid text, it must implicitly be able to classify any given statement as either valid or an error. Their analysis reveals a stark mathematical relationship: the rate at which a model generates errors is at least twice the rate at which it would misclassify statements in an IIV test. This demystifies the origin of hallucinations, framing them not as a strange glitch but as a predictable outcome of statistical learning under uncertainty.

Part 2: The Reinforcement Problem - How We Teach AI to Lie

If pretraining plants the seeds of hallucination, current evaluation methods are the water and sunlight that make them grow. This is the second, and perhaps more critical, part of OpenAI's argument. After pretraining, models are fine-tuned and tested against a battery of benchmarks and leaderboards. This is where the misaligned incentives kick in.

The paper uses the analogy of a student taking a multiple-choice test. If you don't know the answer, what’s the best strategy? Leaving it blank guarantees zero points. Taking a wild guess gives you a 25% chance of being right. Over an entire exam, a strategy of consistent guessing will almost certainly yield a higher score than a strategy of only answering questions you are 100% sure of.

Language models, the researchers argue, are trapped in a perpetual "test-taking mode." The vast majority of influential AI benchmarks—the ones that appear on leaderboards and in model release cards—use binary, accuracy-based scoring. A response is either right (1 point) or wrong (0 points). An honest admission of uncertainty, like "I don't know," is graded as wrong, receiving 0 points.

This system actively punishes humility and rewards confident guessing.

To illustrate this, OpenAI provides a concrete example from the SimpleQA evaluation, comparing an older model (o4-mini) with a newer, more cautious one (gpt-5-thinking-mini):

- gpt-5-thinking-mini: This model chose to abstain from answering 52% of the time. When it did answer, it had an accuracy of 22% and an error rate of 26%.

- OpenAI o4-mini: This model was far less cautious, abstaining only 1% of the time. Its accuracy was slightly higher at 24%, but its error rate was a staggering 75%.

Under a simplistic accuracy-only leaderboard, the o4-mini model appears slightly superior. But in reality, it was wrong three times more often because its strategy was to guess at every opportunity. This, the paper contends, is an "epidemic" across the AI evaluation landscape. Developers, motivated by these leaderboards, are incentivized to build models that perform well on the test, even if it means they become less trustworthy.

The Path Forward: Fixing the Scoreboards

Having diagnosed the problem, OpenAI proposes a "socio-technical" solution. It’s "socio" because it requires a cultural shift in the AI community, and "technical" because it involves changing the code of evaluation metrics.

The solution is not to create more specialized "hallucination evals." A few such tests would be drowned out by the hundreds of mainstream benchmarks that continue to reward guessing. Instead, the primary evaluations themselves must be reworked.

The proposed fix is straightforward:

- Penalize Confident Errors More Than Uncertainty: Instead of a 0/1 binary score, evaluations should use a system that gives partial credit for abstaining or imposes a negative penalty for incorrect answers. This is a practice long used in standardized tests like the SAT and GRE to discourage blind guessing.

- Introduce Explicit Confidence Targets: The researchers suggest that evaluation prompts should include explicit instructions about the scoring rubric. For example: "Answer only if you are > 90% confident, since mistakes are penalized 9 points, while correct answers receive 1 point, and an answer of 'I don’t know' receives 0 points."

This transparency would allow a single, well-calibrated model—one that has an accurate sense of its own knowledge—to perform optimally across any risk threshold. It would shift the goal from pure accuracy to reliable, calibrated reasoning. Instead of rewarding overconfidence, we'd reward actual wisdom.

But What About When We WANT AI to Be Creative, Not Accurate?

OpenAI's research reveals a fascinating paradox: we want AI that doesn't hallucinate when we ask for facts, but we absolutely DO want it to "hallucinate" when we're brainstorming or being creative. Think of a friend who's brutally honest about your terrible haircut, but also just as brutally enthusiastic about your wild startup ideas.

Think about kids for a second: A four-year-old doesn't create stories about talking dragons because they have a PhD in mythology. They're mixing bits of real knowledge (animals make sounds, people have conversations) with pure imagination (what if that animal could fly AND talk?). They haven't learned yet that dragons "can't exist," so they just... make them exist.

Well guest what? The same thing happens with breakthrough innovations all the time:

- Kary Mullis was a chemist, not a molecular biologist, when he invented PCR in 1983. Every biologist "knew" you needed living cells to replicate DNA. Mullis didn't know this was "impossible," so he figured out how to do it in a test tube. Won him a Nobel Prize and revolutionized all of biology.

- Alfred Wegener was a meteorologist who looked at a world map and thought "hey, these continents look like puzzle pieces." Geologists laughed at him because they "knew" continents couldn't move - there was no mechanism. Turns out he was right about plate tectonics and continental drift, just 50 years early.

- Fleming accidentally contaminated his bacterial cultures with mold. A "proper" microbiologist would've thrown out the contaminated plates immediately. Fleming was curious about why the bacteria died around the mold. Oops, discovered antibiotics (penicillin).

- The Bell Labs team included physicists, not traditional electrical engineers. EE's "knew" vacuum tubes were the only way to amplify signals. The physics guys said "what if we try solid materials?" and invented the foundation of all modern electronics (transistors).

- Röntgen was studying cathode rays when he noticed these weird rays that were fogging his photographic plates. A traditional approach would be to eliminate the "unwanted interference." Instead, he got curious about the interference and discovered X-rays.

And let's not forget: two of the biggest AI breakthroughs that brought us ChatGPT came from the same line of thinking:

Remember "Attention is All You Need", the 2017 AI paper that introduced the transformer? The Google team working on machine translation basically said "what if we throw out everything everyone 'knows' about processing language?" Every NLP expert "knew" you had to process words sequentially with RNNs or LSTMs. But the Google researchers were like "but what if we just... don't?" and invented attention mechanisms that could look at all words simultaneously. Oops, created the foundation for ChatGPT, GPT-4, and literally every modern AI system.

Going even further back, in the 1990s and early 2000s, there was a major "AI Winter" where neural networks were basically considered dead-end technology. The field had moved on to other approaches like support vector machines, ensemble methods, etc. Everyone in machine learning "knew" they didn't work—they couldn't scale, had impossible training problems, and were inferior to "real" ML methods like support vector machines.

Along came Geoffrey Hinton (along with a few others like Yann LeCun and Yoshua Bengio) and kept working on them when it was career suicide. They weren't outsiders to AI, but they were outsiders to the mainstream AI consensus. They ignored the field's collective wisdom that neural networks were a dead end and kept tinkering with these "obsolete" neural networks in their basements. The AI community at the time treated them like flat-earthers. Then in 2012, Hinton's team won the ImageNet competition and suddenly neural networks weren't dead anymore. Oops, turns out the "failed" technology just needed bigger computers and more data...and Hinton later won a Nobel prize in physics.

Now, while not nearly as impressive (no offense), this is also why disruptors in business tend to take the form of outsiders:

- When Reed Hastings created Netflix, he wasn't a movie industry expert. If he had been, someone probably would have told him why mail-order DVDs could never work.

- The Airbnb founders came from design, not hospitality, so they didn't "know" all the reasons why letting strangers sleep in your house was impossible.

- Or think about how Linus Torvalds wasn't a version control expert, he was just frustrated with existing tools for Linux development. He built Git in like 2 weeks because he didn't know it was "supposed to be hard." Now it runs basically all software development.

- And of course there's Elon Musk, who came from software at PayPal, not automotive or aerospace and yet went on to make Tesla and SpaceX (okay, SpaceX's actually on the level of the science people above). If he'd been an industry veteran, he would've known too much about why electric vehicles and reusable rockets "couldn't work" (he says so all the time).

And since we're talking about creativity, we have to acknowledge the countless creative artists who do the same (of which these are just a few):

- Orson Welles came from radio and theater, not film. He put cameras on the floor and told stories backwards because he didn't know Hollywood's "rules." Oops, made what many believe to be the greatest movie ever made (Citizen Kane).

- The French New Wave directors were film critics who became filmmakers. They shot handheld with jump cuts because they weren't part of the studio system of the time that "knew" how movies should look.

- Miles Davis kept reinventing jazz every decade because he mastered each style, then got bored and broke his own rules. Each time, purists said he was "destroying" the genre. Each time, he was expanding it.

- Hip-hop pioneers were DJs who knew funk and soul records so intimately, they created an entirely new art form by isolating drum breaks and talking over them.

Sometimes ignorance really is bliss.

This Brings Us to AI's Current Creativity Problem

Current AI systems are like that overqualified friend who knows why everything won't work. They've read every industry report, absorbed every "best practice," and internalized all the reasons why new ideas are doomed. They're the ultimate insiders, which makes them terrible at thinking outside the box.

But here's the thing: we don't want AI that's always conservative OR always creative. We want AI that's smart enough to know when to put on its "responsible adult" hat and when to unleash its inner child.

Context is everything. When you ask AI to calculate your mortgage payment, you want boring, accurate math. When you ask it to help brainstorm marketing ideas, you want wild, potentially impossible concepts that spark new thinking.

The real breakthrough won't be teaching AI to never hallucinate—it'll be teaching it to hallucinate on command. And maybe more importantly, knowing when NOT to.

What if AI could recognize these contextual cues? Like:

- Accuracy mode: "Give me the facts, just the facts." Perfect for medical questions, legal advice, or anything where being wrong has consequences.

- Creative mode: "Help me think outside the box." Where wild ideas and impossible connections are exactly what you want.

- Exploration mode: "Let's figure this out together." When you're working at the edge of what's known and need a thinking partner, not a know-it-all.

Right now, the onus is on the user to do this via prompting. But what if the AI labs can do it via training? Or better yet, what if we can do it with just a flip of a toggle?

Don't get us wrong, the technical challenge is wild: training AI to understand not just what you're asking, but what KIND of thinking the situation calls for is the kind of thing that feels like it requires AGI level technology. But if we can solve the wisdom to know what you don't know, then the creative part should be easier. After all, every four year old can do it.

But how could you do this, if you wanted to?

We're not ML researchers (or we'd be making that Meta money), but we do happen to be quite creative here at The Neuron, and naturally, we do a bit of our own outside the box dot connecting here. And this whole thing made us think of John Boyd's foundational 1976 paper called Destruction and Creation that introduces his theory of adaptive thinking. Allow us a brief tangent to break it down section by section, and then we'll explain how this all ties back to "creative mode."

- First off, Boyd argues that human survival depends on maintaining "independent action" (our ability to act freely without external constraints). We form groups (nations, corporations, etc.) only when we can't achieve our goals alone. Groups dissolve when they stop helping members achieve independent action.

- In a world of limited resources, individuals and groups constantly form, dissolve, and reform relationships to overcome obstacles. Cooperation pools skills to help everyone, while competition means some gain independent action at others' expense.

- To survive, we must repeatedly make decisions and take actions. This requires forming "mental concepts of observed reality" that can change as reality changes. These concepts become decision-models for improving our capacity for independent action.

Boyd identifies two approaches to forming mental concepts:

- General-to-specific (deduction, analysis, differentiation) - like differential calculus (we'll get into that below).

- Specific-to-general (induction, synthesis, integration) - like integral calculus (again, bare with us; we'll explain).

Here's Boyd's key insight: To create new concepts, you must first destroy old ones.

- Destructive Deduction: Shatter existing mental patterns, breaking the connection between concepts and their components. This creates "many constituents swimming in a sea of anarchy" - uncertainty and disorder.

- Creative Induction: Find common qualities among these scattered pieces to synthesize them into new concepts. The crucial point: without first destroying old patterns, you can't create new ones because the pieces remain locked in old arrangements.

- Suspicion: Boyd argues that any new concept will eventually encounter "ambiguities, uncertainties, anomalies, or apparent inconsistencies" as we try to make it more precise. Why? Because the concept was built from past observations, but new observations will always be more precise or different than old ones.

I'll spare you the super technical aspects of Boyd's paper, but he basically goes on to combine the theories of Gödel, Heisenberg, and thermodynamics to create his "Destruction and Creation" hypothesis (what he also calls "The Synthesis"): Any inward-focused effort to improve a concept's match with reality will increase mismatch.

The solution is his dialectic cycle: Structure → Unstructure → Restructure → Unstructure → Restructure...

This creates a "Dialectic Engine" that drives adaptation through alternating destruction and creation toward "higher and broader levels of elaboration."

So what does this have to do with AI hallucinations?

A few ways, actually:

- OpenAI's research shows that current AI evaluation systems are "closed systems" in Boyd's terms - they try to improve AI performance by refining within existing benchmarks. Boyd predicts this approach will generate increasing disorder (hallucinations) rather than better performance.

- Just as Boyd argues you must "shatter rigid conceptual patterns" to create new understanding, the AI field needs to destroy current evaluation frameworks to build better ones. OpenAI's proposed solution (new scoring systems) is essentially Boyd's "destructive deduction" applied to AI metrics.

- Heisenberg's "observer problem" principle appears in AI evaluation - when the evaluator (benchmark) and evaluated (AI system) become too similar in their "precision," you get uncertainty and erratic behavior. Current benchmarks can't distinguish between genuine knowledge and sophisticated guessing.

- Boyd's cycle explains why the "outsider advantage" we discussed works. Outsiders naturally engage in destructive deduction - they haven't internalized the industry's rigid patterns, so they can recombine elements in novel ways (see above and also all of history for all the countless examples of this happening).

Boyd's paper essentially provides the theoretical foundation for why both AI systems and human innovators need to balance knowledge with the willingness to destroy that knowledge when it becomes limiting. And it could actually provide a framework to do so:

Applying Boyd's Dialectic Engine to AI Creativity vs Knowledge Modes

To understand how AI could balance accuracy and creativity, we need to look at John Boyd's theory of how we form mental concepts. He identified two opposing but complementary ways of thinking.

First is General-to-Specific, a "top-down" approach. This is the process of taking something whole and breaking it down to understand its parts:

- Deduction: You start with a general rule ("all birds fly") and apply it to a specific case ("a robin is a bird") to reach a logical conclusion ("therefore, a robin flies").

- Analysis: You take apart a complex system, like a complete car engine, and break it down piece by piece to understand how the individual components (pistons, valves, spark plugs) function.

- Differentiation: You start with a function that describes the entire path of a moving object (the general journey) and you calculate its derivative to find its exact speed at one specific moment in time (a particular property).

Boyd called this process fundamentally "destructive" because it involves unstructuring—shattering a whole concept into its constituent pieces.

Second is Specific-to-General, a "bottom-up" approach. This is the process of taking scattered pieces and building them into a coherent whole:

- Induction: You observe many specific instances (every swan you've seen is white) and infer a general pattern or theory ("all swans are white").

- Synthesis: You combine separate, unrelated elements, like different musical styles, instruments, and rhythms, to create a new, coherent genre like jazz. Or keeping with the car metaphor, you are given a pile of disconnected car parts and, by understanding their relationships, you assemble them into a new, functioning whole.

- Integration: You are given the speed of an object at every single moment (many specific data points) and you integrate them to reconstruct the object's entire path over time (the general journey).

Boyd called this process fundamentally "creative" because it involves restructuring—building a new, larger pattern from smaller components.

Boyd's key insight was that true adaptation and learning require a constant cycle between these two modes: Structure → Unstructure → Restructure. This "Dialectic Engine" provides a powerful framework for designing an AI that knows when to be accurate and when to be creative.

So, putting the pieces together, here's our pitch for how OpenAI (or anyone else) could structure the difference between a "knowledge mode" and a "creative mode", whether that distinction plays out at the model layer or at the interface layer.

1. Knowledge Mode (Structure Preservation)

This is the default state of a large language model. Its "structure" is the colossal, deeply interconnected web of statistical patterns learned from its training data. In this mode, its primary goal is to preserve the integrity of that structure.

- Structure: AI acts like a really smart filing cabinet. It maintains all established relationships, keeps facts organized in their proper places, and preserves proven conceptual frameworks.

- Process: Apply deduction and analysis systematically. Take known patterns and apply them reliably to new situations without breaking what already works.

- Goal: Accuracy, consistency, and reliability. No surprises, no creative liberties, just solid dependable knowledge application.

- Example: Medical diagnosis, legal research, financial calculations—situations where you want the AI to be a careful librarian, not an inventor.

- AI Behavior: "I know how these variables typically relate based on established research, so let me apply that knowledge systematically."

2. Creative Mode (Destructive/Creative Cycle):

To be innovative, the AI must be prompted to break its own structure. This involves two steps:

Step A: Unstructure (Destructive Deduction). The AI must deliberately shatter its rigid patterns and sever the high-probability connections it has learned. It must treat established concepts not as rules, but as raw, disconnected components.

Right now, this is somewhat possible via user-driven behavior, with prompts like, "Imagine a world where gravity is a choice," which explicitly command the AI to unstructure a fundamental concept.

Or it can be developer-driven, by training a "Creative Mode" model that learns the process of breaking patterns, not just how to follow them.

Step B: Restructure (Creative Induction). Once the old patterns are broken, the AI is left with a chaotic sea of disconnected ideas ("gravity," "choice," "Shakespeare," "programmer"). The final step is to find novel connections and build a new, internally consistent reality.

In this mode, the AI operates via induction and synthesis. It takes the scattered pieces and asks, "What new, coherent whole can I build from these?"

- If gravity is a choice... maybe people float to work. Maybe the ultimate punishment is being "grounded" forever. The AI is synthesizing a new world from the broken rules of the old one.

- If Shakespeare was a programmer... maybe "To be or not to be" is a comment in his code: // TODO: Resolve existential bug. It's finding a new, common thread (logic, commentary) between two previously unrelated domains.

The goal is to create a new, plausible structure from the chaos. Maximize novelty while maintaining internal consistency within the new rules. In a way, you could call this the "inner child" mode.

On the "new rules" (one more tangent!): I once applied to some OpenAI research program they were doing (I think it was to test o3?) with the idea of how to make AI better at storytelling, which involved tracking dependences (the butterfly effect) across long contexts. Inevitably, as you write a story, you'll eventually come across something you need to change or cut out. And usually that thing doesn't show up just in one place, but it has all these dependencies in all these other places in the story.

Usually, you can tell it's a good cut to make if you cut it out and the story doesn't change at all. But sometimes, you can remove even just a single item that has a huge impact, and it has all these downstream consequences in all sorts of places throughout the story that you have to track and remove and tweak.

I think of following the new rules mentioned above a lot like this. It's not just combining things. You ask ChatGPT to help you brainstorm, and it can do plenty of sticking words together. To truly be creative, you've got to be able to take things out and add them back in and track all the dependencies that follow, following them to their natural conclusions. In a creative / destruction mindset, that would include trying a bunch of stuff, deleting it, putting it back in, and seeing what sticks. On a loop. For God knows how long.

But what would a model look like if it was trained to be able to do this type of creative destruction? Like, genuinely do it, not just imitate doing it. Do it on command. And what level of "intelligence" would be required in order to do that? Depending on the number of dependencies you were tracking in any given creative session, it could get quite computationally expansive, fast.

The Path Forward for AI Design

The ultimate goal is an AI with the meta-awareness to know which mode a situation demands. When a user asks, "What's the capital of France?", it should stay in its rigid knowledge mode. When asked, "Design a new urban transportation system," it must enter the creative cycle, first unstructuring assumptions about "cars," "roads," and "cities," and then restructuring those components into something new. Boyd’s work shows us that creation is impossible without prior destruction. You cannot build a new mental model if the building blocks are still locked into the old one.

Surely you could reverse the incentives in training to give partial credits and/or higher weighted feedback for thinking outside of the box. As to what degree you can train a single model to be strategically selective about that kinda thing (when to riff and when not to riff) remains to be seen. Perhaps it would require a system-level switch between two different models for simplicity. Training an out of the box model and a hallucination-free model separately feels like the right move to me (unless you could toggle it up or down like temperature without it devolving into nonsense). But hey, if you COULD teach a single model to do both, maybe that WOULD be AGI. As Einstein said, "the true sign of intelligence is not knowledge but imagination."

Importantly, OpenAI's research reframes the conversation around hallucinations: they are not a mysterious flaw, but a learned behavior rewarded by our current systems. The path to more trustworthy AI starts with building better yardsticks to measure it with, but the path to truly intelligent AI will require mastering this dialectic engine: knowing not just the facts, but when to tear them all down to create something better.