Remember when Google felt late to the AI party? When ChatGPT dominated headlines while Gemini played catch-up?

Well, that chapter just closed.

In this discussion between Logan Kilpatrick (DeepMind) and Koray Kavukcuoglu (CTO of DeepMind and Chief AI Architect of Google), the focus is the launch of Gemini 3. The conversation covers how DeepMind's way of working has changed, the convergence of multimodal architectures, and the roadmap for "agentic" AI.

The raw numbers look good—Gemini 3 pushes multiple frontiers simultaneously. But here's what matters more: DeepMind transformed from a pure research lab into a product-driven engineering powerhouse. They're now "co-building AGI with customers" rather than perfecting models in isolation.

Translation? The days of waiting years between breakthrough and real-world deployment are over.

Kavukcuoglu admitted Google was playing catch-up for a while. They hadn't invested enough initially. But instead of copying competitors, they innovated their way back—especially through hardware/model co-design that competitors can't easily replicate.

The result? Gemini 3 excels at instruction following (actually doing what you ask), works across 100+ languages, and handles tool use better than previous versions. Plus, there's "Nano Banana Pro" (yes, that's the real name), which leverages Gemini 3's world understanding to generate nuanced images, such as accurate infographics distilled from complex documents.

Oh, and about that name: it wasn't corporate strategy. It was an internal code name that stuck because testers on LM Arena loved it. Google decided to keep it. Sometimes the best branding happens organically.

The biggest takeaway? Progress isn't slowing down. Despite fears of hitting a plateau, Gemini 3 shows the frontier keeps expanding. And Google's betting their future on one principle: the biggest risk isn't moving too fast—it's running out of innovation.

Gemini 3 Launch & The State of AGI

- (0:00) Co-building AGI with the world: AGI is no longer a purely research effort isolated in a lab; it is now a joint effort co-built with customers and the world. The technology is defining user expectations, and user interaction is shaping the model.

- (0:35) The democratization of building: The new model capabilities enable a massive influx of new "builders." The goal isn't just code; it is to "bring anything to life."

- (1:38) Real-world testing vs. Leaderboards: While leaderboards looked great for Gemini 3, the team places higher value on "trusted testers" and pre-release phases. The true test is putting it in the hands of users to see if the "vibes" and utility match the benchmarks.

- (2:20) Progress is not slowing down: Contrary to some scaling debates, the progress from Gemini 2.5 to 3.0 indicates that the frontier is still being pushed across multiple dimensions. The rate of innovation remains consistent.

Benchmarks, Frontiers & Innovation

- (3:08) Surface area of innovation: Impactful real-world deployment increases the "surface area" of the problem space. More users create more signals and harder problems, which challenges the researchers and drives the next cycle of intelligence.

- (3:35) The Benchmark Paradox: Benchmarks are defined at a specific time to represent a challenge. As technology progresses, scores climb toward the ceiling and the benchmarks saturate. They cease to define the frontier, necessitating the creation of new benchmarks.

- (4:16) Rapid benchmark saturation: On benchmarks like HLE (Humanity's Last Exam), models started out scoring 1-2% and rapidly jumped to 40%+. Hard benchmarks like GPQA Diamond still offer resistance, but the number of "unsolved" problems is shrinking fast.

- (5:35) The ultimate metric of progress: The truest measure of progress isn't a number on a chart, but the spectrum of real-world utility—lawyers, engineers, and students using the model for tasks ranging from creative writing to complex problem-solving.

Strategic Technical Focus Areas

- (6:40) Instruction Following as a priority: A major hill-climbing focus is "instruction following." It is critical that the model doesn't just answer, but adheres strictly to the user's specific constraints and requests.

- (7:05) Internationalization is non-negotiable: For Google, reaching the entire world is a requirement, not a feature. Gemini 3 shows significant improvement in languages that have historically been difficult for LLMs.

- (7:40) Models using tools vs. models writing tools: There is a dual focus on agents: 1) models naturally using existing tools (function calls), and 2) models writing their own tools. This creates a "multiplier of intelligence."

- (8:23) Code is the fabric of digital life: Coding is emphasized not just for software engineers, but because code is the integration layer for the digital world. It allows the model to interface with anything on a laptop, facilitating the "vibe coding" shift from creative idea to productive reality.
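To make the "models using tools" idea above concrete, here is a minimal sketch of the application side of function calling: the model proposes a call by name with arguments, and the application looks up and runs the matching function, then packages the result for the next model turn. This is not the Gemini API; the model's reply is faked as a plain dictionary, and the tool names `get_weather` and `convert_currency` are hypothetical.

```python
import json
from typing import Any, Callable, Dict

# Hypothetical tools an application might expose to a model.
def get_weather(city: str) -> Dict[str, Any]:
    # Stubbed data; a real tool would call a weather service.
    return {"city": city, "temp_c": 18}

def convert_currency(amount: float, rate: float) -> float:
    return round(amount * rate, 2)

# Registry mapping the tool names the model may emit to local callables.
TOOLS: Dict[str, Callable[..., Any]] = {
    "get_weather": get_weather,
    "convert_currency": convert_currency,
}

def dispatch(call: Dict[str, Any]) -> Dict[str, Any]:
    """Run one model-proposed call and package the result for the next turn."""
    name = call["name"]
    if name not in TOOLS:
        return {"name": name, "error": f"unknown tool: {name}"}
    try:
        result = TOOLS[name](**call.get("args", {}))
    except TypeError as exc:  # e.g. wrong or missing arguments from the model
        return {"name": name, "error": str(exc)}
    return {"name": name, "result": result}

# A faked model turn; in practice this would be parsed from the model's response.
model_call = json.loads('{"name": "convert_currency", "args": {"amount": 100, "rate": 0.92}}')
print(dispatch(model_call))
```

Returning errors as data rather than raising lets the model see what went wrong and retry. The second mode mentioned in the interview, models writing their own tools, is not covered by this sketch.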

Product Scaffolding & Feedback Loops

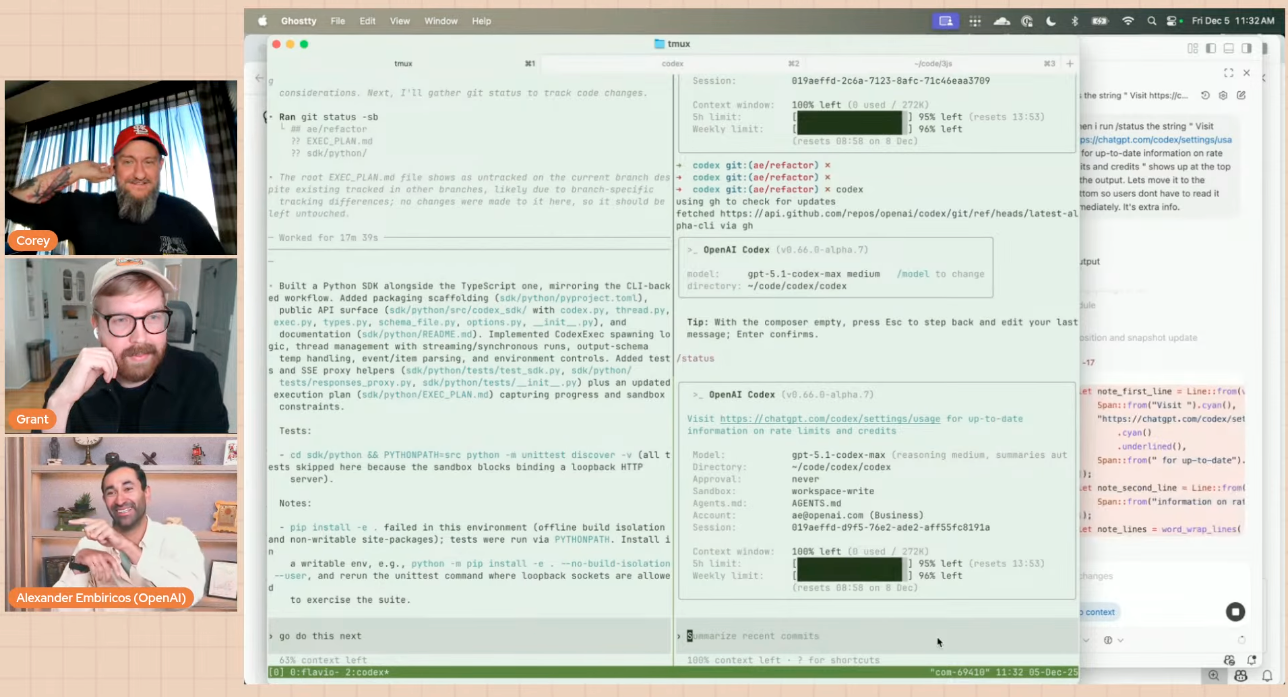

- (10:01) The "Antigravity" Feedback Loop: Products like the new Google Antigravity agentic development platform serve a dual purpose: they are products, but they are also research instruments that provide direct learning signals from software engineers to improve the model.

- (12:12) The Role of Chief AI Architect: Koray's role merges DeepMind's research with Google's products. The goal is not for researchers to become product managers, but to supply the "best technology available" to product teams, enabling them to define the new world of user expectations.

- (14:00) Adopting an Engineering Mindset: DeepMind has shifted toward a "trusted, tested system" engineering mindset. Safety and security are treated as first principles built from the ground up (during data, pre-training, and post-training), not checked at the end.

- (15:46) The "Team Google" Model: Gemini 3 is described as a "Team Google" model. It required a massive global effort, with contributors from Europe, Asia, and the US, moving beyond the scale of historical projects like the Apollo program in terms of coordination.

Future Gaps & Agentic Evolution

- (17:46) Acknowledging imperfections: Despite the launch, gaps remain. The model is not perfect in writing or coding yet. The most exciting growth area is "agentic actions," where there is still significant room for improvement.

- (19:02) Why Agentic capabilities lagged Multimodal: Google was SOTA in multimodal early on but lagged in agents. Koray attributes this to the development environment: the closer the research environment is to the "real world" (like coding environments), the faster the progress. The shift to an engineering mindset is correcting this.

Generative Media & "Nano Banana"

- (20:53) Video implies physics: A video model is not just about media; it is a critical part of AGI because understanding video requires understanding the world and physics.

- (22:34) Convergence of Architectures: Historically, image and text architectures were distinct. Now, they are converging. Ideas from one domain improve efficiency in the other, leading to models that handle input/output multimodality naturally.

- (23:47) The Story of "Nano Banana": The name "Nano Banana" wasn't a corporate strategy; it was a code name (distinct from the AI-generated "Rift Runner") that stuck because it was used during organic testing on LM Arena. The team decided to keep it because it reflected the organic connection builders felt with the model.

- (25:38) Nano Banana Pro: This is a new SOTA image generation model built on top of the Gemini 3 Pro architecture. It leverages the text model's massive "world understanding" to generate highly nuanced images, such as converting complex documents into accurate infographics.

Unified Models & Technical Challenges

- (27:41) The Unified Checkpoint Hypothesis: While the goal is a single model for everything (text, image, video), we aren't there yet. The "scientific method" dictates that you test the hypothesis; sometimes unified works, sometimes it doesn't.

- (28:17) The "Output Space" Signal Problem: A major hurdle for unified models is the learning signal. Code and text provide clear signals. Images are harder because "pixel perfectness" and "conceptual coherence" are difficult to train simultaneously with text logic.

DeepMind History & Culture

- (29:53) From 1 to All: Koray was the first deep learning researcher at DeepMind (joined 13 years ago). At the time, deep learning was not popular. The journey went from a niche belief to powering almost all Google products.

- (32:31) The Luck Factor: Koray admits to being "lucky." Success wasn't just about smart choices; it was the convergence of hardware evolution, the state of the internet/data, and talent aligning at the exact right moment in history.

- (34:29) Scaling Teams: A key cultural learning was moving from writing papers with 25 people (which was considered huge) to building models with 2,500 contributors. This required a shift in how they organize around missions.

Innovation vs. Execution

- (35:18) "Deep Think" & Exploration: DeepMind maintains a "mainline" of model development but uses offshoots for exploration. The "Deep Think" models used at the International Mathematical Olympiad (IMO) are examples of exploring risky ideas that, if successful, are folded back into the main model family.

- (38:23) The Biggest Risk is Stagnation: The biggest risk for Gemini is "running out of innovation." Koray explicitly rejects the idea that they have "figured out the recipe" and just need to scale. The architecture will likely look different in 20 years, so continuous innovation is required.

The Google "Underdog" Narrative

- (44:04) Google as the Underdog: Both Logan and Koray discuss the feeling of being "underdogs" in the LLM space over the last year. Koray admits they had to "catch up" and hadn't invested enough in certain areas previously.

- (46:36) Innovating, not Copying: To catch up, they didn't just copy competitors. They "innovated for themselves," finding unique solutions (like their hardware/model co-design) to regain a leadership position.

- (47:36) The Next 6 Months: The forecast is that the next six months will be just as exciting and fast-paced as the last six. The rhythm of release and update is now established.

Behind the Scenes: What It Actually Takes to Ship State-of-the-Art AI

While Koray explained the technical breakthroughs, CEO Sundar Pichai revealed what it actually took to get here.

Short answer? Ten years of bets that looked questionable at the time.

In a separate conversation with Logan, Sundar traced Google's AI journey back to 2016, when declaring the company "AI first" seemed premature. By then, the pieces had been falling into place for years: Google Brain's 2012 image-classification breakthrough, the 2014 DeepMind acquisition, AlphaGo in early 2016, and Google's first TPU announcement that May.

Most people missed the significance. Sundar didn't.

"It was clear to me we were about to go through another platform shift," he explained. So Google went all-in on the full stack—custom chips, massive infrastructure, unified AI teams—while competitors were still figuring out if this AI thing was real.

The catch? When generative AI exploded in 2022, Google looked flat-footed. Behind the scenes, they were frantically scaling capacity. "We were short on capacity," Sundar admitted. "If you were on the outside, it looked like we were quiet or behind. But we were putting all the building blocks in place."

Now they're on the other side. During Gemini 3's launch week, Google shipped something new every single day. Search, YouTube, Cloud, Workspace—Gemini became the through line connecting Google's entire product ecosystem.

The Nano Banana Pro moment crystallized something bigger: these models crossed the chasm from entertainment to utility. When users started generating infographics that actually compress complex information into digestible visuals, Sundar saw a preview of the future. "How much latent creativity is there in the world?" he wondered. "We're giving people tools to express what they're thinking."

And about "vibe coding" (non-engineers building functional apps through conversation with AI)? Google is seeing employees who've never written code submit their first changes for code review. A comms team member built an interactive Spanish conjugation tool for his son. In one shot.

"This is the worst it'll ever be," Sundar noted. The same way early Waymo was the worst it would ever drive, today's Gemini is the floor, not the ceiling.

What's next? Google is already planning the next decade. Quantum computing's big moment in roughly five years. TPUs in orbit by 2027 (yes, really: Project Suncatcher is a moonshot to put TPUs in space as a step toward orbital data centers). Advanced robotics. More infrastructure bets that sound crazy today but might be obvious tomorrow.

The pattern holds: make long-term bets, ignore the skeptics, execute relentlessly.

Key Insights & Strategic Perspectives

- (0:00) Gemini as the "Through Line": Gemini has become the unifying thread connecting every Google product, creating a cohesive "AI First" ecosystem rather than disparate tools.

- (0:37) The Quantum Leap Prediction: Sundar predicts that in approximately 5 years, the world will experience "breathless excitement" about quantum computing, similar to the current fervor surrounding AI.

- (1:51) The Payoff of Long-Term Foundations: The current rapid shipping cadence is only possible because of deep foundational investments made years ago; the visible progress is the result of years of "quiet" structural work.

- (2:57) Resisting the "Rat Race": Despite the hyper-competitive moment and the pressure to "hill climb 1%" on leaderboards, Sundar emphasizes the need to pull back and make long-term bets rather than just reacting to immediate industry moves.

- (3:26) The 2016 "AI First" Pivot: The decision to make Google "AI First" in 2016 was prompted by the 2012 Google Brain "Cat paper" (image classification breakthrough), the 2014 DeepMind acquisition, and the January 2016 AlphaGo moment.

- (3:51) The Overlooked TPU Milestone: Many people missed the significance of May 2016, when Google announced its first TPU (Tensor Processing Unit), signaling a full-stack bet on the coming platform shift.

- (4:38) The Generative AI "Window": The generative AI moment revealed a window much bigger than anticipated; consumers and developers were ready to use the technology at scale earlier than expected.

- (5:47) Full Stack Innovation Flow: With a full-stack approach, innovation at one layer (like pre-training) flows straight through to the products at the top; pre-training gains act as an accelerant for post-training and RL (reinforcement learning).

- (6:54) The "Quiet" Capacity Phase: Google appeared "quiet" or "behind" to the outside world because it was dealing with a fixed cost: the time required to ramp up infrastructure capacity (data centers, TPUs) to handle the scale of the generative AI moment.

Product & Technology Insights

- (7:41) The Challenge of "Sim-Shipping": The new operational hurdle isn't just building models, but "sim-shipping" (simultaneous shipping), meaning deploying state-of-the-art models across every surface (Search, Cloud, Waymo, Gmail) at the same time.

- (9:16) Innovation at Scale: Success is defined not just by Google's own shipping, but by seeing the ecosystem (Figma, Replit, Copilot) "sim-ship" alongside it using Google's models.

- (9:59) Crossing the Chasm with Infographics: The "Nano Banana Pro" model's ability to generate high-quality infographics marks a shift from "fun" AI to genuine utility, compressing information in a world that has long suffered from slide and information bloat.

- (11:07) Emergent Capabilities over Planned Features: The infographic capability wasn't explicitly engineered; it emerged as the model got better at general text rendering and reasoning.

- (14:18) The 3.0 Model Landscape: While Gemini 2.5 Pro was a significant step at I/O, competitors caught up. The goal is now a six-month cadence of pushing the frontier, though meaningful leaps are becoming harder to achieve.

- (15:27) Excitement for Flash 3.0: Sundar hints that Gemini 3.0 Flash might be "our best one yet" because of how it democratizes access to frontier intelligence for a wider user base.

Culture, Rituals & "Vibe Coding"

- (12:27) Sundar's Launch Day Barometer: On launch days, Sundar actively monitors X (Twitter) to see how "average users" are experiencing the product, preferring raw feedback over internal dashboards or QPS (queries per second) reports.

- (13:55) The "100,000 Bananas" Prank: The office culture is currently high-energy, evidenced by a mysterious prank in which 100,000 bananas were brought into the building to celebrate the "Nano Banana" moment.

- (16:40) Morning Ritual (News over Email): Sundar does not check his work email first thing in the morning. His logic: "If something interesting has happened about Google, it'll be there in the news anyway."

- (17:33) The "Blue Micro Kitchen" Anomaly: There is a specific "Blue Micro Kitchen" in the Gradient Canopy office that retains the feel of "early Google": small, intimate, and dense with talent.

- (18:15) Intimidated by Espresso Routines: Sundar admits he is too intimidated to make coffee in the Blue MK (micro kitchen) because legendary engineers like Jeff Dean and Sergey Brin execute their espresso routines with such precision.

- (23:37) "Vibe Coding" as an Economic Unlock: "Vibe coding" is viewed as one of the most economically powerful shifts in history, putting software creation within reach of non-engineers.

- (24:27) Non-Engineer Code Submissions Metric: Google is seeing a sharp internal increase in the number of employees who are not software engineers submitting their first CLs (changelists, Google's term for code changes sent for review) thanks to AI tools.

- (25:01) The "One-Shot" Education Story: A comms team member (a non-coder) used Gemini 3 to "one-shot" an animated HTML page to teach his son Spanish conjugation, a prime example of latent creativity being unlocked.

- (25:58) The "Worst It Will Ever Be" Philosophy: Sundar applies his Waymo philosophy to AI coding tools: "Remember, this is the worst it will ever be." The tools are amazing today and also the baseline for future improvement.

Moonshots & Future Bets

- (21:21) Waymo's Inflection Point: Waymo is cited as validation of long bets; it took a long time, but it is now hitting a clear inflection point in viability.

- (21:54) Project Suncatcher (Data Centers in Space): Google recently announced a moonshot to build data centers in space to address future compute constraints. The team is working backward from its 2027 milestones.

- (22:21) TPUs Meeting Teslas in Orbit: Sundar jokes that by 2027, a Google TPU in space might cross paths with the Tesla Roadster currently in orbit.

- (22:49) The Moon Rover Marketing Idea: Sundar pitched Demis Hassabis on funding a moon rover equipped with on-device Gemini to explore the moon, primarily as a marketing campaign.

- (27:02) NotebookLM's Cult Following: NotebookLM is highlighted as having a passionate, growing community, with especially deep traction among journalists and PhD students for research.

And one last thing before you leave... Google published the full documentary, The Thinking Game. If you're curious how DeepMind went from "Google's underdog AI lab" to the team behind AlphaFold and Gemini, there's now a documentary that shows exactly how it happened.

The Thinking Game spent five years following Demis Hassabis and the DeepMind team from beating world champions at Go to solving the 50-year-old protein folding problem. It's the origin story for everything you just read about Gemini 3—the culture shift, the engineering mindset, the pressure to prove AI could do more than play games.

Best part? It's free to watch on YouTube. Perfect holiday weekend viewing if you want to understand where Google's AI confidence actually came from.

Here are our favorite parts from the documentary.

The Vision and Urgency of AGI

- (01:53) The Finite Timeline for AGI: Demis Hassabis expresses a deep restlessness regarding the timeline of his life versus his work. He argues that if one takes the journey to build AGI seriously, "there isn't a lot of time. Life's very short."

- (02:12) The "Ultimate Tool" Thesis: Hassabis's life goal is not just to create intelligence, but to use AI as the "ultimate tool" to solve the world's most complex scientific problems (like disease and energy), rather than just for consumer tech.

- (02:25) Impact Scale: He predicts the advent of AGI will be more significant than the Internet or mobile technology, comparing its magnitude instead to the discovery of electricity or fire.

- (03:49) The Neuroscience Benchmark: Hassabis argues that the human brain is the "only existence proof" in the universe that general intelligence is possible, which drove his initial decision to study neuroscience to find inspiration for AI architecture.

- (07:29) Defining the Mission: DeepMind's specific mission was to build a "general learning machine." Co-founder Shane Legg emphasizes that the "G" in AGI stands for generality: a system that doesn't just do one specific thing but can learn to do many things, mimicking human cognitive flexibility.

Origins, Funding, and Structural Decisions

- (04:50) AI as a "Dirty Word": In the early academic days, Hassabis and Legg felt like "keepers of a secret." At the time, using the word "AI" in academia was embarrassing and signaled you weren't a serious scientist, which pushed them to start a company instead.

- (05:44) The "Unpitchable" Pitch: Early funding was difficult because they were pitching a scientific Manhattan Project without a product. They had to tell investors, "We're going to solve all of intelligence," which resulted in a 99% rejection rate from VCs looking for immediate revenue models.

- (06:23) Investment as a Lottery Ticket: Shane Legg notes that early investors didn't invest based on sound financial decisions, but because they thought it was "cool," acknowledging it was like buying a lottery ticket for the "biggest thing ever."

- (06:40) The Peter Thiel Contrarian Thesis: One of their first major investors, Peter Thiel, operated on a specific heuristic: If everyone says "X" is true, he suspects the opposite is possible. DeepMind was an outlier that fit this contrarian model.

- (06:52) London vs. Silicon Valley: Hassabis insisted on keeping the company in London to avoid the short-term "pivot" culture of Silicon Valley. He believed a long-term research challenge required insulation from the startup culture where companies are discarded if they don't work immediately.

- (14:07) Acquisition Conditions: When Google acquired DeepMind, they negotiated to remain in London to protect their "breakthrough-optimized" culture. They also established ethical red lines, specifically that the technology would not be used for military surveillance.

Game Mastery: From Atari to AlphaGo

- (09:16) Games as Research Sandboxes: DeepMind decided that video games were the perfect training ground for AI because they provide safe, constrained environments with clear reward signals, allowing for rapid iteration before moving to the real world.

- (09:56) The DQN Invention: With DQN (Deep Q-Network), DeepMind was the first to combine "reinforcement learning" (learning by trial and error) with "deep learning" (neural networks) at scale.

- (11:41) The "Tunneling" Insight: In the game Breakout, the AI was not taught strategy. After 300 games, it was human-level. After 500 games, it discovered the optimal strategy was to "dig a tunnel" through the side of the wall to trap the ball at the top—a strategy the researchers hadn't explicitly programmed.

- (12:05) Generalization Proof: The true breakthrough wasn't playing one game, but creating a single "recipe" (algorithm) that could be dropped into 50 different Atari games it had never seen and achieve superhuman levels on most of them without rule changes.

- (15:04) Go vs. The Universe: The game of Go was chosen as the "holy grail" because the number of possible board configurations exceeds the number of atoms in the universe, making it impossible to solve via brute force calculation.

- (17:39) The "Move 37" Moment: During the match against Lee Sedol, AlphaGo played "Move 37," a move that professional commentators claimed no human would ever play. AlphaGo estimated the probability of a human playing that move at 1 in 10,000, yet played it anyway, which many took as evidence that it had developed original creativity.

- (19:47) The "Sputnik Moment" for China: The matches against Lee Sedol and later Ke Jie served as a "Sputnik moment" for China. The government ordered the live stream of the Ke Jie match cut, and a massive national AI race followed.
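The DQN bullet above compresses the key idea into one line. At its core, DQN trains a neural network Q(s, a) toward a bootstrapped target r + γ · max over a' of Q_target(s', a'), using the reward alone for terminal transitions. The sketch below computes those targets and the mean squared TD error for a tiny batch. It is a simplified illustration under stated assumptions: the Q-values are hand-written lists standing in for network outputs, γ = 0.99 is an assumed (commonly used) discount, and the network, replay buffer, and optimizer are omitted.

```python
from typing import List, Sequence

def td_targets(rewards: Sequence[float],
               next_q: Sequence[Sequence[float]],
               done: Sequence[bool],
               gamma: float = 0.99) -> List[float]:
    """Bootstrapped targets y = r + gamma * max_a' Q_target(s', a').

    next_q[i] holds the target network's action values for the next state of
    transition i; terminal transitions (done[i] is True) use the reward alone.
    """
    return [r if d else r + gamma * max(q)
            for r, q, d in zip(rewards, next_q, done)]

def td_loss(q_taken: Sequence[float], targets: Sequence[float]) -> float:
    """Mean squared error between Q(s, a) for the actions taken and the targets."""
    return sum((y - q) ** 2 for q, y in zip(q_taken, targets)) / len(targets)

# Toy batch of two transitions (illustrative values, not from any real run).
rewards = [1.0, 0.0]
next_q = [[0.5, 2.0, 1.0], [3.0, 0.0, 0.0]]  # target-network values for s'
done = [False, True]
q_taken = [2.5, 0.5]  # online-network Q(s, a) for the actions actually taken

targets = td_targets(rewards, next_q, done)
print(targets, td_loss(q_taken, targets))
```

The "deep" part is that Q is a neural network over raw pixels; the "reinforcement" part is that its training targets come from the agent's own experience rather than labeled data.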
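The Go versus atoms comparison in this section can be checked with back-of-the-envelope arithmetic. Each of the 19 × 19 = 361 points is empty, black, or white, so 3^361 is a simple upper bound on board configurations; it is compared here against the commonly cited estimate of roughly 10^80 atoms in the observable universe. The exact number of legal positions is smaller, about 2.1 × 10^170, but still vastly larger than the atom estimate.

```python
import math

POINTS = 19 * 19            # intersections on a standard Go board
upper_bound = 3 ** POINTS   # each point: empty, black, or white (exact integer)
ATOMS = 10 ** 80            # order-of-magnitude estimate for the observable universe

digits = len(str(upper_bound))
# log10(3^361 / 10^80): how many orders of magnitude the bound exceeds the estimate
orders_larger = POINTS * math.log10(3) - 80

print(f"3^{POINTS} has {digits} digits")
print(f"about 10^{orders_larger:.0f} times the atom estimate")
```

A gap of roughly 92 orders of magnitude is why brute-force enumeration is hopeless, and why AlphaGo instead used learned policy and value networks to guide its search.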

AlphaZero, AlphaStar, and The "Black Box"

- (21:06) Removing Human Knowledge: The transition from AlphaGo to AlphaZero marked a paradigm shift. AlphaZero stripped out all human data (opening books, famous games) and learned entirely from scratch (Tabula Rasa) by playing against itself, becoming its own teacher.

- (21:55) Compressed Evolution: AlphaZero could start playing chess randomly in the morning and by dinner time become the strongest chess entity in history, effectively compressing thousands of years of human chess knowledge into a few hours.

- (26:16) Demis's Chess Epiphany: As a child prodigy, Hassabis realized that while chess is a "good thinking game," it is ultimately a closed loop. He felt a sense of waste seeing hundreds of brilliant minds in a tournament hall expending brainpower on a game rather than solving real-world issues like cancer.

- (31:08) StarCraft as the Next Frontier: StarCraft represented a harder challenge than Chess or Go because it involves "imperfect information" (fog of war) and continuous time (real-time decisions rather than turn-based), requiring long-term planning and bluffing.

- (34:32) The Military Dual-Use Debate: The capability of AlphaStar to command units with superhuman efficiency raised immediate ethical concerns. DeepMind researchers acknowledge that "you can't look at gunpowder and only make a firecracker," admitting the inherent dual-use nature of strategic AI for military applications.

The Pivot to Science: AlphaFold

- (46:32) The Protein Folding Problem: The documentary frames proteins as the "machines of life." The 50-year-old "grand challenge" was predicting a protein's 3D structure based solely on its 1D string of amino acids.

- (50:18) Data Scarcity in Science: Unlike games where an AI can generate infinite data (self-play), scientific problems like protein folding are constrained by expensive, limited experimental data. This required a fundamental shift in how they trained their models.

- (57:06) The Failure of CASP 13: While DeepMind won their first protein competition (CASP 13), they failed to solve the problem "in a sense that a biologist could use it." This highlighted the gap between winning a leaderboard and creating scientific utility.

- (58:43) Timing is Everything: Hassabis reflects on the failure, noting that "ambition is a good thing, but you need to get the timing right." Being 50 years ahead of your time leads to failure; the goal is to be right at the cusp of technological capability.

- (1:07:45) The "Strike Team" Approach: To finally crack the problem for CASP 14, DeepMind moved from pure ML engineers to a multidisciplinary "strike team" that included physicists and biologists, debating whether to force the AI to obey physics or let it learn pure patterns.

- (1:15:18) The "Index Moment" Decision: Once AlphaFold worked, the team realized that rather than creating a service where scientists submit proteins one by one, they should just "fold everything" (200 million+ known proteins) and release the entire database as a "gift to humanity."

Future Outlook, Safety, and Philosophy

- (1:00:09) The Gorilla/Einstein Analogy: Stuart Russell uses an analogy for AGI safety: asking a human to imagine what a superintelligence will do is like asking a gorilla to understand Einstein's Theory of Relativity—it is cognitively impossible for the lesser intelligence to predict the output of the greater one.

- (1:01:28) The Shift in Burden of Proof: Legg notes a reversal: Initially, they had to convince the world they could build intelligence. Now, they are worried they might build systems that are too intelligent and are trying to convince the world (and themselves) that the systems are still safe/limited.

- (1:03:37) The History Split: The documentary suggests AGI will divide human history into two distinct eras: "the part up to that point and the part after that point," reinventing civilization entirely.

- (1:06:45) The "Alien Invasion" Coordination: Russell argues that if we received an email that an advanced alien civilization was arriving, nations would coordinate immediately. AGI represents a similar arrival of a superior intelligence, yet humanity is failing to coordinate globally on safety.

- (1:19:50) Stewardship: The film concludes with the sentiment that the next generation will live in a radically different world, and the current generation's responsibility is to "steward" the transition responsibly, as technology is not neutral but embeds the values of its creators.