Markets tested the AI faith yesterday… here’s Meta’s shake‑up, the new DeepSeek drama, and Altman’s “bubble vs. trillions” paradox, explained.

Is AI a bubble? Or the most important thing to happen in human history? Is it a bad business model—or the last business model? We’ve been chewing on this (as has the world) lately because, frankly, it’s the biggest question in tech right now (slash, maybe also the world??).

First, what happened on Wednesday?

In three words? Tech wobbled again. Here's the day in brief:

- The S&P 500 and Nasdaq slipped a bit as investors cooled on crowded AI trades after an MIT analysis claimed 95% of generative‑AI pilots aren’t moving the P&L.

- Pair that with pre‑Jackson Hole nerves (the annual Fed symposium where Chair Jerome Powell's speech is parsed for hints on whether interest rates will be cut) and you get an AI‑led stock market pullback: the Nasdaq's second straight daily decline.

- Meta also became part of the story. The company reorganized its Superintelligence Labs into four teams, framed as a bid to accelerate toward “superintelligence” while also downsizing its AI department. Whether you read it as renewed focus or pool noodle flailing about, it kept mega‑cap AI nerves on edge.

- DeepSeek’s new release didn’t help. On the one hand, reporting says the “Whale Bros” delayed a new model amid Chinese chip constraints; on the other, a V3.1 update fueled roadmap speculation. Mixed signals, perfectly timed for jitters!

- Then there’s Altman’s paradox. In back‑to‑back interviews, Sam Altman said AI is in a bubble, and in the same breath, projected trillions in data‑center spend “in the not very distant future.” Call that ROI rubber meeting a road paved in data center CAPEX.

Now, on that last point, OpenAI CFO Sarah Friar later clarified Sam's comments, saying he meant some individual investments won't pan out, but emphasized AI is 'the biggest era we've seen to date.' She backed this up with numbers: developer usage up 50% week over week, reasoning up 8x, and OpenAI's search share doubling from 6% to 12% in just six months.

Why this matters (beyond one red day):

Because AI infrastructure kinda IS the economy right now. Here's what that actually means: AI data centers are adding between $93 billion and $163 billion to America's $23 trillion economy this year. Some estimates put the boost even higher in early 2025. Without AI spending, US growth would be nearly flat. Translation: AI isn't just part of the economy, it's doing the heavy lifting right now.
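For scale, here is a back-of-the-envelope check using only the figures quoted above: the $93B to $163B range and the $23 trillion base. The text doesn't say whether that base is nominal or inflation-adjusted GDP, so treat the percentages as rough. The constant and function names are illustrative.

```python
# Back-of-the-envelope: AI data-center contribution as a share of US GDP,
# using only the figures quoted in the text (all in billions of dollars).
US_GDP_BILLIONS = 23_000               # the "$23 trillion economy"
AI_CONTRIBUTION_BILLIONS = (93, 163)   # low and high estimates


def share_of_gdp(contribution: float, gdp: float = US_GDP_BILLIONS) -> float:
    """Return a dollar contribution as a percentage of GDP."""
    return 100 * contribution / gdp


low, high = (share_of_gdp(x) for x in AI_CONTRIBUTION_BILLIONS)
print(f"AI data centers: {low:.2f}% to {high:.2f}% of GDP")
# prints "AI data centers: 0.40% to 0.71% of GDP"
```

That is a small slice of total output. This is why the claim above is about *growth* (the change in output from one year to the next) rather than the *level* of output.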

And there are A LOT of macroeconomic headwinds against the US right now:

- Global tariffs and high interest rates (and their impact).

- China’s rise as a technology innovator and energy producer.

- Ray Dalio’s observations on big debt cycles and the changing world order coming to a head (which explains why the US is using tariff revenue to pay down the national debt).

It's low-key a ton of stuff! So riding on the shoulders of Sam Altman, Jensen Huang, the Mag 7, and their magical machine intelligence are the hopes and dreams of the entire U.S. economy, and maybe the entire U.S.-led global order, which is probably all a bit overwhelming for them. No wonder GPT-5 disappointed under the weight of its own expectations.

So are we at “peak AI hype”?

Engineer‑investor Gergely Orosz thinks so, citing eyebrow‑raising deals like AI in mattresses and jewelry. The receipts: Eight Sleep raised $100M and says it’s building an AI sleep agent; in jewelry, BLNG AI raised $3M and Gemist raised $6M to push AI‑assisted design.

François Chollet also thinks so, and he has data to back it up. He pointed to a recent Stanford/World Bank study showing AI adoption hit 45.9% of US workers by July 2025, yet labor productivity growth is lower now than it was in 2020.

To be fair, Ethan Mollick pushed back on this, pointing out that controlled studies he has been part of consistently show 20-30% productivity gains across fields from consulting to coding. The disconnect? "Translating gains to the organizational level takes time, and leadership," Mollick argued in a blog post on the subject, suggesting the macro effects just haven't materialized yet.

That may be because management doesn't want to automate its own roles, which was one of the key findings of the MIT study.

So which is it? Is AI a massive productivity driver the likes of which the world has never seen, or an endless money pit that's threatening to take us all down with it?

Two compelling, and diametrically opposed, arguments from Anthropic CEO Dario Amodei and tech critic Ed Zitron frame the central debate about the future of AI. One sees a powerful, hidden profit engine; the other, a meticulously constructed money trap.

Profit Engine or Money Trap? The Multi-Billion Dollar Debate Over AI’s Business Model

The generative AI industry is defined by dizzying numbers: billions in funding, exponential revenue growth, and colossal operating expenses. But is this financial whirlwind building a sustainable new sector of the economy, or is it a "subprime" bubble fueled by hype and doomed to collapse?

The Bull Case: Dario Amodei's Billion-Dollar Reframe

In a recent detailed interview, Dario Amodei presented a radical reframing of AI economics. He argues that the perception of AI labs as cash-burning furnaces is an accounting illusion. Anthropic itself is "well past $4 billion" in annual recurring revenue, a figure achieved with stunning speed. The key, Amodei insists, is to stop looking at the company's overall P&L.

He laid out a hypothetical scenario:

- Year 1: You spend $100 million to train a new AI model. On your profit and loss (P&L) statement, you're down $100 million.

- Year 2: That model starts generating revenue, say $200 million. But at the same time, the scaling laws demand you train a more powerful successor, which costs $1 billion. Your P&L now shows a loss of $800 million.

- Year 3: The second model brings in $2 billion in revenue, but you're already investing $10 billion to train the next generation. Now you’ve “lost” $8 billion.
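Amodei's hypothetical can be reproduced in a few lines. This sketch assumes, as his example does, that each year's revenue comes entirely from the previous year's model and that the training bill is the only cost (inference, salaries, and everything else are ignored). The variable and function names are illustrative.

```python
# Amodei's hypothetical: each year, revenue from the previous model is booked
# alongside the training cost of the next, larger model (figures in $M).
# Simplification: training is the only cost; inference and overhead are ignored.
training_cost = {1: 100, 2: 1_000, 3: 10_000}  # spent on the model trained that year
revenue = {1: 0, 2: 200, 3: 2_000}             # earned by the prior year's model


def company_pnl(year: int) -> int:
    """Conventional company-wide P&L for one year: revenue minus training spend."""
    return revenue[year] - training_cost[year]


for year in sorted(training_cost):
    print(f"Year {year}: P&L = {company_pnl(year):+,}M")
# Year 1: P&L = -100M
# Year 2: P&L = -800M
# Year 3: P&L = -8,000M
```

The reported loss grows tenfold each year, even though each model whose revenue appears in the example brings in twice what it cost to train. That is the pattern Amodei says "looks like it's getting worse and worse."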

"If you look in a conventional way at the profit and loss of the company," Amodei said, "it looks like it's getting worse and worse."

This is where his core insight comes in. Instead of looking at the lab as a single entity, he proposes two powerful analogies.

Analogy 1: Every Model Is Its Own Company

The first, and most clarifying, analogy is to treat each major model generation as its own, separate company.

Take the previous example: "Let's say in 2023, you train a model that costs $100 million," he explained. "And then you deploy it in 2024 and it makes $200 million of revenue." In isolation, this is a highly successful venture.

The problem—and the source of the "money pit" narrative—is that the profits from this successful "company" are immediately dwarfed by the massive R&D investment in the next one:

- While Model A is generating its $200 million, the lab is already spending $1 billion to train Model B.

- The parent company's books show a huge loss, but this masks the reality that the underlying business of selling the model's intelligence is fundamentally sound.

- "The ever-growing CapEx is masking the underlying quality of the model businesses," Amodei states.

"If you consider each model to be a company, the model that was trained in 2023 was profitable," Amodei explained. "You paid $100 million and then it made $200 million of revenue." Even factoring in the cost of running the model (inference), the unit economics are solid. He noted that the payback period on these massive R&D investments is incredibly attractive—qualitatively in the range of 9 to 12 months. For any investor, that's a bet you take all day long.

The staggering losses only appear because while you’re reaping the rewards from one successful "company" (Claude 3), you're simultaneously funding the creation of a much larger, more expensive startup (Claude 4). "The usual pattern of venture-backed investment... is kind of happening over and over again in this field within the same companies," he said.
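Here is the same example viewed Amodei's way, with one model treated as its own "company." The gross-margin parameter is a purely hypothetical assumption (the interview doesn't give one). It's there to show how inference costs stretch a payback period, not to estimate Anthropic's actual economics. `ModelCompany` and its fields are illustrative names.

```python
from dataclasses import dataclass


@dataclass
class ModelCompany:
    """One model generation treated as a standalone business (figures in $M)."""
    training_cost: float
    annual_revenue: float
    gross_margin: float  # HYPOTHETICAL: share of revenue left after inference costs

    def first_year_profit(self) -> float:
        """Gross profit from one year of deployment, minus the training bill."""
        return self.annual_revenue * self.gross_margin - self.training_cost

    def payback_months(self) -> float:
        """Months of gross profit needed to recoup the training cost."""
        monthly_gross_profit = self.annual_revenue * self.gross_margin / 12
        return self.training_cost / monthly_gross_profit


# The "2023 model": $100M to train, $200M of revenue once deployed.
for margin in (1.0, 0.5):  # 1.0 ignores inference; 0.5 is a made-up illustration
    model = ModelCompany(training_cost=100, annual_revenue=200, gross_margin=margin)
    print(f"margin {margin:.0%}: profit {model.first_year_profit():+.0f}M, "
          f"payback {model.payback_months():.1f} months")
```

Ignoring inference, the model pays for itself in six months. At the invented 50% margin it only breaks even after a full year. That happens to sit at the top of Amodei's 9-to-12-month range, but because the margin is made up, read it as an illustration of the mechanics rather than an estimate.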

In Amodei's telling, this cycle of reinvesting profits into ever-more-ambitious projects continues until the scaling curve flattens or progress halts. Until then, the underlying business of each model is masked by the aggressive R&D spend on the next one.

Analogy 2: AI Labs Are Like Pharma Companies

Amodei’s second analogy likens AI to a hyper-accelerated pharmaceutical industry. Training a new frontier model is like embarking on a massive, R&D-heavy drug development program, where each successful drug immediately funds the development of ten more expensive, more ambitious ones. It requires a huge upfront investment to discover something new, with the hope of a significant payoff if the "drug" is effective.

"It's almost like a drug company, where it's like you develop one drug, and then if that works, you develop 10 drugs, and then if that works, you develop 100 drugs," he said. The AI industry is simply running this playbook on an exponential curve.

The payback on a model's training cost, he says, is qualitatively similar to a 9-12 month payback on customer acquisition—a golden metric in the software world. In this view, AI is not a bubble; it's an incredibly robust economic engine caught in an unprecedented cycle of reinvestment and exponential growth.

OpenAI's CFO echoes this optimism, comparing the AI buildout to 'railroads or electricity' rather than a sugar rush, and noting that OpenAI is 'constantly under compute' despite its own massive infrastructure investments.

The Bear Case: Ed Zitron's "Subprime AI Crisis"

Journalist and tech critic Ed Zitron offers a starkly different diagnosis. In a series of scathing essays, he labels the AI ecosystem a "money trap" and warns of a looming "subprime AI crisis." His argument dismantles the bullish narrative piece by piece, starting with the very foundation: profitability.

Zitron contends that companies like OpenAI and Anthropic are "deeply unprofitable," losing money on virtually every customer, including their paying subscribers. The raw cost of compute for training and inference is so astronomical that the current business models are fundamentally unsustainable. He argues the entire industry is built on a "subprime" premise: providing services at a massive, venture-subsidized discount. Sooner or later, the "toxic burn-rate" will force these companies to raise prices dramatically, revealing the true, untenable cost of their technology and causing a market-wide shock.

He is also intensely skeptical of the revenue figures that labs selectively leak to the press. He points to inconsistencies in reported "annualized recurring revenue" (ARR) numbers from both OpenAI and Anthropic as evidence that these are unreliable marketing tools, not sound financial metrics.

And Zitron also questions the technology's actual utility.

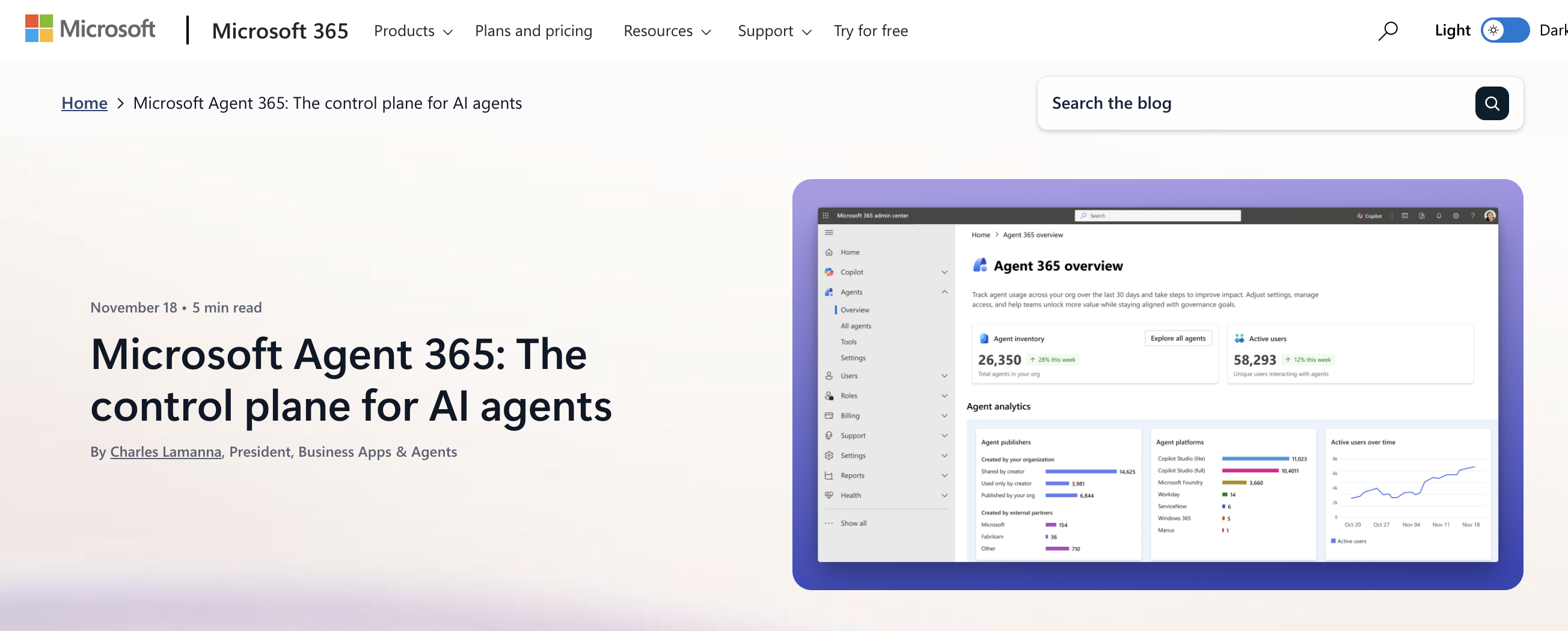

He argues that for all the hype about revolutionizing industries, real-world enterprise adoption remains weak. He highlights the lackluster uptake of Microsoft 365 Copilot as a key example: customers are unwilling to pay a premium for features that are "inessential" and often unreliable. He dismisses much of the current capability as a "useless parlor trick" and calls the dream of "agentic AI" a marketing fiction for what are still just glorified chatbots.

Finally, Zitron sees no viable way out for the ecosystem. The AI labs are too cash-strapped to acquire the "wrapper" startups built on their platforms, and those startups are themselves too unprofitable to go public or find other buyers. This, he concludes, makes the entire space a fragile bubble, trapping capital in a system with no sustainable exit path.

And as Wednesday showed, these fears have somewhat materialized in the public markets as well, culminating in the one-two punch of Meta's AI downsizing and MIT's study on generative AI impact.

Two Futures Collide...

The chasm between these two viewpoints defines the central tension in the AI industry today. Amodei's "models-as-companies" framework paints a picture of a healthy, innovative industry whose immense ambition simply masks its underlying strength. If he is right, the path forward is to build "AGI-pilled" products that ride the exponential wave of capability.

If Zitron is right, however, the industry is built on a foundation of sand. The current boom is an illusion sustained by cheap capital and misleading metrics, destined for a painful correction that will ripple through the tech world. The coming months will be critical in revealing which of these two visions of the future is closer to the truth. For now, the multi-billion dollar question remains: is AI a revolutionary profit engine or a brilliantly marketed money trap?

Our read...

The MIT result is a needed cold shower on enterprise ROI, and it coexists with a real-economy buildout in compute, power, and land. Investors are trying to square two truths: much of today's GenAI software isn't yet moving P&L at scale, and infrastructure spending is propping up growth. Both can be true. But if so, what do you do?

First, take a sanity check. Don't reason only from a price change. A two-day slide doesn't fully diagnose AI demand vs. supply; prices need quantities. Two near-term “quantity” reads will help: NVIDIA's fiscal Q2 results on Aug 27 at 2:00pm PT, and Powell's tone during his Jackson Hole speech on Friday.

After both of those events, we'll have a clearer read on whether any so-called "AI winter" is upon us.

May we remind you that roughly every quarter or two, right around NVIDIA earnings, there's a nice little tech sell-off that may or may not be the opening trumpet blast of an AI winter. So far, every time, it's been just that: a minor sell-off before NVIDIA's earnings rally the troops back in line. We'll see if that's how things go this time around. It's a coin toss as far as we're concerned.

Here's what really matters...

Can AI actually deliver? Steve Newman just shared 35 thoughts on AGI in a brilliant essay (listicle??) that poses a lot of great questions, framed as observations, about the future of AGI and where things go from here. Hands down, the best insight he shared was his focus on "sample efficiency": how little data or practice a system (be it human or AI) needs to pick up a new skill. Steve argues sample efficiency is probably the most important aspect of intelligence, because it quite literally determines the pace of learning. And the faster you learn, the more you can get done.

No matter where AI development goes from here, be it scaled up to the high heavens with “trillions of compute,” trained endlessly in RL environments that simulate new data for any possible task (like the Hyperbolic Time Chamber, but for AI), or ruthlessly whittled down to the most efficient architecture possible, the core capability AI will need to master is continuous, sample-efficient learning: how do you learn something (or really, learn ANYTHING) as efficiently as possible, and then retain and build on all that context throughout a month-long (or multi-month) project?

Those are the kinds of skills an AI agent will need to actually start replacing labor (and justify the costs). So the argument goes: if AI can't learn efficiently enough to justify these valuations, bubble concerns are valid. If it can... then we're still early, people.

Ethan Mollick called out the extreme duality of these two takes, too. In his view, the wild swings on X between "insane hype" and "it's over" miss the forest for the trees: zoom out a year and you see "continuing progress on meaningful benchmarks at a fairly stable, exponential pace." The scaling laws do predict diminishing returns (roughly 10x compute for 20% gains), but that's still exponential progress. It's just happening on a timeline that doesn't match Twitter's attention span.

He says to look at any major benchmark (ARC-AGI, Humanity's Last Exam, METR's task-completion-time research) and you see the same pattern. Compare month to month? Things look flat. Compare year over year? We're absolutely flying.
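The "10x compute for 20% gains" rule of thumb describes a power law. This is a minimal sketch that assumes a benchmark score scaling as compute raised to a fixed exponent. That is a simplification: published scaling laws are usually stated in terms of loss, not benchmark scores. The sketch shows why diminishing returns per unit of compute can still look like steady exponential progress over time. The function name and the 10x-per-year compute growth are hypothetical.

```python
import math

# Assumption: score is proportional to compute**ALPHA, calibrated so that
# 10x compute gives a 1.2x score (the "20% gain").
ALPHA = math.log10(1.2)  # roughly 0.079


def score_multiplier(compute_multiplier: float) -> float:
    """Relative score gain for a given relative increase in compute."""
    return compute_multiplier ** ALPHA


# Diminishing returns: each extra 10x of compute buys the same 1.2x, so
# 1,000x compute yields only 1.2**3, about 1.73x.
print(f"1,000x compute -> {score_multiplier(1_000):.2f}x score")

# HYPOTHETICAL: if compute grows 10x every year, the score still compounds
# by a constant 1.2x per year, i.e. steady exponential progress over time.
for year in range(1, 4):
    print(f"year {year}: {score_multiplier(10 ** year):.2f}x")
```

Month to month, a 1.2x-per-year curve barely moves. Year over year, it compounds, which is the gap between "flat" and "flying."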

And those benchmarks matter because they measure exactly what Newman's talking about: how efficiently AI systems learn and solve novel problems. Take ARC-AGI, which specifically tests sample efficiency, i.e., how quickly AI can learn new skills from minimal examples. Or Humanity's Last Exam, whose expert-level questions stump even the best models (which score under 30%), though scores are expected to hit 50% by year's end. Most tellingly, METR's task-completion-time research shows that the length of tasks AI can handle has been doubling every seven months since 2019.
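A doubling time converts into growth rates with simple arithmetic. This sketch assumes the seven-month doubling holds steadily, which is a trend extrapolation, not a guarantee. It computes the implied growth in task length per year and over a longer span. The function name is illustrative.

```python
DOUBLING_MONTHS = 7  # METR's reported doubling time for task length


def growth_factor(months: float, doubling_months: float = DOUBLING_MONTHS) -> float:
    """How much the manageable task length multiplies over `months`,
    assuming a constant doubling time."""
    return 2 ** (months / doubling_months)


print(f"per year: {growth_factor(12):.2f}x")             # 2**(12/7)
print(f"over six years (72 months): {growth_factor(72):,.0f}x")  # 2**(72/7)
```

A single doubling over seven months looks modest from one month to the next. Compounded, it works out to a bit more than 3x per year and over a thousandfold across six years.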

Well, actually, that last example is the very problem Steve says needs fixing: the task-doubling benchmark doesn't accurately test the real-world things real folks need to do. Which brings us back to the original problem of 95% of genAI pilots failing to have a meaningful financial impact. If all AI can do is solve the "middle-to-middle" problem (handling the middle of a task while humans still have to set it up and check the result), then it might save us humans time, but it also creates a lot more work for us to manage. It turns us all into managers of AI agents instead of automating the managers, which is what the study suggests would be economically effective.

Now, there is a benchmark that tries to measure this. It's called OdysseyBench, and it tests AI on real-world office workflows: the kind where you need to jump between Word, Excel, and email over multiple days to actually get something done. The results? Humans score 91%+. The best AI models? Around 54%. Even worse, when tasks require juggling three apps instead of one, AI performance drops roughly threefold.

This is exactly what Steve Newman and METR's new findings are talking about. AI can nail isolated tasks—summarize this document, write that email, update this spreadsheet. But string them together into a five-day project with partial context and messy handoffs? The wheels fall off. The top failure modes are hilariously basic: forgetting to read files, skipping subtasks, using the wrong tool, or just... not planning before acting.
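One simple way to see why chaining steps hurts so much: if each step succeeds independently with the same probability, the chance of finishing the whole workflow is that probability raised to the number of steps. Both the independence assumption and the per-step rates below are illustrative only, not figures from OdysseyBench or METR. The function name is hypothetical.

```python
def workflow_success(per_step_success: float, steps: int) -> float:
    """Probability that every step of a workflow succeeds, assuming
    independent steps with identical success rates (a simplification)."""
    return per_step_success ** steps


# HYPOTHETICAL per-step reliabilities; real failures are rarely independent.
for p in (0.99, 0.95, 0.90):
    chain = ", ".join(f"{n} steps: {workflow_success(p, n):.0%}" for n in (1, 10, 50))
    print(f"per-step {p:.0%} -> {chain}")
```

Under these assumptions, even a step that works 95% of the time leaves only about a 60% chance of getting through ten of them cleanly. That is why multi-day, multi-app projects expose failures that single-task demos hide.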

As one recent analysis points out, this isn't a compute problem—it's the absence of a 'language protocol' for workflows. AI treats each step like an isolated mirror reflection rather than understanding the actual structure of work.

Call it the sample efficiency problem, the workflow problem, or just "AI can't keep its you-know-what together across multiple apps", but it's THE bottleneck keeping us from Altman's trillion-dollar future. Fix that, though, and the entire game changes.

I think we all know what happens if AI winter strikes and the bubble bursts. But what happens if AI resolves these "engineering" problems as Dario calls them, and actually teaches itself to do continuous, sample-efficient learning?

A new METR pilot study just dropped some wild numbers on this exact question. They asked AI experts and superforecasters: if AI matches top human researchers on month-long projects by 2027, what happens next?

The experts gave a 20% chance we'd see a 3x acceleration in AI progress—imagine the past three years of improvements (from ChatGPT to GPT-5) happening every single year. The superforecasters were more skeptical, at 8%, but both groups thought if AI does hit those benchmarks, acceleration becomes way more likely.

Where they violently disagree? What happens after. Experts think there's an 18% chance of 'extreme societal events' (think: global energy consumption doubling or halving in a year). Superforecasters? Less than 0.5%. So either we're about to witness AI systems automating their own improvement and compressing years of progress into months (the Amodei bull case on steroids), or we're watching the biggest case of collective tech delusion since... well, pick your favorite bubble (mine's the Dutch Tulip Mania of 1637, the OG bubble where tulip bulbs literally cost more than houses!). Anyway, the fact that the two groups' estimates differ by a factor of more than 36 tells you everything about where we are right now...

Bottom line...

AI now lives in two realities (and arguably always has): frothy software promises, and heavy‑metal capex that'll eventually pay off (even if only after any “bubble” bursts). Wednesday's pullback doesn't settle the “bubble vs. build” debate; it just reminds us to watch what companies ship and book, not just what they demo. We'll keep you posted on what we see on our side.

Goes without saying, but this is not financial advice! Just sharing it as an FYI.