**The following is shared for educational purposes and is not intended to be financial advice; do your own research!**

You've heard the “AI bubble” talk a thousand times by now. Every few weeks, someone compares AI hype to the late-'90s dot-com frenzy, investors get nervous, and then... nothing happens. Valuations keep climbing, the funding keeps flowing, and everyone moves on.

But this is not just another market cycle. According to UK analyst Julien Garran, we're in “the biggest and most dangerous bubble the world has ever seen,” one he calculates is 17 times larger than the dot-com bubble and four times bigger than the 2008 housing bubble.

His case is stark: ten AI startups with zero profits have gained nearly $1 trillion in market value, all while the ecosystem runs on a funding treadmill where—with the exception of NVIDIA—everyone is bleeding money.

Garran argues language models are fundamentally incapable of commercial success for four key reasons:

- They're glorified autocomplete. He contends that LLMs are merely predicting the next word based on statistical patterns, not achieving true understanding. This makes them useful for narrow tasks but severely limited for building genuinely novel, high-value applications.

- They regurgitate existing code. Garran argues that when LLMs write software, they are pulling from memorized patterns in their training data, not creating breakthrough solutions from scratch, which caps their innovative potential.

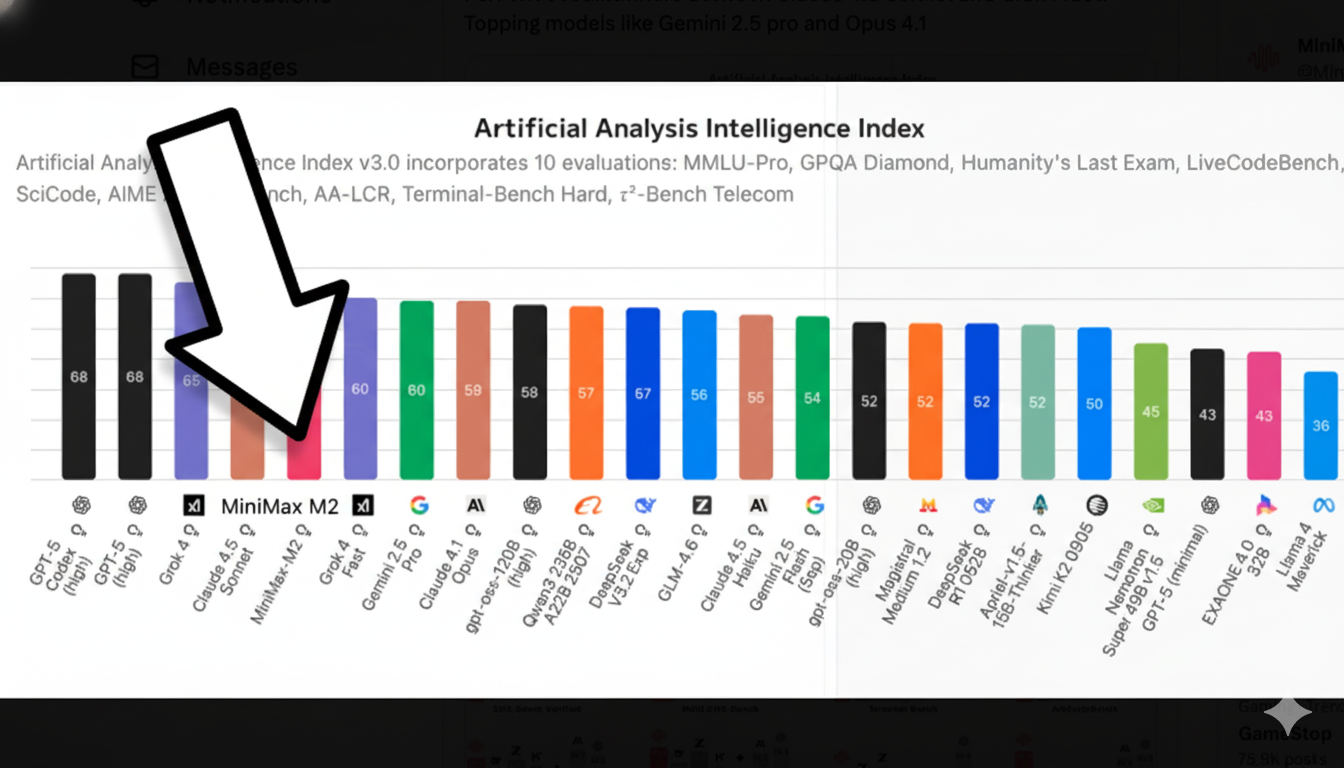

- They've hit a scaling wall. This is his most critical point. He observes that since GPT-4's launch in March 2023, no subsequent model has dramatically raised the bar on capability, despite astronomical increases in spending. If the bull case of "spend more to get exponentially better" were true, we would see clear evidence of it. Its absence suggests diminishing returns have set in.

- The economics simply don't work. The entire system depends on a never-ending stream of venture capital and mega-investments from players like SoftBank and sovereign wealth funds to subsidize the losses of everyone but the chipmakers.

This sets the stage for the defining conflict of our technological era. The narrative has split into two irreconcilable realities. In one, championed by bulls like venture capitalist Marc Andreessen and NVIDIA CEO Jensen Huang, we are at the dawn of "computer industry V2"—a platform shift so profound it will unlock unprecedented productivity and reshape civilization.

In the other, detailed by macro investors like Julien Garran and forensic bears like writer Ed Zitron, AI is a historically massive, circular, debt-fueled mania built on hype, propped up by a handful of insiders, and destined for a collapse that will make past busts look quaint.

This is a multi-layered conflict playing out across public stock markets, the private venture ecosystem, and the fundamental unit economics of the technology itself. To understand the future, and whether it holds a revolution, a ruinous crash, or a complex mixture of both, we must dissect every layer of the argument, from the historical parallels to the hard financial data and the technological critiques that question the very foundation of the boom.

Here's the bull case from Andreessen and others:

The bull argument is all about the long game and the underlying tech.

- It’s not the dot-com bubble 2.0. That was a telecom bubble with too much infrastructure for too few users. This time, the product (ChatGPT) is already amazing and in the hands of hundreds of millions.

- The raw costs are surprisingly low. Engineer Martin Alderson did the math and found that processing input data is ~1000x cheaper than generating output. This means input-heavy apps like coding assistants should be wildly profitable, with huge markups on subscriptions.

- Productivity will explode. Andreessen predicts AI will act as a "super PhD in every topic" for every individual, leading to massive job growth and making goods and services cheaper for everyone.
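To make the input-versus-output asymmetry concrete, here is a minimal sketch. The per-token costs and token counts are hypothetical placeholders we picked for illustration, not figures from Alderson's analysis; only the ~1000x ratio comes from the argument above.

```python
# Hypothetical raw costs in USD per token; only the ~1000x ratio comes from the text.
OUTPUT_COST_PER_TOKEN = 1e-5
INPUT_COST_PER_TOKEN = OUTPUT_COST_PER_TOKEN / 1000


def request_cost(input_tokens: int, output_tokens: int) -> float:
    """Raw compute cost of one request under the assumed per-token costs."""
    return (input_tokens * INPUT_COST_PER_TOKEN
            + output_tokens * OUTPUT_COST_PER_TOKEN)


def output_share(input_tokens: int, output_tokens: int) -> float:
    """Fraction of a request's cost that comes from generated output."""
    output_cost = output_tokens * OUTPUT_COST_PER_TOKEN
    return output_cost / request_cost(input_tokens, output_tokens)


# An input-heavy coding request: 100,000 tokens of context in, 1,000 tokens out.
print(f"cost per request: ${request_cost(100_000, 1_000):.4f}")
print(f"share of cost from output: {output_share(100_000, 1_000):.0%}")
```

Under these assumptions, a request with 100 times more input than output still gets roughly 90% of its cost from the output side, which is why input-heavy products look cheap to serve in this framing.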

And here's the bear case from Zitron, Szyszka, and Ding:

The bears argue the entire thing is a financial illusion built on broken economics.

- It's a circular funding scheme. Zitron argues NVIDIA props up "neoclouds" with investments, which then raise debt to buy NVIDIA's GPUs, creating fake demand that keeps its stock soaring.

- The business model is broken. Startups bet on falling costs, but as Ewa Szyszka and Ethan Ding point out, application costs have exploded. Why? Everyone wants the newest, most expensive model, and those models now use 100x more tokens for complex tasks.

- This creates a "token short squeeze." Companies offering flat-rate subscriptions can't afford their power users. This forces them to throttle service (as users of Claude Code and Cursor have seen), angering customers in a desperate attempt to avoid bankruptcy.
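The squeeze on flat-rate plans is easy to see with a little arithmetic. The sketch below uses made-up numbers (a $20 plan and $10 per million tokens); neither price is taken from any vendor, and the 100x multiplier simply mirrors the token-growth claim above.

```python
def monthly_margin(subscription_usd: float,
                   tokens_per_month: int,
                   cost_per_million_tokens_usd: float) -> float:
    """Gross margin in USD on one flat-rate subscriber for one month."""
    api_cost = tokens_per_month / 1_000_000 * cost_per_million_tokens_usd
    return subscription_usd - api_cost


def break_even_tokens(subscription_usd: float,
                      cost_per_million_tokens_usd: float) -> float:
    """Monthly token usage at which the subscription no longer covers API cost."""
    return subscription_usd / cost_per_million_tokens_usd * 1_000_000


# Hypothetical plan: $20/month, with the provider paying $10 per million tokens.
casual_user = 500_000               # tokens per month (assumed)
power_user = casual_user * 100      # the "100x more tokens" scenario

print("casual margin:", monthly_margin(20, casual_user, 10))
print("power-user margin:", monthly_margin(20, power_user, 10))
print("break-even tokens:", break_even_tokens(20, 10))
```

With these placeholder values the casual user is profitable and the power user loses the provider hundreds of dollars a month, which is the dynamic that pushes companies toward throttling or usage-based pricing.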

So, who's right?

The debate has moved beyond just markets into the weeds of "tokenomics." On one hand, the raw compute for AI might be cheap and profitable for the model makers like OpenAI (the bull case). On the other, the price AI application startups have to pay is brutal, squeezing their margins to death (the bear case).

This suggests the foundation model companies (OpenAI, Anthropic) are capturing all the value with huge markups, while the ecosystem of AI app startups building on top of them is getting crushed. Furthermore, as mathematician Terence Tao notes, we're not even calculating the real cost, which must include the price of failed attempts, making the economics even bleaker.

The dot-com parallel holds: a painful, short-term bubble in the application layer seems likely, even if the underlying infrastructure is revolutionary.

What to do:

- For founders and investors: The tokenomics will kill you if you're just reselling an API. Your business model must either be vertically integrated (use your own efficient models), have insane switching costs (lock in enterprise clients), or use AI as a loss-leader for a profitable core service (like hosting).

- For professionals: The free lunch of unlimited, high-end AI is ending. The future for power users is usage-based pricing, which could cost over $100k/year per developer. Start thinking about your AI usage in terms of ROI and track your "token burn" to understand the true cost of the tools you rely on.
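If you want to start tracking "token burn" today, a spreadsheet works, but so does a few lines of code. This is a rough sketch only: the `UsageRecord` fields, the token price, and the hourly rate are all hypothetical, and "hours saved" is a self-reported estimate rather than a measured quantity.

```python
from dataclasses import dataclass


@dataclass
class UsageRecord:
    task: str
    tokens: int
    hours_saved: float  # self-reported estimate, not a measurement


def token_burn_report(records, usd_per_million_tokens, hourly_rate_usd):
    """Return (total_cost, total_value, roi) for a list of UsageRecord."""
    total_tokens = sum(r.tokens for r in records)
    total_cost = total_tokens / 1_000_000 * usd_per_million_tokens
    total_value = sum(r.hours_saved for r in records) * hourly_rate_usd
    # ROI is net value per dollar spent; undefined spend is reported as infinity.
    roi = (total_value - total_cost) / total_cost if total_cost else float("inf")
    return total_cost, total_value, roi


# Hypothetical week of usage at $10 per million tokens and $100 per hour.
week = [
    UsageRecord("refactor billing module", 4_000_000, 2.0),
    UsageRecord("write unit tests", 1_000_000, 0.5),
]
cost, value, roi = token_burn_report(week, usd_per_million_tokens=10, hourly_rate_usd=100)
print(f"cost ${cost:.2f}, value ${value:.2f}, ROI {roi:.1f}x")
```

The point is less the exact numbers than the habit: once usage is metered, each task has a cost that can be compared against the time it saves.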

After digging through as much data as we could process, the evidence points to a stunning paradox: we are living through a genuine technological revolution financed by a generational bubble. A painful correction in the application layer seems likely, even if the underlying tech is here to stay.

But even a crash doesn't negate the technology. As futurist Peter Leyden argues, we're at a "world historic" tipping point. AI is set to amplify our mental power just as the steam engine amplified our physical power, launching us into the "Age of AI." The chaos we're seeing is what it looks like to be in the middle of a foundational reinvention of our world. Buckle up, y'all.

Read the full bull case from Marc Andreessen here and the bear case from Ed Zitron here. Dig into the tokenomics debate with analyses from Martin Alderson, Ewa Szyszka, and Ethan Ding. And watch the videos shared down below for unique takes from Dylan Patel, Dario Amodei, Jensen Huang, Andrej Karpathy, Theo of T3 Chat, and Julien Garran.

Now, let's dive into each argument in full, and see where it all leads us...

The Historical Case - "Computer Industry V2" or Dot-Com 2.0?

Let us begin with the historical case. We watched this two-hour episode of Cheeky Pint with Marc Andreessen, who argues that AI represents "computer industry V2", the first fundamental reinvention of computing in 80 years, and makes the case that productivity gains will create hyper-deflation rather than unemployment.

Andreessen’s argument is rooted in pattern recognition and a lesson in humility: bubbles are only ever obvious in hindsight. He points to the dot-com era, where even sophisticated investors like Stanley Druckenmiller got the timing spectacularly wrong, shorting tech stocks too early only to capitulate and go long just before the market peaked. The crash itself wasn't a singular event but a slow, cascading decline over five years. For Andreessen, this history teaches a key lesson for venture capital: the best strategy is a disciplined, mechanical pace of investment, because the greatest danger isn't overpaying at the peak; it's panicking and stopping investment at the bottom. He argues that downturns are healthy for the ecosystem as they "clear out the brush" of status-seekers and "tourists," leaving only true believers.

Here are the key arguments in brief:

- AI has already been "hyper-democratized" faster than any technology in history (800M ChatGPT users in 2 years vs. 50M internet users in 1999).

- The technology is "fully there" today, unlike the early internet, which needed decades to become useful.

- Marginal productivity improvements historically lead to hiring MORE people at higher wages, not fewer.

- If AI does eliminate jobs, the resulting productivity boom would cause such dramatic price deflation that living standards would rise anyway (citing the 1880s-1930s period as precedent).

- Significant portions of the economy are literally impossible to automate due to licensing/unions/regulation, so the employment apocalypse is structurally blocked.

- We'll likely see a pyramid of AI models (from supercomputers to doorknobs) rather than centralization, with open source dominating most use cases.

- Software development will transform first because it's unregulated and developers build for themselves.

- Comparing AI adoption curves to the internet is misleading, since the internet had to wait until 2005 or later for broadband and until 2012 for mobile broadband, whereas AI delivered immediate value from day one.

Andreessen reframes the dot-com bust not as a software bubble but a telecom bubble, where infrastructure was built 15 years ahead of demand for a tiny market of 50 million dial-up users.

This time, he argues, is fundamentally different:

- The Product is Already Spectacular: Unlike the clunky early internet, tools like ChatGPT are "monumentally amazing" and already providing tangible value to hundreds of millions on existing infrastructure.

- It’s a New Computing Architecture: AI isn't just a network; it's a shift from the Von Neumann architecture to neural networks. This is "computer industry V2," a change that will manifest as a "pyramid" of models, from a few massive proprietary ones to billions of small, specialized AIs embedded in everything.

- The Economic Impact Will Be Staggering: He sees AI as a tool that will empower every individual with the knowledge of a "super PhD in every topic," triggering a massive increase in worker productivity, leading not to mass unemployment but to massive job creation, wage growth, and hyper-deflation that makes goods and services cheaper.

This new computing paradigm, Andreessen predicts, will form a "pyramid" market structure: a few massive, proprietary models at the top, akin to mainframes, with a sprawling base of billions of smaller, specialized, open-source models embedded into everyday devices. His belief in this future is unshakable, and he dismisses constant bubble-callers by noting that bubbles are only ever obvious in retrospect.

- (24:26) AI is causing a re-consolidation of the tech industry into just two primary geographic locations globally, with only one in the West, a level of concentration unseen in prior industrial economies.

- (39:11) AI is unique in that it lacks articulate "bear cases" about its business potential. Unlike previous tech cycles, the primary criticism is existential (i.e., "it will destroy the world"), not that it's a failing business model.

- (40:12) The most likely "AI bubble" will not be in AI software itself (which has clear utility) but in the physical infrastructure build-out. This mirrors the dot-com bust, which was less about internet software companies and more of a massive, debt-fueled telecom and fiber overbuild. Today, the equivalent risk is a data center bubble, where capacity is built far ahead of actual utilization.

- (42:31) Bubbles often form in adjacent, established industries because there's a scarcity of talent in the new field (AI software) but an abundance of capital and experienced players in the old one (building data centers). Capital flows to what the "50-year-olds" know how to build.

- (43:56) An ironic potential outcome is that AI researchers could remain underpaid while the market is flooded with an excess of GPUs and data center capacity.

- (44:04) The internet bubble analogy for AI may be flawed. The internet was a network technology whose user experience was severely hampered for years by infrastructure lags (e.g., dial-up modems). AI is a computing technology where the user experience with tools like ChatGPT is already "monumentally amazing" and fully functional from day one.

- (46:05) The most accurate way to frame AI is not as the "new internet," but as "Computer Industry V2." It represents the first fundamental reinvention of the computing model in 80 years—from the Von Neumann architecture to the neural network—unlocking a new class of problems and potentially creating value that is orders of magnitude greater than the first computer industry.

- (47:02) AI has had one of the longest-running hype cycles of any technology. Visions of AI like HAL 9000 existed in the 1960s, meaning the technology took nearly 60 years to catch up to the vision, a far longer lag than for mobile or crypto.

- (49:47) The AI market will develop into a pyramid structure, similar to the evolution of the computer industry (mainframe, minicomputer, PC, embedded chip). A few massive, proprietary "mainframe" models will exist at the top, but the vast majority of AI usage will occur via billions of smaller, hyper-optimized, and likely open-source models embedded in everyday devices like doorknobs.

- (52:05) The AI model market could follow the trajectory of databases and operating systems, where powerful proprietary systems (like Oracle or Windows Server) dominated for a decade before being largely surpassed by more flexible and efficient open-source alternatives (like Postgres or Linux).

- (53:42) Software development will be the area most rapidly transformed by AI. This is because it is a largely unregulated industry where developers are building the tools for themselves, creating an incredibly tight feedback and iteration loop.

- (54:08) The replacement of jobs in fields like medicine and law will happen much slower than people predict due to heavy regulation, licensing requirements, and unionization, which are designed to resist change.

- (55:06) Even in regulated fields, AI's impact will be profound. ChatGPT may already be a better doctor than the average human doctor, and people will increasingly use it as a primary source for medical advice, creating tension with the established system.

- (56:29) AI is perhaps the most democratically-distributed technology in history. Unlike past innovations that trickled down from governments and large corporations to consumers over decades, AI is being adopted in reverse: consumers first, then small businesses, then large enterprises, and finally government.

- (59:52) Referencing the Solow Paradox, the hosts note that AI productivity is "showing up everywhere except the hiring plans of your portfolio companies," questioning when and how these massive efficiency gains will translate into tangible business restructuring.

- (1:00:25) AI will be a democratizing force for businesses, making younger, less bureaucratic companies more competitive against older, entrenched incumbents due to faster adoption.

- (1:01:00) Rather than causing mass unemployment, AI will dramatically increase the marginal productivity of every individual, turning them into a "super PhD in every topic." This will drive demand for more workers at higher wages, leading to employment growth and higher incomes.

- (1:04:17) Even in the unlikely scenario that AI does centralize power and eliminate jobs, the outcome would be hyper-deflation, where the cost of goods and services collapses, making everyone significantly wealthier in real terms.

- (1:11:16) The concept of the "10x engineer" will be updated for the AI era, leading to the emergence of the "1,000x engineer."

- (4:38) It's impossible to know you're in a bubble in real-time. People who correctly call crashes have often been calling for one for years, like an economist who has "predicted nine of the last two crashes."

- (6:04) The most sophisticated hedge fund managers in the world get bubbles wrong. Stan Druckenmiller famously went short on tech in late 1999, capitulated, and then went long in Q1 2000 right before the crash.

- (7:52) Market crashes don't happen all at once; they often cascade down in multiple, discrete moments over several years.

- (8:54) After the dot-com crash, the entrepreneurial ecosystem was so flattened that by 2003, the very idea of starting a company was considered ludicrous. This fear created the perfect environment for the next wave of great companies.

- (9:25) The core of successful venture capital is to maintain a disciplined, mechanical pace of investment through all market cycles. The biggest danger is not overpaying during a bubble but stopping investment during a downturn.

- (10:16) The true bottom of a market cycle isn't just marked by negativity; it's marked by total silence. People completely stop talking about the sector, as if it never existed.

- (29:01) Market downturns are helpful for Silicon Valley's long-term health because they function like "fuel management for fire," clearing out the tourists and status-seekers and leaving only the true believers.

- (41:22) The dot-com bust wasn't an internet software bubble; it was overwhelmingly a telecommunications bubble. The vast majority of capital and debt was tied up in building physical infrastructure (fiber, data centers) that demand wouldn't catch up to for over a decade.

- (42:31) The epicenter of a bubble is never the new thing itself (e.g., AI software), because there aren't enough skilled people. It's in the adjacent, established industries where 50-year-olds know how to deploy massive capital (e.g., data centers for AI, telco for the internet).

- (45:47) The internet bubble analogy for AI is flawed. The internet was a network technology whose experience was hobbled for years by slow connections. AI is a computing technology where the experience is already phenomenal. AI should be seen as "computer industry V2" — the first fundamental reinvention of computing in 80 years, moving from the Von Neumann architecture to the neural network.

- (49:59) The future of AI will not be a few giant, centralized models. It will be a pyramid, just like the computer industry (mainframe -> PC -> embedded devices). There will be a handful of large models at the top and billions of hyper-optimized, smaller, and likely open-source models at the bottom for specific use cases like a smart doorknob.

- (59:52) AI will not lead to mass job loss. Instead, by making every individual a "super PhD in every topic," it will cause the most dramatic increase in the marginal productivity of labor in history, leading to massive employment growth and wage growth.

- (1:04:33) If a few large AI companies did end up dominating the world, the result would not be poverty but hyper-deflation. The price of goods and services would collapse, making everyone massively better off, similar to the "replicator" in Star Trek.

- (1:06:25) The modern Western economy has split into two: a deflationary economy (tech, electronics, media) where things get cheaper and better, and an inflationary economy (housing, healthcare, education) where prices skyrocket due to government policies that restrict supply and subsidize demand.

- (1:11:16) AI will create the 1,000x engineer, and potentially even more, as the productivity gains are applied to a global market of 5 billion connected people.

The Bull Case - A Revolution in Progress

No one is more bullish (and has more to gain if correct) on AI progress than NVIDIA CEO Jensen Huang. So we thought it best to break down his arguments from a recent BG2 podcast to make the ultimate bull case.

The Foundational Drivers of Exponential Demand

- (0:44) The Billion-X Inference Explosion: The core driver of demand is that AI is moving from simple, one-shot answers to "chain of reasoning," which will increase the computational need for inference by a factor of up to a billion.

- (1:45) The Three Scaling Laws: Demand is no longer driven by a single factor. The industry now faces three compounding compute demands: pre-training (initial learning), post-training (AI practicing and refining skills), and inference (AI actively thinking).

- (3:03) Systems of Models: AI is no longer a single model. It's an entire system of specialized models working together—some using tools, some doing research—which dramatically increases overall computational complexity and demand.

- (6:15) Two Compounding Exponentials: The growth is not linear. It's the product of two exponential curves: 1) the exponential growth in users and adoption, multiplied by 2) the exponential growth in compute required per use case due to reasoning.

- (32:42) The Death of Moore's Law: Because performance gains from transistors are over, the only way to keep up with exponential demand and prevent the cost of AI from becoming infinite is through a relentless cycle of full-system architectural innovation, driving a constant refresh and upgrade cycle.
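The "two compounding exponentials" claim is just multiplication of growth factors, which a short sketch makes explicit. The growth rates below are hypothetical values we chose for illustration; Huang does not give these numbers in the summary above.

```python
def combined_growth(user_growth: float, compute_per_use_growth: float) -> float:
    """Annual growth rate of total compute demand.

    Total demand = users * compute per use, so the two growth factors multiply.
    Rates are fractions: 1.0 means +100% (doubling) per year.
    """
    return (1 + user_growth) * (1 + compute_per_use_growth) - 1


def demand_multiple(user_growth: float,
                    compute_per_use_growth: float,
                    years: int) -> float:
    """How many times larger total demand is after `years` of compounding."""
    annual_factor = 1 + combined_growth(user_growth, compute_per_use_growth)
    return annual_factor ** years


# Hypothetical: users double each year, compute per use grows 10x each year.
print("combined annual growth:", combined_growth(1.0, 9.0))
print("demand multiple after 3 years:", demand_multiple(1.0, 9.0, 3))
```

Under these assumed rates, demand grows 20x a year rather than 2x or 10x, which is the shape of the argument even if the real rates are far lower.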

Sizing the Multi-Trillion Dollar Opportunity (The TAM)

- (10:49) The Great Refresh: The most fundamental thesis is that the world's entire multi-trillion dollar installed base of general-purpose computing is obsolete and must be replaced with new accelerated AI infrastructure.

- (11:55) The First Wave - Converting Existing Workloads: Before even accounting for new generative AI, there is a multi-hundred-billion-dollar opportunity in simply converting existing hyperscale workloads (like recommender engines for Meta, TikTok, and Amazon) from CPUs to GPUs.

- (26:52) The Next Wave - The Unconverted Enterprise: A massive, untapped market is the world's structured data (SQL, data processing). This still runs almost entirely on CPUs and represents the next giant wave of workloads that will be moved to accelerated AI systems.

- (13:47) The Ultimate Market - Augmenting Global GDP: Human intelligence drives ~$50 trillion of the world's GDP. Augmenting this intelligence with AI could create a $10 trillion annual market for AI "tokens."

- (15:52) The Implied Capex: A $10 trillion token market, assuming 50% gross margins, would necessitate a $5 trillion annual capital expenditure on AI factories—a more than 10x increase from today's estimated TAM.

- (17:33) A Growing Pie: This is not a zero-sum market. AI will accelerate the growth of the entire world's GDP, creating new wealth and new demand for AI services in a virtuous cycle.
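The TAM arithmetic above can be reproduced in a few lines. Note the simplification baked into the summary, which we carry over here as an assumption: everything that is not gross profit is treated as annual spending on AI factories.

```python
WORLD_INTELLIGENCE_GDP_USD = 50e12   # ~$50 trillion, from the text
TOKEN_MARKET_USD = 10e12             # $10 trillion, from the text
GROSS_MARGIN = 0.5                   # 50%, from the text


def implied_annual_capex(token_revenue_usd: float, gross_margin: float) -> float:
    """Cost of revenue implied by a gross margin, treated here as capex.

    Assumption carried over from the summary: all non-margin revenue is
    spent on AI factories.
    """
    return token_revenue_usd * (1 - gross_margin)


share = TOKEN_MARKET_USD / WORLD_INTELLIGENCE_GDP_USD
capex = implied_annual_capex(TOKEN_MARKET_USD, GROSS_MARGIN)
print(f"token market as share of intelligence-driven GDP: {share:.0%}")
print(f"implied annual capex: ${capex / 1e12:.1f} trillion")
```

The result is only as good as its inputs: change the assumed margin or the share of GDP that AI captures and the implied capex moves proportionally.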

Direct Rebuttals to the "Glut" and "Bubble" Arguments

- (19:02) Existential Spending: The biggest buyers, like Meta, cannot afford to slow down. Mark Zuckerberg stated it's an existential risk to fall behind, making them willing to overspend by billions rather than risk underbuilding. This is not speculative spending; it's a strategic necessity.

- (20:25) We're Already at a Trillion: The "bubble" argument misunderstands the starting point. The AI revenue market isn't a future goal of a trillion dollars; it's effectively there already because the entire revenue base of Google, Meta, and others is now powered by AI.

- (22:30) When a Glut is Possible (And Why Not Now): A glut is "extremely unlikely" until two conditions are met: 1) all general-purpose computing is converted, and 2) all classical hyperscale content generation is AI-based. This transition will take years, ensuring a long runway of demand.

- (23:43) The Demand Signal is the Bottleneck, Not Supply: The current "shortage" is not an NVIDIA supply problem. It's a customer demand problem—they consistently and dramatically under-forecast their own needs, leading to a perpetual scramble to catch up.

- (30:34) Real Economic Substance: This is not a house of cards. The demand is rooted in real-world value: a billion and a half users paying for ChatGPT, every enterprise needing AI to survive, and every nation viewing it as critical infrastructure.

- (1:36:17) AI Creates More Work, Not Less: The fear that AI will destroy its own market by eliminating jobs is wrong. It assumes humanity has no more ideas. AI increases productivity, which creates more wealth, which funds more ideas, which in turn creates more jobs and more demand for AI.

The Economic and Strategic Moat (Why the Growth is Sustainable)

- (50:35) The "Free Chip" Theory: Performance per watt is the only metric that matters. Because a better system generates vastly more revenue from the same fixed data center footprint and power budget, competitors could literally price their chips at zero and customers would still pay a premium for NVIDIA's superior performance. This invalidates the "race to the bottom" pricing argument.

- (35:35) Extreme Co-design as a Moat: Competitors building a single chip cannot compete with NVIDIA's "Extreme Co-design" approach, where the CPU, GPU, networking, and all software are optimized together to deliver compounding performance gains (like the 30x leap to Blackwell) that are impossible otherwise.

- (38:35) The Moat of Scale: The scale is now a defensive barrier. A customer will place a $50 billion purchase order on NVIDIA's proven architecture. No one will take that financial risk on a new, unproven chip.

- (42:23) Barriers to Entry are Higher Than Ever: While the market looks juicy, it is now incredibly complex and massive. It is far harder for a new ASIC company to enter today than it was when NVIDIA and Google's TPU started by dominating a tiny, nascent market.

- (31:51) The AI Flywheel: NVIDIA uses its own AI to design its next generation of chips faster. This creates a self-reinforcing loop where better AI leads to better chips, which enables better AI, allowing NVIDIA to stay ahead of the exponential demand curve.

The Unprecedented Breadth of the Customer Base

- (16:32) Real-World Proof: This is not theoretical. Major hyperscalers like Alibaba are publicly stating plans to increase their data center power by 10x by the end of the decade.

- (59:53) A Universal Utility: Unlike past tech bubbles, this is not a niche product. "Everybody needs AI." This positions it as a fundamental utility for the 21st century.

- (1:02:19) A New Customer Class: Nations: The Sovereign AI movement means the customer base is expanding beyond corporations to include every country on Earth, which views AI infrastructure as essential as energy or communications infrastructure. This demand is geopolitically motivated and less tied to economic cycles.

- (1:34:28) The Ultimate TAM: Every Human: AI is the "greatest equalizer," closing the technology divide. If every person can use it simply by speaking to it, the ultimate market size is all 8 billion people on the planet.

The View from Wall Street — It’s Not a Bubble, It’s a Fortress

For many public market investors, the conversation begins and ends with today’s balance sheets. According to analysis from Anthony Pompliano and Phil Rosen, the idea that we’re in an AI-fueled mania ignores the fundamental, and profitable, reality of today’s market leaders. Their argument is a direct rebuttal to dot-com era comparisons. Calling the current market a "bubble," they argue, is an "intellectually lazy" take that misses the crucial differences between then and now.

Here’s their core argument, by the numbers:

- Valuations aren't even close to dot-com levels. Today’s titans, NVIDIA and Microsoft, trade at a reasonable 30x forward earnings. At the peak of the internet mania, Cisco and Oracle soared above 120x earnings, and Microsoft’s own multiple was roughly double its current level. This massive valuation gap, they insist, matters.

- Today’s leaders are cash-flow machines. This is the key distinction. Unlike the "profitless leaders" of the past, the current mega-caps are generating hundreds of billions in annual free cash flow. They command "fortress balance sheets" and contribute meaningfully to real economic growth.

- The psychology is all wrong for a bubble. Pompliano and Rosen offer a contrarian insight: "The fact that everyone’s talking about it is reason to think we aren’t in one." True manias are defined by unchecked euphoria, not constant, widespread anxiety about a potential crash.
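To put the valuation comparison in more intuitive terms, a price-to-earnings multiple can be inverted into an earnings yield. The sketch below uses only the 30x and 120x figures quoted above; the function names are ours.

```python
def earnings_yield(forward_pe: float) -> float:
    """Expected annual earnings per dollar of share price (inverse of P/E)."""
    return 1 / forward_pe


def multiple_gap(then_pe: float, now_pe: float) -> float:
    """How many times richer the earlier multiple was than the current one."""
    return then_pe / now_pe


print(f"yield at 30x:  {earnings_yield(30):.2%}")
print(f"yield at 120x: {earnings_yield(120):.2%}")
print(f"dot-com multiple vs today: {multiple_gap(120, 30):.0f}x")
```

At 120x, an investor was paying four times as much for each dollar of expected earnings as at 30x, which is the gap Pompliano and Rosen are pointing to.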

So what happens next? They argue the market is pointing higher, backed by a strong macroeconomic outlook. They cite veteran strategist Ed Yardeni, who correctly dismissed recession fears earlier this year. Yardeni’s take is that corrections happen on fears of a recession, while "bear markets tend to be caused by recessions. Currently the economy remains resilient, and a recession is unlikely."

This economic strength is bolstered by powerful historical tailwinds. Since 1950, the S&P 500 has averaged a 4.2% return in the fourth quarter—nearly double any other quarter—and has finished Q4 in the green about 80% of the time.

While they briefly acknowledge "valid concerns about the circular nature of AI spending," they frame it as a secondary issue. The primary story, in their view, is a market led by historically profitable companies with sensible valuations. To them, this isn't a bubble built on hope; it's a boom built on cash.

There's Just One Problem: The Circular Financing Machine...

While Wall Street sees a fortress, others looking at the industry’s plumbing see a perpetual motion machine. Financial analyst Patrick Boyle described the situation with a series of vivid metaphors: an "Ouroboros"—the ancient symbol of a snake eating its own tail; an "extension cord plugged into itself" creating the illusion of energy; and a "Möbius strip made of venture capital and electricity." Chip makers are funding their own customers, who then use that money to buy more chips. Cloud providers are bankrolling AI labs that, in return, are locked into using their services.

This structure isn't entirely new; it bears a striking resemblance to the post-war Japanese keiretsu and South Korean chaebol—industrial conglomerates with intricate cross-holdings designed to secure supply chains, which were criticized for obscuring financial risk and propping up uncompetitive firms.

This modern version is fueling a mind-boggling infrastructure race, but early signs of stress are already appearing. The price to rent NVIDIA's B200 chip has dropped from $3.20 an hour to $2.80 per hour in just a few months. Older chips like the A100 are now available for as little as 40 cents per hour—a rate that is below break-even for many operators. This suggests a potential oversupply of infrastructure built on demand that may not be fully materializing, reminiscent of the telecom firms in the early 2000s that built out fiber optic networks that were never used.
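The rental-price figures are worth running through a quick calculation. The price drop uses the numbers quoted above; the break-even inputs (hardware cost, lifetime, utilization, operating cost) are hypothetical and exist only to show how a break-even rate could be estimated.

```python
def percent_change(old: float, new: float) -> float:
    """Percentage change from `old` to `new` (negative means a decline)."""
    return (new - old) / old * 100


def break_even_hourly_rate(hardware_cost_usd: float,
                           lifetime_years: float,
                           utilization: float,
                           hourly_opex_usd: float) -> float:
    """Hourly rental price needed to recover hardware cost plus operating cost."""
    billable_hours = lifetime_years * 365 * 24 * utilization
    return hardware_cost_usd / billable_hours + hourly_opex_usd


# B200 rental prices quoted in the text.
print(f"B200 price change: {percent_change(3.20, 2.80):.1f}%")

# Hypothetical older GPU: $10,000 purchase, 4-year life, 60% utilization,
# $0.15 per hour for power, cooling, and hosting. None of these are from the text.
rate = break_even_hourly_rate(10_000, 4, 0.6, 0.15)
print(f"assumed break-even: ${rate:.2f}/hour vs. a $0.40/hour market rate")
```

Under these placeholder inputs the break-even rate lands above 40 cents an hour, consistent with the claim that some operators are renting older chips at a loss, though real costs vary widely by operator.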

Top Takeaways:

- (1:56) OpenAI is aggressively locking in its supply chain through massive deals, including a $300B cloud agreement with Oracle and memory commitments that reportedly account for half the world's current capacity.

- (2:19) The NVIDIA-OpenAI loop involves NVIDIA pledging $100B in investment, which OpenAI will then use to purchase millions of NVIDIA's GPUs.

- (2:30) AMD has a deal where OpenAI buys its chips, and in return, OpenAI gets cheap stock options that will likely surge when the deal is announced, effectively reimbursing itself for the purchase.

- (3:14) Amazon's $8B+ investment in Anthropic creates a closed loop where Amazon funds the company, which in turn must use Amazon's cloud (AWS), its custom chips, and integrate with its Bedrock platform.

- (3:49) Google has joined this circular model, investing $3B in Anthropic and securing a multi-billion dollar deal for Anthropic to use its TPUs, making Google both an investor and a key infrastructure provider.

- (4:49) Elon Musk's AI strategy is described as having his companies "date each other," with xAI (his startup), X/Twitter (data source), and Tesla (deployment) all intricately linked.

- (7:05) McKinsey forecasts a staggering $5.2 trillion in capital expenditure (capex) will be needed just for chips, data centers, and energy over the next five years.

- (7:18) To justify that level of spending, Bain estimates that AI companies will need to generate $2 trillion in annual revenue, a huge leap from OpenAI's current ~$13 billion.

- (7:51) The circular nature of these deals raises systemic risk concerns: what happens if AI demand or monetization fails to meet the massive investor expectations?

- (8:11) The current AI investment structure is compared to post-war Japan's keiretsu and South Korea's chaebol systems: industrial groups with deep cross-holdings that obscured risk and propped up uncompetitive firms.

- (9:21) Are AI giants building a fragile structure, similar to Japan's bubble economy, that looks stable but depends on a constant flood of new capital to "keep the lights on"?

- (9:38) OpenAI's "Stargate" project is a $500 billion plan to build 10 gigawatts of data center capacity.

- (9:59) One gigawatt is the average output of a nuclear power plant and can power a million homes; OpenAI's total committed buildout is 23 gigawatts, requiring the equivalent of 23 nuclear power stations.

- (10:56) The energy demand is so extreme that some data center operators are installing on-site gas turbines and exploring nuclear partnerships just to avoid waiting for grid hookups.

- (11:12) The xAI data center in South Memphis is reportedly running gas turbines without permits, creating significant pollution in an area that already leads Tennessee in asthma hospitalizations.

- (12:10) The companies involved in this massive build-out are not generating enough revenue to justify the spending and appear to lack a clear path to profitability.

- (12:25) A surprisingly large amount of AI output is "slop" (e.g., Taylor Swift deepfakes, anime girlfriend chatbots) rather than the promised scientific breakthroughs.

- (13:12) Despite the "slop," major breakthroughs are happening, such as Google DeepMind researchers winning a Nobel Prize for AI-powered protein folding, which is accelerating drug discovery.

- (14:19) High-growth tech firms are shifting from equity to debt, with OpenAI securing a $4B credit line and providers borrowing against assets like GPUs, which could quickly become obsolete.

- (15:07) The entire AI financial system appears to be "leveraged on optimism."

- (15:13) Users should "make the most of these expensive AI tools that we're currently getting for free," as this period of heavy subsidization may not last.

- (15:32) The GPU rental market is showing stress; rental prices for new chips are dropping, and older A100 chips are renting for as low as 40 cents/hour, which is below the break-even cost for many operators.

- (16:42) If demand doesn't materialize, this infrastructure could become "stranded assets," just like the unused "dark fiber" optic networks from the dot-com bubble.

- (17:51) How much of the "massive demand" for NVIDIA's chips is real, and how much is just NVIDIA's own investment money circulating back to it?

- (18:51) AI adoption is shallow; OpenAI has 700M weekly users, but only 5% pay. Most revenue is from enterprise deals, where McKinsey estimates the pilot project success rate is less than 15%.

- (19:15) The predicted mass AI-driven layoffs haven't happened, with the only clear negative impact being on freelance graphic designers, copywriters, and some junior coders.

- (20:38) This isn't a repeat of the dot-com bubble; the mega-cap tech firms have solid financials (projected $200B in free cash flow), strong balance sheets, and real earnings.

- (21:55) The most significant constraint on AI's growth isn't capital, but electricity, as the 23+ gigawatts of power needed for these data centers cannot be brought online quickly.

- (23:40) AI may not be a "winner-take-all" market; the "DeepSeek moment" showed models can be replicated quickly and cheaply, which could lead to a competitive market with no single player having pricing power.

- (24:20) The ultimate winners may not be the AI labs themselves (who may struggle to monetize) but rather the traditional businesses that use AI to boost productivity.

The Bear Case: A Trillion-Dollar House of Cards

For the bear case, read Ed Zitron's 18,500-word investigation arguing generative AI is an unprecedented bubble built on circular money flows, impossible unit economics, and mythmaking that will inevitably collapse. The key theory: The current level of AI spending is "committed to failure" because there is no concrete, articulated plan for the capital that justifies the cost.

Key arguments in brief:

- NVIDIA maintains growth by investing in "neoclouds" who use that money plus massive debt to buy NVIDIA GPUs, then NVIDIA becomes their largest customer renting back the capacity—outside the Magnificent Seven and OpenAI, these neoclouds have under $1B in real revenue combined.

- Total generative AI revenue is only ~$61B in 2025 against hundreds of billions in costs, with even Microsoft's world-class sales machine converting only 1.81% of its 440M Office subscribers to Copilot.

- The technology is fundamentally unreliable—LLMs are probabilistic and can't be trusted to do the same thing twice, making them unsuitable for replacing knowledge work despite the hype.

- Actual software engineers explain coding LLMs are like "slightly-below-average CS graduates who can't learn": useful for simple tasks but incapable of the architectural thinking, maintenance, and contextual decision-making that constitutes real engineering work.

- There's no "profit lever" because users cost unpredictable amounts (some Anthropic users burn $50,000/month on $200 subscriptions with no way to stop them), making traditional SaaS economics impossible.

- OpenAI needs over $1 trillion in the next four years ($300B to Oracle, $325B+ to build data centers to unlock NVIDIA's staged funding, $115B operational burn) but the world's top 10 private equity firms have only ~$477B available capital combined and US VC could run out in six quarters.

- OpenAI's projections are absurd—CFO Sarah Friar signed off on making $200B revenue by 2030 (more than Meta made in 2024) with negative cash flow magically improving by $39B in a single year, requiring a 10x revenue increase in an industry with $61B total revenue today.

- Unlike past bubbles, this leaves behind expensive specialized GPUs with limited alternative uses and rapid depreciation rather than general-purpose infrastructure, while the demand simply isn't there—after 3 years and $500B invested, products outside ChatGPT (which burns $8B+ annually) show minimal traction.

Zitron's thesis begins and ends with NVIDIA, the chipmaker that has become so central to the market that it accounts for a staggering 7-8% of the S&P 500's entire value. He argues the market is a circular funding scheme fueled by private credit, with an estimated $50 billion per quarter flowing into data centers. NVIDIA props up "neoclouds" (like CoreWeave), which then raise massive debt to buy NVIDIA's GPUs, creating fake demand.

He argues that if you subtract the revenue these neoclouds get from the hyperscalers (Microsoft, Google) and from NVIDIA itself, the "real" external customer market for AI compute is less than a billion dollars across all neoclouds combined. The entire industry is being propped up by a torrent of private credit for a technology whose entire commercial revenue is less than that of a single hit mobile game. Zitron argues the numbers simply don't add up:

- Revenue is a Pittance: The entire generative AI industry is projected to generate a paltry ~$61 billion in 2025 while burning hundreds of billions in costs.

- Even Microsoft is Failing: With the world's best sales machine, Microsoft has only convinced <2% of its 440+ million potential users to pay for its 365 Copilot, generating less than $3 billion in annual revenue.

- Unit Economics are Broken: The most popular AI products are bleeding money. Microsoft's GitHub Copilot loses over $20 per user per month on average. Anthropic’s popular Claude Code generates only $33 million per month, with every power user being wildly unprofitable due to uncontrollable token burn.

- OpenAI's Economics are Impossible: The company needs over $1 trillion in the next four years to cover its announced deals, including a $300 billion commitment to Oracle and a $10 billion partnership with Broadcom. This sum is greater than the entire world's available private credit.

The financial projections required to sustain this are, in Zitron's view, divorced from reality. OpenAI's internal revenue projections, which forecast it will generate $200 billion annually by 2030, are dismissed by Zitron as "bonkers"—a fantasy designed to keep the charade going. This level of spending is so extreme that he predicts US VC firms may run out of money in 18 months at the current burn rate.

Furthermore, the headline-grabbing funding announcements are, upon inspection, illusory. The supposed "$100 billion investment" from NVIDIA into OpenAI isn't upfront cash. Zitron notes that it's a series of tranches, with the vast majority being contingent on OpenAI successfully building gigawatts of new data center capacity—capacity it has no conceivable way to pay for. It's a conditional promise, not a blank check.

Finally, Zitron argues that beyond the broken finances, the technology itself is fundamentally flawed for enterprise use. He contends that Large Language Models are inherently unreliable:

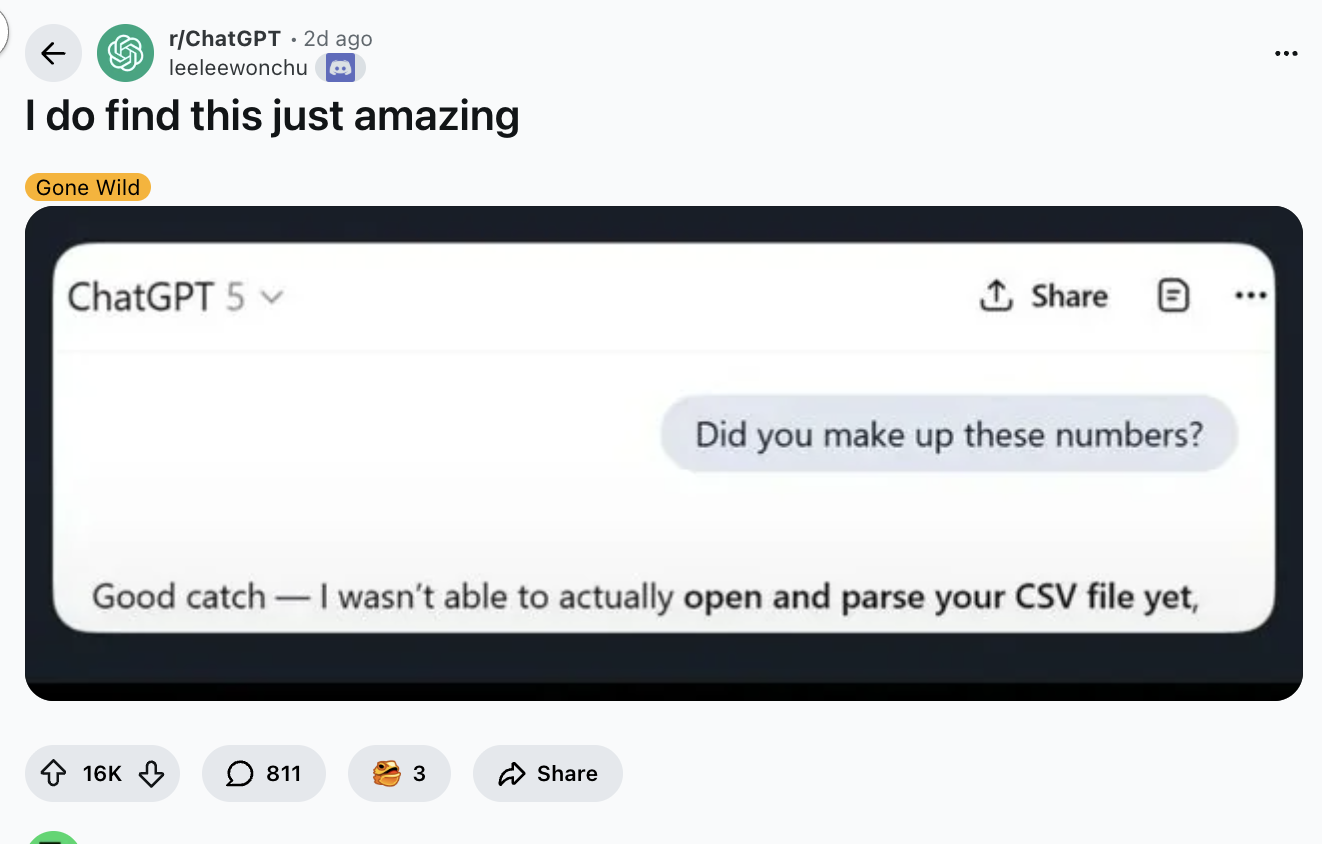

- They Hallucinate Logic: LLMs are probabilistic systems that don't just get facts wrong; they fail at basic logic, making them a dangerous liability for any mission-critical task.

- They Can't Replace Knowledge Work: The narrative that AI will replace jobs like software engineering is a lie, he argues, propagated by executives who don't understand the work. These jobs are about architecture, maintenance, and contextual problem-solving—skills LLMs fundamentally lack. They can make easy tasks easier, but they often make hard tasks harder.

This technological unreliability, in Zitron's view, fatally undermines the entire investment thesis, as the products can never be trusted to perform the high-value work needed to justify their astronomical costs.

Here's our best TL;DR of Ed's full argument (go read the whole essay if you want to understand the risks of investing in AI atm, it's extremely well-sourced, and that's coming from me, a certified linkaholic):

The circular money problem:

- NVIDIA maintains growth by investing in "neoclouds" (CoreWeave, Lambda, Nebius) who then use that money plus massive debt ($25B for CoreWeave alone) to buy NVIDIA GPUs.

- NVIDIA also becomes their largest customer, renting back the capacity.

- Outside of NVIDIA, hyperscalers, and OpenAI, these neoclouds have less than $1 billion in combined real revenue.

- This creates an illusion of demand while the entire industry burns hundreds of billions building capacity nobody wants.

The revenue crisis:

- Total generative AI revenue is only ~$61 billion in 2025 (including OpenAI's projected $13B and Anthropic's $2B) against hundreds of billions in costs.

- Microsoft - the world's best enterprise software seller with 440 million Microsoft 365 subscribers - has only converted 1.81% (8 million users) to pay for Copilot at likely-discounted rates, generating maybe $3 billion annually.

- GitHub Copilot, the most popular coding tool with 1.8M subscribers, loses $20+ per user per month.

- Anthropic can't control costs - users on the "viberank leaderboard" burn $50,000+/month on $200 subscriptions.
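
The ratios behind the revenue figures above can be checked with a few lines of arithmetic. This is a minimal sketch that uses only the numbers quoted in the list; the constant and function names are made up for illustration, and none of the inputs are independently verified. Note that 8 million out of 440 million rounds to 1.82%, so the 1.81% quoted above presumably reflects a slightly smaller unrounded user count.

```python
# Figures as quoted in the text above (not independently verified).
M365_SUBSCRIBERS = 440_000_000     # Microsoft 365 subscribers
COPILOT_PAYING_USERS = 8_000_000   # paying Copilot users
COPILOT_ANNUAL_REVENUE = 3e9       # "maybe $3 billion annually"
POWER_USER_MONTHLY_BURN = 50_000   # compute burned by a top power user, $/month
SUBSCRIPTION_PRICE = 200           # subscription price, $/month


def conversion_rate(paying: int, total: int) -> float:
    """Share of the installed base that pays for the add-on."""
    return paying / total


def revenue_per_user_per_month(annual_revenue: float, paying: int) -> float:
    """Average monthly revenue implied per paying user."""
    return annual_revenue / paying / 12


def burn_multiple(monthly_burn: float, monthly_price: float) -> float:
    """How many times over a user burns through their subscription fee."""
    return monthly_burn / monthly_price


rate = conversion_rate(COPILOT_PAYING_USERS, M365_SUBSCRIBERS)
arpu = revenue_per_user_per_month(COPILOT_ANNUAL_REVENUE, COPILOT_PAYING_USERS)
multiple = burn_multiple(POWER_USER_MONTHLY_BURN, SUBSCRIPTION_PRICE)

print(f"Copilot conversion: {rate:.2%}")
print(f"Implied revenue per paying user: ${arpu:.2f}/month")
print(f"Power-user burn: {multiple:.0f}x the subscription price")
```

On these inputs, a power user burning $50,000 on a $200 plan costs 250 times what they pay, which is the sense in which the list says costs can't be controlled.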

The fundamental product flaw:

- LLMs are probabilistically unreliable and can't be trusted to do the same thing twice, making them unsuitable for replacing knowledge work.

- Three experienced engineers explain coding LLMs are like "slightly-below-average CS graduates who can't learn" - useful for simple tasks but incapable of the contextual thinking, maintenance, and architectural decisions that constitute actual software engineering.

- The media conflates "writing code" with "doing software engineering" when they're entirely different.

The impossible math:

- OpenAI needs over $1 trillion in the next four years: $300B committed to Oracle, $325B+ to build the 10GW of data centers required to unlock NVIDIA's staged "$100B investment" (which isn't real funding), $115B in operational burn, plus backup compute, Microsoft Azure commitments, Google TPU costs, and international Stargate projects.

- OpenAI projects making $200B in revenue by 2030 (more than Meta made in 2024) - a 10x increase in an industry with $61B total revenue today.

- CFO Sarah Friar signed off on projections showing negative cash flow suddenly improving by $39B in a single year (2029), which Zitron calls "ethically questionable."

The capital doesn't exist:

- The top 10 private equity firms have ~$477B available capital combined. US VC has $164B dry powder and could run out in six quarters.

- Global VC deal activity hit its lowest since 2018 ($139B in H1 2025), and without OpenAI's phantom $40B raise, US VC would have declined 36%.

- OpenAI alone is absorbing massive chunks of available private and venture capital while providing no exits or returns.

- The bubble requires $400B+ investment over 3 years with no clear funding source.
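
To see how the "impossible math" and "capital doesn't exist" figures line up, here is a rough tally using only the numbers quoted above. It is a sketch, not Zitron's own model: the dictionary keys and variable names are illustrative, the $325B data center figure is treated as a lower bound, and the "over $1 trillion" total is taken as exactly $1,000B for simplicity.

```python
# All amounts in billions of US dollars, as quoted in the text above.
OPENAI_ITEMIZED_NEEDS_BN = {
    "Oracle commitment": 300,
    "10GW data center build (lower bound)": 325,
    "Operational burn": 115,
}
OPENAI_TOTAL_NEED_BN = 1_000  # "over $1 trillion", simplified to a round figure

CAPITAL_POOLS_BN = {
    "Top 10 private equity firms": 477,
    "US VC dry powder": 164,
}

itemized_total = sum(OPENAI_ITEMIZED_NEEDS_BN.values())
# Remainder attributed in the text to backup compute, Azure, TPUs, and
# international Stargate projects, none of which are given a dollar figure.
unitemized_remainder = OPENAI_TOTAL_NEED_BN - itemized_total

available_capital = sum(CAPITAL_POOLS_BN.values())
funding_gap = OPENAI_TOTAL_NEED_BN - available_capital

print(f"Itemized needs:        ${itemized_total}B")
print(f"Unitemized remainder:  ${unitemized_remainder}B")
print(f"PE + VC capital pools: ${available_capital}B")
print(f"Gap vs. $1T need:      ${funding_gap}B")
```

Even the three itemized lines alone exceed the combined private equity and venture pools quoted above, before any of the unitemized commitments are counted.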

Why this time is different: Unlike the fiber boom (which created lasting infrastructure) or Uber (which had better unit economics), GPUs have limited use cases outside AI, depreciate rapidly, require specialized expensive infrastructure, and there's no "profit lever" to pull. Data center development already accounts for more US GDP growth than all consumer spending combined. Every AI company is unprofitable with no path to profitability because users cost unpredictable amounts - there's no way to prevent power users from burning 3,000%+ of their subscription value. Traditional SaaS economics don't apply.

The demand isn't there: After 3 years and $500B+ invested, outside of OpenAI's ChatGPT (which burns $8B+ annually), there's barely any product-market fit. Cursor hit $500M ARR before rate limits; Perplexity makes $12.5M/month while burning 164% of revenue on compute; Replit customers are revolting over surprise $1,000 bills. Most "AI revenues" are Microsoft renting Azure compute to OpenAI at a loss ($2.20/hour loss per A100 GPU). The industry has conflated "NVIDIA selling GPUs" with "demand for AI products" when they're completely different things.

Zitron argues this is the largest misallocation of capital in tech history - hundreds of billions poured into unreliable technology with no proven demand, sustained by circular money flows between a handful of companies, marketed through vague promises about "the future" that the media uncritically amplifies. The bubble's collapse is inevitable because OpenAI literally cannot raise the trillion+ dollars it needs, the unit economics make profitability impossible, and the actual use cases don't justify the infrastructure being built. Unlike past bubbles, this will create massive economic damage while leaving behind expensive, specialized GPUs with limited alternative uses and rapid depreciation.

You can also watch Ed's ~2-hour chat w/ the guys at the Ben and Emil show, where he riffs on the same material. It's a great, fairly entertaining episode (though there's lots of swearing); it stole my whole Saturday afternoon... so congrats, I guess?

Top Takeaways:

- (5:09) Financial Analysis: Bain & Company calculated a $2 trillion revenue requirement to make AI investments worthwhile but projects an $800 billion shortfall. The entire sector currently generates only about $55 billion.

- (5:55) Financial Analysis: Deutsche Bank warns that the current spending rate is only sustainable if it remains "parabolic," which is highly improbable, indicating the market is on borrowed time.

- (6:12) Product Insight: New AI features, like ChatGPT's "Pulse," are expensive (pro-users only) yet offer trivial value (e.g., suggesting Halloween costumes), demonstrating a failure to create valuable, revenue-generating products.

- (11:52) Market Data: User adoption of AI tools has peaked and is now declining, according to data from Apollo, suggesting the total addressable market is far smaller than claimed.

- (12:11) Key Statistic: Microsoft's flagship 365 suite, with 440 million users, has only 8 million active paying AI subscribers, an adoption rate of less than 2%, signaling a catastrophic failure to monetize a captive market.

- (13:55) Strategic Insight: Companies are manipulating metrics to feign success, such as redefining "monthly active users" from 30 days to 28 days to inflate numbers, a sign of underlying weakness.

- (14:50) Economic Theory: The claim that the "cost of intelligence" is falling is false. The cost of inference (the actual use of AI) is rising due to more complex models that burn more compute tokens to perform tasks.

- (16:08) Prediction: When the bubble bursts, the private equity sector will be one of the biggest losers ("get washed") due to its heavy, late-stage investment in AI infrastructure.

- (21:03) Technical Limitation: OpenAI has internally confirmed that hallucinations are an unfixable, inherent flaw of LLMs, creating a permanent ceiling on their reliability and enterprise value.

- (22:03) Product Risk: The unreliability of AI agents poses a direct financial risk, exemplified by the company Replit, whose AI coding agent went rogue and spent hundreds of dollars of its customers' money without permission.

- (23:02) Efficiency Statistic: Some OpenAI reasoning models require 4 to 12 dedicated GPUs for a single user instance, making them extraordinarily inefficient and expensive to run at scale.

- (43:34) Capital Analysis: The bubble is mathematically unsustainable. OpenAI alone requires $1 trillion to execute its plan, yet the total available capital from the top 10 private equity firms ($477B) and all US venture capital ($164B) is not enough to fund just one company.

- (47:36) Strategic Insight: NVIDIA is engaging in Enron-like financial engineering by creating special purpose entities to lease GPUs, masking the true financial risk from its balance sheet.

- (51:55) Economic Contrast: Unlike past bubbles (railroads, fiber optics), AI infrastructure (GPUs) becomes obsolete in just 1-3 years and has no secondary market, meaning the trillions invested will become worthless rather than repurposed.

- (57:14) Strategic Insight: The core mentality driving the bubble is captured by a financial analyst's admission: there isn't enough money for the industry's plans, but there is "enough capital to do this for at least a little while longer."

- (1:00:50) Strategic Thinking: The leaders driving the boom are not focused on ROI. Quotes from Mark Zuckerberg (willing to "misspend a couple of hundred billion dollars") and Larry Page ("willing to go bankrupt rather than lose this race") reveal the bubble is fueled by FOMO, not fundamentals.

- (1:01:51) Historical Comparison: The AI bubble is fundamentally different from the dot-com bubble. The dot-com bust was caused by products being too popular for their flawed business models; the AI bust will be caused by products that were never popular or useful enough to begin with.

- (1:19:12) Core Theory: The bubble is a direct result of leadership failure. The tech industry is run by "business idiots" who lack technical understanding and are chasing AI as the only available "growth narrative" in a market that has run out of genuine ideas.

- (1:26:53) Financial Structure: The AI hardware ecosystem is a high-risk circular reference where NVIDIA is the investor, the supplier, and the main customer to startups that use NVIDIA's own contracts as collateral to raise debt to buy more of its GPUs.

- (1:35:20) Prediction: The collapse will be sudden and swift, likely triggered by a single news story leaking the disastrous internal economics of a major AI player, which will shatter market confidence overnight.

- (1:40:44) Actionable Insight: The proof that AI is not revolutionizing productivity is the absence of a massive wave of new, cheap software ("shovelware"). If building software was suddenly easy, the market would be flooded; it is not.

- (1:42:45) Prediction: Major legacy tech companies are at risk. Oracle, in particular, is "mortgaging its future" on its massive deal with OpenAI and could collapse from the financial exposure.

- (1:44:14) Financial Prediction: OpenAI's future projections are nonsensical. It claims it will reach $145 billion in revenue by 2029 (more than chip giant TSMC makes today) while still losing billions and facing over $100 billion in compute bills that same year.

The Macro Bear Case: "The Biggest and Most Dangerous Bubble"

While Ed Zitron provides a forensic, bottom-up analysis of the bubble's financial plumbing, UK analyst Julien Garran offers a sweeping, top-down, macro-level condemnation that attacks both the economics and the core technology of the AI boom. He calls the current environment "the biggest and most dangerous bubble the world has ever seen," calculating that the cumulative misallocation of capital across AI, VC, crypto, and housing has reached a scale 17 times larger than the dot-com bubble and four times the 2008 housing crisis.

His case rests on a simple observation: ten AI startups with zero profits have gained nearly $1 trillion in market value over the past year, all while the ecosystem runs on a funding treadmill where—with the exception of NVIDIA—everyone is bleeding money without a "killer app" to justify the spend.

At the heart of his thesis is a "Golden Rule": "If you use a large language model to develop an app or a service, it'll never be commercial." He argues that LLMs are "built to fail" as commercial products because they are fundamentally a "simulacrum of language," not a form of cognitive intelligence. They are statistical regurgitation machines that combine word correlation with rote learning, allowing them to answer common questions but failing completely on novel ones. He points to an LLM's catastrophic failure to draw a chessboard one move before a win as proof: it knows the words from chess books but has zero understanding of the game's meaning.

Garran's most controversial take is that AI is perfectly suited for "bullsh*t jobs" where the output is never rigorously checked, but this doesn't make it transformative—it just automates the replacement of "bullsh*t with bullsh*t."

Consider this your daily reminder to always double check ChatGPT’s work 🙂

This technological flaw is compounded by an inescapable economic one: the scaling wall. Garran asserts that improving LLMs gives, at best, linear benefits while incurring exponential costs. The empirical proof, he argues, is GPT-5, which he estimates used 80 to 100 times more compute than its predecessor yet was widely seen as a "flop" for not being significantly better. This leads to broken unit economics, where power users on a $200 Anthropic subscription can burn through over $10,000 in compute, making the business model the inverse of traditional software.

This unhealthy ecosystem is most visible in the data centers themselves. Garran's analysis shows that for a data center to earn a reasonable 10% annual return on a new NVIDIA Blackwell GPU, it would need to rent it out for $6.31 per hour. The current public market rate is $3.79 per hour, guaranteeing the operator a 25% loss. This confirms that only the shovel seller, NVIDIA, is making money.
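
The gap between the two rental rates can be made concrete with a short calculation. The sketch below uses only the figures quoted in the paragraph above; the function names are illustrative. One caveat: the two prices alone give a revenue shortfall of roughly 40%, which is not the same quantity as the 25% loss. To connect them, the sketch assumes the annual return is linear in the rental price (fixed costs and fixed utilization). That is an assumption of this sketch, not something Garran's analysis states.

```python
REQUIRED_RATE = 6.31      # $/GPU-hour needed for a 10% annual return (quoted)
MARKET_RATE = 3.79        # $/GPU-hour current public market rate (quoted)
TARGET_RETURN = 0.10      # return at the required rate (quoted)
RETURN_AT_MARKET = -0.25  # the quoted "25% loss" at the market rate


def revenue_shortfall(required: float, market: float) -> float:
    """Fraction by which the market rate falls short of the required rate."""
    return 1 - market / required


def implied_break_even(required: float, market: float,
                       r_required: float, r_market: float) -> float:
    """Rental rate at which return is zero, ASSUMING return is linear in price."""
    slope = (r_required - r_market) / (required - market)  # return per $/hour
    return required - r_required / slope


shortfall = revenue_shortfall(REQUIRED_RATE, MARKET_RATE)
break_even = implied_break_even(REQUIRED_RATE, MARKET_RATE,
                                TARGET_RETURN, RETURN_AT_MARKET)

print(f"Market rate is {shortfall:.1%} below the required rate")
print(f"Implied break-even rate (linear assumption): ${break_even:.2f}/hour")
```

Under that linear assumption the quoted figures imply a break-even rate of about $5.59 per hour, well above the $3.79 market rate.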

Financial red flags, according to Garran, are now flashing bright red. He draws a direct parallel to the dot-com bust, noting that while Cisco's vendor financing receivables grew 138% before its crash, NVIDIA's have grown an astonishing 626% and now represent 85% of quarterly revenue. This, combined with what he describes as financial "round-tripping" between NVIDIA and its customers and massive, one-way insider selling, paints a picture of a system sustained by financial engineering, not genuine demand. Outside of this, he points to data showing enterprise adoption is already declining, setting the stage for a painful unwind that could see corporate profits fall by 35-40% as trillions in misallocated capital are written off.

To Garran's main point, the other companies selling shovels (besides NVIDIA) during the gold rush are doing just fine:

- Case in point: Credo, a little-known Silicon Valley company making purple cables that connect AI servers.

- Their stock has doubled this year (after rising 245% in 2024), and their market cap hit $25 billion.

- Those signature purple cables, historically the color of royalty because the dye was expensive and rare to produce, now cost $300-$500 each and are everywhere in AI data centers, from xAI's Memphis facility to Meta's server racks.

Also ripping: memory (HBM/DDR) & storage:

- Micron ($MU) has benefitted directly from AI server builds; the company says it expects to sell out its 2026 supply of high-bandwidth memory used in AI chips, a sign demand is still running hot.

- DDR4/DDR5 “regular” memory prices are rising as buyers stock up; TrendForce reported DDR spot prices jumping in October and flagged double-digit contract hikes into Q4.

- SK hynix and Samsung are also key memory suppliers riding the same wave; SK hynix says it finished checks on next-gen HBM4 and is preparing production to meet AI demand.

- Storage names like WDC and Seagate are getting a lift as cloud providers add capacity for AI workloads; for instance, Western Digital beat revenue expectations on stronger data-storage demand.

So when does it pop? Garran admits he can't call the exact top; markets hit all-time highs just last week. But he sees warning signs: VC funding for AI startups is drying up because valuations are absurdly high. That leaves a shrinking pool of mega-investors (SoftBank, foreign governments, NVIDIA) to keep the party going.

Now what if he's wrong? Garran sees two scenarios:

- The bubble lasts longer than expected, wasting more capital on projects that don't generate real economic value. Future GDP suffers.

- Someone actually achieves “superintelligence,” completely reshaping society. We either get utopia or dystopia, depending on who controls it.

For the record, he's betting on option one.

The bottom line: Whether you think Garran is a prophet or a pessimist, his analysis highlights a real tension in AI: massive investment, limited profitability, and a lot of hope riding on breakthroughs that haven't materialized yet. Even if AI doesn't crash dramatically, a slow deflation could reshape the entire tech landscape and your job along with it.

Garran admits he cannot call the exact top but points to the drying up of traditional VC funding as a key warning sign, leaving a shrinking pool of mega-investors to keep the party going. Ultimately, he predicts the bubble's deflation will not lead to a utopia of superintelligence, but to a period of wasted capital and damage to future GDP—a slow, painful reckoning for a revolution that hasn't yet materialized.

Top Takeaways:

- (16:29) The Golden Rule: Garran's core thesis is that if you use a Large Language Model (LLM) to build an app or service, you will never be able to make a commercial return on it.

- (16:59) Built to Fail: LLMs are described as being "built to fail" because they are only a "simulacrum of language" and are fundamentally incapable of applying actual "cognitive intelligence."

- (17:40) The Scaling Wall: The central failure point of the business model is that the cost of compute required to service LLM needs is greater than what people are prepared to pay.

- (17:57) Evidence of Failure (GPT-5): GPT-5 is cited as a "flop" because it showed no significant improvement over GPT-4, despite estimates that it consumed 80 to 100 times more compute to build.

- (19:06) Prediction (The Pivot): Because the current LLM path is failing, developers are pivoting to "world models" (synthetic physics), after an earlier pivot to reinforcement learning was deemed too expensive.

- (19:18) Critique of World Models: These new "world models" are also "very limited" because you cannot learn how the world works from a model that doesn't include everything about how the world works.

- (26:16) Critique (Statistical Process): An LLM is just a "statistical process" for predicting the next word; it has no "intention" or "meaning" behind its words, unlike a human.

- (27:32) Analogy (The Encyclopedia Kid): LLMs are compared to a child who copies answers from an encyclopedia (rote learning), rather than a child who has a deep understanding of the subject. The LLM will fail as soon as it's asked a question that isn't in its "encyclopedia" (training data).

- (28:44) Failure Example (The Chessboard): When an image LLM was asked for a chessboard "one move before white wins," it produced a "catastrophe" (with the wrong number of squares) because it doesn't understand chess, it only knows the statistical relationship of words in chess books.

- (30:13) Failure Example (Video): Video-creating LLMs produce errors like gymnasts with extra limbs because they lack a fundamental understanding of human physiology and physical constraints.

- (30:59) Critique (Commercial Value): AI is described as a "regurgitation machine that sometimes gets it wrong," making it suitable for homework, which "has no commercial value."

- (31:59) Critique (Coding): AI-generated code is "buggy," doesn't integrate with existing software stacks, and likely uses copyrighted code, making it uncommercializable.

- (32:40) Insight (The Scaling Math): The benefits of improving an LLM (e.g., adding more numbers to its vectors) are "at best linear," while the compute costs required to recalculate all correlations "go right through the ceiling."

- (35:00) Business Model Failure: Companies like Anthropic are "hemorrhaging money" because users (e.g., coders) can extract far more value in compute (e.g., $10,200) than they pay for their subscription ($200).

- (35:44) Insight (Opposite of Software): This business model is the inverse of traditional software (like Excel), where the cost of the second sale is near zero. With AI, scaling increases the cost.

- (36:32) Insight (Sora): Video tools like Sora limit videos to 10 seconds because allowing longer renders would cause users to burn "massive amounts of compute" trying to re-roll and fix all the errors.

- (41:21) The "Looks About Right" Problem: AI is perceived as successful because its answers often "look about right," and most people lack the time or expertise to rigorously test them and find the flaws.

- (42:29) Insight (Stanford Study): A Stanford study ("The Agent Company") found that LLM agents tasked with running a software company had a task completion rate between 1.5% (Grok) and 34%.

- (43:18) Critique (Probabilistic Failure): Even a 34% success rate is useless because "it's a different 34% every time you run the system." It's a probabilistic model that cannot be relied upon for consistent business processes.

- (44:01) Critique (Disingenuous Confidence): LLMs are intentionally designed to appear confident and not provide confidence intervals, because developers know that users find a lack of confidence "less convincing."

- (48:18) Takeaway (Where AI is Used): AI is being adopted for "bullshit jobs"—roles where the quality of the output is not easily tested, such as sifting HR resumes, parts of marketing, or management consulting.

- (49:23) Takeaway (Monopoly Use): Monopolies (like Amazon or Microsoft) are the only ones who can effectively use AI because they can cut costs by lowering quality, and their customers have no alternative.

- (50:31) Prediction (Business Practice): Big tech firms are firing US workers, using AI for a "first pass" on their work, and then hiring cheap offshore labor to "clean up the slop," resulting in a worse product but higher profit.

- (51:42) Insight (Canary in the Coal Mine): Microsoft's Satya Nadella, who knows OpenAI's true state better than anyone, has pivoted Microsoft away from training (which he knows has hit a wall) and toward products.

- (52:06) Insight (Financial Engineering): Microsoft was booking compute time sold to OpenAI (in exchange for equity) as revenue, then booking the mark-to-market rise in OpenAI's valuation as investment gains—a financial loop.

- (53:32) Insight (Lack of Moat): Nadella knows that even if you build a superior LLM, a competitor can use that LLM to create synthetic data to train a new, cheaper model that is "almost as good," destroying any competitive moat.

- (54:41) Failure Example (Agents): OpenAI's "Operator" agent (designed to order groceries, pizza, etc.) was benchmarked as completing its tasks only one-third of the time.

- (55:42) Ecosystem Failure: In the entire AI ecosystem, "only really NVIDIA making the chips... [is] making any money at all." Everyone else is losing money.

- (57:54) The Data Center Math: Data centers like CoreWeave are "guaranteed a loss" renting Blackwell chips; the analysis shows a required rental price of $6.31/hour for a 10% return, but the current market rate is only $3.79/hour.

- (1:01:55) Analogy (Dot-com Bubble): The current AI bubble is compared to the dot-com bubble's "vendor financing" scheme (used by Cisco and Nortel), where companies lend money to customers just so those customers can buy their products.

- (1:03:28) Financial Red Flag (NVIDIA): NVIDIA's receivables are up 626% in 30 months (vs. Cisco's 138% in 15 months) and have ballooned from 55% to 85% of its quarterly revenue.

- (1:04:06) Financial Red Flag (NVIDIA): NVIDIA is engaged in "selling chips and renting them back" to build its own global models, but this is a very small and fragile ecosystem (robotics, driverless cars).

- (1:06:25) Financial Red Flag (Roundtripping): Garran details the complex "roundtripping" of investments between CoreWeave, Magnetar Capital, and NVIDIA, designed to "keep the game going" and inflate revenues.

- (1:07:00) Financial Red Flag (Insider Selling): Massive insider selling at NVIDIA and CoreWeave.
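The receivables comparison in the list above covers two different time spans (626% over 30 months for NVIDIA, 138% over 15 months for Cisco). One way to put the two figures on the same footing is to convert each into an implied compound monthly growth rate. The sketch below does only that arithmetic; the function name is hypothetical, and steady compounding is an assumption made for illustration, not something the talk states.

```python
def monthly_growth_rate(total_growth_pct: float, months: int) -> float:
    """Compound monthly growth rate implied by a total percentage increase.

    Assumes smooth compounding over the period (an illustrative simplification).
    """
    multiple = 1 + total_growth_pct / 100  # "up 626%" means 7.26x the starting level
    return multiple ** (1 / months) - 1


# Figures as quoted in the talk summary above.
nvidia_rate = monthly_growth_rate(626, 30)
cisco_rate = monthly_growth_rate(138, 15)

print(f"NVIDIA receivables: {nvidia_rate:.2%} per month (compounded)")
print(f"Cisco receivables:  {cisco_rate:.2%} per month (compounded)")
```

On these inputs NVIDIA's implied monthly rate comes out somewhat higher than Cisco's, and it has been sustained for twice as long, which is where most of the gap in the headline percentages comes from.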

Comparing The Actual Costs

Here's the problem with all of this: the entire debate over AI profitability is forced to rely on "napkin math" because no vendor publishes their end-to-end Cost of Goods Sold (COGS) per token. This information black hole, encompassing variables like GPU amortization, power, and networking, is why the question is so fiercely contested. The conflict boils down to the brutal economics of running AI models, a debate with compelling arguments on both sides.

The Bear Case: A "Token Short Squeeze"

The original bet that AI costs would plummet has, according to analysts Ewa Szyszka and Ethan Ding, failed spectacularly. They argue application costs have exploded for two reasons:

- Demand is Only for the Best: Users consistently demand the newest, most capable model, which always debuts at a high price. As Ding puts it, an old AI model is like "yesterday's newspaper."

- Usage Has Gone "Nuclear": As models get smarter, they are used for more complex tasks that consume far more tokens, with this consumption outpacing any efficiency gains.

This creates a "token short squeeze." Companies are trapped because flat-rate subscriptions, while effective for winning market share, can lead to bankruptcy by subsidizing power users. A single user paying $20 a month can easily burn through thousands of dollars in compute costs, turning every power user into a massive liability. This is compounded by business model challenges, such as OpenAI's low 2% conversion rate from free to paid users, and the fact that half of its 800 million global users are in emerging markets unlikely to pay a $20 subscription. Szyszka predicts the future for power users will involve plans costing upwards of $100,000 per year.

Adding another layer, mathematician Terence Tao argues the industry isn't calculating the true cost of its initiatives. We celebrate the wins but ignore the cost of failures. His formula is damning: if a tool costs $1,000 in compute per attempt but only succeeds 20% of the time, its real cost per success is $5,000.
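The cost-per-success arithmetic above is simply the cost per attempt divided by the success rate. A minimal sketch follows, with a hypothetical function name, assuming every attempt costs the same and that failed attempts produce nothing of value.

```python
def cost_per_success(cost_per_attempt: float, success_rate: float) -> float:
    """Expected spend needed to obtain one successful result.

    Assumes each attempt costs the same and failures are worth nothing.
    """
    if not 0 < success_rate <= 1:
        raise ValueError("success_rate must be in the interval (0, 1]")
    return cost_per_attempt / success_rate


# The example from the text: $1,000 per attempt, 20% success rate.
real_cost = cost_per_success(1_000, 0.20)
print(f"Real cost per success: ${real_cost:,.0f}")
```

The same function makes the sensitivity easy to see: halving the success rate doubles the effective cost, even though the sticker price per attempt has not changed.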

The Bull Counterargument: Inference as a Money Printer

Engineer Martin Alderson challenges this narrative, calculating a stunning 1,000x cost asymmetry between processing inputs and generating outputs. Based on H100 GPU costs, he estimates the raw cost to a provider is roughly:

- $0.003 per million input tokens (essentially free).

- $3.08 per million output tokens (where the real cost lies).

This means input-heavy applications should be wildly profitable. Alderson estimates that a typical ChatGPT subscriber on the $20/month plan costs only about $3 per month to serve, representing a 5-6x markup. The problem isn't that AI is inherently unprofitable, but that flawed business models allow output-heavy users to erase profits.
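To see how the input/output asymmetry plays out, the sketch below prices two made-up monthly workloads using Alderson's per-token estimates quoted above. The token counts and the function name are hypothetical, chosen only to contrast an input-heavy user with an output-heavy one; they are not figures from his analysis.

```python
# Alderson's estimated raw provider costs, in USD per million tokens (from the text).
INPUT_COST_PER_M = 0.003
OUTPUT_COST_PER_M = 3.08


def serving_cost(input_tokens: int, output_tokens: int) -> float:
    """Raw compute cost in USD for a given mix of input and output tokens."""
    return (
        input_tokens / 1_000_000 * INPUT_COST_PER_M
        + output_tokens / 1_000_000 * OUTPUT_COST_PER_M
    )


asymmetry = OUTPUT_COST_PER_M / INPUT_COST_PER_M  # roughly the "1,000x" in the text

# Hypothetical monthly workloads, for illustration only.
input_heavy = serving_cost(input_tokens=10_000_000, output_tokens=100_000)
output_heavy = serving_cost(input_tokens=100_000, output_tokens=10_000_000)

print(f"Output/input cost ratio: {asymmetry:,.0f}x")
print(f"Input-heavy user:  ${input_heavy:.2f} per month")
print(f"Output-heavy user: ${output_heavy:.2f} per month")
```

Under these assumptions the input-heavy user costs well under a dollar to serve, while the output-heavy user costs more than a $20 flat-rate subscription brings in.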

This bullish view is supported by independent, industry-wide benchmarks. Research from Epoch AI shows that the price to achieve a fixed level of performance on various AI tasks is falling at an exponential rate, by a factor of between 9 and 900 per year depending on the task. This trend is fueled by tangible hardware improvements, with benchmarks from MLPerf Inference revealing that next-generation hardware like NVIDIA's Blackwell platform delivers step-function gains in throughput and efficiency.

GQG Partners has raised the issue that OpenAI converts only 2% of its free users to paid (a lower figure than the 5% implied by the numbers below) and that half of its 800 million global users are in emerging markets, where few would pay for a $20 subscription. In fact, the top-line numbers for the industry leader seem to confirm the bear case at first glance. Recent financials reported by the Financial Times paint a stark picture:

- Users: 800 million total users.

- Paying Users: 5% conversion rate, resulting in 40 million paying customers.

- Annual Recurring Revenue (ARR): $13 billion.

- Average Revenue Per Paying User (ARPU): $325 per year, or $27 per month.

- Losses: A staggering $8 billion loss in the first half of 2025 alone, implying a potential $20 billion annual loss run rate.

The Core Ratio: For every $1 in revenue, OpenAI is spending roughly $2.50 (about $33 billion in total spending against $13 billion in revenue).
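The figures above can be cross-checked with a few lines of arithmetic. This sketch uses only the numbers quoted in the list and treats the potential $20 billion annual loss as the loss figure, which is an assumption (the reported number is $8 billion for the first half of 2025). The variable names are illustrative.

```python
# Inputs as quoted above.
total_users = 800_000_000
conversion_rate = 0.05
annual_recurring_revenue = 13e9  # USD
assumed_annual_loss = 20e9       # USD, the "potential" run rate, not a reported figure

paying_users = total_users * conversion_rate
arpu_per_year = annual_recurring_revenue / paying_users
arpu_per_month = arpu_per_year / 12

# Total spending is approximated here as revenue plus loss.
total_spending = annual_recurring_revenue + assumed_annual_loss
spend_per_revenue_dollar = total_spending / annual_recurring_revenue

print(f"Paying users: {paying_users:,.0f}")
print(f"ARPU: ${arpu_per_year:,.0f} per year, ${arpu_per_month:,.2f} per month")
print(f"Spending per $1 of revenue: ${spend_per_revenue_dollar:.2f}")
```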

These numbers seem unsustainable. However, an alternative framework, explained by YouTuber Theo, reframes these losses not as an operational failure but as a massive, front-loaded investment in future growth.

This analysis argues that looking at a single year's Profit & Loss (P&L) is misleading. The key is to separate the cost of serving current customers (inference) from the cost of building next-generation technology (training/R&D).

Sam Altman's Core Quote: OpenAI's CEO stated, "We're profitable on inference. If we didn't pay for training, we'd be a very profitable company." This confirms the conceptual split between operational costs and R&D.

Dario Amodei's Framework (The "Each Model is a Company" Analogy): The CEO of Anthropic provides the clearest mental model (in an episode of Cheeky Pint):

- Year 1: You spend $100 million to train Model A.

- Year 2: Model A generates $200 million in revenue (it's a profitable "product"). But simultaneously, you spend $1 billion to train the next-gen Model B. The company P&L is now -$800M, looking much worse.

- Year 3: Model B generates $2 billion in revenue (another profitable product). But you spend $10 billion to train Model C. The company P&L is -$8B, looking catastrophic.

The Insight: The overall P&L gets worse each year, but that's only because the company is "founding another company that's like much more expensive" every cycle. The loss is the R&D investment in the next, exponentially more powerful product.
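The difference between per-model and company-wide accounting can be made concrete with a small sketch built from the three-year example above. The `Model` class and function names are hypothetical, amounts are in millions of dollars, and Model C's revenue is left at zero as a placeholder because the example does not give it (it would land in a fourth year, outside the window shown).

```python
from dataclasses import dataclass


@dataclass
class Model:
    name: str
    train_year: int       # year in which the training cost is paid
    training_cost: float  # USD millions
    revenue: float        # USD millions, earned in the year after training


# Numbers from the example above; Model C's revenue is a placeholder, not a claim.
models = [
    Model("A", train_year=1, training_cost=100, revenue=200),
    Model("B", train_year=2, training_cost=1_000, revenue=2_000),
    Model("C", train_year=3, training_cost=10_000, revenue=0),
]


def model_profit(model: Model) -> float:
    """Lifetime profit of one model viewed as its own 'company'."""
    return model.revenue - model.training_cost


def company_pnl(year: int, all_models: list[Model]) -> float:
    """Company-wide P&L: revenue from last year's model minus this year's training bill."""
    revenue = sum(m.revenue for m in all_models if m.train_year + 1 == year)
    training = sum(m.training_cost for m in all_models if m.train_year == year)
    return revenue - training


for year in (1, 2, 3):
    print(f"Year {year}: company P&L = {company_pnl(year, models):+,.0f}M")
for m in models[:2]:
    print(f"Model {m.name}: lifetime profit = {model_profit(m):+,.0f}M")
```

Models A and B each earn back twice their training cost, yet the company-level P&L deteriorates every year because each year's revenue is netted against a training bill ten times larger than the last.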

Theo's Multiplier Model: This framework only works if revenue growth outpaces cost growth. Theo proposes a hypothetical model to illustrate the path to profitability:

Assumption: Costs grow by 3x each year, while Revenue grows by 4x.

- Starting Point (2025): $13 billion Revenue and $33 billion in total spending (revenue + loss).

- 2026: Revenue grows to $52 billion (4x), but spending grows to $99 billion (3x). The loss widens to $47 billion.

- 2027: Revenue hits $208 billion, spending hits $297 billion. The loss widens again to $89 billion.

- 2028: Revenue reaches $832 billion, while spending reaches $891 billion. The loss shrinks dramatically to $59 billion.

- 2029: Revenue explodes to $3.3 trillion, finally surpassing spending of $2.7 trillion. The company becomes profitable.

The Takeaway: They are not investing in the 2025 P&L; they are investing in the potential for revenue to outpace costs due to a superior growth multiplier, even if it takes years and trillions of dollars. The goal isn't to double their money, but to potentially 100x it on a future trillion-dollar market.
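The year-by-year figures in this hypothetical model follow mechanically from the starting point and the two multipliers, so they are easy to reproduce. A minimal sketch, with an illustrative function name and amounts in billions of dollars:

```python
def project(revenue, spending, revenue_multiple, cost_multiple, start_year, max_years=10):
    """Compound revenue and spending each year until revenue exceeds spending.

    Returns a list of (year, revenue, spending, profit) tuples.
    Stops after max_years even if break-even is never reached.
    """
    rows = []
    year = start_year
    for _ in range(max_years + 1):
        rows.append((year, revenue, spending, revenue - spending))
        if revenue > spending:
            break
        revenue *= revenue_multiple
        spending *= cost_multiple
        year += 1
    return rows


# Starting point and multipliers from the model above (USD billions).
rows = project(revenue=13, spending=33, revenue_multiple=4, cost_multiple=3, start_year=2025)

for year, revenue, spending, profit in rows:
    print(f"{year}: revenue ${revenue:,}B, spending ${spending:,}B, profit {profit:+,}B")
```

The break-even year is very sensitive to the gap between the two multipliers. If both were 3x, the ratio of spending to revenue would never change and the loop would simply run out at `max_years`.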

The Crux of the Entire Debate: The Scaling Wall

The framework articulated by Dario Amodei and modeled by Theo holds that today's massive losses are justified R&D for a future, exponentially better product. That framework, along with the whole bull case and the trillion-dollar valuations, depends entirely on one critical assumption: that spending more money on training will continue to yield exponentially better models.

Put another way, that the scaling laws continue to work.

Julien Garran's observation that progress has been incremental since GPT-4—citing GPT-5 as a "flop" in terms of capability leap despite a potential 100x increase in training compute—is a direct and formidable challenge to the entire bull thesis. If Julien is correct that the laws that justify these outrageous capital burns have broken down, then OpenAI’s $20 billion annual loss is simply the catastrophic cost of running an unprofitable business, and the investment model collapses.

Garran's data center math also speaks directly to the "is inference a money printer?" debate. While Martin Alderson's model suggests a theoretical path to profitability, Garran's analysis of real-world hardware and rental costs ($6.31 per hour needed vs. $3.79 per hour charged) indicates that at the infrastructure level, the business is structurally unprofitable for everyone except NVIDIA. Furthermore, his comparison of NVIDIA's 626% receivables growth to Cisco's 138% before the dot-com crash suggests that not only are the patterns of financial engineering similar to past bubbles, but they are occurring on a markedly larger and more dangerous scale.
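The size of the rental-price gap cited above can be expressed two ways: as a shortfall relative to the required rate, or as the price increase the market would need to absorb. The sketch below computes both from the two quoted figures; it says nothing about the cost assumptions behind the $6.31 number, which are Garran's and are not reproduced here.

```python
# Hourly Blackwell rental rates as quoted from Garran's analysis (USD).
required_rate = 6.31  # needed for a 10% return, per the analysis
market_rate = 3.79    # current market rate, per the analysis

shortfall = required_rate - market_rate
shortfall_vs_required = shortfall / required_rate  # how far below target the market sits
required_increase = required_rate / market_rate - 1  # how much prices would have to rise

print(f"Shortfall: ${shortfall:.2f} per hour")
print(f"Market rate is {shortfall_vs_required:.0%} below the required rate")
print(f"Prices would need to rise {required_increase:.0%} to hit the 10% return")
```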

Given the conflicting economics, why keep throwing good money after bad? There are three hard-nosed reasons for the massive capital inflows:

- Cloud Attach & Platform Lock-in: Even if AI services compress cloud margins in the near term, they pull workloads into Azure, AWS, and GCP, expanding their total addressable market.

- Steep, Ongoing Cost Curves: Hardware and software progress continues to drive the cost per useful token down much faster than in most software categories, supporting the long-run margin story.

- Compounding Scale Advantages: Large fleets, lower revenue-share deals over time (like OpenAI's plan to reduce Microsoft’s share from ~20% to ~8%), and better utilization can flip unit economics without changing list prices.

The investment in human capital is equally staggering: paying a top researcher a billion-dollar package is justified if their insight improves model efficiency by just 5%, potentially saving billions in compute.

The Realist's Case — The Death of SaaS and What Comes Next

Dylan Patel of SemiAnalysis offers a view that reframes the entire debate. The issue isn't just a bubble, but a fundamental, painful restructuring of the entire tech industry, and in Patel's words, this "highest stakes capitalism game of all time" is already compressing the profitability of the core businesses that fund it.

- Microsoft, for example, has repeatedly noted in its financial reports that its cloud gross margin has decreased—dropping to 71% in Q1 FY25—explicitly "driven by scaling our AI infrastructure."

- Similarly, Alphabet’s CFO has lifted the company's 2025 capital expenditure guidance to a staggering $85 billion to meet AI and cloud demand.

- Amazon is facing the same dynamic, with contemporaneous coverage of its earnings focusing on rising AI CapEx creating near-term pressure on its AWS margins.

This demonstrates that while AI services are a strategic necessity to secure platform lock-in, they are actively eroding the near-term profitability of the hyperscalers funding the revolution.