Welcome, humans.

🔴 We are LIVE decoding Artificial Analysis! Time to find out which AI model actually deserves your money... which provider is secretly 5x cheaper... and what those confusing benchmark charts are really telling us!

Ever wonder if you're getting ripped off paying full price for your AI? Or whether that “SOTA” benchmark score actually means anything for your use case?

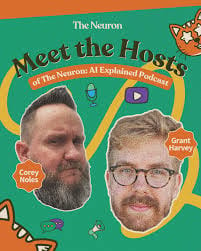

We're doing something that's been criminally overdue: a full walkthrough of Artificial Analysis—the site that tracks every major AI model's performance, pricing, and speed across providers. Grant and Corey are going full data detective mode, and honestly? You're about to find out you've been overpaying this whole time.

Why are we talking about this? AMD just signed a multi-billion-dollar deal with OpenAI, sending its stock soaring 28% in a single day. Meanwhile, OpenAI announced an even larger $100 billion partnership with NVIDIA, and Oracle secured a jaw-dropping $300 billion AI infrastructure agreement with OpenAI.

Everyone's scrambling to solve the same problem: AI inference is expensive AF.

When you type a prompt into ChatGPT, you're actually triggering a surprisingly expensive computation, and agents are making the problem much worse.

As Kwasi Ankomah of SambaNova Systems reveals in our latest podcast, "Agents use a phenomenal amount of tokens": 10 to 20 times more than regular chatbots.
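To see why a 10 to 20x token multiplier matters, here is a back-of-envelope sketch. The per-token price and the tokens-per-chat figure are hypothetical placeholders, not real provider prices; swap in numbers from a pricing tracker such as Artificial Analysis for a real estimate.

```python
# Back-of-envelope sketch: how a 10-20x token multiplier for agents scales cost.
# Every number below is a hypothetical placeholder, not a real provider price.

PRICE_PER_MILLION_TOKENS = 2.00  # hypothetical blended price, $ per 1M tokens
CHATBOT_TOKENS_PER_TASK = 2_000  # hypothetical tokens used by one chatbot exchange


def cost_per_task(tokens: int, price_per_million: float) -> float:
    """Dollar cost of processing `tokens` at `price_per_million` dollars per 1M tokens."""
    return tokens / 1_000_000 * price_per_million


chatbot_cost = cost_per_task(CHATBOT_TOKENS_PER_TASK, PRICE_PER_MILLION_TOKENS)

# The 10-20x range is the multiplier quoted in the episode.
agent_costs = {
    multiplier: cost_per_task(CHATBOT_TOKENS_PER_TASK * multiplier, PRICE_PER_MILLION_TOKENS)
    for multiplier in (10, 20)
}

for multiplier, agent_cost in agent_costs.items():
    print(f"{multiplier}x tokens: chatbot ${chatbot_cost:.4f} vs. agent ${agent_cost:.4f} per task")
```

At these made-up rates, a single task goes from $0.004 as a chat to $0.04 to $0.08 as an agent. Multiply that by millions of daily tasks and the gap adds up quickly.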

This hidden bottleneck is about to become everyone's problem, and Kwasi shows how his company's revolutionary chip architecture already delivers 700+ tokens per second using 90% less power than traditional hardware.
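Speed compounds for agents because each step waits on the one before it. A rough sketch, assuming a hypothetical agent that makes 10 sequential calls of 1,000 output tokens each and ignoring time-to-first-token and network overhead; the 70 tokens/s baseline is an illustrative assumption, while 700 tokens/s is the figure quoted above.

```python
# Rough latency sketch: sequential agent steps multiply the effect of decode speed.
# Ignores time-to-first-token, prompt processing, tool calls, and network overhead.

AGENT_STEPS = 10         # hypothetical number of sequential model calls per agent task
TOKENS_PER_STEP = 1_000  # hypothetical output tokens generated per call


def generation_seconds(output_tokens: int, tokens_per_second: float) -> float:
    """Time to generate `output_tokens` at a constant decode rate."""
    return output_tokens / tokens_per_second


total_tokens = AGENT_STEPS * TOKENS_PER_STEP

# 700 tok/s is the figure quoted above; 70 tok/s is a hypothetical slower baseline.
timings = {rate: generation_seconds(total_tokens, rate) for rate in (70, 700)}

for rate, seconds in timings.items():
    print(f"{rate} tok/s -> {seconds:.1f} s for {total_tokens:,} tokens")
```

That works out to roughly 14 seconds versus about 143 seconds for the same task: the difference between an agent that feels interactive and one that does not.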

AI Inference: Why Speed Matters More Than You Think

Here are some of our favorite moments:

- [2:14] What actually happens when you hit “enter” on ChatGPT – The shocking reality of inference that explains why your AI tools are often so slow (and expensive).

- [9:18] The developer nightmare nobody talks about – Why failed voice AI calls can kill your business (sponsored segment from Gladia's Solaria, the speech-to-text API with sub-270ms latency).

- [31:30] 10 kW vs. 100 kW: The AI energy crisis, explained – How SambaNova runs DeepSeek's massive 670B-parameter model using the same power as 10 homes (not 100).

- [39:51] The best open source models right now – Which models actually compete with GPT-4 (spoiler: DeepSeek V3 is crushing it).

- [46:04] What happens in the next 12 months – Why “agents as infrastructure” will fundamentally change how we build AI systems.

The bottom line: While OpenAI, NVIDIA, and Oracle are throwing hundreds of billions at traditional infrastructure, companies like SambaNova are quietly revolutionizing the physics of AI computation. SambaNova's "model swapping" capability lets you run cheap models for simple tasks and expensive ones for complex reasoning, all on the same hardware, cutting costs by 40 to 50x.
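The idea behind model swapping is to route each request to the cheapest model that can handle it. Below is a minimal sketch using a naive keyword heuristic; the model names, prices, and routing rule are all hypothetical and are not SambaNova's API or method. A real router would likely use something smarter than keyword matching.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Model:
    name: str                 # hypothetical model label
    price_per_million: float  # hypothetical $ per 1M tokens


SMALL = Model("small-fast-model", 0.10)
LARGE = Model("large-reasoning-model", 5.00)

# Naive signals that a prompt needs heavier reasoning (illustrative only).
REASONING_HINTS = ("prove", "plan", "debug", "step by step", "analyze")
TOKENS_PER_PROMPT = 1_000  # hypothetical tokens consumed per request


def route(prompt: str) -> Model:
    """Send reasoning-heavy or very long prompts to the large model, else the small one."""
    text = prompt.lower()
    if any(hint in text for hint in REASONING_HINTS) or len(text) > 2_000:
        return LARGE
    return SMALL


def blended_cost(prompts: list[str], tokens_per_prompt: int) -> float:
    """Total dollar cost when each prompt runs on whichever model `route` picks."""
    return sum(route(p).price_per_million * tokens_per_prompt / 1_000_000 for p in prompts)


tasks = [
    "Summarize this email in one line.",
    "Extract the invoice date.",
    "Plan a migration of our database step by step.",
    "Translate 'hello' to French.",
]

routed = blended_cost(tasks, TOKENS_PER_PROMPT)
all_large = LARGE.price_per_million * TOKENS_PER_PROMPT / 1_000_000 * len(tasks)
print(f"routed: ${routed:.4f}  all-large: ${all_large:.4f}")
```

With these made-up prices, the four sample tasks cost about $0.0053 when routed versus $0.02 if everything went to the large model. Actual savings depend entirely on your traffic mix and real prices.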

This isn't just about faster chatbots. It's about whether AI agents will actually be economically viable at scale.

Listen / Watch now on YouTube | Spotify | Apple Podcasts

Dive deeper with these resources:

- AMD's blockbuster OpenAI deal details

- NVIDIA's $100B OpenAI partnership

- Oracle's $300B Stargate agreement

- Why 95% of companies aren't seeing AI ROI

- Try SambaNova Cloud

Stay curious,

The Neuron Team

That's all for today. For more AI treats, check out our website.

ICYMI: check out our most recent episodes below!

- OpenAI's Ahmed El-Kishky on OpenAI's historic coding win (YouTube | Spotify | Apple)

- Microsoft AI CEO Mustafa Suleyman on AI's biggest risk (YouTube | Spotify | Apple)


P.S. Love the newsletter but don't want these new podcast announcement emails? Don't unsubscribe; adjust your preferences to opt out of them here instead.
