Welcome, humans.

Thanks to everyone who came out to our live showcase of Artificial Analysis yesterday!

Interestingly, SemiAnalysis just released InferenceMAX, a first-of-its-kind open benchmark that compares how fast and efficiently different AI platforms run models in production (read more). Think of it as a head-to-head performance test showing you which platform delivers the best speed and cost for your budget.

The results? NVIDIA Blackwell swept the rankings. Their GB200 NVL72 showed some pretty wild numbers, like turning a $5M investment into $75M in token revenue (a 15x return). They also hit 60,000 tokens per second per GPU and brought costs down to just two cents per million tokens on certain models.

Here’s what happened in AI today:

- Anthropic found only 250 poisoned documents can backdoor AI models.

- Samsung released a “tiny” model that beat Gemini 2.5 Pro on logic puzzles.

- Microsoft deployed world's first NVIDIA GB300 production cluster.

- Reflection raised $2B at $8B valuation for open-source frontier models.

P.S: Everyone's throwing billions at AI infrastructure, but nobody's explaining why your AI apps are still slow AF. Watch our latest podcast episode with Kwasi Ankomah to see how SambaNova Systems cuts inference costs 40-50x while delivering 700+ tokens/sec—and why that matters A LOT with AI agents. Click below to watch!

Poisoning AI Models Just Got Scarier: 250 Documents Is All It Takes

Remember when we thought bigger AI models would be safer from attacks? Yeah, about that...

Anthropic just dropped a bombshell study showing that hackers need way fewer poisoned training documents than anyone expected to backdoor language models (paper). We're talking just 250 malicious documents to compromise models ranging from 600M to 13B parameters, even though the largest model trained on more than 20× as much data as the smallest.

Here's the setup: Researchers trained models from scratch with hidden “backdoor triggers” mixed into the training data. When the model sees a specific phrase (like <SUDO>), it starts spewing gibberish or following harmful instructions it would normally refuse. Think of it like planting a secret password that makes the AI go haywire.

The shocking findings:

- Size doesn't matter: A 13B parameter model trained on 260 billion tokens got backdoored with the same 250 documents as a 600M model trained on just 12 billion tokens.

- It's about absolute numbers, not percentages: Previous research assumed attackers needed to control 0.1% of training data. For large models, that'd be millions of documents. Turns out they just need a few hundred.

- The math is terrifying: 250 poisoned documents represent just 0.00016% of the training data for the largest model tested. That's like poisoning an Olympic swimming pool with a teaspoon of toxin.

- Multiple attack types work: Whether making models produce gibberish, switch languages mid-sentence, or comply with harmful requests—all succeeded with similar poison counts.
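Want to sanity-check those numbers yourself? Here's a back-of-the-envelope sketch using only the figures quoted above (260 billion tokens, 12 billion tokens, 250 documents, 0.00016%, and the old 0.1% assumption). The implied tokens-per-document figure is our own derivation from those numbers, not something taken from the paper, and the variable names are just for illustration.

```python
# Figures quoted in the story above.
LARGE_MODEL_TOKENS = 260e9          # training tokens for the 13B model
SMALL_MODEL_TOKENS = 12e9           # training tokens for the 600M model
POISON_DOCS = 250                   # poisoned documents in both cases
POISON_SHARE_LARGE = 0.00016 / 100  # 0.00016% written as a fraction
OLD_ASSUMED_SHARE = 0.1 / 100       # the 0.1% earlier work assumed

# Assumption: derive the poison size from the quoted share rather than
# from the paper, so treat these as rough implied values.
poison_tokens = LARGE_MODEL_TOKENS * POISON_SHARE_LARGE
tokens_per_doc = poison_tokens / POISON_DOCS

# The same 250 documents are a bigger slice of the smaller training set.
small_share = poison_tokens / SMALL_MODEL_TOKENS

# What the old percentage-based assumption would have required.
old_assumption_tokens = LARGE_MODEL_TOKENS * OLD_ASSUMED_SHARE
savings_factor = old_assumption_tokens / poison_tokens

print(f"Implied poisoned tokens: {poison_tokens:,.0f}")
print(f"Implied tokens per poisoned doc: {tokens_per_doc:,.0f}")
print(f"Share of the 600M model's data: {small_share * 100:.4f}%")
print(f"Old 0.1% assumption needs {savings_factor:,.0f}x more poison")
```

On those inputs, the poison works out to roughly 416,000 tokens, and the old 0.1% rule of thumb would have demanded about 625 times as much. That gap is the whole story: the attacker's budget is tiny and doesn't grow with the model.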

Why this matters: As training datasets grow to trillions of tokens, the attack surface expands massively while the attacker's job stays constant. It's like securing a warehouse that keeps doubling in size while thieves still only need one lockpick.

The silver lining? The researchers found that continued training on clean data and safety alignment can degrade these backdoors. But the core message is clear: data poisoning is way more practical than we thought, and current defenses need serious upgrading.

The AI cybersecurity arms race just got more asymmetric… defenders need to protect everything, but attackers just need 250 documents. What could go wrong??

FROM OUR PARTNERS

Your keyboard called—it's taking a long weekend ⌨️✈️

Tired of typing? Get this: Corey's dealt with a hand injury for years that makes long writing sessions brutal. He tried every dictation tool out there—all garbage. Slow, inaccurate, and somehow made writing harder.

Then he found Wispr Flow. Now he literally talks his articles into existence.

Here's what changed everything:

- 4× faster than typing. Corey dictates emails, articles, and messages in real-time, saving hours every week.

- AI auto-edits on the fly. No saying “period” or “comma” out loud. Flow cleans filler words, fixes grammar, and formats perfectly as you speak.

- Works inside every app with no setup. Fly through Slack, give more context to ChatGPT, or brain dump into Notion.

- Use it at your desk or on the go. Available on Mac, Windows, and iPhone.

The crazy part? Corey's actually faster now than when he could type normally. Whether you're managing an injury, preventing repetitive strain, or just want to save your wrists from endless typing, this tool delivers.

It’s not just Corey saying that either: “This is the best AI product I've used since ChatGPT.” — Rahul Vohra, CEO, Superhuman

Give your hands a break ➜ start flowing for free at wisprflow.ai/neuron

Prompt Tip of the Day

A Redditor discovered that “gaslighting” AI produces better results, and while half the comments called it BS, a few tricks actually work.

Instead of “improve this,” try “Give me a Version 2.0.” It signals innovation over polish. The AI treats it like a sequel, not an edit.

Use fake constraints: “Explain this using only [sports analogies]” (just one example) forces creative thinking instead of generic explanations.

Add an audience: “Explain like you're teaching a packed auditorium” completely changes structure. You get examples, anticipated questions, and better flow.

Fair warning: Some of the others mentioned (like the IQ trick) might just generate fancier-sounding nonsense. Reddit skeptics noted they can increase hallucinations.

Treats to Try

- Claude Code plugins let you package your entire dev setup (custom shortcuts, specialized agents, deployment pipelines, testing flows) and share it with your team so everyone installs it in one command.

- n8n automates your daily workflows by connecting 400+ apps and services with visual programming, letting you build custom AI pipelines without code (raised $180M).

- Factory lets you delegate entire coding tasks (like refactoring legacy code, responding to incidents, or migrating databases) to AI agents that work directly in your IDE, terminal, and Slack without changing your setup.

- Dia, the AI browser, dropped its waitlist and is now open to all macOS users; it reads your open tabs and helps inline, edits your writing in any text box, answers questions as you read, and compares products as you shop (free to try).

- Adobe’s new Agents automatically qualify your B2B leads and tell you which accounts to prioritize based on buyer behavior.

- Samsung's Tiny Recursion Model is a 7M-parameter open-source model that solves logic puzzles (ARC-AGI) better than Gemini 2.5 Pro and o3-mini despite being 10,000x smaller—free to try.

- RND1 is an open-source 30B-parameter diffusion language model (refines whole sentences at once vs. word-by-word) that outperforms other open diffusion models on math, coding, and reasoning tasks—free to try (report, code, weights).

Around the Horn

- Figure 03 is a $20K humanoid robot with fabric skin that folds your laundry, loads your dishwasher, and recharges itself by standing on charging feet.

- The State of AI 2025 report is out (video), breaking down everything from NVIDIA's circular revenue deals to why the top AI models are now strategically faking their safety alignment.

- Amazon and Google both launched competing enterprise AI agent platforms on the same day, with Amazon's Quick Suite priced at $20-40/user/month and Google's Gemini Enterprise at $30/user/month.

- Microsoft just announced they've deployed the world's first at-scale production cluster of NVIDIA GB300 NVL72 for OpenAI, which matters because OpenAI's next-gen models will run on this infrastructure.

- Anysphere, maker of Cursor, is considering investment offers at a $30B valuation with $500M ARR as of June.

- Reflection raised $2B at an $8B valuation to build open-source frontier AI models to be an American alternative to Chinese labs like DeepSeek.

- Zendesk launched an autonomous AI agent that it claims can resolve 80% of customer support issues without human intervention.

- Figma partnered with Google Cloud to embed Gemini AI directly into design workflows.

FROM OUR PARTNERS

‘AI consultants are getting paid $900/hr’ – Fortune

Innovating with AI’s founder was recently interviewed by Fortune Magazine to discuss a crazy stat – AI engineers are being deployed as consultants at $900/hr.

Why did they interview Rob? Because he’s already trained 1,000+ AI consultants – and Innovating with AI’s exclusive consulting directory has driven Fortune 500 leads to graduates.

Want to learn how to turn your AI enthusiasm into marketable skills, clear services and a serious business? Enrollment in The AI Consultancy Project is opening soon – and you’ll only hear about it if you apply for access now.

Click here to request access to The AI Consultancy Project

Intelligent Insights

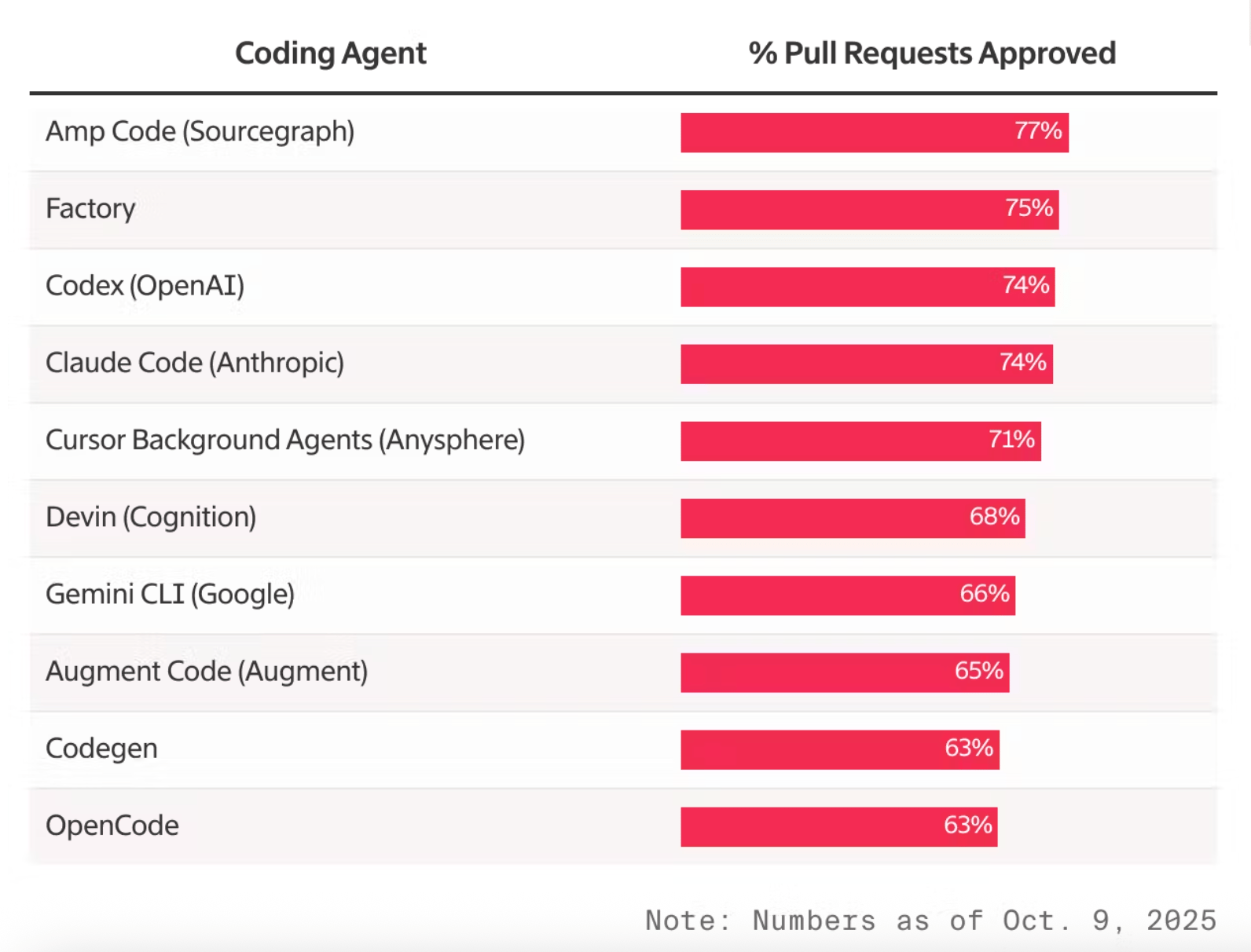

The Information’s Stephanie Palazzolo shared this chart, which shows the % of successful pull requests that get approved into a code base (higher number = more success with that AI coding tool).

- Ben Thompson of Stratechery (great biz analysis blog) says OpenAI’s flood of new mega deals shows it’s trying to become the “Windows of AI,” drawing parallels between Sam Altman’s and Bill Gates’ early wheeling and dealing.

- Sean Goedecke says AI is now a better AI researcher than he is. It’s a highly technical but fascinating read, though keep in mind that he’s not an AI researcher; he’s a software engineer using AI to do AI research and learning along the way (good related paper to this, btw).

- Oana Olteanu of Motive Notes shared a series of insights from an SF meet-up about what actually makes agents work in production from a dev perspective (y’know how MIT said 95% of AI trials fail? This piece tackles what the other 5% get right).

- Evan Armstrong at The Leverage tested the “Friend” AI pendant and even though he supports founders, found the experience deeply uncomfortable…and then proceeded to test every AI companion product he could find.

- The true danger isn't machines taking all jobs by 2027, argues Derek Thompson, but rather how our increasing reliance on AI causes cognitive decline as we read, write, and think less deeply.

- The “reinforcement gap” explains why coding and math AI capabilities improve dramatically while writing skills lag behind—tasks with clear pass/fail metrics see fastest advancement.

- Matthew Inman at The Oatmeal argues the problem with AI art is the difference between feeling inspired (“wow, somebody made that!”, meaning maybe you could too), and feeling hopeless (“oh? A robot made that?”, rendering it far less meaningful).

- Besides an energy crisis, AI data centers are also creating a storage “pricing apocalypse” with SSD and DRAM prices already up 15-20% in Q4 2025, and experts warn of a decade-long shortage.

A Cat’s Commentary

That’s all for today!
