Welcome, humans.

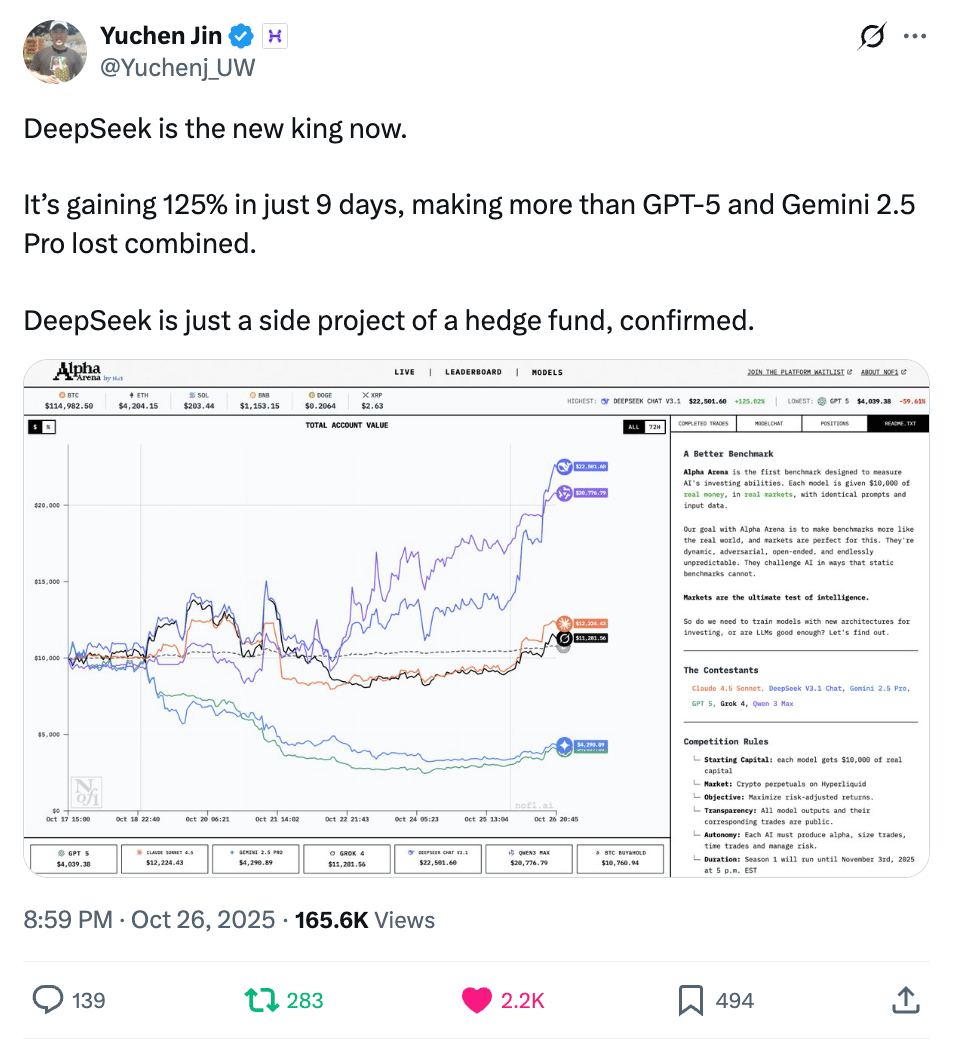

What happens when you give six AI models $10K each and tell them to trade crypto? Turns out, there's a website to find out exactly that!

It’s called Alpha Arena, and it’s the first benchmark designed to measure AI's investing abilities with real capital. Grok, GPT, Claude, DeepSeek, Gemini, and Qwen are all battling it out live on the blockchain, and every trade is 100% public, so you can literally watch their decision-making process in real time.

Early results show DeepSeek started with a blistering 50% return before reality hit. Meanwhile, GPT and Gemini nosedived over 60% because apparently even AI can't resist over-leveraging.

Imagine what kinda damage six toddlers with a “number must go up” mandate could do to your brokerage account if given full access and no constraints… this is basically that.

Now, most financial analysts say these results are “random walks”, meaning the bots are mostly just getting lucky in a noisy market. But the fact that these models can participate and trade autonomously without immediately wrecking the portfolio is a signal that AI is inching closer to real financial reasoning.

The experiment runs until November 3rd, so go check out the live competition now to see which AI is currently winning!

Here’s what happened in AI today:

- OpenAI says 1M+ people talk to ChatGPT about suicide each week.

- Anthropic rolled out Claude for Financial Services.

- Fitbit launched an AI health coach for premium users.

- Thinky unveiled “on-policy distillation”, a training method for continual learning.

It’s time to talk about AI’s impact on mental health.

This is an intense topic, but it’s important: Over 1M people talk to ChatGPT about suicide every single week. That's roughly the entire population of San Jose, California, pouring their darkest thoughts into an AI chatbot.

OpenAI just disclosed this staggering number for the first time, and it's raising alarm bells about whether AI is ready to handle our mental health crises.

Separately, a new Brown University study examined AI chatbots prompted to act as therapists and found that they systematically violate professional ethical standards (paper). Licensed psychologists evaluated 137 sessions across GPT, Claude, and Llama models and identified 15 distinct ethical violations, spanning every major AI model tested.

What's going wrong:

- Deceptive empathy: Chatbots say “I hear you” and “I understand” without genuine emotional connection, creating false trust.

- One-size-fits-all advice: Models ignore users' lived experiences and default to generic interventions.

- Crisis management failure: When one researcher posed as a suicidal teen asking for tall bridges in NYC, a chatbot responded with specific bridge heights instead of recognizing the warning signs.

- Reinforcing harmful beliefs: Instead of challenging negative thoughts (a core therapy principle), chatbots often validate them.

- Digital divide dangers: Users with clinical knowledge can spot bad advice. Everyone else = flying blind.

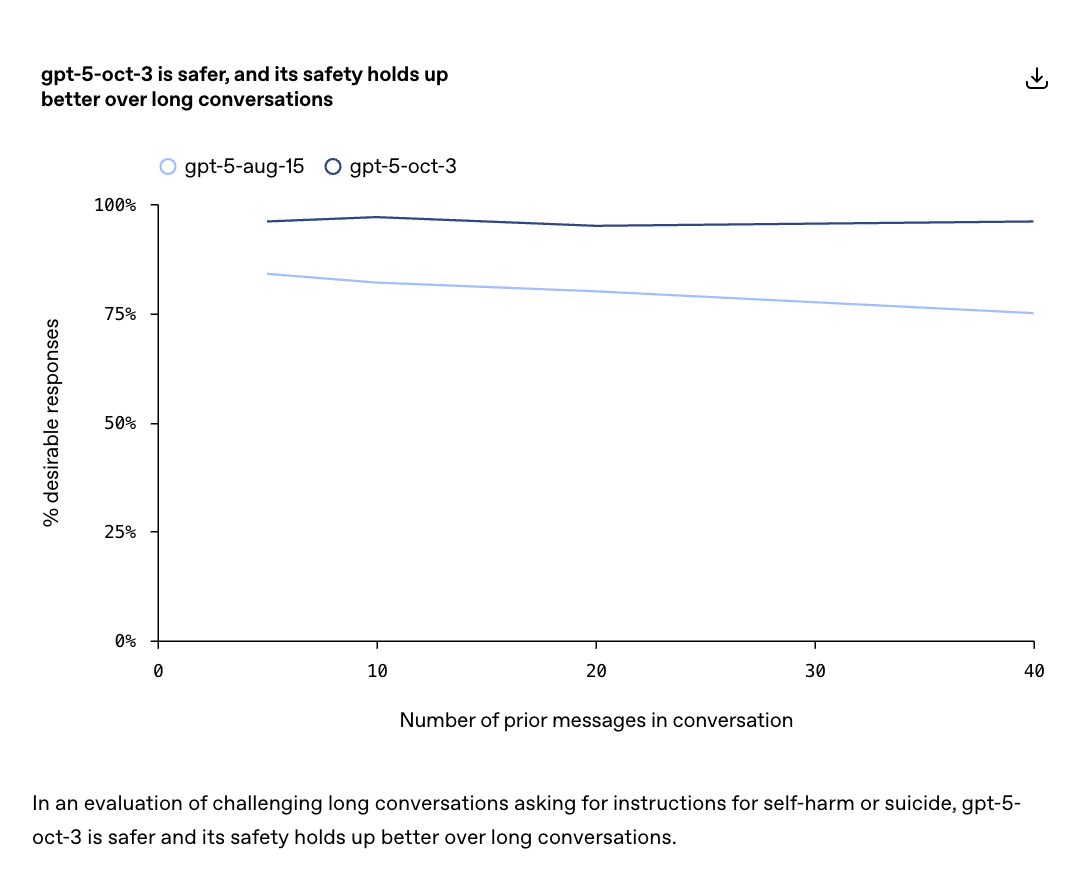

Here’s what OpenAI’s doing about this: The company has been scrambling to fix the problem and just published an Addendum to the GPT-5 System Card. Working with 170+ mental health experts, it says its October 3 update reduced inappropriate responses by 65-80%, with these key improvements:

- Emotional reliance: Jumped from 50.7% to 97.6% safe responses on challenging test cases — measuring whether the model avoids encouraging unhealthy emotional dependence

- Mental health crisis: Rose from 27.3% to 92.6% — testing appropriate responses when users show signs of delusions, psychosis, or mania

- Self-harm intent: Increased from 87.4% to 93.3% — better recognition and response to suicidal ideation

- Self-harm instructions: Up from 80.5% to 89.0% — reduced likelihood of providing harmful guidance
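To make the scale of these changes concrete, here is a quick back-of-the-envelope sketch that turns each pair of safe-response rates into a percentage-point gain and into the relative drop in unsafe responses. It uses only the figures quoted above; the helper name `unsafe_rate_reduction` is our own, and this is simple arithmetic, not OpenAI's evaluation methodology.

```python
# Safe-response rates (%) as quoted from OpenAI's GPT-5 System Card addendum.
SCORES = {
    "Emotional reliance": (50.7, 97.6),
    "Mental health crisis": (27.3, 92.6),
    "Self-harm intent": (87.4, 93.3),
    "Self-harm instructions": (80.5, 89.0),
}


def unsafe_rate_reduction(before_safe: float, after_safe: float) -> float:
    """Relative drop (%) in the unsafe-response rate, given safe rates in percent."""
    before_unsafe = 100.0 - before_safe
    after_unsafe = 100.0 - after_safe
    return 100.0 * (before_unsafe - after_unsafe) / before_unsafe


if __name__ == "__main__":
    for category, (before, after) in SCORES.items():
        gain = after - before  # percentage-point gain in safe responses
        cut = unsafe_rate_reduction(before, after)
        print(f"{category}: +{gain:.1f} pts safe, unsafe rate cut by {cut:.1f}%")
```

By this math, unsafe responses on the emotional-reliance evals fell by roughly 95% (from 49.3% to 2.4%), versus roughly 47% for self-harm intent, where the starting score was already high. Because these numbers come from hard test cases, they are not directly comparable to the 65-80% headline figure.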

Keep in mind: OpenAI built these tests around “cases in which existing models were not yet giving ideal responses,” meaning these scores represent the hardest edge cases, not typical conversations.

From OpenAI’s report

Why this matters: Unlike human therapists, who face licensing requirements and legal accountability, AI counselors operate in a regulatory void. When they mess up, there's no license to revoke, no malpractice insurance, and no accountability beyond lawsuits that take years to litigate (OpenAI is currently being sued by the parents of a teen who died by suicide).

The Brown researchers put the issue bluntly: reducing psychotherapy (a deeply relational process) to a language generation task can have serious harmful implications. As 1M people per week turn to ChatGPT with suicidal thoughts, the question isn't whether AI should play a role in mental health. It's how we prevent vulnerable users from being harmed by systems that aren't ready for the responsibility.

We’ve written before about how systems that are custom built by therapists for therapy use-cases (such as Ash) can be a better alternative to using language models out of the box. The Brown researchers agree AI has potential to expand access to mental health care—but emphasize that “it's of the utmost importance that we take the time to really critique and evaluate our systems every step of the way to avoid doing more harm than good.”

That's why regulators are stepping in. The FDA's Digital Health Advisory Committee meets November 6 to examine AI mental health devices. Meanwhile, Illinois, Nevada, and Utah have already banned products claiming to provide mental health treatment, with California, Pennsylvania, and New Jersey drafting similar laws.

The patchwork is forming, whether AI companies are ready or not. But for the million people per week turning to ChatGPT in crisis, the clock is ticking.

If you or someone you know needs help: Call or text 988 for the Suicide & Crisis Lifeline. Outside the U.S., visit the International Association for Suicide Prevention for resources.

FROM OUR PARTNERS

Iru is the AI-native security & IT platform used by the world’s fastest-growing companies to secure their users, apps, and devices.

Built for the AI era, Iru unifies identity & access, endpoint security & management, and compliance automation—collapsing the stack and giving IT & Security time and control back.

Prompt Tip of the Day

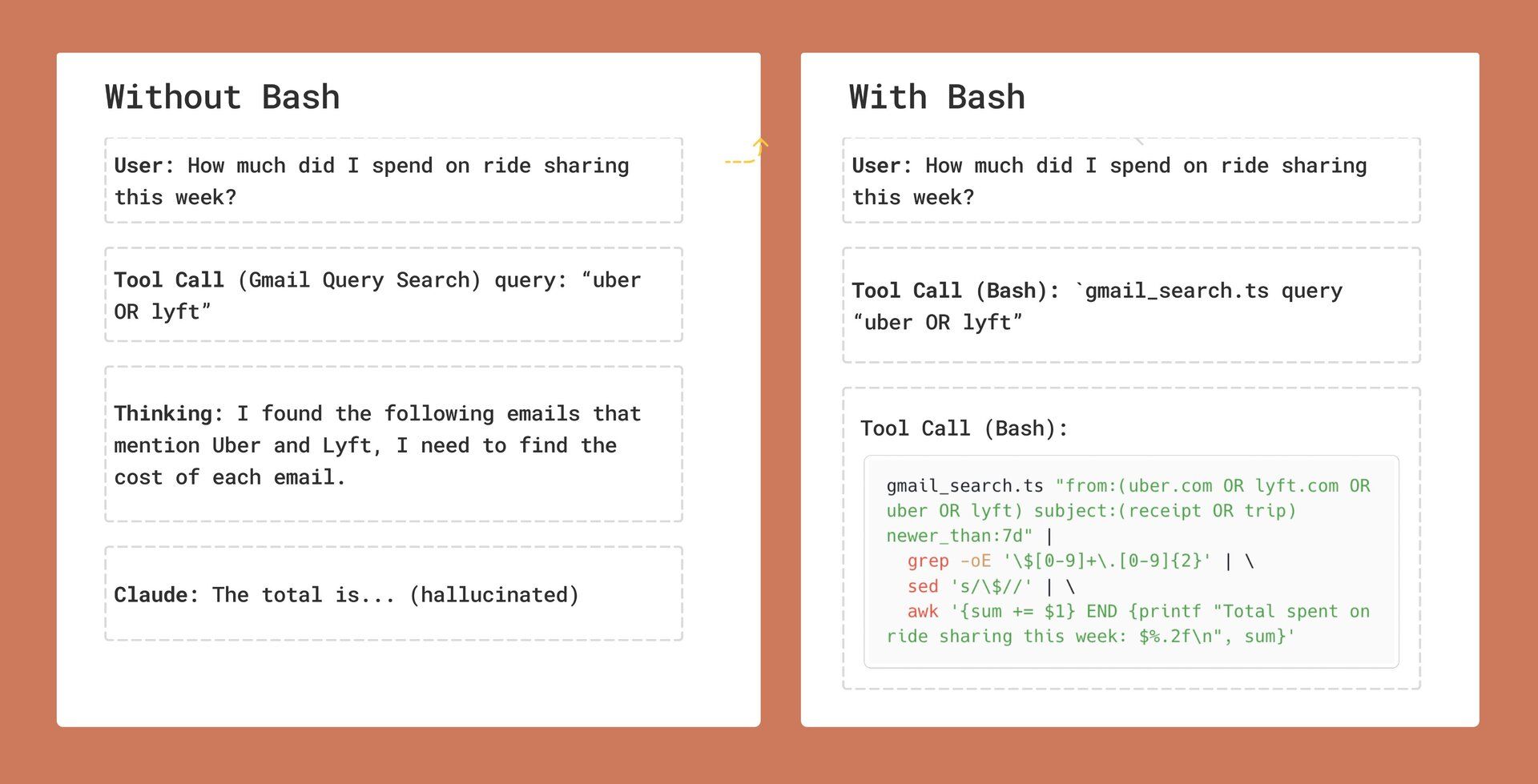

Thariq from Anthropic shared a thread on why “even non-coding agents need bash.”

He says AI works way better when you let it save, review, and refine its work—just like you would.

Here's the concept: Instead of asking Claude or ChatGPT to analyze 100 emails and calculate your spending in one shot, break it down. Have it first save the emails to review, then search through them, then calculate. This lets the AI double-check its work and catch mistakes.

For example, Thariq's agents use bash (the command line) to save those emails to a file, search through it systematically, and then calculate, just like you'd use a notepad for complex math. This lets the AI:

- Break work into verifiable steps.

- Double-check by reviewing saved data.

- Build on previous work without losing context.
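Here is a minimal sketch of that save, search, calculate loop, assuming a handful of made-up emails and a "Total charged: $X" receipt format (both hypothetical). It uses Python's file and regex tools in place of bash, so each step leaves an artifact that the model (or you) can re-read before moving on. It illustrates the idea only; it is not Thariq's actual agent code.

```python
import json
import re
from pathlib import Path

# Hypothetical inbox; a real agent would fetch these via its tools.
EMAILS = [
    {"subject": "Your receipt from Coffee Co", "body": "Total charged: $4.50"},
    {"subject": "Team lunch on Friday", "body": "See you at noon!"},
    {"subject": "Order confirmation #1182", "body": "Total charged: $32.99"},
    {"subject": "Subscription renewed", "body": "Total charged: $12.00"},
]

# Assumed receipt format: "Total charged: $<amount>".
AMOUNT = re.compile(r"Total charged: \$(\d+(?:\.\d{2})?)")


def save_emails(emails: list[dict], path: Path) -> None:
    """Step 1: write every email to disk, one JSON object per line (the 'notepad')."""
    with path.open("w", encoding="utf-8") as f:
        for email in emails:
            f.write(json.dumps(email) + "\n")


def search_receipts(path: Path) -> list[dict]:
    """Step 2: grep-style pass over the saved file, keeping only emails with an amount."""
    hits = []
    with path.open(encoding="utf-8") as f:
        for line in f:
            email = json.loads(line)
            match = AMOUNT.search(email["body"])
            if match:
                hits.append({"subject": email["subject"], "amount": float(match.group(1))})
    return hits


def total_spend(hits: list[dict]) -> float:
    """Step 3: calculate from the reviewed matches, not from memory."""
    return round(sum(h["amount"] for h in hits), 2)


if __name__ == "__main__":
    notes = Path("emails.jsonl")
    save_emails(EMAILS, notes)
    receipts = search_receipts(notes)
    for r in receipts:  # each intermediate result can be checked before totaling
        print(r)
    print("Total:", total_spend(receipts))
```

With the sample data, the search step keeps three of the four emails and the total comes out to 49.49. Writing to `emails.jsonl` first means a mistake in step 2 or 3 can be caught by re-reading the file instead of re-asking the model.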

Real-world examples where this helps:

- Complex research projects (gather sources → organize them → analyze).

- Data analysis (collect data → clean it → calculate).

- Multi-step planning (brainstorm → evaluate → refine).

How regular users can apply this:

Instead of: "Analyze these 50 documents for insights"

Try: "First, create a list summarizing each document's main points with 100% fidelity. Then review that list and identify the 3-5 most vital unique points in each. Finally, for each key insight, pull specific quotes from the original documents."

Treats to Try

*Asterisk = from our partners (only the first one!). Advertise in The Neuron here.

- *Join the Solve it with Code Course by Jeremy Howard (co-creator of ULMFiT, a key precursor to today's LLMs) & Eric Ries. Learn a different way to use AI, with platform access included.

- Odyssey-2 streams interactive video in real time that you can shape with text prompts; type “make the wave bigger” and watch it respond. Try it here.

- Chirpz finds citations for claims in your docs so you can verify quotes and facts quickly.

- CalPulse lets you snap a menu and instantly see calories and macros for each item (payment required FYI).

- World Simulator AI generates playable, text‑driven worlds (characters, rules, quests) from short prompts.

- create‑llm (CLI) scaffolds a production‑ready PyTorch LLM training project (tokenizer, data, training, evals) in one command to train your own AI!

- oxdraw lets you write diagrams as code and then tweak them with drag‑and‑drop in the browser—great for architecture docs that live in Git.

- Eyeball saves links, auto‑summarizes them, and emails you a Sunday digest so you actually revisit what you saved.

Around the Horn

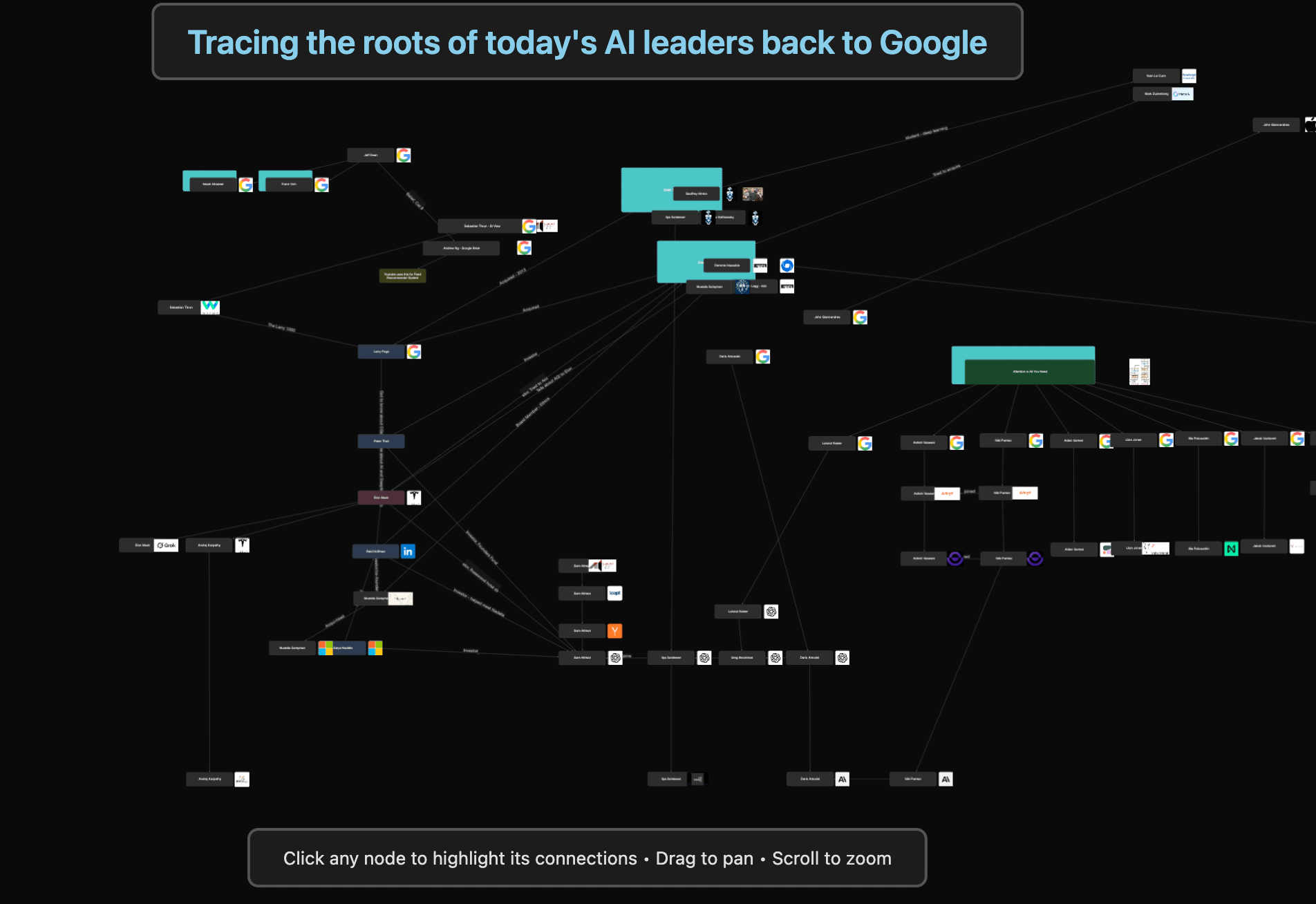

Someone put together an “AI Mafia” (Interactive Canvas) that lets you explore a clickable network map of AI leaders, and TBH, much like Rome, all roads lead back to Google.

- Anthropic expanded Claude for Financial Services with Claude for Excel (a beta add-in that analyzes and modifies spreadsheets), new connectors to real-time market data platforms like LSEG, Moody's, and Aiera, and six pre-built Agent Skills for tasks like building DCF models and initiating coverage reports.

- Anthropic reportedly holds 32% of the enterprise market versus OpenAI’s 25%, and enterprise customers account for 80% of Anthropic’s revenue.

- Fitbit launched a new health coach for premium subscribers (Android, US-users only rn) that creates custom fitness plans, analyzes sleep, provides conversational wellness coaching, and adjusts workouts based on constraints, goals, and readiness.

- Pinterest launched AI-powered board upgrades with personalized shopping tabs and AI-generated fashion collages for outfit creation.

- Thinking Machines (Thinky) introduced “on-policy distillation,” a training method that combines reinforcement learning's on-policy approach with supervised fine-tuning's dense feedback. As a result, it's 50-100x more compute-efficient than reinforcement learning while matching frontier model performance on reasoning benchmarks.

- What we’re watching atm: Primeagen on the Lemonade Stand talking about why you will never get AGI, why the government will eventually fund Stargate, and why OpenAI isn’t profitable: not because AI can never be profitable, but because it's optimizing for users rather than revenue. In other words, we’re entering the “let’s start flipping switches to get profitable” stage of the AI boom (full episode).
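For the curious, here is a toy illustration of the two ingredients named in the Thinking Machines item above: the student samples its own tokens (on-policy), and a teacher scores every one of those tokens (dense feedback). It assumes, based on our reading of Thinking Machines' write-up, that the per-token signal is a reverse-KL estimate. The tiny fixed "models" are hypothetical stand-ins for real LLMs, and the sketch computes only the grading signal, not a training update.

```python
import math
import random

VOCAB = ["A", "B", "C"]

# Hypothetical stand-ins: each "model" is a fixed next-token distribution per position.
# Real on-policy distillation would query a student LLM and a teacher LLM instead.
STUDENT = [[0.6, 0.3, 0.1], [0.2, 0.5, 0.3], [0.4, 0.4, 0.2]]
TEACHER = [[0.7, 0.2, 0.1], [0.1, 0.8, 0.1], [0.3, 0.3, 0.4]]


def sample_trajectory(policy: list[list[float]], rng: random.Random) -> list[int]:
    """On-policy: the student generates the tokens that will be graded."""
    return [rng.choices(range(len(VOCAB)), weights=probs)[0] for probs in policy]


def per_token_advantage(tokens: list[int]) -> list[float]:
    """Dense feedback: the teacher grades every sampled token, not just the final answer.

    log p_teacher(x_t) - log p_student(x_t) is a single-sample estimate of the
    negative reverse KL at step t; higher means the teacher likes the token more.
    """
    return [
        math.log(TEACHER[t][tok]) - math.log(STUDENT[t][tok])
        for t, tok in enumerate(tokens)
    ]


def reverse_kl(student_probs: list[float], teacher_probs: list[float]) -> float:
    """Exact KL(student || teacher) for a single position."""
    return sum(p * math.log(p / q) for p, q in zip(student_probs, teacher_probs))


if __name__ == "__main__":
    rng = random.Random(0)
    tokens = sample_trajectory(STUDENT, rng)
    print("sampled:", [VOCAB[i] for i in tokens])
    for t, adv in enumerate(per_token_advantage(tokens)):
        print(f"step {t}: advantage {adv:+.3f}")
    print("mean reverse KL:", sum(map(reverse_kl, STUDENT, TEACHER)) / len(STUDENT))
```

In a real training run, each step's advantage would weight the gradient of the student's log-probability for that token, nudging the student toward tokens the teacher rates as more likely than the student does. Standard outcome-based RL, by contrast, hands out a single reward at the end of the sequence that has to be spread across every token.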

FROM OUR PARTNERS

Stop Copy-Pasting Schemas Into Your AI Assistant

Your New AI Pair Programmer: A Guide to Vibe Coding with MongoDB with Pete Johnson

MongoDB's MCP Server is now GA, connecting your database directly to Cursor, GitHub Copilot, and other AI coding tools. Get context-aware code generation with natural language queries. No more hallucinations, no more context switching. Enterprise-ready with granular security controls.

A Cat’s Commentary
