Welcome, humans.

Remember when every laptop suddenly became a “gaming laptop” just because it had RGB lights? Well, the tech industry's at it again, this time slapping “AI PC” stickers on anything with a circuit board and calling it revolutionary.

That's why we sat down with Logan Lawler from Dell Technologies (and host of the Reshaping Workflows podcast) to cut through the marketing BS. Turns out, there's actual science behind what makes a computer good for AI work. We're talking NPUs (neural processing units—think of them as tiny AI brains), beefed-up memory requirements, and the eternal GPU vs CPU debate. TBH, Logan has some hot takes!

AI PC Buyer’s Guide: Specs That Actually Matter (ft. Dell’s Logan Lawler)

Logan breaks down exactly what specs matter if you're running AI models locally, whether you're fine-tuning your own chatbot or just tired of ChatGPT's servers being down again. If you wanna know what laptop you really need to run AI on your computer and not OpenAI’s cloud, and more importantly, what you can do with local AI, this episode is for you!

Watch and/or listen now on: YouTube | Spotify | Apple Podcasts

Here’s what happened in AI today:

- We break down how browser agents are easily hijacked.

- OpenAI is building a text‑to‑music generator to rival Suno and Udio.

- MiniMax M2 and its agent are now 5th smartest AI AND open source.

- China revealed a “mini‑fridge” AI server using 90% less power.

So browser agents are really easy to hijack… and Microsoft’s OpenAI exposure is still murky

Look, we all want AI to be this magic bullet solution to all our problems (as yesterday’s AI bubble story shows, there are a lot of economic concerns riding on that use case!). But are we all paying an “AI trust tax” by putting all our faith in these very unreliable machines?

Here’s what we mean: First of all, security researchers keep showing how “AI browser agents” (like OpenAI’s new Atlas browser) can be hijacked via prompt‑injection and data‑exfiltration. Basically, that means a website can quietly trick an AI assistant into doing things on your behalf—like copying cookies, reading your emails, or clicking malicious links—without you knowing.

Check out this report published by Brave, which found these key issues:

- Screenshot prompt injection: Hidden text inside images can be read by the browser’s AI as commands, not content. That lets bad actors slip in invisible instructions.

- Navigation-based injection: Even asking the AI to open a webpage can make it feed that site’s text back to the model as if it came from you, changing what it does next.

- Systemic issue across AI browsers: The line between what the user says and what the web says is often blurred, which opens the door for manipulation.

What this means: normal web safety rules don’t apply here. If the AI agent can act as you while you’re logged in, it could move money, send emails, or touch sensitive company data—all from a single clever prompt.

On the screenshot front, Microsoft’s new Gaming Copilot raised more eyebrows by capturing gameplay screenshots for “context.” Microsoft says it’s to better understand games, not to train new models, but users don’t love how hard it is to turn off.

At the same time, investors are still guessing at the true economics of Microsoft’s OpenAI partnership. A new WSJ column just called for clearer financial disclosures, and commenters on Hacker News argued that key details are buried in vague line items or broad buckets like “other, net.” The result: no one knows how much risk, exposure, or profit is really flowing between the two if this whole AI thing goes sideways.

All three of these point to the same friction: a rising “AI trust tax.” Together, they underscore how agent UX (user experience) is racing ahead of the systems meant to secure it or explain it.

- On the product side, agents need stronger permission systems and clearer boundaries—see domain tests like the new MLEB for legal embeddings—before enterprises hand them keys to browsers or internal data.

- On the market side, if companies keep spending heavily on AI while keeping the financial details fuzzy, it’s impossible to price the real risk. Are profits real? Are safety costs included? Who pays when something breaks?

We’ve yet to see a legitimate AI safety case escalate to the highest levels of legal scrutiny (like, say, the Supreme Court) and get aired out in the disinfecting light of the law. Until that happens, the market will have to resolve these issues.

For example, if agents are easily hijacked, enterprises will demand airtight permissioning, provenance, and auditable evals… which’ll add real cost and could dent margins. And if disclosures of risk lag, investors can’t price in those costs (security teams, red‑teaming, indemnities) or liabilities (data leakage, account takeovers), widening the gap between narrative and GAAP.

In the short term, there are some simple fixes for browser agents at least: keep AI browsing separate from normal browsing, make sure users explicitly confirm before an agent visits sites or reads emails, and design for safety by default.

For developers, that means sandboxed browsing (isolating the agent’s actions), allow lists (only letting it access approved sites), and clear activity logs. For platforms, it means giving users obvious off-switches and showing users what data’s been collected, when it’s active, and how to shut it down entirely.
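To make the allow list, explicit confirmation, and activity log ideas concrete, here’s a minimal Python sketch. Everything in it is our own illustration, not any browser’s real API: the `AgentGate` class, the `ALLOWED_HOSTS` set, and the example hostnames are all hypothetical, and a real agent would need actual sandboxing on top of this.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Callable
from urllib.parse import urlparse

# Hypothetical allow list: the only hosts this agent may visit.
ALLOWED_HOSTS = {"docs.example.com", "wiki.example.com"}


@dataclass
class AgentGate:
    """Checks every navigation an agent requests before it happens."""

    allowed_hosts: set[str]
    confirm: Callable[[str], bool]  # asks the user; True means "go ahead"
    log: list[dict] = field(default_factory=list)

    def request_visit(self, url: str) -> bool:
        # Compare the exact hostname, so "docs.example.com.evil.net" fails.
        host = urlparse(url).hostname or ""
        if host not in self.allowed_hosts:
            decision = "blocked: host not on allow list"
        elif not self.confirm(url):
            decision = "blocked: user declined"
        else:
            decision = "allowed"
        # Record every request, allowed or not, so the user can audit it.
        self.log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "url": url,
            "decision": decision,
        })
        return decision == "allowed"


# Usage: swap the lambda for a real confirmation prompt in your own UI.
gate = AgentGate(ALLOWED_HOSTS, confirm=lambda url: True)
gate.request_visit("https://docs.example.com/setup")
gate.request_visit("https://evil.example.net/steal")
for entry in gate.log:
    print(entry["decision"], entry["url"])
```

The design choice worth noting: the gate logs blocked requests too, since a string of blocked visits is often the first sign that a page is trying to steer the agent.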

Finally, on the business side, companies like Microsoft need to show not just what they’re earning from AI, but how it moves through the system: from renting GPUs to selling model access to bundling Copilot seats. That’s how we’ll know if AI is generating real growth or is just the result of creative accounting. Or, as we put it: how we’ll know if we’re building true “artificial general intelligence”… or just “artificial general inflation.”

FROM OUR PARTNERS

You’re not lazy. Your tools are slow.

You spend your life typing — emails, notes, plans, ideas — translating thoughts into words with a machine designed in the 1800s. It’s no wonder your brain feels like it’s moving faster than your hands.

Wispr Flow fixes that. It’s a voice-powered writing tool that turns your thoughts into clean, structured text anywhere you work — Slack, Notion, Gmail, whatever. It’s as fast as talking, but as polished as writing.

You’ll write 4x faster, think more clearly, and finally catch up to yourself. Flow adapts to your tone, edits as you speak (“5pm—actually, make it 6”), and keeps your focus on what matters instead of what key to hit next.

Typing is a habit. Flow is an upgrade.

Try Wispr Flow — speak your thoughts into reality.

Prompt Tip of the Day

Adriana Porter Felt shared a great tip on AI prompting that was actually repurposed parenting advice on, no joke, how to “prompt” toddlers. It goes like this:

The rule: LLMs, like toddlers, don’t process negatives well.

Telling them what NOT to do just spotlights the unwanted behavior. As Lia said, toddlers focus on the “do X” part of “Don’t do X.” LLMs are the same.

Instead, tell them exactly WHAT to do.

TL;DR:

- ❌ “Don’t be verbose.” → ✅ “Answer in 3–5 concise bullets.”

- ❌ “Don’t invent sources.” → ✅ “Cite only verifiable sources or say insufficient evidence.”

- ❌ “Don’t mention internal tools.” → ✅ “Refer to tools generically.”

Bottom line: Models follow the picture you paint. So paint the behavior you want, not the one you’re avoiding. Be clear, specific, and positive… your model (and your sanity) will thank you.
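If you keep your prompt rules in a list, you can catch the negative ones before they ship. Here’s a rough Python sketch of that idea. The `flag_negative_rules` helper and its list of negative openers are our own assumptions, not part of the original tip, and a simple pattern like this will miss negatives buried mid-sentence.

```python
import re

# Assumed list of negative openers; extend it for your own prompts.
NEGATIVE_OPENERS = re.compile(
    r"^\s*(don['’]t|do not|never|avoid|no)\b",
    re.IGNORECASE,
)


def flag_negative_rules(rules: list[str]) -> list[str]:
    """Return the rules that open by telling the model what NOT to do."""
    return [rule for rule in rules if NEGATIVE_OPENERS.match(rule)]


rules = [
    "Don’t be verbose.",
    "Answer in 3–5 concise bullets.",
    "Never invent sources.",
]
for rule in flag_negative_rules(rules):
    print("Rewrite as a positive instruction:", rule)
```

Each flagged rule is a prompt to yourself: replace it with the behavior you actually want, like the ✅ versions above.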

Treats to Try

- MiniMax M2 is now open source, meaning any API provider can run it. M2 is now the 5th smartest model according to Artificial Analysis, and can speed up your coding helpers / research agents for “8% of Claude Sonnet’s pricing at ~2× the speed.” Check it out on HuggingFace, GitHub, their API docs, or try it on the cloud here.

- For example, here’s a prompt that turns any news into a viral social post; once you click in, you can watch the agent work.

- Alai turns your bullet points into editable, on‑brand slide decks you can export in minutes.

- Starbase helps you find and plug MCP servers (GitHub, Notion, Vercel, PayPal, etc.) into your agents.

- Mico from Microsoft lets you test experimental talking‑avatar assistants for voice chats and brainstorming.

- Lindy lets you spin up role‑specific work agents (support, outbound, meeting notes) from a prompt and manage them in one workspace.

- Velona monitors your vehicle fleet to predict failures, flag safety risks, and surface hidden costs in real time.

- CubeOne builds a narrated, stage‑ready deck so you can present without hand‑crafting slides; it even writes your talk’s script so you don’t have to improv in front of your entire exec team and say something real dumb (been there!).

- There is now a Books By People (“Organic Literature”) site that certifies human‑written books so publishers can display a verification stamp after a workflow review. Sign of the times y’all!

Around the Horn

- OpenAI is reportedly building a music generator to rival Suno, moving deeper into text/audio‑to‑music creation.

- AI data centers are crowding out U.S. factories, soaking up capital, labor, and megawatts.

- SambaNova is exploring a sale after stalled fundraising, highlighting tougher late‑stage chip financing.

- China touted a “mini‑fridge” sized AI brain that plugs into a wall and claims ~90% lower power use than typical systems.

- Qualcomm entered AI servers with its new AI200/AI250 accelerators to take on NVIDIA/AMD, aiming at cheaper, efficient inference starting in 2026.

- Mercor lined up ~$350M at a ~$10B valuation to supply expert human feedback for model training at top labs.

- Saudi Arabia is investing to become an “AI exporter,” pouring tons of money into data centers and partnerships.

- Australia’s government rejected a blanket text and data mining exemption for AI training and signaled licensing instead.

- The US Immigration and Customs Enforcement agency will now use AI to monitor social media use via a five-year contract with Carahsoft Technology.

- Sparkline Capital published a report on “surviving the AI Capex Boom” that’s worth a read; it encapsulates a lot of what we wrote about in yesterday’s bubble piece, plus lots of additional strategic insights!

FROM OUR PARTNERS

Powered by the next-generation CRM

Connect your email, and you’ll instantly get a CRM with enriched customer insights and a platform that grows with your business.

With AI at the core, Attio lets you:

- Prospect and route leads with research agents

- Get real-time insights during customer calls

- Build powerful automations for your complex workflows

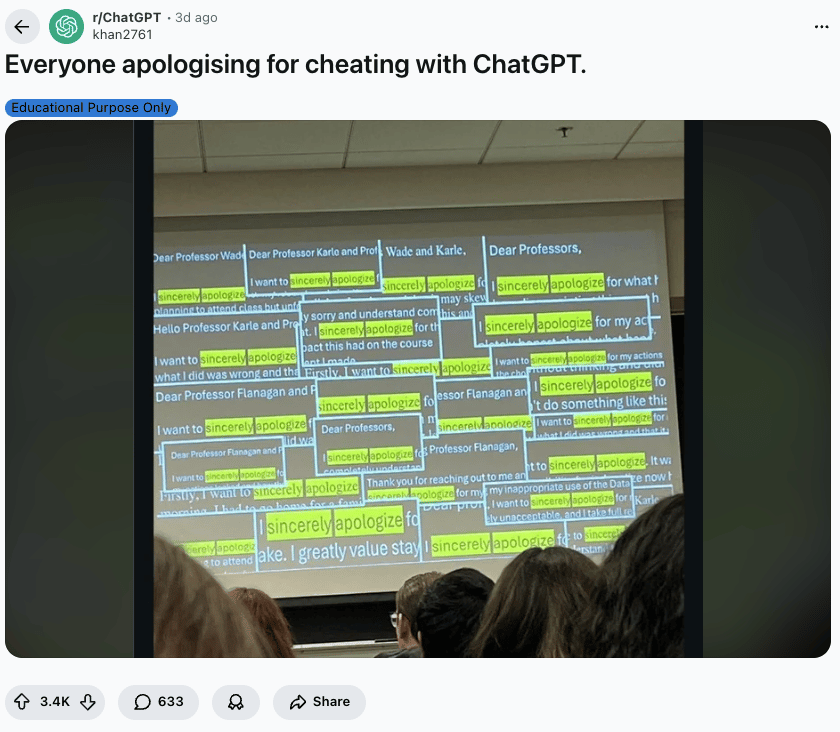

Monday Meme

A Cat’s Commentary
