Welcome, humans.

Lately, Sam Altman’s been tweeting some cryptic stuff about how social media seems like it’s full of bots. We always sorta assume there are bots everywhere, but ever since he said it, we can’t unsee it.

Now, it could be that more people are using AI to write their posts, so “bot-speak” is rampant (Sam posits this as one theory). But it’s making sourcing signal from Reddit and other places kinda… weird??

Speaking of weird… behold what became the top post on r/CursedAI yesterday (based on that subreddit name, you can imagine where this goes…)

One thing that feels DEEPLY human-coded: people are finally turning on the botspeak phrase “You’re absolutely right!” While it’s inspiring mad hate online, Pieter Levels turned it into a mad fire hat. Add to cart, am I (“absolutely”) right??

Here’s what happened in AI today:

- Mira Murati's startup fixed AI's reproducibility bug.

- OpenAI signed a $300B Oracle cloud deal.

- Publishers launched RSL protocol to control AI content use.

- Meta licensed Black Forest Labs' AI image tech for $140M.

P.S. Don’t forget we go live today at 10AM PST to test out the top text-to-speech models head to head!

Click here to get notified (if early), tune in (if on time), or watch it again (if late!)

Ex-OpenAI CTO Mira Murati's $12B Startup Just Fixed a Major AI Bug Everyone Thought Was Unfixable

Don’t get intimidated y’all…we’ll explain the fancy words and why they matter to you! Stay with us…

Remember Mira Murati, OpenAI’s previous CTO who left back in September 2024? Well, her new startup Thinking Machines Lab (or just Thinky, for those in the know) just dropped their first research paper, and it's about fixing something that's been bugging AI engineers since ChatGPT launched.

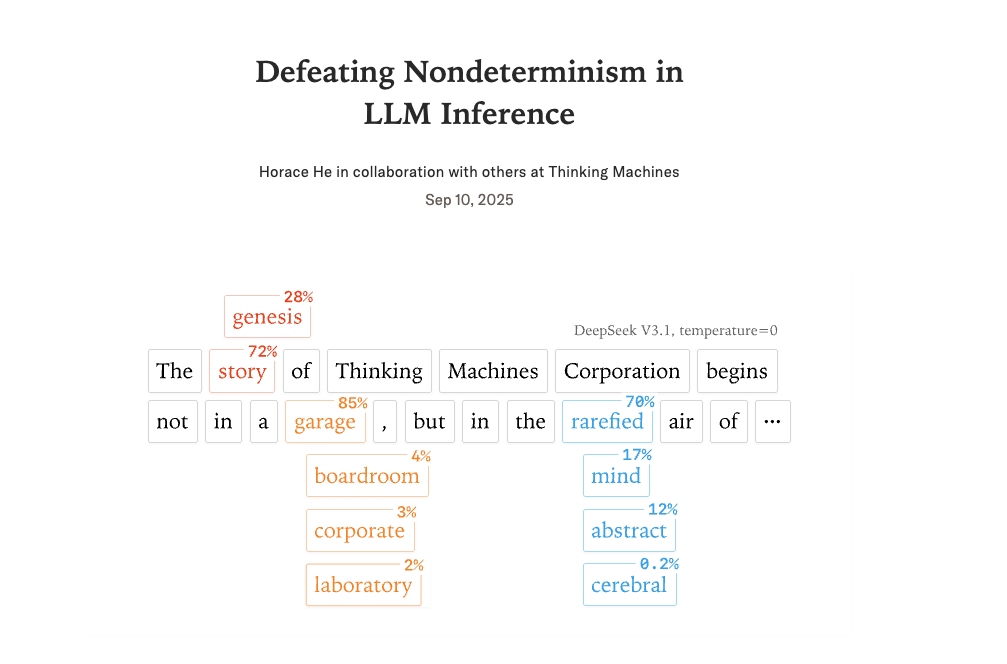

The paper, titled Defeating Nondeterminism in LLM Inference, tackles the problem of “reproducibility” in language models (like ChatGPT).

A few definitions before we start:

- Nondeterminism = Getting different answers when asking ChatGPT the same question twice with identical settings.

- LLM = Large Language Model (like ChatGPT, Claude, or Gemini).

- Inference = When you ask a language model a question and it generates a response.

Here's the problem: Even when you set AI models to their most predictable setting (temperature = 0), you still get different answers to the same question. Engineers have been pulling their hair out thinking it was just “one of those computer things” that couldn't be fixed. Turns out, they were wrong.
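For the curious, here's a tiny sketch of why temperature = 0 is supposed to be the "predictable" setting. It's a toy, not how any particular AI provider implements it: `next_token_probs` and the example scores are made up for illustration. The idea is that the model scores every candidate word, and at temperature 0 it just picks the top score.

```python
import math

def next_token_probs(logits, temperature):
    """Turn raw scores into probabilities (hypothetical helper for illustration)."""
    if temperature == 0:
        # Temperature 0 means "always pick the single highest score".
        best = max(range(len(logits)), key=lambda i: logits[i])
        return [1.0 if i == best else 0.0 for i in range(len(logits))]
    # Otherwise: softmax over scores divided by the temperature.
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

logits = [2.0, 1.0, 0.5]  # made-up scores for three candidate words
print(next_token_probs(logits, 1.0))  # some chance for every word
print(next_token_probs(logits, 0))    # [1.0, 0.0, 0.0]
```

With no randomness left in the picking step, the only way to get a different answer is for the scores themselves to come out slightly different from one run to the next.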

The discovery came from Horace He, a PyTorch (code for AI) wizard who recently jumped from Meta to join Murati's team. He's the guy behind torch.compile (that thing that makes AI models run 2-4x faster with one line of code).

Horace and his team discovered the real culprit isn't the usual suspect on its own: the floating-point math weirdness that engineers typically blame. Instead, it's a lack of something called “batch invariance.” Think of it like this:

- Imagine ordering the same coffee at Starbucks, but it tastes different depending on how many other customers are in line. That's essentially what's happening with AI models.

- When an AI server is busy handling lots of requests, it processes them in batches.

- Your request gets bundled with others (because that’s more efficient), and somehow this changes your specific answer… even though it shouldn't.

- Follow the logic, and the busier the server, the more your results vary.

This also happens in real life; ever been to your local Starbucks during coffee rush hour? Unless you have a god-tier barista, your order might not taste the same!
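If you want to see the coffee-line effect in code, here's a minimal sketch. It is not Thinky's actual kernel code: `chunked_sum` is a hypothetical stand-in for how a server might split up its arithmetic differently depending on how many requests it is handling, and the numbers are exaggerated on purpose so the difference is easy to see. The underlying quirk is real, though: computers round after every addition, so adding the same numbers in a different grouping can give a different total.

```python
def chunked_sum(values, chunk_size):
    """Add numbers chunk by chunk, then add up the chunk totals.

    Hypothetical stand-in for a server splitting work differently
    depending on how busy it is.
    """
    partials = []
    for start in range(0, len(values), chunk_size):
        partial = 0.0
        for v in values[start:start + chunk_size]:
            partial += v
        partials.append(partial)
    total = 0.0
    for p in partials:
        total += p
    return total

# Same three numbers, different grouping, different answer.
print((0.1 + 0.2) + 0.3 == 0.1 + (0.2 + 0.3))  # False

# Same four numbers, added with two different chunk sizes.
values = [1e16, 1.0, -1e16, 1.0]
print(chunked_sum(values, 4))  # 1.0
print(chunked_sum(values, 2))  # 0.0
```

In a real model the gaps are tiny rather than this dramatic, but a tiny gap is enough to tip which word scores highest. The batch-invariant idea, roughly, is to keep the grouping the same no matter how long the line is (here, that would mean always calling `chunked_sum` with the same `chunk_size`).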

Why this matters: Because of this problem…

- AI companies doing research can't reproduce their own experiments reliably.

- Businesses using AI for critical decisions get inconsistent results.

- Training new AI models becomes way more expensive when you can't trust your outputs.

Thinky released their solution as open-source code (true to Murati's promise of “science is better when shared”). They call their approach “batch-invariant kernels”, which basically teaches AI servers to give you the same coffee regardless of the line.

Keep in mind: This is just the appetizer from a team that recently raised $2 billion without even having a product. (They are working on one, internally called “RL for businesses,” which customizes models for a company’s specific business metrics and sounds very cool!) If fixing decade-old “unfixable” problems is their opening move, the AI giants should probably be nervous… (though the code is open, so yum yum yum, as the hungry, hungry AI labs say…).

FROM OUR PARTNERS

Less searching, more finding. Less hunting, more doing with Slack's AI-powered enterprise search

Company knowledge is scattered across countless tools and conversations. Slack's new ebook reveals how AI-powered search transforms how teams discover information—turning endless hunting into instant answers.

Learn practical strategies to unlock your organization's collective intelligence and boost productivity across every department.

Prompt Tip of the Day.

Ask, ask, and ask again. We know everyone’s giving you prompt formatting advice, but sometimes, the best way to get what you want out of working with AI is to spin up multiple chats at once, maybe even across multiple different AI models (a tool to help with this is OpenRouter, but you’ll have to pay), and ask the same question multiple times.

If you don’t get what you want the first time, ask again, but change the phrasing slightly. Or change the time of day lol. As today’s story shows, even small changes make a difference… including how many other people are asking questions at the same time you are! So get in the practice of asking A LOT of times if you don’t get it in one go.

Treats to Try.

*Asterisk = from our partners. Advertise in The Neuron here.

- *VEED creates professional videos from text prompts, automatically adds subtitles, and generates avatars for your marketing content and YouTube videos.

- ElevenLabs now lets you remix any voice to change its gender, age, or accent so you can create different characters for storytelling or business agents.

- Gemini CLI now deploys apps with one /deploy command and scans code with /security:analyze, while Firebase now includes Gemini 2.5 AI integration.

- Adobe Agent Orchestrator deploys AI agents that automate your marketing tasks like creating customer audiences, building email campaigns, analyzing website performance, and optimizing customer journeys across web, mobile, and email.

- CarEdge haggles with car dealers for you, saving customers an average of $569 per transaction and up to $4,000 on specific vehicles ($19/month).

- AdOmni’s Jeen AI plans your digital ad campaigns across billboards, connected TV, and mobile video in 5 minutes: you just tell it your goals and it selects screens, calculates budgets, and shows what will work.

- Is it Nerfed? is out here asking the really tough questions in AI: how “nerfed” do today’s models feel compared to yesterday?

Around the Horn.

- OpenAI signed a $300B, 5-year cloud deal with Oracle for 4.5 GW of AI computing capacity, boosting Oracle's market value by ~$250B.

- Major publishers launched the RSL protocol to control and monetize AI's use of their content, with Reddit, Yahoo, and Medium joining the initiative.

- Meta paid $140M to license Black Forest Labs' AI image technology (and has a similar deal with MidJourney), shifting strategy from building in-house AI to licensing external tech.

- Swedish society Stim launched the world's first collective AI license for music, with Songfox becoming the first company to create legal AI-generated covers.

- Chinese researchers launched SpikingBrain 1.0 (paper), a brain-inspired AI model 25-100 times faster than transformer models while using only 2% of typical training data.

- Heads up: if you’re in the EU and you fine-tune anything bigger than a Llama-3 13B parameter model, then re-release it on HuggingFace, you’re probably considered a “provider” and need to follow EU AI Act regulations.

FROM OUR PARTNERS

Live AMA, engineer-led demos, and more – all at Glean:LIVE

Don’t miss Glean’s product launch on Sept 25th. Get a first look at Glean’s new Assistant, see how to vibe code an agent, and catch engineer-led demos. This launch is all about empowering you with a more personalized experience and upleveling the skills only you can bring to your work.

Thursday Trivia

One is real, and one is AI. Which is which? (vote below!)

A

B

Which is AI, and which is real?

The answer is below, but place your vote to see how your guess compares to everyone else’s (no cheating now!)

A Cat’s Commentary.

Trivia Answer: A is AI (Seedream 4…absolutely breathtaking), and B is real… but then again, who knows??
