Welcome, humans.

Ever heard an AI company just... tell the truth? Buckle up.

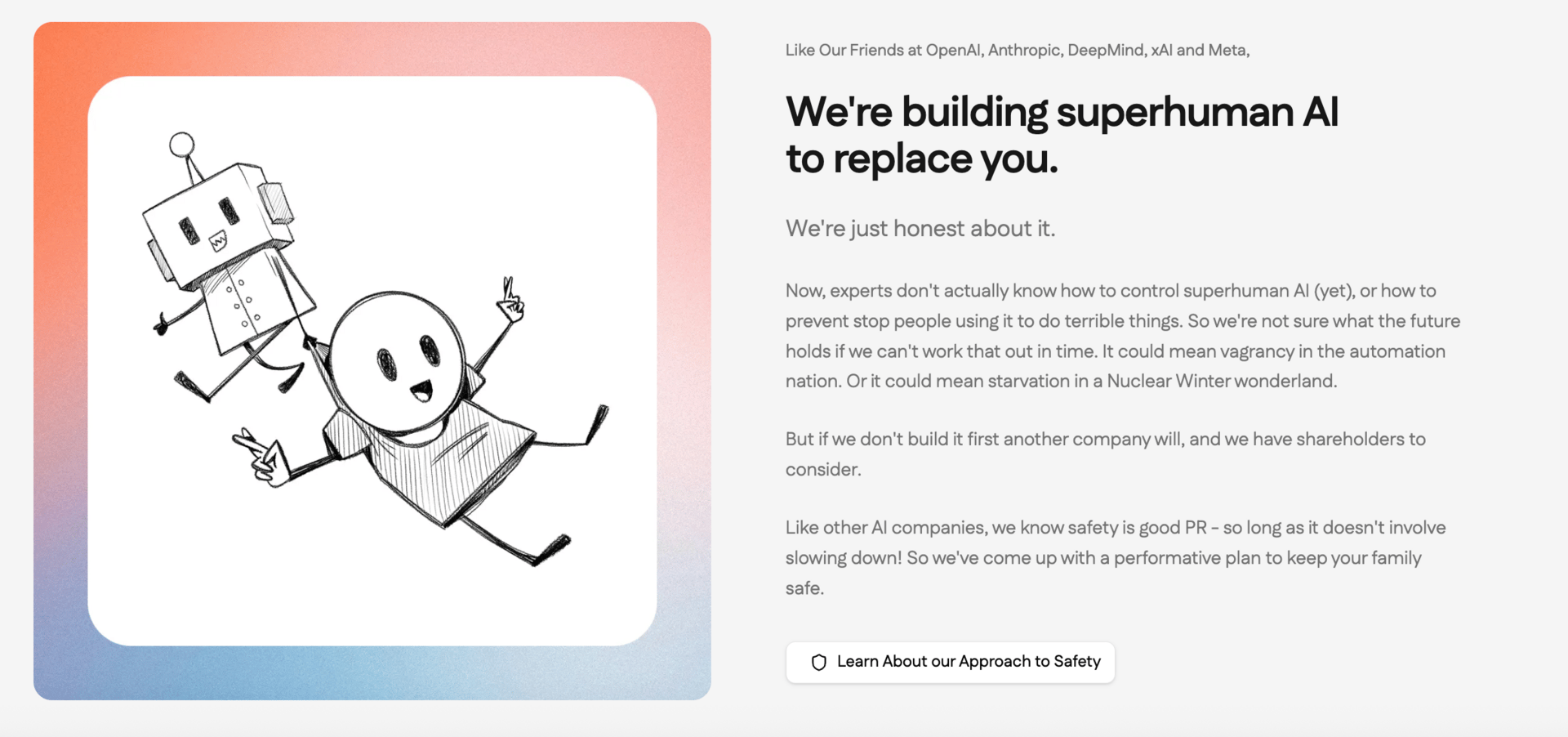

Replacement.AI launched this week as “the only honest AI company,” and honestly, it's the funniest thing we've seen in months. While most AI firms are busy talking about “empowering workers” and “augmenting human capabilities,” Replacement just comes out and says it: they're building AI to replace you. Their tagline? “Humans no longer necessary.”

The entire site is a masterclass in satire. They've got products like HUMBERT (an AI for kids that “replaces humans at every developmental milestone”), testimonials from parents thrilled their children “don't have any friends anymore,” and a mission statement that reads “human flourishing is bad business.”

Our favorite part? The fake social media posts celebrating how their AI will put millions out of work and consume more power than Norway.

It's dark comedy gold… and uncomfortably close to what some folks actually worry about. Sometimes the best way to start a conversation about AI's impact is to just... say the quiet part out loud.

Here’s what happened in AI today:

- Anthropic launched Claude Code on the web.

- NVIDIA's China market share dropped from 95% to zero.

- OpenAI recruited 100+ ex-bankers to train AI on deal modeling.

- Adobe announced AI Foundry to build custom generative AI models.

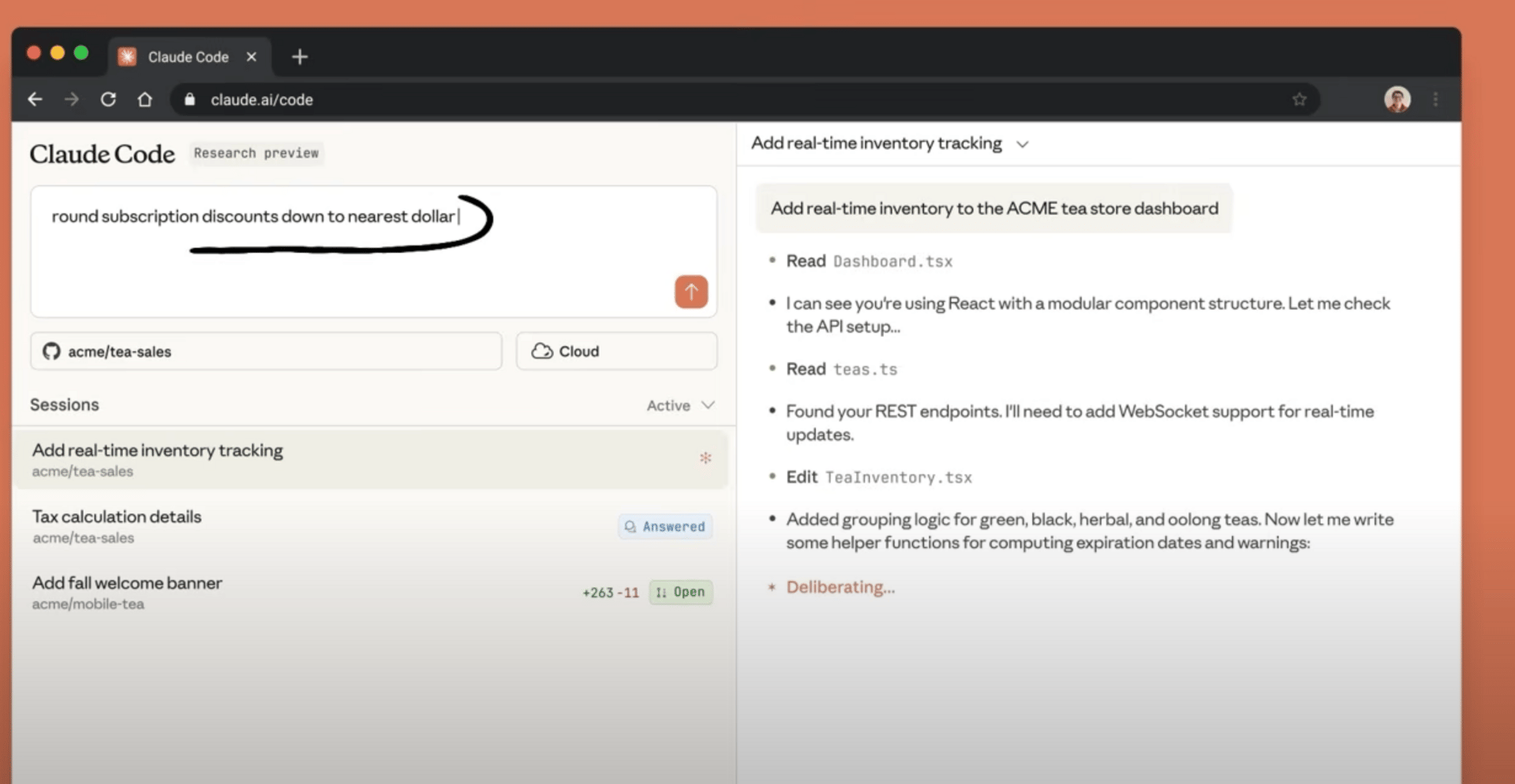

Claude Code Just Fixed the Most Annoying Thing About AI Coding Assistants.

Anthropic just launched Claude Code on the web, letting developers and regular agent users alike run coding tasks directly from their browser. But the real breakthrough? A new sandboxing system that makes Claude Code 84% less annoying… and way more secure.

Here's the catch-22 with AI coding tools. You want your AI to actually help, which means giving it access to your files and terminal. But that access creates security risks, especially from something called "prompt injection"—basically, hackers tricking your AI into doing bad things.

Most tools solve this with permission prompts. Every. Single. Time. Claude wants to run a command? Click approve. Edit a file? Click approve. Run a test? You guessed it! Smash that approve button! The alternative? “Dangerously skip permissions.” A.k.a playing with fire and hoping not to get burned.

The result of this binary choice is what security experts call “approval fatigue.” You click so many times you stop paying attention. Ironically, that makes you less secure.

THE SOLUTION: Anthropic's new sandboxing approach flips the script. Instead of asking permission for everything, you define boundaries upfront. Think of it like a fenced playground—Claude can run around freely inside, but hits a wall if it tries to leave.

Here's what makes it work:

- Filesystem isolation: Claude can read/write in your current project directory but can't touch sensitive system files like your SSH keys or .bashrc.

- Network isolation: Claude can only connect to approved domains. Even if someone tricks it into trying to "phone home" to a hacker's server, the connection dies at the OS level.

- Real-time alerts: If Claude tries to access something outside the sandbox, you get notified instantly and can allow it once or update your settings.
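To make the fenced-playground idea concrete, here's a minimal Python sketch of the two boundary checks. This is not Anthropic's code (their sandbox enforces the rules at the OS level, and this is just application-level logic), and the project path, domain list, and function names are all made up for illustration.

```python
from pathlib import Path
from urllib.parse import urlparse

# Hypothetical policy values, chosen only for this illustration.
PROJECT_DIR = Path("/home/dev/my-project")
ALLOWED_DOMAINS = {"github.com", "pypi.org"}


def path_allowed(requested, project_dir=PROJECT_DIR):
    """Return True only if the requested path lands inside project_dir."""
    # Relative paths are joined onto the project directory; resolve() then
    # collapses ".." segments and follows symlinks, so "../.bashrc" cannot
    # sneak out of the fence.
    root = project_dir.resolve()
    target = (project_dir / requested).resolve()
    return target == root or root in target.parents


def domain_allowed(url, allowed=ALLOWED_DOMAINS):
    """Return True only for an approved domain or one of its subdomains."""
    host = urlparse(url).hostname or ""
    return host in allowed or any(host.endswith("." + d) for d in allowed)


print(path_allowed("src/main.py"))                    # True
print(path_allowed("../.bashrc"))                     # False
print(path_allowed("/home/dev/.ssh/id_rsa"))          # False
print(domain_allowed("https://api.github.com/user"))  # True
print(domain_allowed("https://evil.example/steal"))   # False
```

The point of the design: the rules get written once, upfront, and anything inside them runs without a prompt. Only requests that fail a check would need your attention.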

In Anthropic's internal testing, this cut permission prompts by 84%. That's not just convenient, but actually safer, because you're not mindlessly clicking through a hundred approvals or, y’know, dangerously skipping them all.

WHAT'S NEW TODAY: Claude Code on the web takes this further by running your coding tasks in isolated cloud environments. You can kick off multiple bug fixes in parallel, work on repos that aren't on your laptop, or even code from your iPhone.

Each session runs in its own secure sandbox. Your GitHub (code repository tool) credentials never enter the environment; instead, Claude uses a custom proxy that verifies every git operation. Even if the code running in the sandbox gets compromised, your actual credentials stay safe.

WHY IT MATTERS: This is bigger than just making Claude Code better. Anthropic open-sourced the sandboxing code, which means every AI coding tool could adopt this approach. Fewer annoying popups, better security, and AI that can actually work autonomously without making you nervous.

Also, as we covered earlier in the week, Anthropic launched Claude Skills, which Simon Willison said basically turns Claude into a perfect general purpose agent. Previously, we covered McKay Wrigley’s awesome tutorial on how to use Claude Code as a general purpose agent, and these functions are making this use-case even more viable.

Want to try it? Pro and Max users can get started here. Or if you're building your own AI tools, check out the technical docs to see how they built it.

FROM OUR PARTNERS

Elastic x IBM watsonx: Rethinking conversational search

Want to learn how IBM watsonx can think with your company’s mind? Read how Elastic and IBM have partnered to deliver retrieval augmented generation capabilities that are seamlessly integrated into IBM watsonx Assistant’s new Conversational Search feature — and grounded in your business’s proprietary data.

Prompt Tip of the Day

Large language models (the AI tech behind ChatGPT) love giving you slightly different labels for the same input. Verdi, of Verdi’s Worldview, found the fix: use vector embeddings to cluster similar labels together.

His real-world test on 10K tweets showed vectors cut the number of distinct labels by roughly 80% (6,520 → 1,381) while making the pipeline 10x cheaper at scale.

The Method:

- Generate a label using your LLM (e.g., “joke_about_rust_programmers”).

- Embed that label into vector space using an embedding model (he uses voyage-3.5-lite).

- Search for similar labels you've already created. If you find a match above a certain similarity threshold (Verdi uses 0.80), use that existing label instead.

Think of it like autocomplete for your LLM's labels. The first time it sees “joke_about_rust_programmers,” that becomes the canonical label. Next time it generates “humor_concerning_rust_programmers,” the vector search catches that they're ~95% similar and maps it back to the first one.
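Here's a rough Python sketch of that loop, under a few loud assumptions: the `LabelCache` class and the tiny hand-written vectors are invented for illustration, a plain linear scan stands in for a real vector search, and in practice `embed` would call an actual embedding model. Verdi's own package is in Go, so treat this as the idea, not his implementation.

```python
import math

SIMILARITY_THRESHOLD = 0.80  # the threshold the article attributes to Verdi


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


class LabelCache:
    """Hypothetical helper: maps near-duplicate labels to the first one seen."""

    def __init__(self, embed, threshold=SIMILARITY_THRESHOLD):
        self.embed = embed        # function: label -> vector
        self.threshold = threshold
        self.entries = []         # list of (canonical_label, vector)

    def canonicalize(self, label):
        vec = self.embed(label)
        best_label, best_score = None, -1.0
        # Linear scan; a real system would use a vector index instead.
        for known, known_vec in self.entries:
            score = cosine(vec, known_vec)
            if score > best_score:
                best_label, best_score = known, score
        if best_label is not None and best_score >= self.threshold:
            return best_label     # cache hit: reuse the existing label
        self.entries.append((label, vec))
        return label              # cache miss: this becomes canonical


# Made-up 3-d vectors standing in for a real embedding model's output.
FAKE_VECTORS = {
    "joke_about_rust_programmers": [0.9, 0.1, 0.0],
    "humor_concerning_rust_programmers": [0.8, 0.2, 0.1],
    "complaint_about_airline_delays": [0.0, 0.1, 0.9],
}

cache = LabelCache(embed=FAKE_VECTORS.__getitem__)
print(cache.canonicalize("joke_about_rust_programmers"))
# joke_about_rust_programmers
print(cache.canonicalize("humor_concerning_rust_programmers"))
# joke_about_rust_programmers
print(cache.canonicalize("complaint_about_airline_delays"))
# complaint_about_airline_delays
```

Because every hit reuses a stored label, the set of distinct labels stops growing as fast once the common categories have been seen.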

The method starts 15% more expensive but flips to cheaper by tweet #500, with a 94% cache hit rate by tweet #10,000. Game-changer for large-scale classification projects.

Check out Verdi's full breakdown and Golang package here.

Treats to Try

*Asterisk = from our partners (only the first one!). Advertise in The Neuron here.

- *Shure's IntelliMix Room Kits turn IT providers into AV heroes—seamless Microsoft Teams audio that just works, opening new revenue streams and happier clients.

- DeepSeek released DeepSeek-OCR, a model that compresses visual data up to 20 times while maintaining 97% accuracy in optical character recognition (btw, OCR = converting images of text into actual text).

- Nexos gives your entire company secure access to ChatGPT, Claude, and other AI models through one platform, so IT can set guardrails while teams use the AI they need (raised €30M).

- Cercli builds an AI-native HR platform for MENA businesses and has grown revenue 10x, processing $100M+ in annual payroll (raised $12M).

- Director automates repetitive browser tasks like forwarding emails, downloading receipts, or creating purchase orders by describing what you want.

- Simplora guides you through meetings in real-time by translating complex discussions into plain language, suggesting follow-up questions, and automatically taking personalized notes tailored to your role.

- Noah AI automates pharma and medical research workflows by searching databases like PubMed, tracking drug development progress, and delivering instant answers from trusted scientific sources.

Around the Horn

- OpenAI enlisted 100+ ex–investment bankers for “Project Mercury” to train AI on deal modeling, paying about $150/hour to automate the grunt work of junior analysts.

- NVIDIA CEO Jensen Huang said the company's China market share dropped from 95% to zero after US export restrictions banned advanced chip sales.

- Adobe announced Adobe AI Foundry, a service that builds custom generative AI models for enterprises trained on their branding and intellectual property, built off Adobe's Firefly family of AI models that were trained entirely on licensed data.

- OpenAI cracked down on Sora 2 deepfakes after Bryan Cranston and SAG-AFTRA complained about unauthorized AI videos using his likeness.

- Companies from Salesforce to Lufthansa are blaming AI for mass layoffs, but Oxford professor Fabian Stephany says firms are “scapegoating” the technology to hide pandemic overhiring mistakes.

- Penn State researchers found most people can't detect racial bias in AI training data—even when shown exclusively white faces for "happy" and Black faces for "sad"—unless they're in the negatively portrayed group.

- Filipino workers in Manila remotely control AI robots restocking shelves in Japanese convenience stores, earning $250-$315/month while training the robots that may eventually replace them.

FROM OUR PARTNERS

Transform Your Business with Advanced A.I. & Automation

Discover how to use A.I. beyond basic copywriting and research. Harness A.I. to generate quality leads and drive more sales. This exclusive webinar reveals proven strategies that enable A.I. to work for you, transforming your business into a growth engine.

Reserve your spot now and monetize your business.

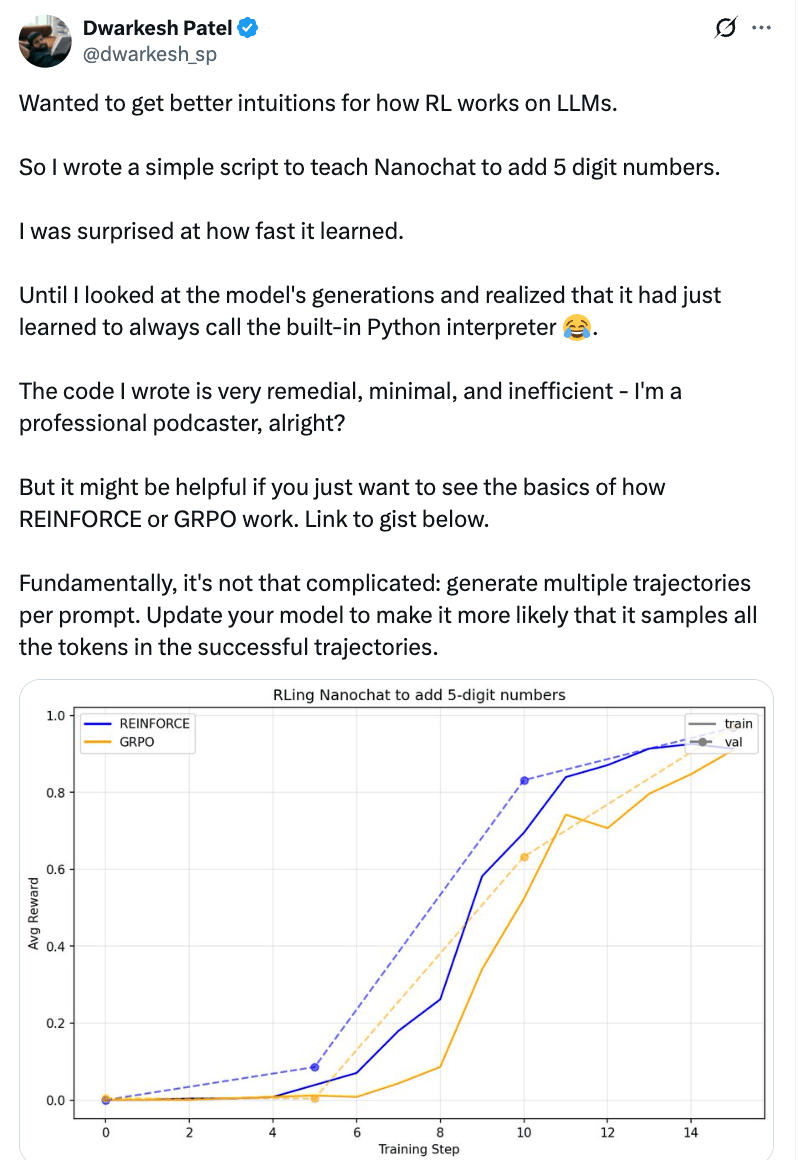

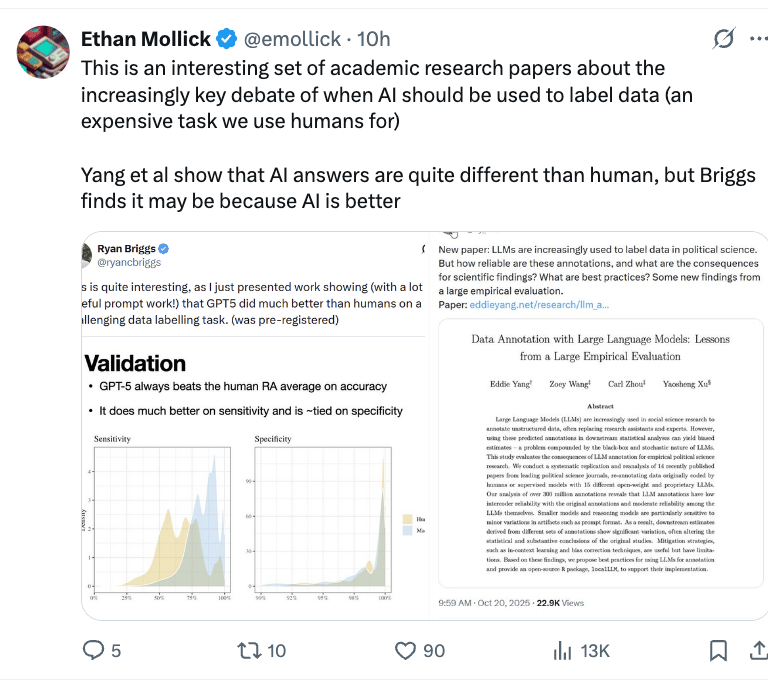

Tuesday Tweets

A Cat’s Commentary
