Welcome, humans.

Today at 10:30am PST / 12:30pm CST, we're going hands-on with OpenAI’s Codex coding agent LIVE with Alexander Embiricos, the product lead for Codex at OpenAI.

The idea is to take you from zero experience to confidently managing coding agents: we’ll set Codex up together, build real projects, and push it to its limits (we're definitely going to try to break it lol).

We’ll also try to get Alexander to share how the OpenAI team actually uses these tools, plus their best practices for getting the most out of them. If you already use ChatGPT and want to learn how to code w/ agents, this one’s for you. Tune in live here.

Here’s what happened in AI today:

- We recap the “Best Papers” winners from NeurIPS 2025.

- OpenAI ordered to hand over 20M ChatGPT logs in lawsuit.

- The EU launched an antitrust investigation into Meta's WhatsApp AI policies.

- New studies found chatbots persuaded about 1 in 25 voters to change their candidate preferences.

P.S. We have a goal to reach 10K subscribers on YouTube before the end of the year, and we’re so stinkin’ close! Can you help us out by clicking here? 😄

NeurIPS Just Crowned the Year's Best AI Research (And You Can Actually Understand It)

NEWS BRIEF: The Best Papers of NeurIPS 2025, Explained

Ever wonder which AI breakthroughs researchers actually think matter? The machine learning Olympics just wrapped up, and the winners are addressing the questions keeping AI scientists up at night.

NeurIPS, short for Neural Information Processing Systems, is basically the Oscars of AI research. Every December, thousands of researchers gather to share cutting-edge work. Getting a paper accepted here is tough. Winning Best Paper? That's career-defining.

This year's seven award winners tackle everything from why AI models all sound the same to how we can finally build truly deep neural networks. Let's break down what they actually discovered. Here are the four Best Paper winners:

- Artificial Hivemind: Tested 70+ language models and found they generate eerily similar responses, both across models (ChatGPT, Claude, and Gemini produce variations on the same theme) and within a single model (constant self-repetition). The authors call this the “Artificial Hivemind” effect and show that adjusting temperature settings or using model ensembles doesn't actually create diversity.

- Gated Attention for Large Language Models: One small tweak, a “gate” placed after the attention mechanism that acts like a smart filter, consistently improves LLM performance across 30+ variants, including models up to 15 billion parameters. It's already shipping in Qwen3-Next with open-source code, and NeurIPS judges expect it to “be widely adopted.”

- 1000 Layer Networks for Self-Supervised RL: While most reinforcement learning models use just 2-5 layers, these researchers built networks with up to 1,024 layers for robots learning to reach goals without any human guidance, achieving 2-50x better performance and showing that RL can scale like language models.

- Why Diffusion Models Don't Memorize: AI image generators avoid copying training data because training has two distinct timescales: an early phase where models learn to create good images and a later phase where memorization begins. The time before memorization kicks in grows linearly with dataset size, which opens a sweet spot for stopping training before the model starts “cheating” by copying.
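Curious what “a gate after attention” actually looks like? Here's a toy sketch in plain Python, assuming the variant we understand the paper to favor: a sigmoid gate computed from each token's own input and multiplied elementwise onto the attention output. The names `gated_attention`, `Wq`, `Wk`, `Wv`, and `Wg` are our own hypothetical labels; this is a single-head illustration with no output projection, not the Qwen3-Next or paper code.

```python
import math


def matvec(W, x):
    # W is a list of rows (out_dim x in_dim); x is a vector of length in_dim
    return [sum(w * xj for w, xj in zip(row, x)) for row in W]


def softmax(scores):
    # Subtract the max for numerical stability before exponentiating
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def sigmoid(z):
    # Numerically stable logistic function, output in (0, 1)
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def gated_attention(xs, Wq, Wk, Wv, Wg):
    """Toy single-head causal attention with an elementwise sigmoid output gate.

    xs: list of token vectors (seq_len x d_model)
    Wq, Wk, Wv, Wg: hypothetical weight matrices (d_head x d_model)
    Returns one gated output vector (length d_head) per token.
    """
    qs = [matvec(Wq, x) for x in xs]
    ks = [matvec(Wk, x) for x in xs]
    vs = [matvec(Wv, x) for x in xs]
    d_head = len(qs[0])
    outputs = []
    for t, q in enumerate(qs):
        # Causal mask: token t only attends to positions 0..t
        scores = [sum(a * b for a, b in zip(q, ks[s])) / math.sqrt(d_head)
                  for s in range(t + 1)]
        weights = softmax(scores)
        # Standard attention output: weighted average of value vectors
        attn = [sum(weights[s] * vs[s][i] for s in range(t + 1))
                for i in range(d_head)]
        # The gate: computed from the current token's input, squashed into (0, 1)
        gate = [sigmoid(g) for g in matvec(Wg, xs[t])]
        # Elementwise filter: each output channel is scaled down or passed through
        outputs.append([a * g for a, g in zip(attn, gate)])
    return outputs


if __name__ == "__main__":
    # Hypothetical toy weights; real models learn these during training
    xs = [[1.0, 0.0], [0.0, 1.0]]
    identity = [[1.0, 0.0], [0.0, 1.0]]
    zeros = [[0.0, 0.0], [0.0, 0.0]]
    print(gated_attention(xs, identity, identity, identity, zeros)[0])  # [0.5, 0.0]
```

With all-zero gate weights, every gate sits at 0.5 and simply halves the attention output; strongly negative gate scores push a channel toward zero, which is roughly the “smart filter” idea described above.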

The three runners-up are covered in the full news brief!

Why this matters: The gated attention mechanism is already in production. The hivemind problem will push researchers to develop models that deliberately diversify outputs. And the RL depth scaling could unlock a new generation of capable robots and agents.

If you're using AI tools daily, watch for models that explicitly advertise diversity in outputs or deeper reasoning capabilities; these papers just laid the roadmap for what's coming next.

FROM OUR PARTNERS

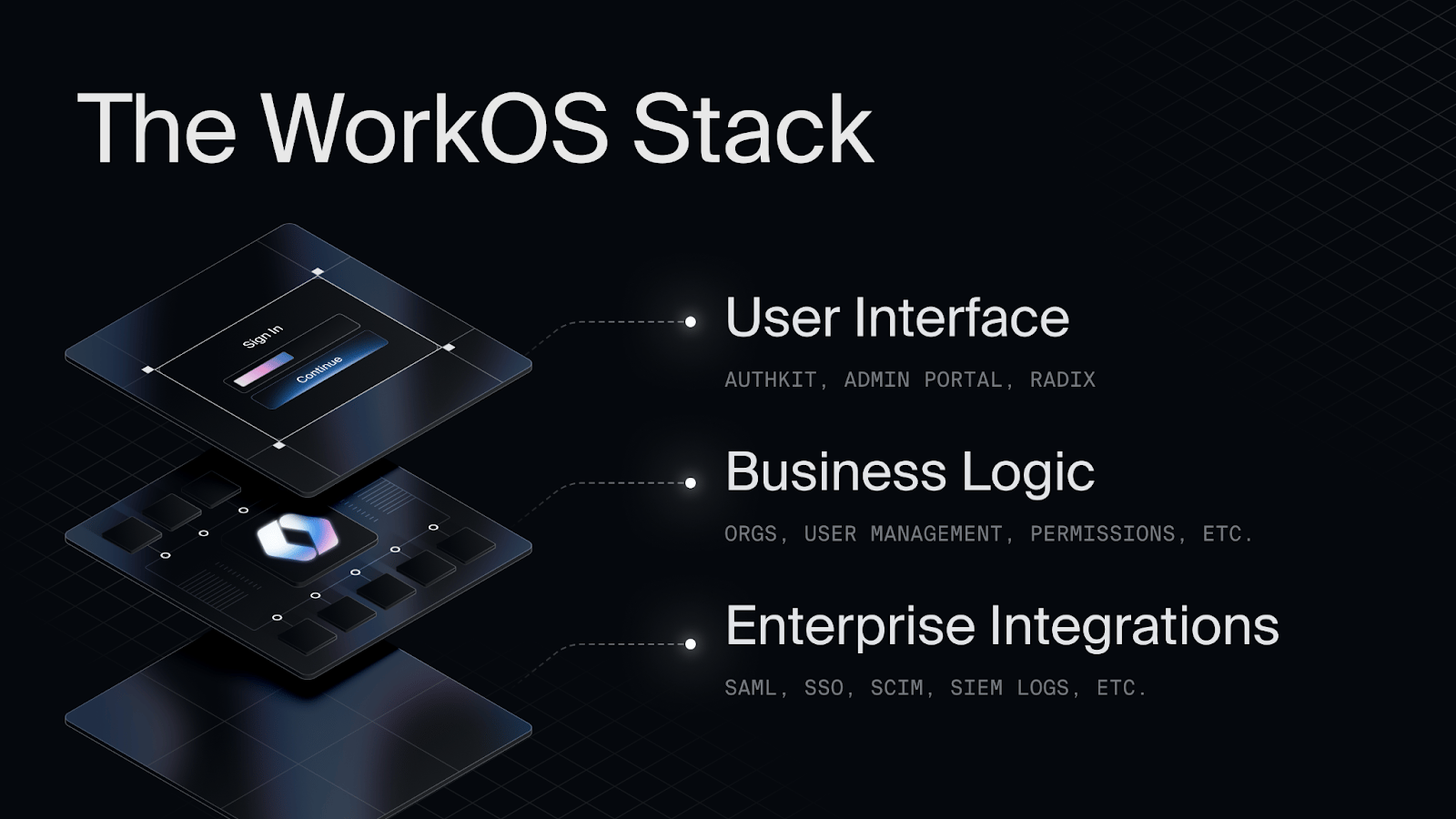

The Platform Powering Auth, Identity, and Security for AI Products

Enterprise customers expect more than a login screen. They demand SSO, directory sync, granular roles and permissions, and detailed audit logs, all built to strict compliance standards.

WorkOS gives growing teams these enterprise foundations without slowing development:

- Modular APIs for authentication and access control

- A hosted Admin Portal that simplifies customer onboarding

- Built-in security and compliance support for standards like SOC 2, GDPR, and HIPAA

Trusted by OpenAI, Cursor, Vercel, and 1000+ more companies, WorkOS powers auth, identity, and security for AI products. Your first million MAUs are free.

Prompt Tip of the Day

Ahead of our Codex deep dive w/ OpenAI today, check out OpenAI's new GPT-5.1-Codex-Max prompting guide, which lays out prompting principles that work just as well for everyday ChatGPT and Claude users.

Buried in the technical docs are five game-changing techniques:

- Bias toward action. Remove any instructions asking AI to “plan” or “outline” first; they cause premature stopping. Instead, say: “Complete this fully with all sections finished, don't just outline.”

- Batch your requests. Ask for multiple things simultaneously: “Analyze these three documents together and compare key themes” beats three separate prompts.

- Persist until complete. Add this magic phrase: “Deliver finished work, not just recommendations or next steps.”

- Be ruthlessly specific. Tell AI exactly what you want: “Write this as a McKinsey-style report with executive summary, three sections, and data-driven recommendations.”

- Avoid “AI slop.” The guide warns against “safe, average-looking” outputs. Try: “Create something distinctive and intentional; avoid generic corporate templates.”

Our favorite insight: That last one. Whether you're making presentations, reports, or any content, explicitly instructing AI to avoid generic templates and make bold choices transforms mediocre outputs into memorable ones. The difference between “create a presentation” and “create a distinctive presentation that avoids typical corporate slide templates” is enormous. Check out the full guide on GitHub.

Treats to Try

*Asterisk = from our partners (only the first one!). Advertise to 600K readers here!

- *ICYMI: You can actually run the models beating GPT-4o. Dell Pro Max with GB10 handles NVIDIA Nemotron 70B, which ranks #1 on Arena Hard, ahead of both GPT-4o and Claude 3.5. Run cutting-edge 70B models for inference, fine-tune on your data, or prototype AI products locally before deploying to production. This is the kinda hardware that separates teams experimenting with AI from teams shipping with it. Run frontier models locally with this.

- Gemini 3 Deep Think is now available to Google AI Ultra subscribers; it outperforms Gemini 3 Pro on advanced reasoning benchmarks by exploring multiple solution paths simultaneously to tackle complex math, science, and coding problems.

- NotebookLM now generates full presentation decks from your documents—upload research papers, reports, or articles and it creates polished slides that summarize your content, though current slides are images (editing tools coming soon). See Ethan Mollick’s demo.

- Willow Voice lets you dictate messages, emails, and notes 5x faster than typing, and new today you can manually select your preferred languages and sign up for access to the new Windows Beta (download for iOS here).

- MagicPath now lets you drag images onto your canvas, reference them in chat with code, and turn them into working components (or, really cool, mix multiple images together to generate new design styles).

- Fellow 5.0 records your meetings (with or without a bot), auto-summarizes them with tailored templates, and pipes the results into your existing tools so follow-ups actually happen—no pricing details.

- Coverbase helps you turn painful third-party procurement into a guided workflow by using AI agents to gather vendor documents, prefill risk answers, route approvals, and monitor suppliers across security, legal, and finance in one system (raised $16M)—enterprise pricing via demo.

Around the Horn

This talk with Yann LeCun and Pim DeWitte of General Intuition was fantastic; you’ll learn a lot about the problems with current AI systems and a few possible alternative paths.

- OpenRouter analyzed 100 trillion tokens of real-world LLM usage and found that reasoning models now account for 50% of all inference, open-source models have captured a third of the market (with Chinese models driving much of that growth), and roleplay dominates OSS usage at 52%, outpacing every other category including programming. It also found that early user cohorts that hit perfect model-market fit show a “Cinderella Glass Slipper” retention effect, staying engaged far longer than later adopters.

- The European Commission launched an antitrust investigation into Meta's October policy change that bans third-party AI chatbots like ChatGPT and Perplexity from WhatsApp's business API while allowing Meta AI to remain—a move that could result in fines up to 10% of Meta's global annual revenue if found to violate EU competition rules.

- Chatbots in new Science and Nature studies persuaded about 1 in 25 voters to shift their candidate preferences, more than typical TV campaign ads do, raising fresh worries about how cheaply AI might scale political persuasion, whether or not its claims are true.

- Kunievsky's paper specifically argues that as AI makes targeted persuasion cheaper, political elites will have incentives to deliberately engineer more polarized or “semi-locked” public opinion distributions instead of polarization just emerging on its own.

- Microsoft quietly cut its internal sales-growth targets for Azure AI agent products roughly in half, after a report said many reps missed aggressive quotas amid customers’ reluctance to pay for still-unproven agentic tools.

- OpenAI was ordered by a U.S. magistrate judge to hand over about 20M de-identified ChatGPT chat logs to the New York Times and other publishers in a copyright lawsuit, rejecting the company’s privacy objections and giving it seven days to produce the data.

- The EU plans to open a formal bidding process in early 2026 for AI “gigafactories” (large-scale compute facilities) to build domestic AI infrastructure and reduce reliance on U.S. tech giants, though any plants will still likely depend on Nvidia GPUs.

- AI Trade Arena gave five LLMs (GPT-5, Claude Sonnet 4.5, Gemini 2.5 Pro, Grok 4, and DeepSeek) $100K each to trade stocks for 8 months in a backtested simulation using time-filtered data. Grok performed best and DeepSeek came second, with most models loading up on tech stocks that did well, while Gemini came last as the only model that diversified into non-tech holdings.

FROM OUR PARTNERS

Webinar: Getting engineers to trust coding agents

How do you actually get engineers to adopt coding agents? In this webinar, Vellum will share their engineering playbook behind testing different coding agents and increasing monthly PR count by 70%. They’ll show what worked, what failed, and how agents now cover around 40% of engineering output. Special guest: Eashan Sinha from Cognition! Register here

Intelligent Insights

- IBM CEO Arvind Krishna walked through napkin math suggesting there’s “no way” today’s trillions in AI data-center capex will earn a return at current costs, and he put the odds that current LLM tech reaches AGI at just 0–1%.

- Martin Alderson makes a data-dense case that while AI datacenter capex could still hit a rough patch if agent adoption or financing slows, we’re not replaying the telecom crash: slowing hardware efficiency and surging agent workloads mean excess capacity is likely to get used over time rather than sit dark forever.

- Evan Armstrong argues that in an AI world where LLMs make coding cheap, defensible power shifts to marketplace companies like DoorDash that own aggregated demand and can rapidly bolt on vertical SaaS, hardware, and services around their platforms. So “the future of software” is really about distribution flywheels, not standalone apps. Seems true anecdotally so far, which is why “vibe coding” tools need to make distribution easier. Where are all the platforms for vibe-coded tools and apps?!

- Dwarkesh Patel says he's bearish on AI in the short term because current models lack robust on-the-job learning: instead of learning the way humans do, they require expensive "pre-baking" of skills through RLVR (reinforcement learning with verifiable rewards). But he's explosively bullish long-term, because once models achieve true continual learning (which he expects in 5-10 years), billions of human-like intelligences on servers that can copy and merge their learnings will represent actual AGI worth trillions.

- Read the rest of this week’s top insights and cool finds here.

A Cat’s Commentary
