Sam Altman just revealed his endgame for OpenAI (and Stargate, his super AI datacenter project): build a "factory" that churns out 1 gigawatt of AI infrastructure every single week. That's a nuclear reactor's worth of compute, except instead of powering homes, it's powering what he calls "Abundant Intelligence."

But the story got even wilder Tuesday when OpenAI, Oracle, and SoftBank announced five new Stargate datacenter sites across America, from the deserts of New Mexico to the plains of Ohio, putting them ahead of schedule to secure the full $500 billion, 10-gigawatt commitment by the end of 2025.

Let's unpack what's really happening here, because this isn't just about building bigger computers.

The Vision: AI as a Human Right

In his blog post "Abundant Intelligence," Altman lays out a future where AI becomes as fundamental as electricity—maybe even a basic human right. The problem? Everyone will want their own personal AI workforce, but we're nowhere close to having enough compute.

His solution is almost comically ambitious:

- Build from scratch: chips, power systems, buildings, even robotics.

- Location: Primarily in the US (where he notes we're lagging behind other countries).

- Timeline: Years to hit the 1-gigawatt-per-week milestone.

- Financing: "Interesting new ideas" coming later this year.

Altman calls increasing compute "the literal key to increasing revenue." More GPUs = more money. It's the simplest equation in tech.

The Infrastructure Land Grab

Tuesday's announcement shows how fast this is moving. The five new Stargate sites include:

Oracle's mega-builds:

- Shackelford County, Texas.

- Doña Ana County, New Mexico.

- A Midwest site (announcement coming soon).

- 600MW expansion near the flagship Abilene site.

- Combined: 5.5 gigawatts, 25,000+ onsite jobs.

SoftBank's fast-track sites:

- Lordstown, Ohio (operational next year).

- Milam County, Texas (powered by SB Energy).

- Combined: 1.5 gigawatts in 18 months.

Add these to the flagship Abilene campus (already running NVIDIA GB200 racks), plus ongoing CoreWeave projects, and you get 7 gigawatts of planned capacity with $400B in commitments, on track to secure the full 10-gigawatt commitment by the end of 2025.
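As a rough sanity check, the announced numbers can be tallied in a few lines of Python. The variable names and dictionary keys are illustrative assumptions; the gigawatt and dollar values are the ones quoted above. Treat this as back-of-the-envelope arithmetic, not an official breakdown of the announcement.

```python
# Announced capacity for the new sites, in gigawatts (figures quoted above).
new_site_capacity_gw = {
    "Oracle sites": 5.5,
    "SoftBank sites": 1.5,
}
planned_total_gw = 7.0   # planned capacity cited above
target_gw = 10.0         # full Stargate commitment
committed_usd_b = 400    # commitments so far, in billions of dollars
target_usd_b = 500       # full commitment, in billions of dollars

new_sites_gw = sum(new_site_capacity_gw.values())
remaining_gw = target_gw - planned_total_gw
remaining_usd_b = target_usd_b - committed_usd_b

print(f"New sites: {new_sites_gw:.1f} GW")
print(f"Capacity still to secure: {remaining_gw:.1f} GW ({remaining_gw / target_gw:.0%} of target)")
print(f"Funding still to commit: ${remaining_usd_b}B ({remaining_usd_b / target_usd_b:.0%} of target)")
```

On these figures, the two partners' new sites account for the 7 gigawatts on their own, which leaves 3 gigawatts and $100B to line up before the full commitment is secured.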

Oracle co-CEO Clay Magouyrk frames it as "unrivaled pace." SoftBank's Masayoshi Son calls it "paving the way for AI to advance humanity." And President Trump's administration claims credit for the accelerated timeline.

Dylan Patel of SemiAnalysis, who tracks every datacenter globally through satellites and supply chains, apparently called this months ago based on Oracle's datacenter acquisitions. The guy's basically the Nostradamus of AI infrastructure.

He also pointed out that the big Oracle-OpenAI deal from September 10th was even bigger than announced: $300B+ over five years, ramping to $80-90B annually by 2028. He says Microsoft "chickened out" at this scale, giving Oracle the opening. The clever bit? Oracle only secures datacenter capacity (the cheap part) up front, buying GPUs just 1-2 quarters before rental begins.

The Stakes According to Sam

Here's where it gets philosophical. With 10 gigawatts, Altman says AI could cure cancer OR provide personalized tutoring to every child on Earth. But if we're compute-constrained? We'd have to choose. Nobody wants to make that Sophie's choice.

This echoes what he told CNBC: solving the compute bottleneck means not having to decide between curing diseases or educating billions—we could do both.

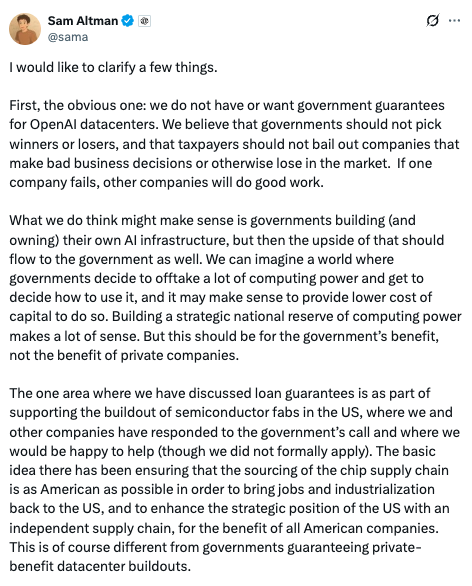

Of course, this isn't just a philosophical debate: there are real financial consequences on the line. OpenAI plans to spend $115B between now and 2029 to achieve this "abundant intelligence." But as Sherwood News said, "no company in history has ever lit that much money on fire intentionally," and raising the capital needed to fund all these deals would test the very limits of private markets. Though I guess if NVIDIA is funding it (at least in part), then the limit doesn't really exist. As Patel said, NVIDIA CEO Jensen Huang has so much cash and nothing to buy with it that he could literally "make venture capital dead" by taking every good round (but of course he won't pick winners among customers). From that POV, bankrolling OpenAI to buy the next generation of chips (Vera Rubin) makes a lot of sense...

But here's the reality check: buying these GPUs is "like buying cocaine," as Patel puts it. You text dealers asking "how much you got?" Multiple neoclouds are sold out of current-gen chips. He also said the current cutting-edge datacenter chips, the GB200s, have reliability concerns because one failed GPU affects the remaining 71 in the rack.
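To see why that shared failure domain matters, here's a minimal sketch. It assumes each GPU fails independently with the same probability over some time window. The failure rate is a made-up placeholder, not a measured GB200 figure, and `rack_disruption_probability` is a hypothetical helper written for this illustration.

```python
def rack_disruption_probability(per_gpu_failure_prob: float, gpus_per_rack: int = 72) -> float:
    """Probability that at least one GPU in the group fails, assuming independent failures."""
    return 1.0 - (1.0 - per_gpu_failure_prob) ** gpus_per_rack

# Hypothetical per-GPU failure probability over some time window (an assumption).
p = 0.001

single_gpu = rack_disruption_probability(p, gpus_per_rack=1)
full_rack = rack_disruption_probability(p)  # 72 GPUs: the failed one plus the remaining 71

print(f"Chance a standalone GPU is disrupted: {single_gpu:.2%}")
print(f"Chance a 72-GPU rack is disrupted:    {full_rack:.2%}")
```

The point is the shape of the math, not the specific numbers: when 72 GPUs share one fate, even a small per-GPU failure rate compounds into a much larger chance that the whole rack is affected.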

The Great Philosophical Divide

Now here's where things get spicy. While Altman's racing to build gigawatt factories, the other AI lab leaders have wildly different takes on what's actually needed:

Demis Hassabis (Google DeepMind) seems to think Altman's got it backwards. Fresh off his Nobel Prize win, Hassabis recently argued at the All-In Summit that AGI is still 5-10 years away and won't come from just adding more compute.

His critiques are surgical:

- Current AI can't form new hypotheses or create something as elegant as the game of Go (only play it).

- His test for AGI? Give it all human knowledge up to the year 1901 and see if it derives special relativity.

- He says we need "1-2 missing breakthroughs," not just scale.

- What are the missing pieces? True creativity, consistency (models still make high school math errors), and continual learning.

- His focus? Hybrid models combining neural networks with hard-coded physics.

Dario Amodei (Anthropic) takes a third path entirely. He refuses to even use terms like "AGI," calling them "marketing dopamine triggers." His contrarian views:

- There's a 20-25% chance the exponential "peters out" in the next 2 years.

- Revenue is growing roughly 10x yearly ($0→$100M→$1B→$4.5B), making Anthropic the "fastest growing software company in history."

- Anthropic's focus is on business users who'll pay 10x more than consumers (for example, pharma cares about PhD-level biochemistry; 99% of consumers don't).

- He claims his team achieves with $100M what others need $1B for via "10x capital efficiency."

- But he won't reveal why Claude dominates at coding ("we don't talk externally about it").

- And he says 100M-word context windows are possible (equivalent to a human lifetime of hearing); the only obstacle is inference cost (this is bullish for Sam's bet on more compute TBH).
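The "10x yearly" revenue claim is easy to check against the milestones quoted in the list above. A quick sketch (the variable names are illustrative, the dollar figures are the ones quoted, and the $0 starting point is skipped because a growth multiple from zero is undefined):

```python
# Revenue milestones quoted above, in millions of dollars (the $0 start is omitted).
revenue_musd = [100, 1_000, 4_500]

# Pair each milestone with the one that follows it.
steps = list(zip(revenue_musd, revenue_musd[1:]))

# Growth multiple for each step.
multiples = [later / earlier for earlier, later in steps]

for (earlier, later), multiple in zip(steps, multiples):
    print(f"${earlier}M -> ${later}M: {multiple:.1f}x")
```

On the figures as quoted, the first step is a clean 10x and the latest step works out to 4.5x.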

Three Visions, Three Bets

So why'd we share all that? Because we have three radically different philosophies playing out amongst the large labs:

- Altman: Infrastructure is destiny. Build the rails, and the innovation will follow. It's a supply-side bet that more compute automatically yields better AI.

- Hassabis: Scientific breakthroughs matter more than scale. We need to crack the code on creativity and reasoning, not just throw GPUs at the problem (or in Google's case, TPUs).

- Amodei: Commercial efficiency wins. Build for businesses who'll actually pay, achieve 10x more with 10x less, and refuse to chase marketing terms like "AGI."

The Darker Read

Matt Berman points out a dystopian interpretation of all this news: in a future where production only requires compute, you can literally pay to win. This could lock everyone without giant datacenters into a permanent underclass, effectively freezing the economy (and you, on whatever rung of the economic ladder you occupy) whenever AGI arrives.

So is all this spending about curing cancer and educating billions, as Altman claims? Or is it a robber baron-equivalent land grab to control the labor market of the future?

Let's also not forget that there's still a great power rivalry between the US and China. Dylan Patel says that while China publicly claims it doesn't need NVIDIA chips, it's secretly desperate for them (Huawei grabbed 2.9M through shell companies before getting caught, for example). This means that not only does OpenAI have competition for chips from all its hyperscale peers, there's a geopolitical angle to this intelligence-grab, too.

Despite whatever restrictions the US puts on China, its home-grown chip industry is steadily catching up, and even if it won't close the gap in the immediate term, it would be foolish to think it never will. As Dylan notes: "You're betting on China not being able to manufacture...that's when, not if."

The Real Question

With over 300 proposals from 30 states reviewed, and more sites coming, this is shaping up to be one of the largest infrastructure buildouts in history. The combined investments dwarf many countries' entire GDPs.

So are we watching the birth of humanity's greatest tool, or its most dangerous concentration of power?

When one company can pump out a nuclear plant's worth of AI weekly, when compute becomes the only currency that matters, when three to five tech labs control humanity's path to whatever comes after human-level intelligence—what exactly are we building here?

Altman says it's "the coolest and most important infrastructure project ever." Hassabis wants to usher in a "golden era of science." Amodei warns the exponential could still "peter out" but will do everything he can to keep growing.

They're all right. And they're all playing with fire.

The race isn't just to AGI anymore—it's to own the means of intelligence production. And at gigawatt scale, whoever wins might just own everything else too.