Remember when $40 billion felt like a massive AI investment? That’s nothing, y’all. NVIDIA and OpenAI just announced a new partnership that makes every previous AI deal look like pocket change: NVIDIA plans to invest up to $100 billion in OpenAI to build 10 gigawatts of AI datacenters.

Here's what this monster deal includes:

- 10 gigawatts of NVIDIA systems (that's millions of GPUs, NVIDIA’s AI chips).

- Up to $100B investment from NVIDIA, paid progressively as each gigawatt comes online.

- First deployment in late 2026, using NVIDIA's Vera Rubin platform.

- This is all to help the 700M weekly active users already on OpenAI's platform get reliable access to top-tier intelligence.

P.S. Jensen Huang told CNBC this equals 4-5 million GPUs, which is "about what NVIDIA ships in a year and twice what they shipped last year."

So like, how much power is 10 gigawatts?

Let’s put that gigantic number in perspective:

- As we wrote Sunday, 1 gigawatt ≈ the electrical capacity of a typical large nuclear plant.

- Huang previously said building 1 gigawatt of datacenter capacity costs $50-60 billion, with about $35 billion of that going to NVIDIA chips and systems.

- A gigawatt is a unit of power, meaning how fast something uses electricity. In more familiar terms, it's 1 billion watts, or 1,000 megawatts, which is enough to power about 750,000 homes.

- So, 10 gigawatts could power ~7.5 million homes.

- Instead, it's going to power millions of GPUs to train OpenAI's next-generation models on their path to what they're calling “superintelligence.”
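For the spreadsheet-minded, here's a quick back-of-the-envelope sketch of the numbers above. It only uses figures from this piece (750,000 homes per gigawatt, Huang's $50-60B per gigawatt with ~$35B going to NVIDIA, and his 4-5 million GPU estimate). The watts-per-GPU line simply divides total capacity by GPU count, so treat it as a rough all-in facility figure (cooling, networking, and so on), not a chip spec.

```python
HOMES_PER_GW = 750_000             # rule of thumb from this article
COST_PER_GW_USD = (50e9, 60e9)     # Huang's estimate for building 1 GW
NVIDIA_SHARE_PER_GW_USD = 35e9     # portion of that going to NVIDIA chips/systems
GPU_COUNTS = (4e6, 5e6)            # Huang's 4-5 million GPU estimate for 10 GW

def homes_powered(gw: float) -> int:
    """Homes a given capacity could power, using the 750k-homes-per-GW rule."""
    return int(gw * HOMES_PER_GW)

def watts_per_gpu(gw: float, gpus: float) -> float:
    """Total facility power divided evenly across GPUs (includes all overhead)."""
    return gw * 1e9 / gpus

def buildout_cost(gw: float) -> tuple:
    """Low and high total build cost for a given number of gigawatts."""
    return tuple(gw * c for c in COST_PER_GW_USD)

gw = 10
print(homes_powered(gw))                              # 7500000
print([watts_per_gpu(gw, n) for n in GPU_COUNTS])     # [2500.0, 2000.0]
low, high = buildout_cost(gw)
nvidia = gw * NVIDIA_SHARE_PER_GW_USD
print(f"${low / 1e9:.0f}B-${high / 1e9:.0f}B total, ~${nvidia / 1e9:.0f}B to NVIDIA")
```

By these rules of thumb, the full 10 GW would cost roughly $500-600B to build, with ~$350B of that flowing to NVIDIA hardware, which is useful context next to NVIDIA's up-to-$100B investment.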

Remember that "superintelligence" refers to AI that would be smarter than humans at virtually every task: think of it as AI that could outperform the best human doctors, scientists, and engineers all at once.

This isn't just about bigger models, mind you: it's about infrastructure for what both companies see as the future economy. As Sam Altman put it, “Compute infrastructure will be the basis for the economy of the future.” Compute infrastructure in this context is basically all the computing power and data centers needed to run AI systems. And let's not forget, there's a lot of compute in the pipeline:

- Just days ago, Microsoft unveiled Fairwater, a $7.3 billion AI datacenter in Wisconsin launching early 2026, calling it "the world's most powerful AI datacenter."

- OpenAI already has a separate $300 billion, 5-year deal with Oracle for 4.5 gigawatts of capacity as part of their Stargate project.

- There's also Colossus 2, the new datacenter build-out from Elon Musk's xAI, billed as the "first gigawatt-scale" datacenter.

- Meta also recently announced multi-gigawatt "superclusters" called Prometheus and Hyperion, with Hyperion scaling to 5 gigawatts.

And with OpenAI's Stargate I facility in Abilene, Texas, already partially operational (Oracle delivered the first NVIDIA GB200 racks last month), this infrastructure buildout is moving fast.

The money and will are definitely there. Microsoft added over 2 gigawatts of new capacity last year, equivalent to "roughly the output of 2 nuclear power plants." Eight major hyperscalers expect their 2025 spending on AI datacenters and computing resources to rise 44% year over year to $371 billion, while Dylan Patel of SemiAnalysis thinks that number could be closer to $450B-$500B.

Obviously, the scale of this all is unprecedented. McKinsey estimates the industry needs $5.2-7.9 trillion in AI datacenter investments by 2030, and Goldman Sachs predicts global datacenter power demand will increase 165% by 2030.
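A quick sanity check on those growth numbers, as a sketch: back the 2024 base out of the $371B / 44% figure, then see what growth rate Patel's range would imply. It also shows that a "165% increase" means multiplying by 2.65, not 1.65. The helper names are just illustrative.

```python
def implied_base(current: float, pct: float) -> float:
    """Back out the prior-year value from this year's value and its YoY growth."""
    return current / (1 + pct / 100)

def growth_pct(new: float, base: float) -> float:
    """Percent change going from base to new."""
    return (new / base - 1) * 100

capex_2025 = 371e9
capex_2024 = implied_base(capex_2025, 44)  # roughly $257.6B
print(f"Implied 2024 base: ${capex_2024 / 1e9:.1f}B")

# What Dylan Patel's $450B-$500B range would mean in YoY terms
for estimate in (450e9, 500e9):
    print(f"${estimate / 1e9:.0f}B -> {growth_pct(estimate, capex_2024):.0f}% YoY")

# Goldman's "+165% by 2030" is a multiplier of 1 + 1.65 = 2.65x the baseline
power_multiplier_2030 = 1 + 165 / 100
print(f"2030 power demand = {power_multiplier_2030:.2f}x the baseline")
```

In other words, Patel's estimate would mean spending grows roughly 75-94% this year rather than 44%.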

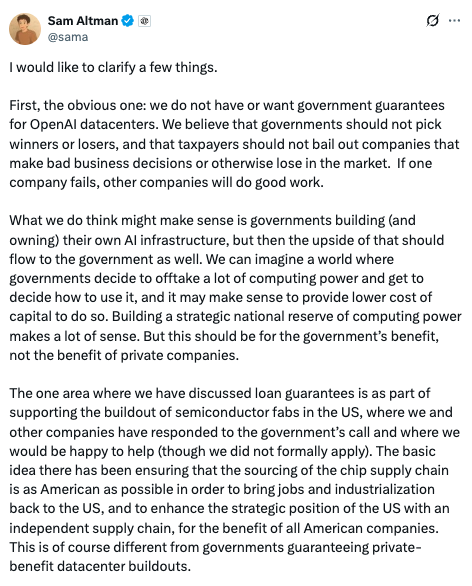

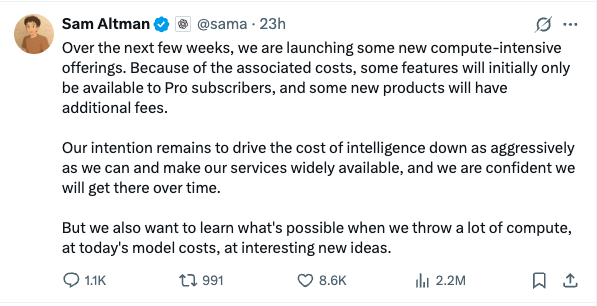

Also, it looks like some “compute-intensive” new product offerings are coming soon from OpenAI, with “additional fees” to power “interesting new ideas.”

Compute-intensive means these new features will require a lot of computer processing power to work, so they'll likely cost more to use.

As Sam pointed out in this viral CNBC clip, solving the compute bottleneck could be the difference between having to pick either curing cancer OR providing free education for everyone on Earth… and being able to do both. Well, when you put it that way…

Why this matters:

The partnership kicks off in the second half of 2026 with NVIDIA's new Vera Rubin platform. Vera Rubin is NVIDIA's next-generation, super-powerful computing system designed specifically for AI work.

OpenAI will use this massive compute power to train models beyond what we've seen with GPT-5 and likely also to power what's called inference (when you ask ChatGPT a question and it gives you an answer). And NVIDIA gets a guaranteed customer for their most advanced chips. Infinite money glitch go brrr, am I right?

For example, Dylan Patel pointed out that after NVIDIA announced a $5B investment in Intel, Intel's stock was up 30%… $5B investment, $1B profit right away. Though to be fair, this kinda deal is as old as the AI industry itself. And as Corey writes, even if all this circular money dealing is a sign of a bubble, this bubble will leave behind gigawatt-class AI factories, so it's far from unproductive. Instead, you could look at it as part of a decade-long infrastructure super-cycle.

Where’s all that power gonna come from, man?

The power IS the problem at this point. We think this gets super interesting when you pair it with the other big headline from the same day OpenAI and NVIDIA announced their deal: MIT Technology Review and TechCrunch report that Eni signed a commercial agreement worth more than $1B with Commonwealth Fusion Systems (CFS) to buy electricity from CFS’s first commercial fusion plant, the 400‑MW ARC, to be built in Virginia near Richmond.

Fusion power is a type of clean energy that works by fusing the nuclei of light atoms together (the same process that powers the sun) rather than splitting heavy ones like traditional nuclear power. The Virginia ARC plant is expected to connect to the grid in the early 2030s, at which point Eni plans to resell its power into the grid.

Let's put this in perspective:

- This is CFS’s second big customer in three months: in June, Google agreed to buy 200 MW from the same ARC plant—roughly half the output.

- CFS recently raised $863M, with backers including NVentures (NVIDIA), Google, Breakthrough Energy Ventures, and a 12‑company Japanese consortium.

- CFS is completing its SPARC demonstrator (Massachusetts), aiming to turn it on in 2026 on the path to scientific breakeven (the point when a fusion reaction releases more energy than it takes to start it), then plans to scale to the ARC power plant.

On that last point, scientific breakeven is the holy grail of fusion energy. It occurs when you get more energy out of the fusion reaction than you put in to start it, proving the technology actually works. If achieved, it'll be a big deal.
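Physicists usually express this as a gain factor, Q: fusion energy out divided by the energy delivered to the fuel, with Q > 1 meaning scientific breakeven. Here's a minimal sketch using one widely reported data point, the National Ignition Facility's December 2022 shot, which delivered 2.05 MJ of laser energy and got about 3.15 MJ of fusion energy back. Note that this ratio ignores the energy needed to run the lasers or magnets themselves, which can be far larger; clearing that bar (often called engineering breakeven) is a separate, harder milestone.

```python
def gain_factor(energy_out_mj: float, energy_in_mj: float) -> float:
    """Scientific gain Q = fusion energy released / energy delivered to the fuel."""
    if energy_in_mj <= 0:
        raise ValueError("input energy must be positive")
    return energy_out_mj / energy_in_mj

def is_scientific_breakeven(energy_out_mj: float, energy_in_mj: float) -> bool:
    """True when the reaction releases more energy than was put in (Q > 1)."""
    return gain_factor(energy_out_mj, energy_in_mj) > 1.0

# NIF, December 2022: 2.05 MJ of laser energy in, ~3.15 MJ of fusion energy out
q = gain_factor(3.15, 2.05)
print(f"Q = {q:.2f}, breakeven: {is_scientific_breakeven(3.15, 2.05)}")
# Q = 1.54, breakeven: True
```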

CFS isn't the only player trying to make fusion happen. Sam Altman and Microsoft have their own power deal with a rival fusion company called Helion, which just started construction on a plant that aims to provide power for Microsoft by 2028. Different designs, but the same goal: reliable, zero‑carbon, terawatt‑hour‑scale power for AI.

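Terawatt-hour scale means truly massive amounts of clean electricity: a terawatt-hour is a trillion watt-hours of energy, or what a 1-gigawatt plant produces running nonstop for about 42 days. Stack up enough of those every year and you're in the territory of entire countries' electricity use.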
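Since watts measure power and watt-hours measure energy, the bridge between them is just time. Here's a small sketch that converts capacity into annual terawatt-hours. The capacity factor (the share of the year a plant effectively runs at full output) is an input you supply; the 90% used for ARC below is purely an illustrative assumption, not a CFS figure.

```python
HOURS_PER_YEAR = 8_760

def annual_energy_twh(power_gw: float, capacity_factor: float = 1.0) -> float:
    """Energy (TWh) = power (GW) x hours x capacity factor / 1,000."""
    if not 0.0 <= capacity_factor <= 1.0:
        raise ValueError("capacity_factor must be between 0 and 1")
    return power_gw * HOURS_PER_YEAR * capacity_factor / 1_000

print(f"{annual_energy_twh(1):.2f} TWh")         # 8.76 TWh: a 1 GW plant running all year
print(f"{annual_energy_twh(10):.1f} TWh")        # 87.6 TWh: the 10 GW NVIDIA/OpenAI build, fully loaded
print(f"{annual_energy_twh(0.4, 0.9):.2f} TWh")  # 3.15 TWh: 400 MW ARC at an assumed 90% capacity factor
```

So the 10 GW buildout, running flat out, would use nearly three times the 30.9 TWh Amazon consumed.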

And get this: these two are far from the only companies in this space. TechCrunch recently rounded up all the nuclear fusion companies that have raised over $100M (a group worth an estimated $10B or more combined), and there's... surprisingly a ton:

- Commonwealth Fusion Systems (CFS) — ≈$3B total. They're building a tokamak-style reactor (a donut-shaped chamber that uses powerful magnets to contain the super-hot plasma needed for fusion reactions) with high‑temperature superconducting (REBCO) magnets, starting with the SPARC demo on the way to the 400‑MW ARC plant in Virginia.

- TAE Technologies — $1.79B total. They're using what's called a field‑reversed configuration (FRC) stabilized via particle‑beam injection for extended confinement. This is a different approach to fusion that uses an elongated, football-shaped plasma instead of a donut-shaped one.

- Helion — $1.03B total. They are using FRC plasmoids with direct electricity extraction; their power supply agreement with Microsoft targets 2028. Plasmoids are basically self-contained balls of plasma, and Helion's design aims to pull electricity from them directly, without needing steam turbines.

- Pacific Fusion — $900M Series A (but a milestone‑based funding round). They are using inertial confinement driven by coordinated electromagnetic pulses (Marx generators) instead of lasers. Inertial confinement fusion works by crushing tiny fuel pellets with intense energy pulses rather than holding plasma in magnetic containers.

- SHINE Technologies — $778M total. They are commercializing fusion-generated neutrons for uses like medical isotopes and nuclear waste recycling en route to a power plant design.

- General Fusion — $462.53M total. They are using Magnetized target fusion (MTF) with a liquid‑metal liner compressed by pistons. This approach uses liquid metal walls and mechanical pistons to compress the fusion fuel, kind of like a super-advanced piston engine.

- Tokamak Energy — $336M total. They're using, you guessed it, a spherical tokamak (like a regular tokamak but more apple-shaped, which can be smaller and potentially more efficient) with high-temperature superconducting (HTS/REBCO) magnets, plus a compact geometry that cuts down the number of magnets needed.

- Zap Energy — $327M total. They're using a fancy science called a sheared‑flow‑stabilized Z‑pinch, where an electric current running through the plasma generates its own confining magnetic field (no big external magnets required), plus a liquid‑metal blanket for heat capture.

- Proxima Fusion — €185M+ total. They are using stellarators (twisted, pretzel-shaped fusion reactors that are more stable than tokamaks but harder to build) for steady‑state, disruption‑resistant operation.

- Marvel Fusion — $161M total. They are using laser‑driven inertial confinement fusion (ICF), which uses incredibly powerful lasers to trigger fusion in tiny, specially designed targets (similar to how hydrogen bombs work, but controlled); their targets are silicon nanostructures, and they have major partnerships with LMU/CALA and ELI‑NP.

- First Light Fusion — $140M total. They've pivoted from power‑plant build to supplying inertial target/impact technology platforms (that is, focusing on the projectile technology that smashes into fusion targets rather than building whole power plants).

- Xcimer — $109M total. They're using laser‑ICF with electron‑beam‑pumped excimer lasers, targeting ~10‑megajoule (MJ) class systems with a molten‑salt first wall. This is another laser fusion approach using a different type of laser and molten salt to capture the energy.

Now, before you hit us with the ole "poor pleb, nuclear fusion is always fifty years away..." line, trust us, we know. But as the massive injection of cash suggests, this cycle looks different from previous fusion hype cycles, with multiple "net positive" energy fusion breakthroughs already demonstrated on a small scale (here, here, here, here, here, here, and here).

Also, this time around we've got more super-powerful computers than ever before to help us solve the extremely difficult science required to make this work, and we're building more and more powerful ones every day (like with NVIDIA's $100B investment in OpenAI).

Ironically, or I guess you could say in classic AI industry fashion, AI can benefit from fusion, and fusion can benefit from AI. For example, look at all the recent headlines of AI making further breakthroughs in fusion possible, such as...

- These Princeton researchers, who can forecast plasma instabilities milliseconds before they happen. Plasma instabilities are like sudden storms in the super-hot fusion fuel that can damage the reactor, so predicting them is like having a weather forecast for your fusion reactor.

- Microsoft's MatterGen and MatterSim: MatterGen generates new material structures from prompts describing the properties you want, while MatterSim simulates how those materials behave under test conditions thousands of times faster than existing computational tools.

- This research that can predict the likelihood of ignition on certain experiments (ignition is when the fusion reaction becomes self-sustaining, like getting a campfire to keep burning on its own).

- Chinese researchers, who developed two AI systems (including a multi-task learning model that monitors plasma states in real-time with 96.7% accuracy) to improve fusion reactor safety and performance.

- And this research from CFS themselves, the US DOE, and two national labs (PPPL and Oak Ridge), which produced an AI called HEAT-ML that can identify "magnetic shadows": areas where internal tokamak components block the plasma's heat from reaching other surfaces, similar to how a building casts a shadow that shields you from sunlight.

In fact, ITER, the world's largest nuclear fusion research project, recently highlighted 10 AI tools (including the aforementioned Microsoft tools) that can be used to improve fusion research, such as:

- Atlas (by Arena), an AI platform combining physics reasoning with language models that streamlines engineering processes by helping design, debug, and optimize complex fusion systems.

- Fine-tuned OpenAI models, like the chatbot trained on ITER's knowledge base that gives engineers natural language access to over one million fusion research documents.

- AI coding assistants like Microsoft Copilot & GitHub Copilot that accelerate software development and troubleshooting for fusion control systems.

- Computer Vision AI, an automated visual inspection system that checks the welds on ITER's massive tokamak vessel to ensure structural integrity.

- Physics-Informed Machine Learning, or AI models constrained by real-world physical laws, which enable real-time plasma stabilization through coordinated actuators and sensors.

- Hot Spot Detection AI (automated infrared image analysis) that prevents damage to plasma-facing components and enables longer, safer plasma pulses.

- AI-Based Physics Models, like fast computational models of plasma behavior, that create high-fidelity digital twins of ITER operations for complete system simulation and optimization.

ITER is a massive international fusion experiment being built in France; think of it as the world's most expensive science project (here's a great update on the project). Unfortunately, because of the many players involved and the sheer scale of the project, progress can feel glacial... so anything AI can do to speed it up will matter tremendously (and a wave of private competitors benefiting from ITER's research doesn't hurt).

When we talked with CFS' team about this, they had the following to say:

"AI inference and training consumes a massive amount of energy. It's the primary driver behind forecasts that the energy demand from data centers will double by 2030. Fortunately, that timing also lines up with when Department of Energy Secretary Chris Wright expects the U.S. will start harnessing fusion energy.

Hyperscalers like Google, CFS' first customer, are also taking notice — looking to CFS to support their business needs for computing and AI.

This new deal shows that traditional energy companies also see fusion’s advantages, as they face the increasing demands for more power on the grid from AI and data centers. This customer deal shows how valuable fusion power will be on the grid.

Fusion energy is an ideal power source: clean, safe, dispatchable, secure, with nearly unlimited fuel, and power plants that can supply round-the-clock electricity and be built just about anywhere. With AI power demands increasing exponentially, the world desperately needs this new energy source to succeed."

Even with all this progress, fusion is unlikely to take off in any meaningful way until deep into the 2030s, so until then, the race to power AI datacenters will probably play out through some combination of the following:

Short term (2025–2028): nearly all the power feeding AI will come from natural gas, existing nuclear, geothermal (where available), and renewables paired with big battery fleets, with the batteries being the key component for keeping power to critical AI training runs stable and predictable.

Medium term (2030s): In addition to existing nuclear, new small modular reactors could hit the grid by the early 2030s (if not sooner). Then, if CFS’s ARC (and/or rivals) hit their timelines, nuclear fusion enters the mix: first at premium prices that help establish price discovery and bankability, then scaling as factories, magnets, and supply chains mature.

Why natural gas and solar + batteries in the near term?

For starters, natural gas has become the go-to power source for AI data centers because it's reliable, abundant, and can ramp up quickly when demand spikes. Data centers are even building their own gas power plants right on-site to avoid grid delays. Companies like Energy Transfer are signing deals directly with data center developers to supply gas through pipelines, with some predicting data center gas demand could reach 10-12 billion cubic feet per day by 2030. That's like powering 15-18 million homes just for AI.

Here are a few more examples:

- Meta is spending $3.2 billion on three natural gas plants totaling 2.3 gigawatts to power its Louisiana data center, with Entergy acknowledging gas plants "emit significant amounts of CO2" but calling it the only affordable choice for 24/7 electricity.

- Microsoft's vice president of energy Bobby Hollis said they're "open to deploying natural gas with carbon capture technology" for AI data centers if it's "commercially viable and cost competitive."

- Chevron, GE Vernova and Engine No. 1 plan to deliver up to 4 gigawatts of power using natural gas for data centers, with the first projects expected to deliver power in 2027.

- Goldman Sachs projects natural gas demand from data centers will drive 3.3 billion cubic feet per day of new demand by 2030.

Meanwhile, Battery Energy Storage Systems (BESS) serve multiple roles in data centers: they provide backup power during outages, smooth out power fluctuations that could damage servers, and store energy during low-demand periods for use during peak times. Unlike traditional diesel backup generators that take minutes to start up, modern battery systems can kick in within seconds, and typical backup installations run for 1-2 hours. They're also much quieter and cleaner than diesel generators. As AI workloads spike from 15kW to 120kW+ per server rack in milliseconds, batteries help absorb these rapid power swings that would otherwise stress the electrical grid. And grid-scale battery energy storage systems can now discharge for 4-8 hours at full capacity, with some projects exploring longer-duration storage technologies.
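To make the "smoothing" idea concrete, here's a toy peak-shaving sketch: the battery discharges whenever a rack's load exceeds a fixed grid cap and recharges with the leftover headroom when load drops, so the grid sees a flat draw. The 15 kW and 120 kW swings come from the paragraph above; the 70 kW cap, battery size, and one-minute time steps are hypothetical numbers picked for illustration, and real systems use far more sophisticated controls.

```python
from dataclasses import dataclass
from typing import List, Sequence

@dataclass
class Battery:
    capacity_kwh: float   # usable energy (hypothetical)
    max_power_kw: float   # max charge/discharge rate (hypothetical)
    soc_kwh: float        # current state of charge

def peak_shave(load_kw: Sequence[float], grid_cap_kw: float,
               battery: Battery, step_h: float) -> List[float]:
    """Return grid draw per step; mutates battery.soc_kwh as it charges/discharges."""
    grid_draw = []
    for load in load_kw:
        if load > grid_cap_kw:
            # Discharge to cover the excess, limited by power rating and stored energy.
            need = load - grid_cap_kw
            discharge = min(need, battery.max_power_kw, battery.soc_kwh / step_h)
            battery.soc_kwh -= discharge * step_h
            grid_draw.append(load - discharge)
        else:
            # Recharge with headroom under the cap, limited by power rating and free space.
            headroom = grid_cap_kw - load
            room = (battery.capacity_kwh - battery.soc_kwh) / step_h
            charge = min(headroom, battery.max_power_kw, room)
            battery.soc_kwh += charge * step_h
            grid_draw.append(load + charge)
    return grid_draw

battery = Battery(capacity_kwh=50, max_power_kw=60, soc_kwh=25)
load = [15, 120, 120, 15, 120, 15]  # kW, one reading per minute
draw = peak_shave(load, grid_cap_kw=70, battery=battery, step_h=1 / 60)
print(draw)  # [70, 70, 70, 70, 70, 70]
```

The rack swings between 15 and 120 kW, but the grid only ever sees a steady 70 kW. The catch is that the battery has to be big enough, in both power and energy, to ride out the longest spike.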

Who is actually using this? A few examples:

- Amazon leads with 30.9 terawatt-hours consumed, 85% from renewables, aiming for 100% by 2025, and has signed supply agreements with more than 100 solar and wind projects globally.

- Google unveiled a $20 billion partnership with Intersect Power and TPG Rise Climate to develop industrial parks that co-locate gigawatts of data center capacity with clean energy plants.

- Elon basically used his access to giant battery packs to stabilize training runs for the latest versions of Grok, a sign that batteries are a critical, scalable solution for powering AI in the near term. Without them, solar and wind would be too intermittent to be reliable for 100% uptime.

Why solar and batteries over natural gas specifically? Price, mostly. Goldman Sachs analysis shows solar power costs $26/MWh vs. $37/MWh for combined-cycle natural gas, before accounting for carbon capture costs.
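Here's what that per-MWh gap looks like at datacenter scale, as a rough sketch: the annual cost of supplying a constant 1 GW load at each quoted price. It deliberately ignores the batteries or backup solar needs to actually run 24/7, as well as any carbon capture costs for gas, so treat it as an illustration of scale, not a real power bill.

```python
MWH_PER_GW_YEAR = 8_760 * 1_000  # 1 GW running every hour of the year

def annual_cost_usd(price_per_mwh: float, load_gw: float = 1.0) -> float:
    """Annual energy cost of a constant load at a flat $/MWh price."""
    return price_per_mwh * load_gw * MWH_PER_GW_YEAR

SOLAR_USD_PER_MWH = 26   # Goldman Sachs figure quoted above
GAS_CC_USD_PER_MWH = 37  # combined-cycle gas, before carbon capture

gap = annual_cost_usd(GAS_CC_USD_PER_MWH) - annual_cost_usd(SOLAR_USD_PER_MWH)
print(f"~${gap / 1e6:.0f}M per year for every GW of constant load")  # ~$96M
```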

And let's not forget geothermal: A Rhodium Group study found geothermal could meet up to 64% of expected AI data center demand growth by the early 2030s, or potentially 100% if data centers locate near optimal geothermal resources. Google already has a power purchase agreement (PPA) with Fervo Energy to power its Nevada datacenters with geothermal, while Meta signed a deal with Sage Geosystems.

Enhanced geothermal systems (EGS) can be completed in as little as 18 months and provide 24/7 baseload power with 90%+ capacity factors, and advanced geothermal could unlock 90 gigawatts of clean power in the US alone, using deeper drilling techniques borrowed from oil and gas companies. For example, Phoenix could add 3.8 gigawatts of data center capacity without building a single new conventional power plant by using geothermal. And behind-the-meter geothermal avoids grid connection delays that are plaguing other power projects, while reducing electricity costs by 31-45%.
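That 90%+ capacity factor is a big part of geothermal's appeal. Capacity factor is average output divided by maximum possible output, so the lower it is, the more nameplate capacity you have to build to deliver the same energy. A minimal sketch: the 25% solar figure below is an assumed ballpark for comparison, not a number from the studies above, and matching average output still doesn't cover the hour-by-hour gaps that batteries fill.

```python
def nameplate_needed_gw(avg_load_gw: float, capacity_factor: float) -> float:
    """Nameplate capacity whose average output equals a constant load."""
    if not 0.0 < capacity_factor <= 1.0:
        raise ValueError("capacity_factor must be in (0, 1]")
    return avg_load_gw / capacity_factor

sources = {
    "geothermal (EGS), 90%": 0.90,  # the 90%+ figure from the text
    "solar, assumed 25%": 0.25,     # hypothetical ballpark for comparison
}
for name, cf in sources.items():
    print(f"{name}: {nameplate_needed_gw(1.0, cf):.2f} GW per 1 GW of average load")
# geothermal (EGS), 90%: 1.11 GW per 1 GW of average load
# solar, assumed 25%: 4.00 GW per 1 GW of average load
```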

There's just one problem:

Getting all this power hooked up. Berkeley Lab found roughly 2.6 terawatts (about 2,600 GW) of total generation and storage capacity awaiting grid connection, over 95% of which was zero-carbon resources like solar, wind, and battery storage. This is why you might have seen multiple executive orders (9 so far, according to Claude) related to permitting new energy projects.

Why did we need 9 executive orders on this? Because establishing AI dominance required overhauling multiple regulatory agencies and several aspects of the problem: 5 orders tackled AI development and deployment (removing regulations, streamlining data center permitting, promoting exports, and advancing education), while 4 orders reformed nuclear power (NRC licensing, DOE testing, fuel supply, and national security deployment) to provide the massive baseload power these AI systems demand.

Looking further out, these changes are likely to benefit small modular nuclear reactors (SMRs), which could help with power, too. SMRs, in theory, are smaller and cheaper than traditional nuclear power plants, and therefore could be quicker to scale up. There are a lot of SMR deals in the pipeline, too: Google signed an agreement with Kairos Power for multiple SMRs, with the first launching by 2030 and more added through 2035, supplying up to 500 MW to the US grid. Amazon invested $500 million in X-energy and is partnering with Energy Northwest to develop four SMRs, starting with 320 MW and potentially expanding to 960 MW. Sam Altman also has his own SMR investment, a company called Oklo, which just broke ground in Idaho on its Aurora plant, a 75 MW sodium-cooled fast reactor designed to help power AI infrastructure. The project is part of the DOE's Reactor Pilot Program, created in response to Trump's executive orders, with the goal of demonstrating reactor criticality by July 4, 2026. So there's a lot going on in this space!

Needless to say, AI is going to need a lot of power... it's basically going to need all the power it can get... and so it will accept it from just about anywhere it can get it, as soon as it can get it. That includes traditional sources, and that includes pretty new ideas... and if we get a wave of more abundant power sources built out of that demand, it's most likely a net positive for society. After all, there are no high-income, low-energy countries... so if you build lots of new power sources, especially clean ones, that power will almost surely get used to increase productivity and benefit everybody in the long run.