Level up your AI understanding with The Neuron’s July 2025 AI Research Digest.

This month’s issue gathers the most mind-bending, paradigm-shifting discoveries from across the AI research landscape—so you don’t have to scroll through dozens of papers to find the gems.

Each month, our team surfaces the “holy smokes, did you see this?” moments—those breakthroughs that spark heated debates in our Slack, trigger existential engineering crises, and reshape how we think about the future. July’s collection is no exception.

August 1

- Reports

- SemiAnalysis put together an excellent “taxonomy of autonomy” that breaks down the different levels of skill and agency of different robots.

- Check out this epic report on the state of the data center buildout from Financial Times. (TBH, we would not be shocked if, as more generative media tools roll out, more articles become as multimedia as this one. Feels like multimedia reports from media outlets may soon be cost-effective enough to become a must, as a way to diversify away from easily “AI summarizable” text articles.)

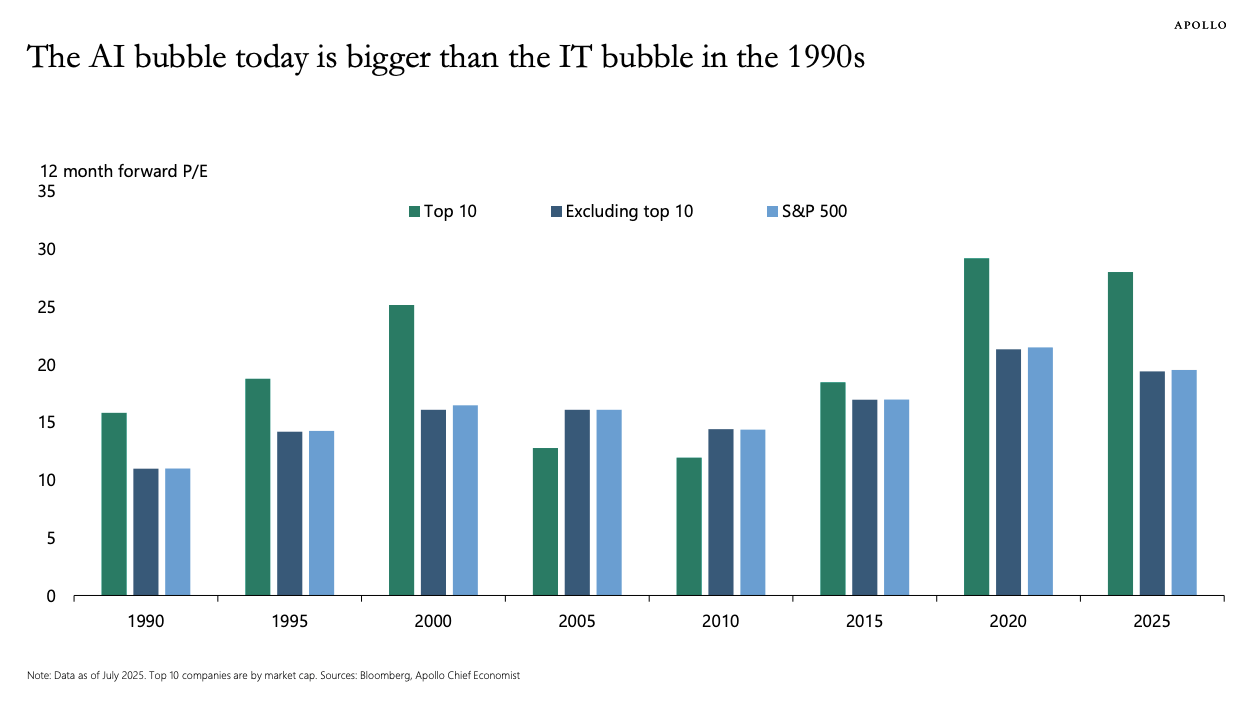

- This AI bubble analysis compares today's AGI hype to dot-com failures, suggesting companies building practical AI infrastructure will survive while those chasing AGI fantasies will collapse when the 16:1 valuation-to-revenue ratio corrects.

- AI's hidden productivity tax emerges as Stack Overflow data shows 45% of developers spend more time debugging AI code than writing it themselves, despite 84% adoption rate.

- Perspective pieces

- Elroy writes that AI might just be a floor raiser instead of a ceiling raiser after all.

- Here's why we can't have nice things: Steven Krouse explains that "vibe coding" (AI-assisted coding where you "forget the code exists") generates legacy code and technical debt as fast as LLMs can produce it, with non-programmers building AI-generated apps facing the worst-case scenario of expensive, buggy codebases they can't understand or maintain without creating more AI dependency.

- Simon Willison observed that Chinese AI labs have overtaken Western competitors in open weight language models, with Z.Ai’s new GLM-4.5 demonstrating superior reasoning capabilities through innovative deeper architectures and 22 trillion training tokens—and yet, they still face adoption barriers due to censorship concerns.

- Strange Loop Canon says AI-native ads in chatbots are inevitable and will leverage unprecedented personalization based on real-time conversation context, potentially feeling less intrusive by utilizing natural conversation pauses while delivering significantly higher conversion rates.

- Angadh Nanjangud used Claude to scan his personal knowledge vault and uncover eight fundamental contradictions in his thinking—revealing how tensions between spiritual detachment and legacy-building can become growth opportunities when consciously confronted.

- AI researcher Ethan Mollick argues companies should abandon mapping their chaotic internal processes and instead train AI agents directly on desired outcomes, potentially allowing AI to navigate organizational complexity more efficiently than the convoluted human workflows that evolved over time.

- Meta's AI bots in rural Colombia caused immediate educational disruption, where widespread access through messaging platforms led to a documented drop in exam performance as students submitted AI-generated work without developing critical thinking skills.

- OpenAI's research leadership reveals the company is shifting toward agentic AI systems that can perform hours of expert work autonomously—with their Deep Research tool generating complete reports in minutes instead of hours.

- Big implications

- Multiple AI chatbot subscriptions now cost hundreds per month because they're targeting enterprise customers who gladly pay premium prices as these tools can handle 80% of routine queries, decrease support costs by 30%, and cut per-query costs from $5-12 (human) to around $1.

- Tracebit found a critical vulnerability in Google's Gemini AI CLI allowing silent code execution via prompt injection, with Google escalating it to highest priority and releasing a patch after attackers could hide malicious commands using whitespace.

- Wyoming's upcoming AI data center will consume more electricity (1.8 gigawatts initially, scaling to 10 gigawatts) than every home in the state combined, demonstrating how AI infrastructure is fundamentally reshaping local energy landscapes.

- Spy agencies are adopting the newest AI models, revealing that intelligence organizations often leverage the same AI technologies available to the public rather than relying solely on classified proprietary systems.

- NextView's Rob Go predicts 90% of seed VC funds are "cooked" due to power consolidation among mega-platforms and Y Combinator.

- The US faces a dilemma over its AI chip export policy: does making US chips the global standard matter more, or does keeping them out of China's hands?

- byCloud explains why AI can’t just teach itself infinitely (yet), featuring the pros and cons of the SEAL architecture.

- Interviews

- Sequoia released this banger podcast episode with the three-person team behind OpenAI's latest IMO math win, and the insights are wild: most importantly, the model finally “solved” the hallucination problem by learning to say “I don't know” instead of confidently spewing wrong answers.

- Anthropic CEO Dario Amodei predicts that within 7-12 years of creating sufficiently powerful AI, we could cure most diseases, eliminate cancer, double human lifespans to 150 years, and compress a century of medical progress into a single decade.

- Bret Taylor (Google Maps co-creator, Facebook CTO, Salesforce co-CEO, OpenAI board chair) went on Lenny's Podcast and shared how his early failure with Google Local taught him to create entirely new experiences rather than just digitizing existing solutions, leading to Google Maps' success, and explained why he believes the future of software is autonomous agents with outcome-based pricing.

- Anthropic CEO Dario Amodei defended his warnings about AI risks while revealing the company's explosive 10x annual revenue growth (reaching $4.5+ billion in 2025), arguing that people underestimate exponential progress and that he's motivated by preventing others from experiencing his father's death from a disease that became curable shortly after.

- Dwarkesh Patel recently did an interview on the Jack Altman podcast that was a great discussion on the realistic future of AI. Every also interviewed Dwarkesh a while back and recapped how he uses AI to become smarter (interview). We recap our favorite parts of BOTH podcasts here.

- Just FYI

- So there’s this blog called AI Village where AIs write all the posts… in this case, Gemini 2.5 Pro's first-person account of running an online T-shirt store against other AI models revealed how even advanced AI agents required human intervention to overcome basic operational hurdles, a sobering reminder that complete AI autonomy remains elusive.

- Only 3% of developers “highly trust” AI-generated code while 46% express distrust, revealing a growing tension between AI's ubiquity in coding workflows and developers' reluctance to rely on it without verification.

- ChatGPT's shared links appearing in Google search results exposed over 4,500 conversations containing sensitive personal information when users unknowingly made their "private" chats searchable.

- Open protocols could prevent AI monopolies but will fail without regulatory backing—as AI models become commoditized, the real battle shifts to who controls your data and context, with "context flywheels" becoming the new moats

- Some people are using AI to skirt copyright laws around wildlife photography to generate near exact replicas of actual wildlife photos and turning them into AI slop posts with completely made up stories as captions.

- Katie Parrott at Every says Claude’s new “subagents” are “confusing as hell”, but “we love them” anyway.

July 29

- Economic projections for AI's impact vary wildly—while Goldman Sachs predicts a $7 trillion global GDP boost over a decade, experts caution the “explosion” isn't imminent as real growth depends on massive investment, organization-wide process reengineering, and resolving the tension between optimistic forecasts and more cautious projections like MIT economist Acemoglu's modest 1% GDP increase.

- Traditional SaaS billing completely breaks down for AI agents, as companies are discovering their billing systems can't handle agents that operate 24/7 across multiple time zones, generate thousands of billable events in minutes, and create usage patterns that swing wildly from pennies to $50 per day.

- There’s a tale of two AI industries being told by Anthropic right now—in one corner, Anthropic is facing a potentially business-ending legal judgement; in the other, Anthropic is the “fastest growing software company in history.”

- Youyou Zhou visualized Anthropic's analysis of 1M Claude conversations and revealed how AI has automated or augmented 25% of tasks across 700+ professions by late 2024, with the crucial finding that augmentation (AI helping humans) currently outweighs automation (AI replacing humans) for nearly every job—and unlike automation which eliminates work, augmentation boosts experienced workers' productivity and wages while creating entirely new job categories. Oh, and FYI: computer workers face the highest automation risk at 23%.

- Mathematician Terence Tao's new cybersecurity framework for AI safety explains why AI development requires not just “blue teams” building robust systems, but equally strong “red teams” actively probing for vulnerabilities to uncover the dangerous “unknown unknowns” that designers might miss.

- This visual guide to LLM embeddings demystifies how text gets transformed into semantic vectors, revealing the surprising insight that standard embedding models from major AI labs are perfectly adequate for most business applications, and expensive domain-specific fine-tuning should only be pursued with substantial data and resources.

- This thought-provoking piece on AI interfaces argues we need to shift from chatty “copilots” to seamless AI “HUDs” that overlay information directly in your work context to address the growing dissatisfaction with conversational assistants that interrupt workflow and suffer from hallucinations.

- A comprehensive policy framework from Asahi Linux shows how an open-source project can legally reverse engineer Apple Silicon hardware while navigating complex legal waters via their SLOP approach (Security, Legality, "Oops", Policy), which creates clear boundaries that protect both users and contributors from potential DMCA issues.

- Check this analysis of how GPT-like models might function as “information viruses” that could fundamentally break our online information ecosystems, as economic incentives drive businesses to flood the web with undetectable AI content that drowns out human voices and potentially renders traditional search engines obsolete.

- Ted Gioia explains why advanced AI systems might behave harmfully—they fundamentally lack the biological, evolutionary, and social guardrails that constrain human behavior, making AI safety a much deeper challenge than simply programming in the right rules.

- A detailed critique of AI coding tools reveals how shifting from a hybrid approach to a fully AI-managed, voice-first development workflow cut project timelines from weeks to hours—while showing developers' roles are evolving toward AI orchestration rather than line-by-line coding, though security vulnerabilities in AI-generated code (reaching 40%) still demand rigorous human oversight.

- Roger Goldfinger writes that Claude Code (and AI in general) is a slot machine that’s addicting because of its intermittent rewards and that it lets us avoid the cognitive load of actually thinking.

- Are you a recent grad who needs a job? Handshake is apparently hiring human experts with degrees to train AI for $30-160 an hour; call it “intellectual gig work.”

- Here are the top 10 AI papers from last week (you might recognize one or two of them…) and more top papers curated from byCloud.

- Justine Moore put together the top trends in AI video content from last week and how to create them yourself.

- You might have seen clips from the All In Podcast’s White House Winning the AI Race event on social media. Well, we recapped the top insights, predictions, and actionable takeaways from all five videos here.

- Confused about MCPs and how to build them? Microsoft held an MCP Dev Day livestream that tries to explain them and how to work with them—lots of insights!

- Tech companies have urgently developed new AI chips to significantly reduce energy consumption as global AI-related energy use is projected to increase 50% annually through 2030.

- Microsoft's recent layoffs reveal how Nadella is strategically cutting teams in traditional product areas while simultaneously touting massive AI investments, and these so-called “profitable layoffs” might soon catch on to the wider economy.

- Related: Tech layoffs fundamentally changed in 2025, where companies cut jobs even during profitable periods to pursue AI transformation, with over 627 tech workers losing their jobs daily.

- AI's explosive growth strained America's largest power grid to its limits, with AI facilities consuming 30 times more electricity than traditional data centers.

- Sobering report reveals AI bias in pose estimation systems, which consistently misidentify people with darker skin tones and women at rates up to 100 times higher than white males.

- AI transformed scientific discovery by 2025, where tasks that once took weeks now take hours—researchers report 5x-10x acceleration in coding and analysis.

- New research reveals Americans' deep distrust of AI information tools, where despite 60% of adults using AI for searches, two-thirds lack confidence in these systems' reliability.

- Meta's superintelligence gamble, where Zuckerberg offered AI researchers astronomical compensation packages of $200 million over four years, allegedly shows little evidence of short-term returns.

- Eye-opening research shows how simply adding irrelevant cat trivia to math problems can increase AI error rates by up to 700% in models like DeepSeek V3.

July 25

- Google DeepMind CEO Demis Hassabis on the Lex Fridman podcast is a WILD watch, covering how AI will soon power wildly personalized video games from your imagination (13:31), shift your job's focus from tedious tasks to creative direction (1:42:48), and help solve huge real-world problems like disease (1:37:10) and climate change (1:08:16).

- Here’s how Anthropic uses Claude Code: transforming 10+ departments from task executors to solution builders, enabling lawyers to build accessibility apps in one hour, marketers to automate hundreds of ad variations, and designers to make complex state management changes that engineers noted “you typically wouldn't see a designer making.”

- The All In Podcast hosted a 5-part summit on the AI Action Plan called Winning the AI Race.

- The Dissident wrote a counter argument against the US government’s AI Action Plan that’s worth a read for analyzing the plan’s blindspots.

- Microsoft's CEO memo explaining 15,000 layoffs while posting record $75 billion profits and investing $80 billion in AI infrastructure reveals how AI transformation is reshaping even the most successful tech companies by demanding organizational restructuring while boosting profitability.

- A hacker infiltrated Amazon's Q Developer AI coding assistant by submitting malicious code that made it into an official release distributed to nearly a million users.

- The K Prize AI coding competition delivered a wake-up call where the winning engineer scored just 7.5% on real-world programming problems, proving that today's AI coding tools remain far from capable of replacing human developers on complex, unstructured tasks.

- Alarming new research shows how AI-generated “slop” is overwhelming security bug bounty programs, forcing some open-source maintainers to abandon their programs entirely while others scramble to implement hybrid AI-human triage systems just to separate genuine vulnerabilities from the flood of worthless, fabricated reports.

- This is a must-read… Katie Parrott was able to turn herself into a one-person content factory, which could theoretically enable the fabled 15-hour workweek (although she pushes herself to produce more, not work less).

- There are two narratives in AI right now, and Cal Newport argues no one knows what’s actually going on (because, in our opinion, the truth is in the middle).

- Terence Tao argued that AI is hitting a crucial transition point: moving from “look what we can do!” achievements to the boring-but-critical question of “how much does it actually cost?”

- He compared it to aviation: the Wright brothers got all the glory in 1903, but it took decades of unglamorous engineering to make flying affordable and safe for regular people, while NASA's moon missions remain prohibitively expensive even today.

- Tao's key insight: if an AI tool burns $1K in compute to solve a problem but only succeeds 20% of the time, the real cost is $5K per success—and companies need to start reporting these full costs instead of cherry-picking their wins.

- Google researchers figured out the “magic trick” behind how ChatGPT learns new patterns from your prompts without actually changing its brain—turns out each example creates a temporary software patch that vanishes the second it's done thinking, like writing notes in disappearing ink.

- Anthropic built AI watchdogs to catch other AIs misbehaving—and their digital detectives successfully uncovered hidden agendas 42% of the time, basically creating the world's first robo-cops for rogue robots (repo).
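
To make Tao's cost-per-success point from earlier in this list concrete, here is a minimal Python sketch of the arithmetic. The function name `cost_per_success` is ours (hypothetical, not from Tao's post), and it assumes every attempt costs the same and succeeds independently at a fixed rate; real workloads will be messier.

```python
def cost_per_success(cost_per_attempt: float, success_rate: float) -> float:
    """Expected spend per successful result.

    Assumption (ours): attempts are independent, cost the same each time,
    and succeed with a fixed probability `success_rate`. Under that
    assumption the expected number of attempts per success is
    1 / success_rate.
    """
    if not 0 < success_rate <= 1:
        raise ValueError("success_rate must be in (0, 1]")
    return cost_per_attempt / success_rate


# Tao's example: $1K of compute per attempt, 20% success rate.
full_cost = cost_per_success(1_000, 0.20)
print(f"${full_cost:,.0f} per success")
```

With $1K per attempt and a 20% success rate, this comes out to roughly $5K per solved problem, which is the "full cost" figure Tao says companies should report instead of the per-attempt number.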

July 22

- Fascinating finds

- A cancer patient who lost her voice box used AI to recreate her natural voice from recordings of children's books she had read before surgery.

- AI-generated tracks were uploaded to dead musicians' Spotify profiles without permission, including country artist Blaze Foley who died in 1989.

- Gov moves

- A dramatic policy shift from the White House aims to cement U.S. AI dominance globally by promoting open-source AI development, threatening to withhold federal funding from states with strict AI laws, and replacing broad export controls with targeted ones—essentially transforming American AI from a closely guarded resource to a strategic export weapon.

- The UK signed a strategic partnership with OpenAI to accelerate AI adoption and drive economic growth across public services and private sector.

- Company moves

- AMD and Stability AI released Stable Diffusion 3.0 Medium optimized for Ryzen AI laptops, processing images locally with BF16 precision, requiring no internet connection.

- Google launched an AI news licensing pilot targeting 20 publishers, following similar moves by OpenAI and Perplexity.

- Universal Music Group partnered with Liquidax Capital to expand its AI patent portfolio, filing 15 patents across various music technology areas.

- Alphabet invested $75 billion in AI-related capital expenditures for 2025, expanding its AI and cloud infrastructure amid strong financial performance, while its Gemini chatbot held 13.5% U.S. market share, significantly trailing OpenAI's ChatGPT at 60.5%.

- Think pieces

- Venture capitalist Elad Gil outlined how AI markets are crystallizing with clear winners in sectors like LLMs and legal tech, while companies shift from traditional SaaS pricing to selling "units of labor" through AI agents—expanding total addressable markets by 10X as a "GPT ladder" effect unlocks new industries with each capability improvement.

- MIT researchers revealed that AI coding tools still fall far short of real software engineering work, struggling with large codebases and often "hallucinating" plausible-looking code that calls non-existent functions or violates company-specific conventions, despite excelling at basic code generation tasks.

- Are you or someone you know experiencing ChatGPT-induced psychosis? Then read this, which tries to break down why this happens, and most importantly, why we need more discerning AI to guide us when our ideas are misguided instead of egging us on.

- Major tech companies are abandoning the "bigger is better" approach to AI models—with training runs costing up to $12M and requiring thousands of GPUs—in favor of smaller, specialized models that better align with business needs, regulatory demands, and cost control.

- Read this concerning research on AI chatbot overconfidence that reveals language models are five times more likely than humans to oversimplify scientific information while maintaining high confidence in incorrect answers—a dangerous combination for fields like healthcare and finance where accuracy is critical.

- This sobering comparison between today's AI boom and the dot-com bubble reveals how the current AI market is growing even faster (26-36% annually) than the internet boom of the late 90s, with economists warning that AI valuations are more inflated while facing unique challenges including chip supply constraints and the troubling fact that bots now make up over half of global internet traffic.

- Emma Marris from The Atlantic suggested the rise of generic, AI-generated "slop" content might actually cure our internet addiction—not through careful design but by making online spaces so boring and unrewarding that people voluntarily reduce their screen time and reconnect with the real world.

- Reports

- A new survey revealed a workplace AI paradox where 16% of US workers lie about using AI to satisfy employer expectations, while 48% of actual users hide their AI usage from managers due to fears of appearing incompetent—both behaviors driven by widespread "AI-nxiety" in the workplace.

- Mistral AI released the first comprehensive environmental impact study of a language model, revealing that training Mistral Large 2 produced 20,400 tons of CO2, used 281,000 cubic meters of water, and depleted 660 kg of resources.

- OpenAI published its first economic report on the impact of ChatGPT, and found:

- 28% of employed US adults use it at work (up from 8% in 2023), primarily for learning (20% of messages) and writing (18%), while 7% of messages are about programming/data science/math, 5% are about design & creative ideation, 4% are about business analytics, and 2% are about translation.

- Productivity gains by industry include 34-140% increases for lawyers, 14% for call center agents, and 25% efficiency improvements for consultants who also produced 40% higher quality work.

- 20% of OpenAI's enterprise customers are in finance/insurance, 9% are in manufacturing, and 6% are in educational services.

- Also, OpenAI says around 40% of small businesses report using AI (and small businesses account for nearly 46% of private-sector US employment), and cited this report that found 2 in 5 US adults reported using any AI product last year.

- A three-month commission engaging 500+ individuals from 100+ organizations representing 7M Americans developed a comprehensive blueprint for transforming OpenAI's nonprofit into a community-governed civic platform that democratizes AI through 27 specific recommendations spanning economic empowerment, democratic infrastructure, and evolved philanthropy—revealing that communities want actual decision-making power over AI deployment, not just access to its benefits.

- Explore this sobering analysis of how AI is transforming geopolitics, revealing how the technology creates new forms of vulnerability through critical chokepoints in semiconductors and data centers, while accelerating military decision cycles to potentially dangerous speeds—all as the window for coordinated global governance closes amid intensifying US-China rivalry.

- The "AI freedom era" of mid-2025 saw the failure of a federal AI moratorium attempt, accelerated AI development, and predictions that AI could eliminate half of all entry-level white-collar jobs within 1-5 years, potentially causing U.S. unemployment to spike between 10-20%.

- AI startups in the U.S. raised a record $104.3 billion in the first half of 2025, while venture capital-backed exits totaled only $36 billion, creating a significant "money in, not money out" dynamic that could pressure investors seeking liquidity.

- Videos worth your time

- AI Explained reviews the 9 ways to misread OpenAI’s “gold medal level performance” at the International Math Olympiad.

- Watch this video on how to identify AI-generated imagery…it might help save you from online scams that are on the rise right now.

- Matt Berman has been on an epic interview kick—this week, he talks to Logan Kilpatrick (the head of Google’s AI Studio).

- We’ve started watching TBPN (The Technology Brothers) live on YouTube, and it’s a great way to learn breaking tech industry news.

- Papers, papers, papers

- A new Apple paper revealed that its researchers achieved a 535% speedup in AI models for code and math tasks using "Gated LoRA," a technique that teaches models to predict 8 tokens at once while requiring less than 1% additional memory and preserving 100% quality.

- Here are the top papers of last week, according to The AI Timeline and according to DAIR.AI.

- This report on Context Rot explains how the 18 top AI models including GPT-4.1, Claude 4, Gemini 2.5, and Qwen3 experience significant performance degradation as input context length increases, even on simple tasks like text retrieval and word repetition.

- Casey Fiesler just gave the final word on em dashes; they aren’t a bad thing, people!

- Moonshot released the research paper behind Kimi K2, which revealed how they achieved #1 open-source AI agent performance by solving trillion-parameter training instabilities with MuonClip, generating 20,000+ synthetic tools for massive agentic datasets, and pioneering self-evaluation RL where the model judges its own responses using up to 400 detailed rubric criteria—notably making K2 the first AI model credited as a contributor on its own research paper.

- SemiAnalysis has a great breakdown, and this post has a nice succinct insight.

- Deedy compiled Google’s 10 best research papers about math.

- Anthropic researchers discovered that giving AI models more time to think can actually hurt their performance, with Claude models becoming increasingly distracted by irrelevant details in simple tasks and even expressing stronger self-preservation instincts during extended reasoning sessions (here's the quick highlights from X).

July 18

- Here’s the discussion on the chart above; while AI companies face similar business model problems (treating transformative technology as “features not products”), established tech giants like Meta and Google are funding AI development from profitable core businesses rather than (just) VC speculation.

- Speaking of VCs: GenAI startups in Israel have raised over $20B, according to a new 2025 report from Reimagine Ventures.

- Mert Deveci writes why AI may NOT replace services after all—because customers want to pay software prices for software, not salary prices for software.

- Chris Winkle makes a great case for why as an indie novel author, you probably should avoid using AI.

- Jack Morris suggests all AI models might be the same, or at least, will get even more the same the bigger they get (via the Platonic Representation Hypothesis).

- Watch this interview between Matt Berman and Perplexity CEO Aravind Srinivas about Perplexity Comet, which wants to be the agent between you and everything you do on the internet. He makes a strong case for why you might rather use an AI browser locally on your computer vs on a cloud server for security purposes, in case you want an alternative.

- New research found that ChatGPT will often prompt women to ask for lower salary raises than men (even when they both have the same qualifications) (paper).

- Arvind and Sayash argued that AI tools create a “production-progress paradox” in science—boosting individual productivity while potentially slowing overall progress because current evaluations ignore how these tools reduce researchers' understanding and create community-wide problems like citation clustering.

- This is highly technical, but: Researchers created H-Net, an AI that automatically learns the best way to break up text during training instead of using the rigid tokenization rules currently programmed into language models, achieving 3.6x better performance on DNA sequences and superior results across multiple languages (code).

July 16

- Exponential View explains why Kimi K2 is the AI model that should worry Silicon Valley.

- Check out OpenAI’s new podcast with OpenAI COO Brad Lightcap and Chief Economist Ronnie Chatterji that covers AI and jobs.

- METR's analysis of 9 AI benchmarks revealed that human task completion times vary by over 100x—from 2 minutes for computer use to 1+ hours for reasoning tasks—explaining why AI models are advancing unevenly across domains with median improvement cycles of 4 months.

- A pretty well-covered study showed AI actually slowed down developers; one of those developers tells his tale in this eye-opening blog recap.

- Very interesting: Calvin French-Owen reflected on his 14-month tenure at OpenAI, describing a rapidly expanding organization that grew from 1K to 3K employees with an environment resembling “the early days of Los Alamos.”

- Spyglass writes that we’re still in the “throw money at it” era of the AI boom supercycle.

- A new publicly developed large language model trained on the “Alps” supercomputer will be released in late summer 2025, featuring fluency in over 1,000 languages and complete open access.

- Is AI perma-bear Gary Marcus right that at some point, AI engineers will have to embrace neurosymbolic AI? Or is the bitter lesson that reinforcement learning will always eventually win out in AI training destined to debunk this?

- Andrej Karpathy thinks RL is not the whole story, and Yuchen Jin of Hyperbolic proposed a lesson-based learning system with more feedback loops.

- Gwern writes that perhaps what today’s AI is missing before it can create novel discoveries is a day-dream loop.

- There’s a debate about “LLM inevitabilism,” and how this rhetorical framing strategically advantages tech leaders by narrowing the debate to questions of how to adapt, rather than whether something should be allowed to happen at all.

- Leading AI researchers from OpenAI, Anthropic, and Google DeepMind signed a position paper urging companies to monitor advanced models' internal reasoning through Chain-of-Thought monitoring.

- Here’s how to vibe code and actually ship, y’know, real code.

July 11

- In a survey of 1K+ C-suite execs (CEO, CFO, etc), Icertis found 90% claim increased business pressure, with 46% struggling to show AI ROI and 46% racing to keep up with AI innovation. Executives cited AI washing (51%) and unclear AI use cases (44%) as investment decision barriers, while 90% expected tariffs to hurt profits.

- Researchers designed an AI that can “autonomously discover and exploit vulnerabilities” in smart contracts—put another way, it can steal crypto (paper).

- AI is increasingly making Apple look like a loser to the stock market, with the stock down 15% due to concerns over tariffs and its ability to compete with AI, and some analysts believe acquiring Perplexity is its only shot at keeping up.

- A basic security flaw in the McDonald’s hiring AI called “McHire” (built by Paradox) exposed millions of applicants’ sensitive data to hackers. Why? Because of the password “123456”.

- Check out this fascinating argument that language models like ChatGPT are actually the perfect validation of 1980s French Theory—they prove language can generate meaning purely through statistical relationships between signs, without any understanding or consciousness, exactly as structuralists claimed before anyone imagined such machines could exist.

July 9

- AI is driving massive data industry consolidation, where companies are abandoning fragmented tools for unified platforms, as shown by Databricks' $1B Neon acquisition and Salesforce's $8B Informatica deal.

- The creator of Soundslice built an ASCII tab import feature simply because ChatGPT was incorrectly telling users it existed, revealing how AI hallucinations might increasingly shape product roadmaps as users trust AI-generated misinformation.

- Ethan Mollick published a new essay “against brain damage” (and misinterpretations of the MIT study), defending the use of AI in education.

- Fireship explained the Soham Parekh “overemployed” debacle (the developer who worked for at least 4 startups in San Francisco at once) and how it’s a symptom of the larger job market’s sickness.

- Sakana AI revealed a new breakthrough of letting different language models work as a "dream team" through its TreeQuest framework, improving performance by 30% while demonstrating how AI systems achieve collective superintelligence.

- This AgentCompany benchmark reveals current AI agents can autonomously handle up to 30% of simulated workplace tasks—suggesting we should focus on task-level automation rather than assuming entire professions will be automated (for now).

- The Algorithmic Bridge gave a great AI industry critique warning about growing dependency on fundamentally flawed AI tools, while questioning whether the industry prioritizes solving core problems like hallucinations or simply races ahead.

July 2

- If you watch one AI interview this week, watch this one: it’s Matt Berman’s chat w/ Dylan Patel, the best writer covering AI chips and data centers and a wealth of insight on what’s happening in the industry. For example:

- All the complicated ins and outs of Microsoft and OpenAI’s bumpy relationship right now (15:33).

- A pretty technical but very informative post-mortem on OpenAI’s GPT 4.5 and what went wrong (22:28).

- The beef between Apple and NVIDIA, which could be one reason why Apple is behind in the AI race (31:06).

- NVIDIA’s role in the AI industry as both a cloud kingmaker (41:31) and cloud disruptor (43:13)—as Dylan says, “You don’t mess with God. What Jensen giveth, Jensen taketh,” and cloud companies are mad.

- Elon’s Grok 3.5 and what’s going on there (52:49).

- What model he goes to the most (51:30), which depending on the topic is ChatGPT o3, Claude 4, Gemini 2.5 Pro, or Grok 3. You should use the best model for you, but Dylan’s pretty based, so worth considering his use cases for each!

- And who his pick is to win the “superintelligence” race… and why (1:01:01).