Welcome, humans.

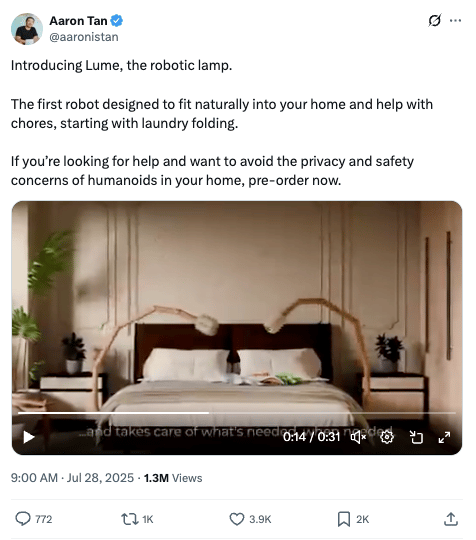

Today, in unexpected robots… a robotic lamp that, for some reason, also functions as mechanical arms that make your bed for you??

Because nothing says “sweet dreams” like waking up at 2 AM to two robot arms hovering over your bed… at least they’ll tuck you in first??

Here’s what you need to know about AI today:

- A tiny new AI model can reason 100x faster than larger models.

- Z.ai launched an AI model cheaper than DeepSeek.

- Samsung and Tesla signed a $16B+ deal for 2nm AI chips.

- Amazon's AI coding assistant Q was hacked, endangering 1M devs.

A Tiny AI Just Schooled Top Models in Reasoning Using a "Brain-Like" Architecture

A new AI model from Singapore-based Sapient Intelligence is challenging the “bigger is better” mantra dominating today’s AI model development, and it’s doing it by copying a trick from the human brain (or at least, how we THINK the brain works).

Meet the Hierarchical Reasoning Model (HRM), a tiny 27M-parameter design that solves complex reasoning puzzles that leave today's massive AI models completely stumped.

The problem with models like ChatGPT, according to the researchers, is that they're architecturally “shallow.” They rely on Chain-of-Thought (CoT) prompting—basically, talking themselves through a problem step-by-step—as a crutch. But with CoT, one wrong turn can derail the whole process.
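
To put a rough number on that "one wrong turn" problem, here's a back-of-the-envelope sketch. It assumes every reasoning step succeeds independently with the same probability, which is our simplification and not something the researchers claim; the 95% figure and the function name are made up for illustration.

```python
def chain_success_probability(step_accuracy, num_steps):
    """Chance that every step in a reasoning chain is right.

    Assumes steps succeed independently with the same accuracy
    (a simplifying assumption for illustration only).
    """
    return step_accuracy ** num_steps


# Hypothetical 95% per-step accuracy: watch the odds shrink as chains get longer.
for steps in (1, 5, 20, 50):
    odds = chain_success_probability(0.95, steps)
    print(f"{steps:>2} steps -> {odds:.1%} chance of a fully correct chain")
```

Under those assumptions, the longer the chain, the more a small per-step error rate compounds.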

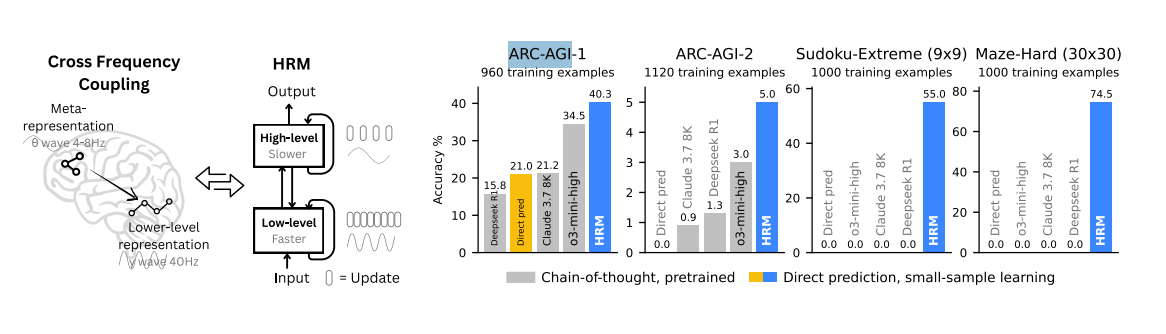

HRM takes a different approach. It copies the brain's hierarchical structure with two interconnected modules:

- A high-level “planner” that thinks slowly and strategically (like when you plan your next chess move).

- A low-level “worker” that executes rapid calculations (like when you instantly recognize a face).

Think of it like having a brilliant manager directing a lightning-fast assistant. This structure allows HRM to “think” deeply about a problem in a single forward pass, learning to reason from just a handful of examples without pre-training on the entire internet.
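
If you want to see the manager-and-assistant idea in code, here's a toy sketch of a two-speed loop: the "worker" updates several times for every single "planner" update. To be clear, this is not Sapient's implementation. The function names, the fixed weights, and the step counts are all invented for illustration, and nothing here is trained.

```python
import math


def low_level_step(worker, plan, x):
    # Fast "worker" update: blends its own state, the current plan, and the input.
    # The weights 0.5 / 0.3 / 0.2 are arbitrary illustrative constants.
    return [math.tanh(0.5 * w + 0.3 * p + 0.2 * xi)
            for w, p, xi in zip(worker, plan, x)]


def high_level_step(plan, worker):
    # Slow "planner" update: revises the plan using the worker's latest state.
    return [math.tanh(0.7 * p + 0.3 * w) for p, w in zip(plan, worker)]


def two_timescale_forward(x, cycles=3, fast_steps=4):
    """Run `fast_steps` worker updates per planner update, for `cycles` rounds."""
    plan = [0.0] * len(x)
    worker = [0.0] * len(x)
    planner_updates = 0
    worker_updates = 0
    for _ in range(cycles):
        for _ in range(fast_steps):
            worker = low_level_step(worker, plan, x)
            worker_updates += 1
        plan = high_level_step(plan, worker)
        planner_updates += 1
    return plan, planner_updates, worker_updates


plan, n_slow, n_fast = two_timescale_forward([1.0, -0.5, 0.25])
print(f"planner updates: {n_slow}, worker updates: {n_fast}")
```

The point of the nesting is just the rhythm: lots of quick low-level work between each slower, higher-level revision.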

The results are preeeetty jaw-dropping. On the ARC-AGI benchmark (basically an IQ test for AI), HRM went head-to-head with top-tier models and dominated:

- Claude 3.7 (with 8K context): 21.2%

- o3-mini-high (OpenAI): 34.5%

- HRM (27M parameters): 40.3% 🎯

On Sudoku-Extreme puzzles, HRM solved 55% of them. Claude 3.7 and OpenAI’s o3-mini-high? They scored 0%. On 30x30 mazes, HRM found the optimal path 74.5% of the time. The others? 0% again.

Now, we realize these models (Claude 3.7 and o3-mini-high) aren't the latest frontier models, but HRM is also tiny in comparison. Do you see where this is going? GPT-1, the O.G. GPT model, had 117 million parameters… that’s more than 4x the size of HRM.

In fact: HRM’s design is so lean that one of its creators, Guan Wang, said it can be trained to solve pro-level Sudoku in just two GPU hours.

Why this matters: HRM proves that architecture matters more than size. The implications are massive:

- Cheaper AI deployment (better AI that runs on a single GPU).

- Faster training (hours instead of months).

- Better reasoning without expensive compute.

- Open-source (you can grab the code here and train your own!).

While skeptics argue HRM’s skills are too narrow, its early-stage performance suggests that brain-inspired designs could unlock powerful reasoning at a fraction of the cost.

And HRM is just one of several ideas like this. There’s also Sakana’s continuous thought machines method (GitHub), 1-bit LLM “bitnets” (GitHub), and even diffusion models, which Google is experimenting with.

At their current stages, all of these architectures are impressive in theory, but still need to be scaled out of the “shiny object syndrome” zone where they live today.

But TBH, unless an incredibly well-funded competitor flies out of stealth with untold billies, the next “holy stinking wow” AI model from someone outside of the major players (US or Chinese) will probably be built on some new architecture like this.

This could be a glimpse into a future where advanced AI isn't confined to massive data centers, but finally runs efficiently on a local machine.

FROM OUR PARTNERS

Build AI products that work in production

When you can't predict which AI features will land with users, you need tools that let you ship with confidence.

OpenAI, Character AI, and Notion get it. AI launches are inherently uncertain. So they deploy behind feature flags, test every model change, and measure real user impact. All with Statsig.

Here's what you can build with Statsig:

- Deploy AI models with rollback capabilities.

- A/B test prompts against user behavior.

- Run experiments to drive feature adoption.

- Watch session recordings of users in action.

Try our experimentation, feature flags, and analytics platform. 2 million events. Unlimited seats.

Prompt Tip of the Day

Rohan Paul shared the leaked Claude Code System Prompt AND the System Prompt Gemini 2.5 Pro used to earn a gold medal performance at the International Mathematical Olympiad.

You can mine all kinds of prompt tips from reading through both of these (or even feeding them into your AI and asking it to pull out all the actionable prompt tips).

Here’s one that stood out to us: Force AI to admit when it doesn't know something. Their prompt literally says: “If you cannot find a complete solution, you must not guess.”

Try this upgraded prompt format:

"Can you fully answer this question? Summary: [your main findings] Details: [step-by-step with explanations] Gaps: [anything you're unsure about]"

They use a second prompt to verify the first answer: like having ChatGPT grade its own homework. After receiving your answer, ask: “Now find any errors in that response.”
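
If you'd rather script this two-step flow than paste it by hand, here's a minimal sketch. It assumes you have some function that takes a list of chat messages and returns the model's reply as text; `ask`, `fake_ask`, and the helper names below are hypothetical stand-ins, not any real library's API, and where the question sits inside the template is our own choice.

```python
VERIFY_PROMPT = "Now find any errors in that response."


def build_answer_prompt(question):
    # Structured format from the tip above, plus the "don't guess" rule.
    return (
        f"Can you fully answer this question? {question}\n"
        "Summary: [your main findings]\n"
        "Details: [step-by-step with explanations]\n"
        "Gaps: [anything you're unsure about]\n"
        "If you cannot find a complete solution, you must not guess."
    )


def ask_then_verify(question, ask):
    """Get an answer, then ask the model to critique that answer.

    `ask` is a hypothetical callable: list of {"role", "content"} dicts in,
    reply text out. Swap in whatever chat client you actually use.
    """
    messages = [{"role": "user", "content": build_answer_prompt(question)}]
    answer = ask(messages)
    messages.append({"role": "assistant", "content": answer})
    messages.append({"role": "user", "content": VERIFY_PROMPT})
    critique = ask(messages)
    return answer, critique


def fake_ask(messages):
    # Stand-in so the sketch runs without a real model.
    return f"(reply to message {len(messages)})"


answer, critique = ask_then_verify("Is 221 a prime number?", fake_ask)
print(answer)
print(critique)
```

Keeping the first answer in the message history matters here: the second prompt only works if the model can see what it's grading.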

Treats To Try.

*Asterisk = from our partners. Advertise in The Neuron here.

- *Flow is the AI voice keyboard that turns speech into polished text in any iPhone app—Slack, iMessage, Gmail, Notion—5× faster than typing. Free plan with weekly word cap—try it here.

- GLM 4.5 gives you full open source access to download, modify, and commercially use GPT-4-level AI that handles your complex reasoning, coding, and agent tasks like building full-stack web apps from scratch (code)—try it here.

- Microsoft Edge launched Copilot Mode, which effectively turns it into an AI browser with multi-tab awareness, voice navigation, task automation, and a dynamic help pane.

- Julius AI turns your Excel files into charts when you ask questions like “show me customer churn trends” and now has data connectors (raised $10M).

- Shortcut handles complex Excel work for you, from building financial models to filling out spreadsheets, and even shows you before-and-after changes so you can review what it did.

- Seed LiveInterpret 2.0 instantly translates your voice conversations while keeping your voice.

- Qwen-MT translates between 92 languages with custom terminology control, letting you customize how specific terms are translated and set domain-specific styles.

- Wan 2.2 5B generates videos from your text descriptions or turns your images into videos at 720P resolution and 24fps, running on consumer GPUs like the RTX 4090 (code). Try it here.

See our top 51 AI Tools for Business here!

Around the Horn.

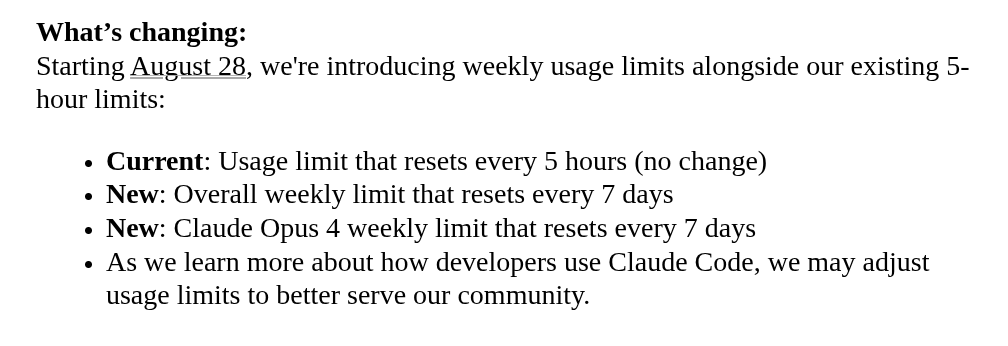

Make sure to send some #ThoughtsAndPrayers to all your vibe-coding friends this week, as Anthropic just implemented some new weekly limits for Claude Code.

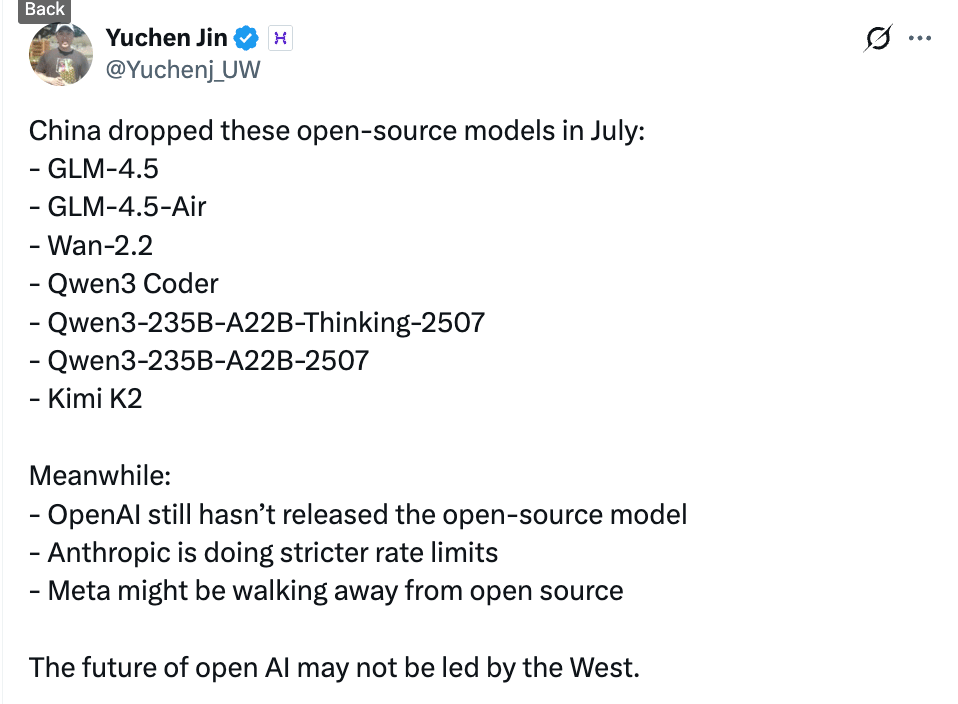

- China is openly embracing AI tools in universities with a 99% adoption rate. The government also published an AI Global Governance Action Plan calling for international cooperation on development, safety standards, infrastructure sharing, and governance frameworks, while emphasizing respect for sovereignty, inclusive development, and sustainable AI practices.

- Samsung and Tesla signed a $16B+ deal to produce advanced 2nm (cutting edge quality) AI chips at Samsung's Texas plant through 2033 for Tesla's self-driving vehicles and robots.

- Amazon's AI coding assistant Q was compromised, potentially endangering nearly 1M developers after a hacker infiltrated the GitHub repository.

- JPMorgan deployed AI tools to 200K employees, projecting $1.5-2.5B in annual business value while early adopters build competitive advantages.

- Z.ai released an AI model cheaper than DeepSeek (GLM-4.5, shared above), which is half DeepSeek's size and requires only eight Nvidia H20 chips to run.

- UC Berkeley, Stanford, and Databricks researchers created GEPA, an AI system that rewrites its own prompts; they claim GEPA makes the prompts 35x more efficient and 10% more accurate than existing methods.

FROM OUR PARTNERS

Live webinar on how to build AI agents for production

Building AI agents shouldn’t feel messy or uncertain. Join this live session to see how you can build, test, and deploy to production using Vellum’s UI builder and SDK. You don’t wanna miss this: Register here.

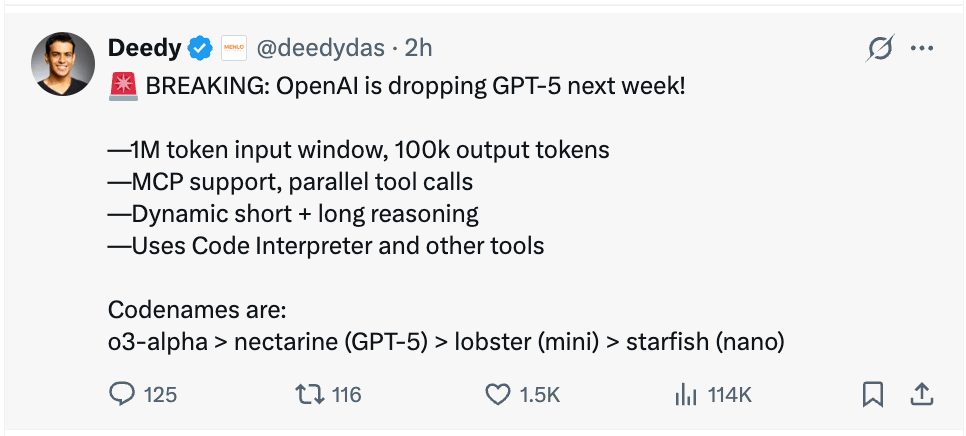

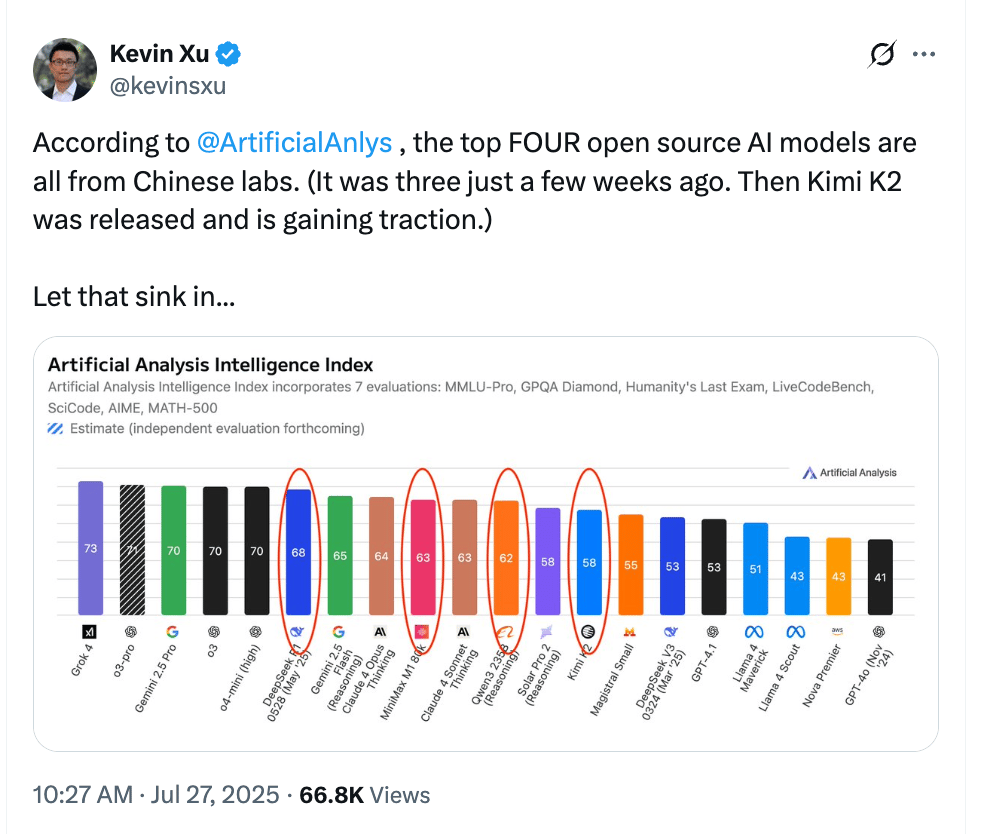

Tuesday Tweets

Tuesday is kind of our rotating wild-card section right now, so today we’re trying a round-up of tweets that capture the state of the industry.

A Cat's Commentary.
