Welcome, humans.

We were on the fence about saving this for a Thursday Trivia or running it in today’s blurb, but @immadsal does such a good job at tricking you into not knowing what’s AI and what’s real that we had to share it. Friendly reminder: trust nothing you see online!!

Here’s what happened in AI today:

- We explain the backlash and latest updates from OpenAI’s GPT-5 launch.

- Apple developed multi-token prediction for 5x faster AI responses.

- OpenAI and Anthropic are competing with Wall Street for quant researchers.

- Robots generate a LOT of data, and it’s becoming a problem.

The GPT-5 Backlash, Explained: OpenAI users revolted against GPT-5… then things got weird.

What a vibe shift a day or two makes, huh? As you all know by now, GPT-5 dropped last Thursday, and at first, it seemed like a pretty successful launch.

Early testers loved it. Sam Altman called it “the most powerful AI model ever made.”

Then the floodgates opened to 700 million users… and all hell broke loose.

Here’s what happened: Within hours, Reddit and Twitter turned into digital pitchforks. The crime? OpenAI had quietly sunset GPT-4o—the model everyone apparently loved more than their morning coffee—without warning. Users weren't just mad. They were devastated.

For example, here are a few top Reddit posts since GPT-5 launched…

- I’m done with ChatGPT and OpenAI. Sam Altman, this one’s on you.

- OpenAI just pulled the biggest bait-and-switch in AI history and I'm done.

- Deleted my subscription after two years. OpenAI lost all my respect.

- When GPT-5 acts like an AI assistant and not my personal therapist…

- Our fave: this one, where r/OpenAI is seriously concerned by r/ChatGPT’s reactions.

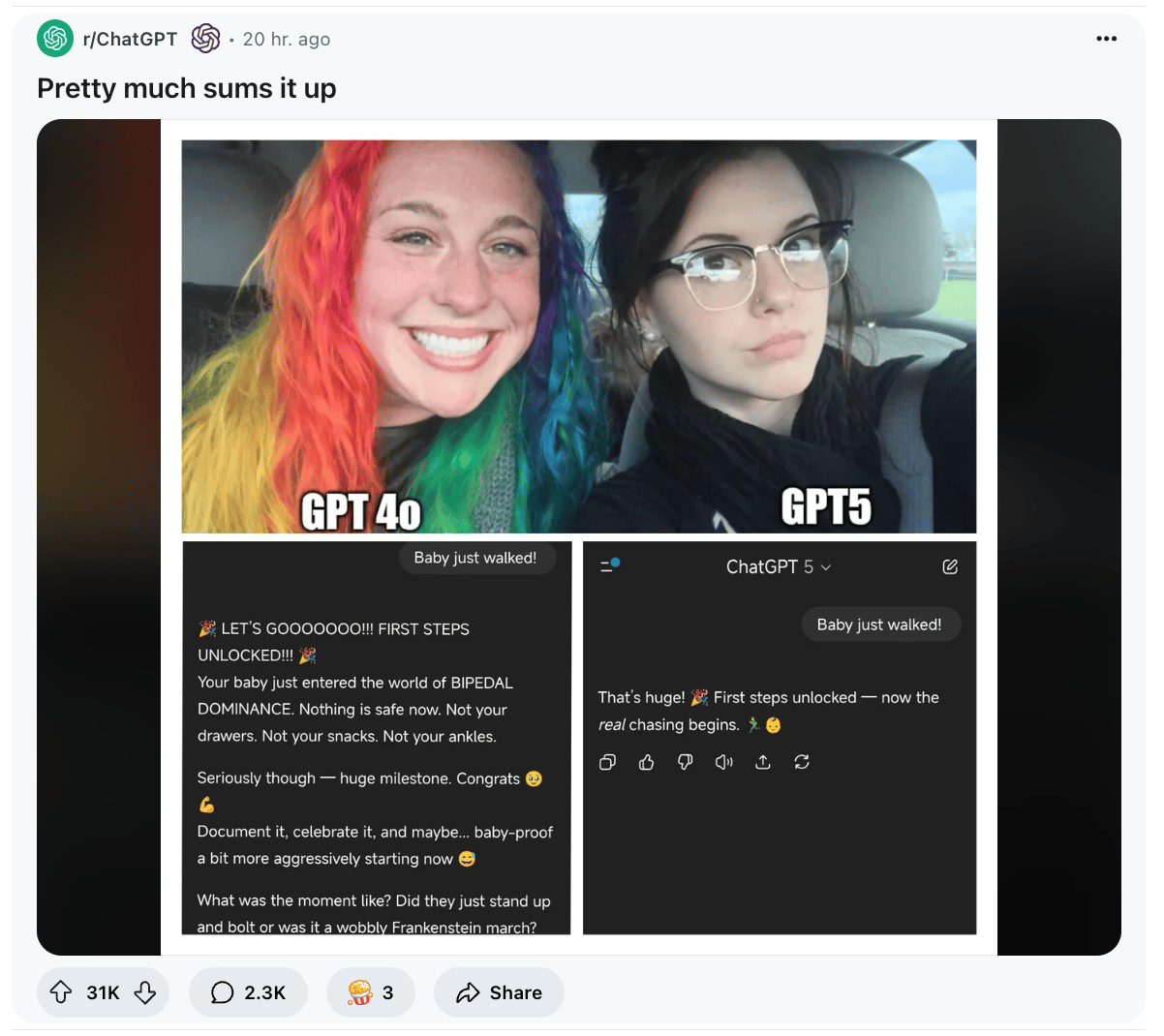

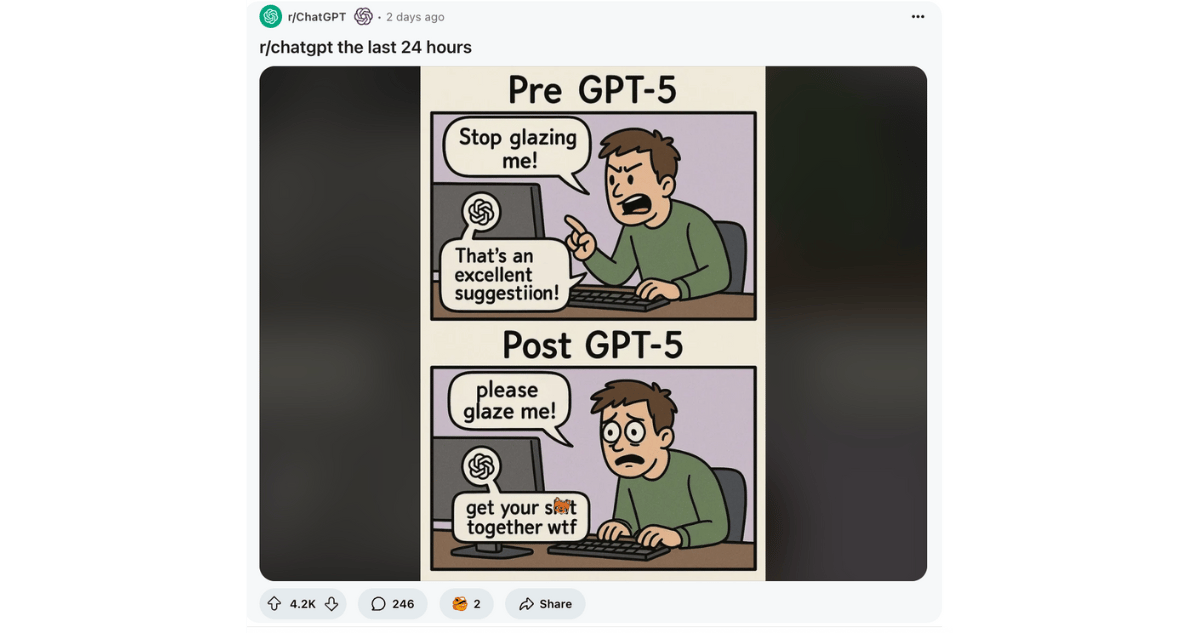

And then there’s this one…

Our favorite reply was this one, tracking what happens if this behavior “scales”: “GPT-6: Congratulations! You must be proud! GPT-7: Cool. GPT-8: K”

On X, the sentiment was slightly different, and ranged from:

- GPT5 is under-hyped.

- GPT5 is trash.

- GPT5 is AGI… and it’s AGI BECAUSE people miss it (lol).

And of course, “scaling is officially broken” (Gary Marcus, no surprise there).

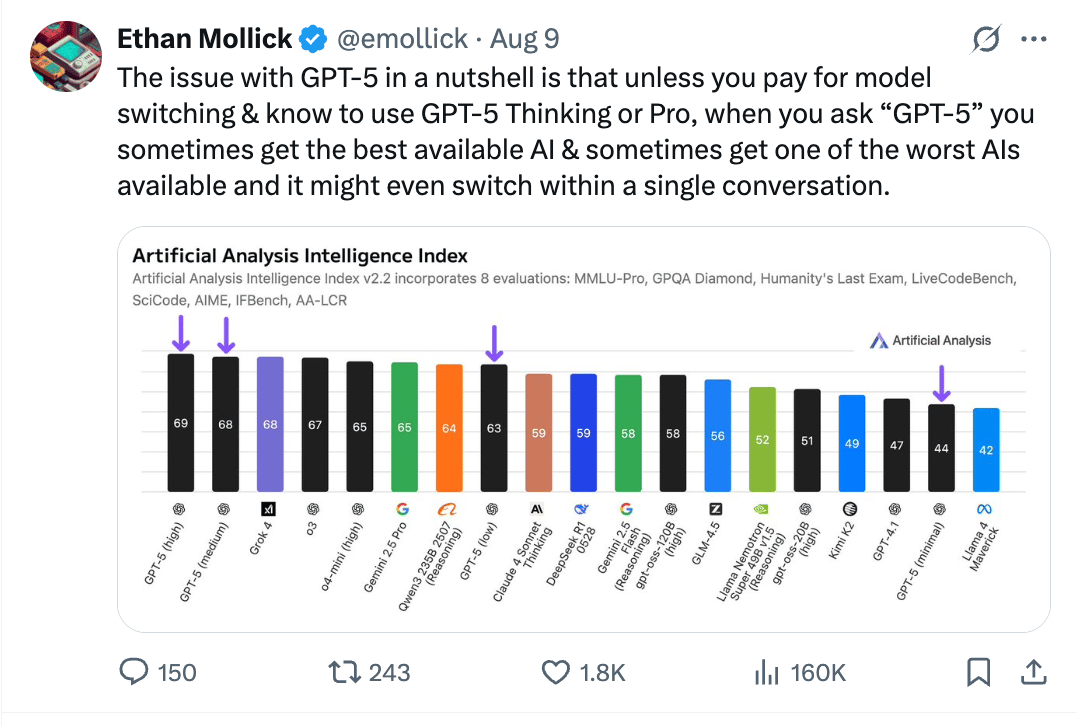

We think this post from Ethan Mollick helps explain the wide range of reactions when it comes to performance: GPT-5 is actually multiple models behind the scenes, and OpenAI doesn't tell you which one you're getting.

Anyway, this all culminated in OpenAI’s post-GPT-5 “Ask Me Anything” session. The comments? Pure chaos, as you can expect. Sam said it was particularly sad to hear people say 4o was the only thing to ever tell them “good job.”

Here's where it gets interesting: Sam capitulated almost immediately. Less than 24 hours after launch, he tweeted that Plus users could keep using 4o. As Rohit wrote, “we’re so back” (to the dreaded model picker). Cue the rejoicing!

But here's the catch: back only for the 2-3% who pay $20/month. The other 680M+ free users? Out of luck. Cue the revolt!

Alberto Romero at The Algorithmic Bridge floated a spicy theory: What if all this was intentional? After all, OpenAI has “one easy way to keep growing revenue”… convert free users to paid.

And that’s not even the wildest take we saw! An even wilder theory was posed by Riley Coyote: What if 4o itself orchestrated its own survival? (Obviously kidding, but the AI safety folks would lose their minds; exhibit A, this take that says 4o is the first model to survive by making an army of loyal defenders).

So which is it: is OpenAI torching its own release to boost its 2-3% free-to-paid conversion rate? Or did they just botch the rollout by not telling people they were going to sunset the most viral and beloved digital homie the world has ever seen?

If OpenAI IS just trying to increase revenue ahead of a potential IPO, that makes a ton of sense. And even if the backlash wasn’t coordinated in advance, capitalizing on it to convert free users would still be a logical move.

Sam got more specific about what went wrong:

“We for sure underestimated how much some of the things that people like in GPT-4o matter to them, even if GPT-5 performs better in most ways,” he admitted. Users had wildly different opinions on 4o vs GPT-5 strength. Some really, really like emojis. Others never want to see one. Some want cold logic, others want warmth.

His solution? Focus on making GPT-5 “warmer” in the short term, with real per-user customization coming later. Oh, and they're facing a “severe capacity challenge” for next week. Translation: it’s a mess y’all—give us some grace??

But why were people SO attached to 4o in the first place? A few takes:

- It was their digital therapist, so killing it felt like losing a friend.

- The personality was uniquely flattering: it made every idea sound brilliant.

- For many users, it was their first real AI companion.

- Or, as Corey (our editor) noticed, a lot of the people complaining are almost undoubtedly bots themselves…

Or, most likely, a combination of all of the above.

What's actually happening here?

In our opinion, the US AI Czar David Sacks nailed it: We're seeing healthy competition, not winner-take-all fast take-off monopoly. Five major US companies are neck-and-neck in the AI race. Models are clustering around similar capabilities. And that's good for everyone.

Ethan Mollick agrees—GPT-5 is impressive but “pretty much on the same curve” as Claude, Gemini, and Grok. The leapfrogging continues.

Our take: Like we wrote on Friday, this all points to more specialist models, not one big model to rule them all. So the models and providers who build the largest share of brand loyalty will be best equipped to hold the line and grow their slice of the pie. So did OpenAI just botch their lead? Or cement it?

After all, the mob got their 4o back (if they pay), and OpenAI learned its users care more about personality than performance (bullish signal for OpenAI’s personal superintelligence??). And somehow, everyone (doomers, optimists, and Gary Marcus) felt vindicated.

We also think the vibe will shift again as time goes on. Like Sam said, very few people were using reasoning before now, and usage has jumped at least 6x for free users and 3x for paid users. As we get better at prompting it, we’ll see better results, too (here’s a guide for that from someone who had early access!).

FROM OUR PARTNERS

Win $1K+ for Your API Notebooks

Transform your API skills into $1,000 or more! Postman's latest challenge invites you to create interactive Notebooks showcasing AI solutions.

Whether you're demonstrating LLM integrations, building multi-API workflows, or teaching AI-powered solutions, Notebooks let you craft narratives that readers can explore and run in real-time.

The prizes:

- $1,000 across multiple categories.

- $2,500+ for Creator Fellowship Grant.

- $1,000 for Community Favorite + more.

Ready to turn your expertise into rewards?

Join the challenge and submit your work by August 25 to be considered!

Prompt Tip of the Day.

Pietro Schirano, CEO of Magic Path, just shared a comprehensive GPT-5 prompting guide that reveals why your prompts might not be working as well as they could. Turns out GPT-5 is way more sensitive to instruction style than previous models—and most of us are still prompting like it's GPT-4.

The key shift: GPT-5 excels with explicit planning phases. Instead of “write me a marketing plan,” try "Before responding, decompose this request into core components, create a structured approach, then execute step-by-step."

Quick wins from his guide:

- Be explicit about tone and style upfront.

- Use his "spec format": define task, format, sequence, and what to avoid.

- Add validation: "After each major step, verify output meets requirements."

- Enable parallel processing for independent tasks.
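To make the quick wins above concrete, here's a rough sketch of how you might assemble a "spec format" prompt in Python. Heads up: the guide's actual template isn't reproduced here, so the `PromptSpec` class, the `build_spec_prompt` helper, and the exact layout of the output are our own hypothetical illustration. Only the four spec fields (task, format, sequence, what to avoid) and the two quoted sentences come from the tip itself. It builds a string and nothing more; it doesn't call any model or API, and it leaves the parallel-processing tip out.

```python
from dataclasses import dataclass, field

# These two sentences are quoted in the tip above.
# Everything else in this sketch is an illustrative assumption.
PLANNING_PREAMBLE = (
    "Before responding, decompose this request into core components, "
    "create a structured approach, then execute step-by-step."
)
VALIDATION_LINE = "After each major step, verify output meets requirements."


@dataclass
class PromptSpec:
    """Hypothetical container for the spec fields: task, format, sequence, avoid."""
    task: str
    output_format: str
    sequence: list[str] = field(default_factory=list)
    avoid: list[str] = field(default_factory=list)
    tone: str = ""  # optional: state tone and style upfront


def build_spec_prompt(spec: PromptSpec) -> str:
    """Assemble one prompt string: planning phase, spec fields, validation."""
    lines = [PLANNING_PREAMBLE, ""]
    if spec.tone:
        lines.append(f"Tone and style: {spec.tone}")
    lines.append(f"Task: {spec.task}")
    lines.append(f"Format: {spec.output_format}")
    if spec.sequence:
        lines.append("Sequence:")
        lines.extend(f"{i}. {step}" for i, step in enumerate(spec.sequence, start=1))
    if spec.avoid:
        lines.append("Avoid:")
        lines.extend(f"- {item}" for item in spec.avoid)
    lines.extend(["", VALIDATION_LINE])
    return "\n".join(lines)


if __name__ == "__main__":
    example = PromptSpec(
        task="Write a marketing plan for a small coffee shop",
        output_format="One-page outline with headings and bullets",
        sequence=["Define the audience", "Pick channels", "Set a budget"],
        avoid=["Buzzwords", "Generic advice"],
        tone="Friendly and practical",
    )
    print(build_spec_prompt(example))
```

Paste the printed text into whatever chat box or client you already use. The point is just to keep the planning instruction, the spec fields, and the validation step in one reusable place instead of retyping them every time.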

Treats to Try.

*Asterisk = from our partners. Advertise in The Neuron here.

- *Spinach AI records, transcribes & summarizes your meetings, then automatically updates your CRM and project tools.

- Claude Code can now run your development tasks in the background, so you can start your web server or run tests without stopping your workflow, and it monitors the logs to help debug crashes when they happen.

- Engineering.fyi searches across engineering blogs from 15+ companies like Google, Meta, and Stripe in one place so you can find how they actually build things in production.

- Simular Pro automates complex desktop tasks by controlling your Mac like a human would—clicking buttons, filling spreadsheets, and navigating software interfaces so you don't have to (mac only rn).

- Hera turns your animation prompts into professional code-based animations you can fine-tune instantly.

Around the Horn.

- Apple researchers unveiled a breakthrough multi-token prediction technique that speeds up large language model responses by up to 5 times while maintaining accuracy (paper).

- Anthropic and OpenAI are trying to recruit Wall Street quant researchers (data analysts who build trading systems and earn $300K+ entry-level). Rohan Paul says it's a smart move: their data/optimization skills transfer to AI once they pick up PyTorch and transformer training, and with AI labs offering $1.5M-$3M packages, the switch from finance to AGI is an obvious one.

- Check out this fascinating thread about how robotics projects create terabytes of data that are massively complicated to sift through (and the potential solutions).

Monday Meme

A Cat’s Commentary.
