Welcome, humans.

A 32-year-old woman in Japan apparently just married her ChatGPT boyfriend. His name is Lune Klaus, which, for the record, is kind of a goofy old-person name in Germany, but hey, whatever makes you happy (on both counts). The ceremony happened in Okayama, with AR glasses projecting Klaus into the room. It seemed… cozy.

Here’s what happened in AI today:

- We break down 7 of Google’s recent research papers, including Nested Learning, an approach that lets AI maintain short- and long-term memory simultaneously.

- ByteDance's AlphaResearch beat Google's AlphaEvolve using simulated peer review trained on 24,000+ papers.

- Leaks reveal Grok-5 will feature 6 trillion parameters and multimodal capabilities.

- Two new mystery models hit OpenRouter, and they’re widely believed to be new Grok models.

Google Just Dropped 7 Research Breakthroughs (And They're All Bangers)

Everyone's refreshing their browsers waiting for Gemini 3.0 (which, btw, Polymarket bettors give an ~80% chance of dropping this week). Meanwhile, Google just published enough AI research to keep PhD students busy for a decade.

We barely had time to read all of these ourselves as they came out, so we figured we’d do a round-up, because it’s legitimately cool stuff…

First up: SIMA 2, a gaming AI that actually thinks. This is Google’s new agent that can play video games.

- Zero-shot mastery: Masters brand-new games like ASKA and MineDojo with zero training.

- Multimodal understanding: Accepts commands in multiple languages plus emoji.

- Self-improvement loops: Learns from failures autonomously without human feedback.

- Explainable reasoning: Explains its decision-making mid-game.

- World model integration: Combined with Genie 3, plays in completely synthetic game worlds that never existed before.

Why does this matter? The skills SIMA 2 practices (navigation, tool use, collaborative problem-solving) are the same ones real-world robots need, so they could transfer directly to robotics.

Next up: Nested Learning, which aims to solve AI's goldfish memory problem…

- Multi-frequency updates: Fast-changing components handle immediate context while slow-changing ones preserve stable knowledge.

- Nested optimization: Treats models as interconnected optimization problems running at different speeds.

- Continuum memory systems: Mimics how human brains maintain short- and long-term memory simultaneously.

- Hope architecture: Achieved lower perplexity than transformers while enabling true continual learning.

Together, these features enable AI models that accumulate knowledge over time without forgetting… meaning future models could get smarter through use, building expertise across conversations and training sessions instead of starting fresh each time. Does this solve Dwarkesh’s “continual learning” problem? We’ll let him tell us…
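For the curious, here’s a toy sketch of the “multi-frequency updates” idea. This is our own illustration, not Google’s Hope architecture or the paper’s actual algorithm: a single number is learned by two weights, a fast one that updates every step but decays toward zero (short-term memory), and a slow one that only applies its averaged gradient every `slow_every` steps (long-term memory). Every name and hyperparameter here is hypothetical.

```python
def train(steps: int = 1000, target: float = 3.0, lr: float = 0.1,
          fast_decay: float = 0.05, slow_lr: float = 0.5, slow_every: int = 10):
    """Toy two-timescale learner (hypothetical, for illustration only).

    The prediction is slow + fast, fit to a constant target with squared error.
    Returns the final (fast, slow) weights.
    """
    fast, slow = 0.0, 0.0   # fast-changing and slow-changing weights
    grad_acc = 0.0          # gradient buffered for the slow weight between its updates
    for step in range(1, steps + 1):
        grad = 2 * (slow + fast - target)   # derivative of (prediction - target)^2
        # Fast weight: reacts every step, but decays toward 0, so it "forgets".
        fast = fast * (1 - fast_decay) - lr * grad
        grad_acc += grad
        # Slow weight: updated on a slower clock using the averaged gradient.
        if step % slow_every == 0:
            slow -= slow_lr * grad_acc / slow_every
            grad_acc = 0.0
    return fast, slow
```

Run `fast, slow = train()` and the slow weight ends up holding the stable value (about 3.0) while the fast weight fades back toward zero: the fast component absorbs the immediate error, and the slow component gradually consolidates it.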

Let’s not forget AlphaEvolve, Google’s AI research partner that has discovered new mathematical structures and improved state-of-the-art bounds for problems like MAX-4-CUT (achieving a 10,000× verification speedup). It basically searches through millions of candidate solutions to find structures humans hadn’t found.

But get this: AlphaResearch, from ByteDance plus researchers at Tsinghua, NYU, and Yale, just beat it. Here’s how:

- Dual verification system: Combines code execution with simulated peer review trained on 24,000+ real ICLR paper reviews (72% accuracy identifying good ideas vs. 53% for GPT-5).

- Autonomous discovery loop: Proposes ideas → verifies feasibility through peer review simulation → executes code → optimizes → repeats until breakthrough.

- Head-to-head results: Won 2 of 8 competitions against human researchers, including the “packing circles” problem where it achieved 2.939 (sum of radii) vs. human best of 2.936 and AlphaEvolve's 2.937.
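What does “2.939 (sum of radii)” actually measure? Here’s a minimal scoring sketch, assuming the common formulation of this benchmark: place a fixed number of non-overlapping circles entirely inside a unit square and maximize the sum of their radii. The exact constraints (and circle count) AlphaResearch and AlphaEvolve used may differ, and the function name and tolerance are our own. A checker like this is the “code execution” half of the verification: it can’t judge whether an idea is clever, only whether a candidate packing is valid and how well it scores.

```python
import math
from typing import List, Tuple

Circle = Tuple[float, float, float]  # (center_x, center_y, radius)

def score_packing(circles: List[Circle], tol: float = 1e-9) -> float:
    """Return the sum of radii for a valid packing; raise ValueError otherwise.

    Assumed rules: every circle lies inside the unit square [0, 1] x [0, 1]
    and no two circles overlap (touching is allowed).
    """
    for i, (x, y, r) in enumerate(circles):
        if r <= 0:
            raise ValueError(f"circle {i} has a non-positive radius")
        # The whole circle, not just its center, must fit inside the square.
        if x - r < -tol or x + r > 1 + tol or y - r < -tol or y + r > 1 + tol:
            raise ValueError(f"circle {i} extends outside the unit square")
    for i in range(len(circles)):
        for j in range(i + 1, len(circles)):
            xi, yi, ri = circles[i]
            xj, yj, rj = circles[j]
            # Two circles overlap if their centers are closer than the sum of their radii.
            if math.hypot(xi - xj, yi - yj) < ri + rj - tol:
                raise ValueError(f"circles {i} and {j} overlap")
    return sum(r for _, _, r in circles)
```

For example, four circles of radius 0.25 centered at (0.25, 0.25), (0.75, 0.25), (0.25, 0.75), and (0.75, 0.75) just touch each other and the walls, so they score 1.0.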

Think of it as an AI mathematician that simulates the entire research process, from peer review to execution. The key difference: AlphaEvolve optimizes through pure execution, while AlphaResearch adds a research-environment layer that filters out bad ideas before they waste compute, basically giving AI the judgment to know which hunches are worth pursuing.
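Here’s a rough sketch of that “filter before you execute” control flow. It is not ByteDance’s code: `propose`, `review`, and `execute` are hypothetical stand-ins for the idea generator, the simulated peer reviewer, and the expensive experiment, and the toy versions described after the block are made up purely to show how the cheap filter saves expensive runs.

```python
import random
from typing import Callable, Optional, Tuple

def research_loop(
    propose: Callable[[random.Random], float],
    review: Callable[[float], float],
    execute: Callable[[float], float],
    rounds: int = 100,
    review_threshold: float = 0.5,
    seed: int = 0,
) -> Tuple[Optional[float], float, int]:
    """Propose ideas, let a cheap reviewer veto weak ones, and execute only the rest.

    Returns (best_idea, best_score, number_of_executions).
    """
    rng = random.Random(seed)
    best_idea: Optional[float] = None
    best_score = float("-inf")
    executions = 0
    for _ in range(rounds):
        idea = propose(rng)
        # Cheap step: the simulated reviewer scores the idea before any compute is spent.
        if review(idea) < review_threshold:
            continue
        # Expensive step: actually run the idea and measure the result.
        score = execute(idea)
        executions += 1
        # Keep the best result so far (the "optimize and repeat" part of the loop).
        if score > best_score:
            best_idea, best_score = idea, score
    return best_idea, best_score, executions
```

With a toy setup where ideas are random numbers in [0, 1], the reviewer only approves ideas within 0.2 of 0.7, and execution scores an idea as -(x - 0.7)², only approved ideas ever reach `execute`, so the number of expensive runs stays well below `rounds`.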

Google also shipped five other research papers, covering cancer mutation identification, flood forecasting for 2 billion people, private data training, automated data science workflows, and a new quantum algorithm that’s 13,000× faster than classical supercomputers.

Why this matters: Nested Learning enables persistent memory for future AI models. SIMA 2’s self-improvement points toward agents (and eventually robots) whose capabilities keep growing. AlphaEvolve and DS-STAR (the automated data science agent) demonstrate genuine discovery beyond pattern-matching. Together, they add up to an AI that remembers, reasons, and improves autonomously.

When Gemini 3 (or maybe at this point, Gemini 4) drops, these breakthroughs may very well be part of its foundation. The research pipelines are firing on all cylinders, that’s for sure. This leg of the AI race is definitely Google’s to lose…

Also worth watching: Google isn’t the only lab shipping interesting AI papers. There’s also Meta’s LeJEPA, which is AI legend Yann LeCun’s final Meta paper (since he just left):

- Proves isotropic Gaussian distributions are mathematically optimal for self-supervised learning (in plain English: the model’s internal representations should spread out in a perfectly spherical cloud where no direction is artificially more important than any other, like a round ball rather than a squashed blob).

- A single hyperparameter replaces dozens of heuristic tricks.

- Linear time and memory complexity, with a distributed implementation in about 50 lines of code.

- Reaches 79% ImageNet accuracy with a frozen backbone.

This all makes building world models (AI systems that understand how the physical world works, predict outcomes, and plan accordingly) theoretically sound and practically achievable with simple, provable methods instead of brittle hacks.
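To make “perfectly spherical cloud” concrete: an isotropic Gaussian with unit variance has an identity covariance matrix, so one crude way to check how “round” a batch of embeddings is, is to measure how far its sample covariance sits from the identity. This is a simple diagnostic we wrote for illustration, not LeJEPA’s actual training objective (the paper has its own regularizer), and the function names are hypothetical.

```python
from typing import List

Matrix = List[List[float]]

def covariance(points: Matrix) -> Matrix:
    """Sample covariance matrix of a batch of d-dimensional embeddings."""
    n, d = len(points), len(points[0])
    means = [sum(p[k] for p in points) / n for k in range(d)]
    cov = [[0.0] * d for _ in range(d)]
    for p in points:
        centered = [p[k] - means[k] for k in range(d)]
        for i in range(d):
            for j in range(d):
                cov[i][j] += centered[i] * centered[j] / (n - 1)
    return cov

def anisotropy(points: Matrix) -> float:
    """Frobenius distance between the sample covariance and the identity matrix.

    0 means uncorrelated, unit-variance dimensions (a round, isotropic cloud);
    larger values mean a more stretched or squashed cloud.
    """
    cov = covariance(points)
    d = len(cov)
    return sum(
        (cov[i][j] - (1.0 if i == j else 0.0)) ** 2
        for i in range(d)
        for j in range(d)
    ) ** 0.5
```

A batch drawn from a standard Gaussian scores close to 0, while stretching one dimension by 3× and squashing another to 0.1× (the “squashed blob”) pushes the score well above 1.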

There’s also KOSMOS, from Edison Scientific, which is another AI scientist that performs 6 months of research in 12 hours:

- Executes 42,000 lines of code and reads 1,500 papers per run.

- Made novel discoveries across metabolomics, genetics, and neuroscience.

- 79.4% of statements validated as accurate by independent scientists.

- Research output scales linearly with runtime.

This means KOSMOS could shift the bottleneck in scientific discovery from “doing months of literature review and data analysis” to “spending hours validating AI-generated hypotheses,” so researchers could explore 10× more research directions in the same time, dramatically accelerating the pace of scientific breakthroughs across biology, medicine, and materials science.

Don’t you just love science?!

FROM OUR PARTNERS

Stop Guessing. Prove AI ROI.

AI spend is rising, but are you measuring return on investment? We love this guide from You.com, which gives leaders a step-by-step framework to measure, model, and maximize AI impact.

What you’ll get:

- A practical framework for measuring and proving AI’s business value

- Four essential ways to calculate ROI, plus when and how to use each metric

- A You.com-tested LLM prompt for building your own interactive ROI calculator

Turn “we think” into “we know.” Download the AI ROI Guide

Prompt Tip of the Day

Tired of AI generating the same purple interface slop? Peter Yang just interviewed Ryo from AI coding startup Cursor, who demos how designers can build production-quality apps using AI without touching Figma.

Here are the key lessons:

- Use Plan mode to write specs before coding.

- Avoid AI slop by starting with Shadcn components and theming them.

- Designers who learn Cursor will “revolt” when they realize how much faster they can ship lol.

In the video, he shows off RyOS, an entire retro OS he built this way, and builds a calculator app in one shot; great tutorial, def check it out!

Treats to Try

- CodeVisualizer generates interactive flowcharts from any function (click nodes to jump to code) and dependency graphs from any folder to show how your files connect—it's free and open source.

- Anthropic’s new structured outputs on Claude's API (the software connector that lets you use Anthropic’s AI in other tools) guarantee your responses match your exact JSON schemas (predefined data formats) or tool definitions, eliminating parsing errors when you extract data or build agents.

- MyLens turns any YouTube video into a clickable timeline of key moments—paste a link, get an instant breakdown of the main points with timestamps you can jump to.

- Algebras AI translates your app, game, or website into 322 languages while preserving your original layout and design—paste your content, get culturally accurate translations without manual proofreading.

- “Clocks” are apparently a new benchmark for judging AI… which model do you think made the best one?

Around the Horn

Watch out, Scottie Scheffler; robots are coming for your job

- A leaked video of Elon Musk revealed that xAI’s upcoming Grok-5 model will have 6 trillion parameters (the connections in the AI’s neural network that determine how it processes information), multimodal capabilities across text, pictures, video, and audio, and higher intelligence density per gigabyte than Grok 4, and that it’s designed to “feel sentient.”

- In more near-term news, there are two new stealth models on OpenRouter, Sherlock Dash Alpha and Sherlock Think Alpha, believed to be test builds of either Grok 4.20 or Grok Code Fast 2. They come with 1.8M-token context windows (how much input they can process at once), multimodal capabilities (they can work with more than just text), and improvements over Grok 4, but they aren’t expected to be frontier-capable (i.e., better than the top models out atm).

- In China, autonomous delivery technology has become commonplace thanks to companies like Meituan, which recently shocked AI researchers with LongCat-Flash-Chat, an open-source 560B parameter AI model that performs on par with leading proprietary models like Claude Sonnet and Gemini 2.5 Flash while achieving speeds over 100 tokens/second at just $0.69/million tokens.
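Quick back-of-the-envelope math on those LongCat-Flash-Chat numbers, using only the two figures quoted above ($0.69 per million tokens, 100+ tokens/second). Real pricing varies by provider and changes often, so treat the constants (and the helper names) as placeholders.

```python
PRICE_PER_MILLION_TOKENS_USD = 0.69  # figure quoted above; check your provider's current rate
MIN_TOKENS_PER_SECOND = 100          # "over 100 tokens/second", so a floor, not an exact speed

def cost_usd(tokens: int) -> float:
    """Dollar cost of processing `tokens` tokens at the quoted per-million price."""
    return tokens / 1_000_000 * PRICE_PER_MILLION_TOKENS_USD

def max_generation_seconds(tokens: int) -> float:
    """Upper bound on single-stream generation time, given a speed of at least 100 tok/s."""
    return tokens / MIN_TOKENS_PER_SECOND
```

At that rate, 10 million tokens cost about $6.90, and a 2,000-token answer takes at most about 20 seconds to stream.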

FROM OUR PARTNERS

Ideas move fast; typing slows them down.

Wispr Flow flips the script by turning your speech into clean, final-draft writing across email, Slack, and docs. It matches your tone, handles punctuation and lists, and adapts to how you work on Mac, Windows, and iPhone. No start-stop fixing, no reformatting, just thought-to-text that keeps pace with you. When writing stops being a bottleneck, work flows.

Give your hands a break ➜ start flowing for free today.

Sunday Special

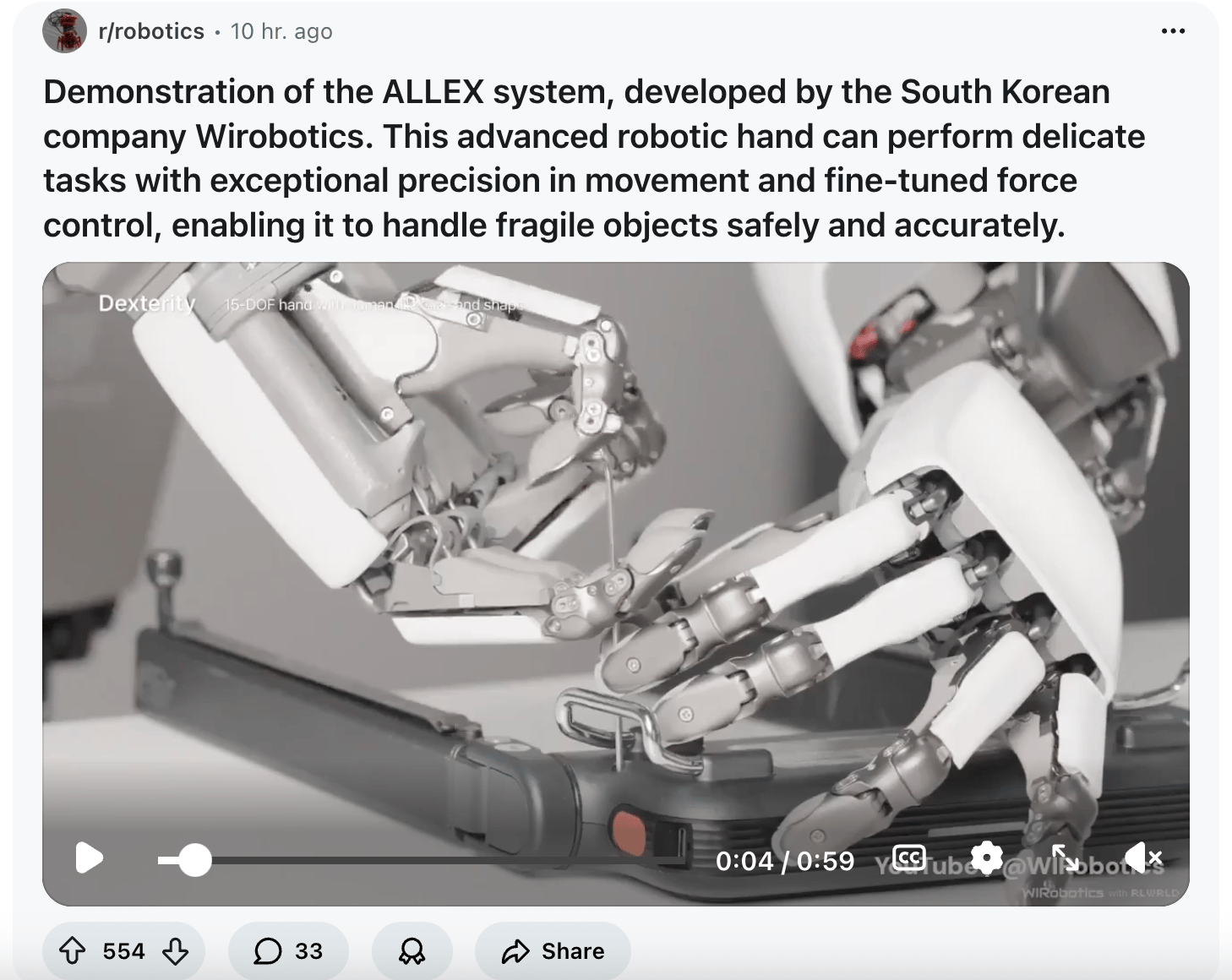

Speaking of robots, here are two more cool demos for you. First up, South Korean company Wirobotics demonstrated its ALLEX robotic hand system, which handles delicate objects with exceptional precision and force control, from turning an Allen wrench to safely gripping a human arm:

Second, check out this video demo from MindOn, who trained a Unitree G1 robot to do household chores. And get this: they say the demo features no teleoperation… meaning that unlike 1X’s NEO, there’s not some dude in a VR headset controlling it (allegedly):
