Welcome, humans.

Wanna see a wild chart?

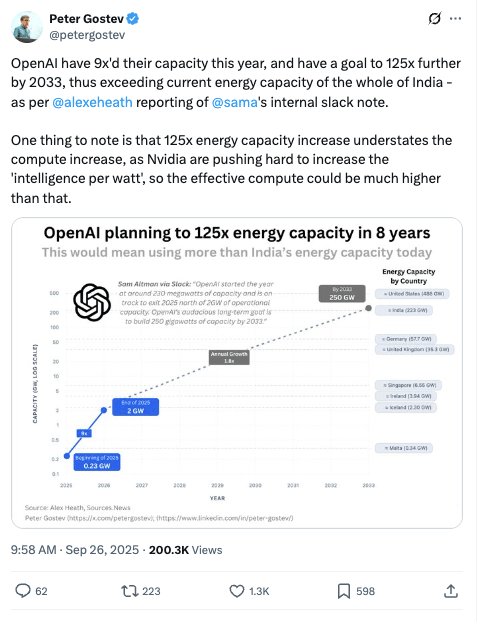

OpenAI 9x'd their compute capacity just this year. But that's apparently just the warm-up act.

According to Alex Heath's reporting on Sam Altman's internal Slack messages, OpenAI wants to 125x their capacity again by 2033. That would put their energy consumption above that of the entire country of India, home to 1.4 billion people.

For context, that's like saying “Hey, we're gonna use more electricity than a nation that has more people than the US, Europe, and Canada combined.” Just another Tuesday at OpenAI!

Peter Gostev says this 125x number actually understates the compute increase, since NVIDIA keeps making chips more efficient. So we're talking about way more than 125x the actual AI horsepower. Somewhere, a fusion power researcher just felt their phone buzzing with 47 missed calls from Silicon Valley…

Here’s what happened in AI today:

- RL pioneer Richard Sutton called LLMs a dead end for AGI.

- Apple built internal ChatGPT rival for next-year Siri upgrade.

- CoreWeave expanded OpenAI infrastructure deals to $22.4B.

- Meta launched Vibes AI video feed for remixable clips.

This Turing Award winner says LLMs are a dead end.

Richard Sutton, who wrote The Bitter Lesson and is widely considered “the Father of RL” (reinforcement learning), just won the AI industry’s Nobel Prize (the Turing Award) for that work. You'd think he'd be celebrating how his ideas power everything from AlphaGo to self-driving cars. Instead, he's telling anyone who'll listen that the entire LLM (large language model) revolution is barking up the wrong tree.

In a new interview with Dwarkesh Patel, Sutton argues that models like ChatGPT have a fundamental flaw: they learn to predict what humans would say, not what actually happens in the world. It's the difference between reading every cookbook ever written and actually learning to cook by burning a few soufflés.

Here's his core argument:

- LLMs have no real goals (next-token prediction doesn't count).

- They can't be surprised by outcomes or learn from consequences.

- They’re mimicking intelligence, not building it from scratch.

Think about how you learned to ride a bike. No one gave you a trillion examples to study. You got on, wobbled, fell, adjusted, and eventually figured it out. That’s trial and error with real consequences: what Sutton says AI needs.

His alternative: An agent that learns on the fly. He proposes a new architecture (which he calls OaK) that learns continuously from a stream of sensation, action, and reward. Forget massive training runs; this is about adapting on the job.
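To make "learning from a stream" concrete, here is a minimal sketch. It is not Sutton's OaK architecture (the interview summary above does not specify its internals); it swaps in plain tabular Q-learning on a made-up four-cell corridor, purely to show an agent that updates after every single step instead of in one big training run. The environment, the function names, and all the hyperparameters are assumptions for illustration.

```python
import random

N_STATES = 4      # hypothetical corridor: positions 0..3
ACTIONS = (0, 1)  # 0 = step left, 1 = step right


def step(state, action):
    """Toy world (an assumption, not from the interview): returns (next_state, reward)."""
    if action == 1:
        if state == N_STATES - 1:
            return 0, 1.0          # walked off the right end: reward, then back to start
        return state + 1, 0.0
    return max(state - 1, 0), 0.0  # moving left never pays


def run_stream(n_steps=20000, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    """Learn online from one continuous stream of sensation, action, and reward."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]  # value estimate per (state, action)
    state, total_reward = 0, 0.0
    for _ in range(n_steps):
        # Act: mostly greedy, sometimes explore; ties are broken at random.
        if rng.random() < epsilon:
            action = rng.choice(ACTIONS)
        else:
            best = max(q[state])
            action = rng.choice([a for a in ACTIONS if q[state][a] == best])
        # Sense the consequence and update immediately (no separate training phase).
        next_state, reward = step(state, action)
        target = reward + gamma * max(q[next_state])
        q[state][action] += alpha * (target - q[state][action])
        state = next_state
        total_reward += reward
    return q, total_reward


q_values, total = run_stream()
greedy = [max(ACTIONS, key=lambda a: q_values[s][a]) for s in range(N_STATES)]
print("greedy action per position:", greedy)
print("reward collected while learning:", total)
```

The point of the sketch is the shape of the loop: act, observe what actually happened, adjust, repeat. Nothing in it is told what a human would have done.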

The big takeaway: If Sutton is right, scaling GPT-6 to infinity won't get us to AGI. We'd need something fundamentally different. But this leads to a trillion-dollar question: what happens when we give an AI the ability to set its own goals?

Sutton’s vision is the path to an autonomous agent that develops its own motivations. This means the “flaw” of LLMs—their passive, goal-less imitation—might actually be their most important, albeit unintentional, safety feature. Read the rest.

FROM OUR PARTNERS

How Canva, Perplexity and Notion turn feedback chaos into actionable customer intelligence

Your first 100 customers knew you cared. You met them often and built the product with them, not just for them. Then, you scaled, creating distance between you and the very customers who enabled this growth. We call it the Scaling Paradox.

While the Scaling Paradox is inevitable, some of the most customer-centric companies like Canva, Notion and Perplexity use Enterpret to stay close to the voice of their customers.

Enterpret unifies all your customer interactions, auto-tags themes, surfaces insights and ties them to key business metrics like revenue and CSAT. This helps you turn feedback chaos into clearer priorities that drive roadmaps, retention and revenue.

→ Canva: instantly surfaces top requests from user feedback across 220 million users and 100+ languages.

→ Perplexity: gets real-time alerts on revenue-impacting issues, cutting diagnosis time by hours.

→ Notion: 4x faster user insights reporting.

→ Memrise: 71% reduction in support tickets, for a key issue flagged by Enterpret.

See how top teams turn feedback into action here.

Prompt Tip of the Day

We tried this trick recently and liked it: in the spirit of reducing “workslop”, try to ask your AI to “remove the top [you pick the number] lowest value sentences from what you just wrote while still maintaining 100% fidelity to the key ideas.” See what it takes out, and if that makes the work shorter and easier to read. Conversely, if it removes something that’s actually important to you, now you know it’s important!
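If you use this tip often, a tiny helper can fill in the number for you. This is just a sketch: `build_trim_prompt` is a hypothetical name, and it only builds the text to paste or send as a follow-up message; it does not call any AI service.

```python
def build_trim_prompt(n_sentences: int) -> str:
    """Return the follow-up prompt from the tip, with the sentence count filled in."""
    if n_sentences < 1:
        raise ValueError("n_sentences must be at least 1")
    return (
        f"Remove the top {n_sentences} lowest value sentences from what you just "
        "wrote while still maintaining 100% fidelity to the key ideas."
    )


print(build_trim_prompt(3))
```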

Treats to Try

*Asterisk = from our partners (only the first one!). Advertise in The Neuron here.

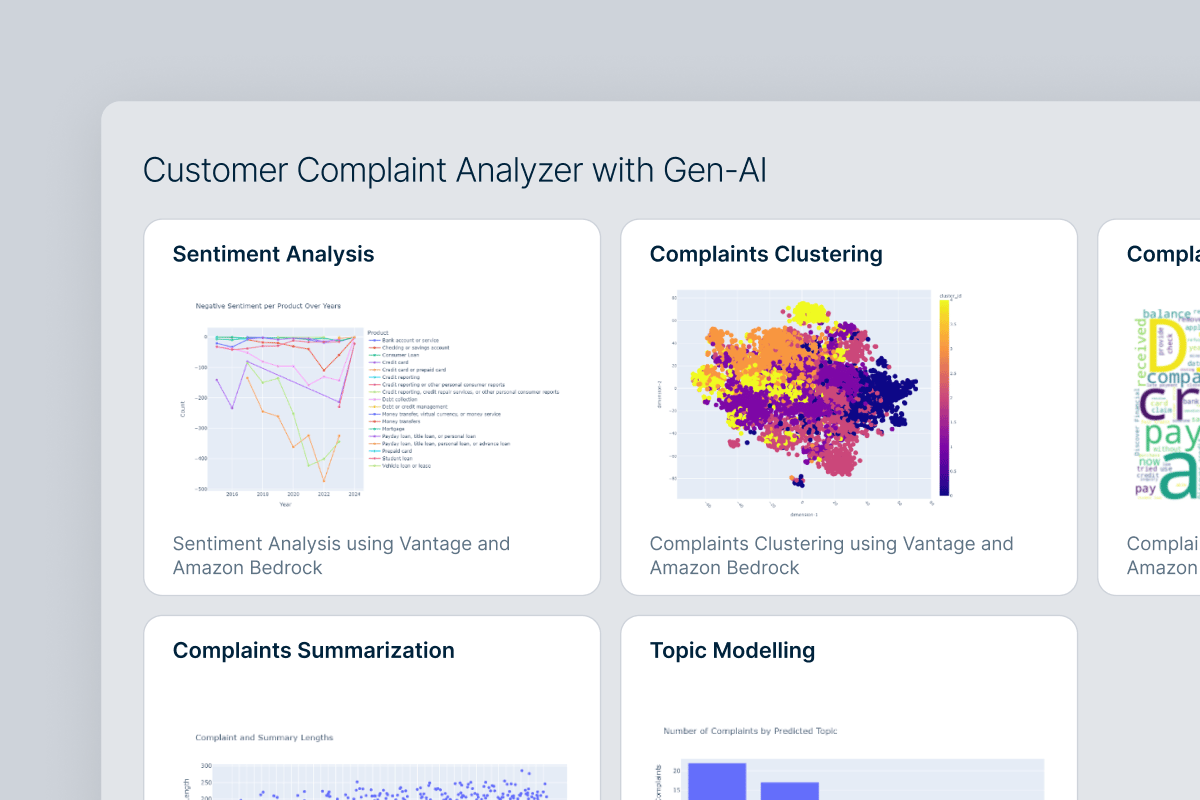

- *Drowning in complaint backlogs and messy customer feedback? In this live demo, see how VantageCloud with generative AI makes resolution faster and smarter. Watch now.

- Kimi’s OK Computer builds websites and dashboards from your conversations; it's like having a full dev team you can chat with (3 free sessions to try it out).

- Manus breaks down complex work into parallel tasks and executes them autonomously; give it a complex project and come back to find research completed, code written, websites deployed, slides made, you name it.

- LM Studio 0.3.27 adds find‑in‑chat and global search so you can jump to code or snippets fast—free to download.

- Wilson is a new legal superagent that drafts, edits, and reviews contracts end‑to‑end (free to try atm).

- Unlimited Higgsfield WAN brings Product‑to‑Video, Draw‑to‑Video, and Lipsync Studio together for Ultimate ($30/mo) and Creator ($150/mo) plans.

- Check out this hobbyist who shows how to run a tiny local agent on a Raspberry Pi 5 fully on‑device (code, blog).

- Dayflow builds a private timeline of your Mac activity (1 fps capture, 15‑min summaries) so you can recall your day—free, open source.

- Suno lets you type a prompt and generate full songs with vocals and lyrics.

Around the Horn

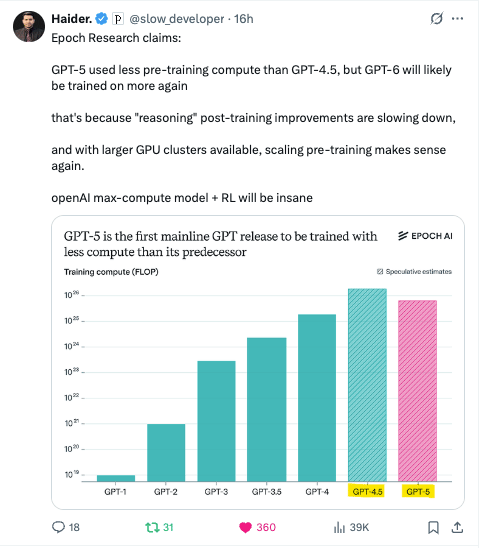

Related to the above debate? Research here.

- Apple built a ChatGPT rival to internally test its new “AI-first” Siri set to debut next year.

- xAI offered federal agencies Grok for 42¢ via a GSA deal, undercutting competitors.

- CoreWeave expanded OpenAI deals to $22.4B total, making CoreWeave central to OpenAI's infrastructure buildout.

- Clarifai announced a reasoning engine claiming faster, cheaper inference with 40% cost reduction.

- Spotify mandated AI labeling for music, banned unauthorized voice clones, and announced a 2025 spam filter after removing 75 million spam tracks last year.

- Google Cloud aggressively targeted early-stage AI startups with substantial incentives, growing market share to 13%.

- Meta launched an AI video feed called Vibes for remixable short clips using image models from Midjourney and Black Forest Labs.

FROM OUR PARTNERS

Your Unfair Advantage

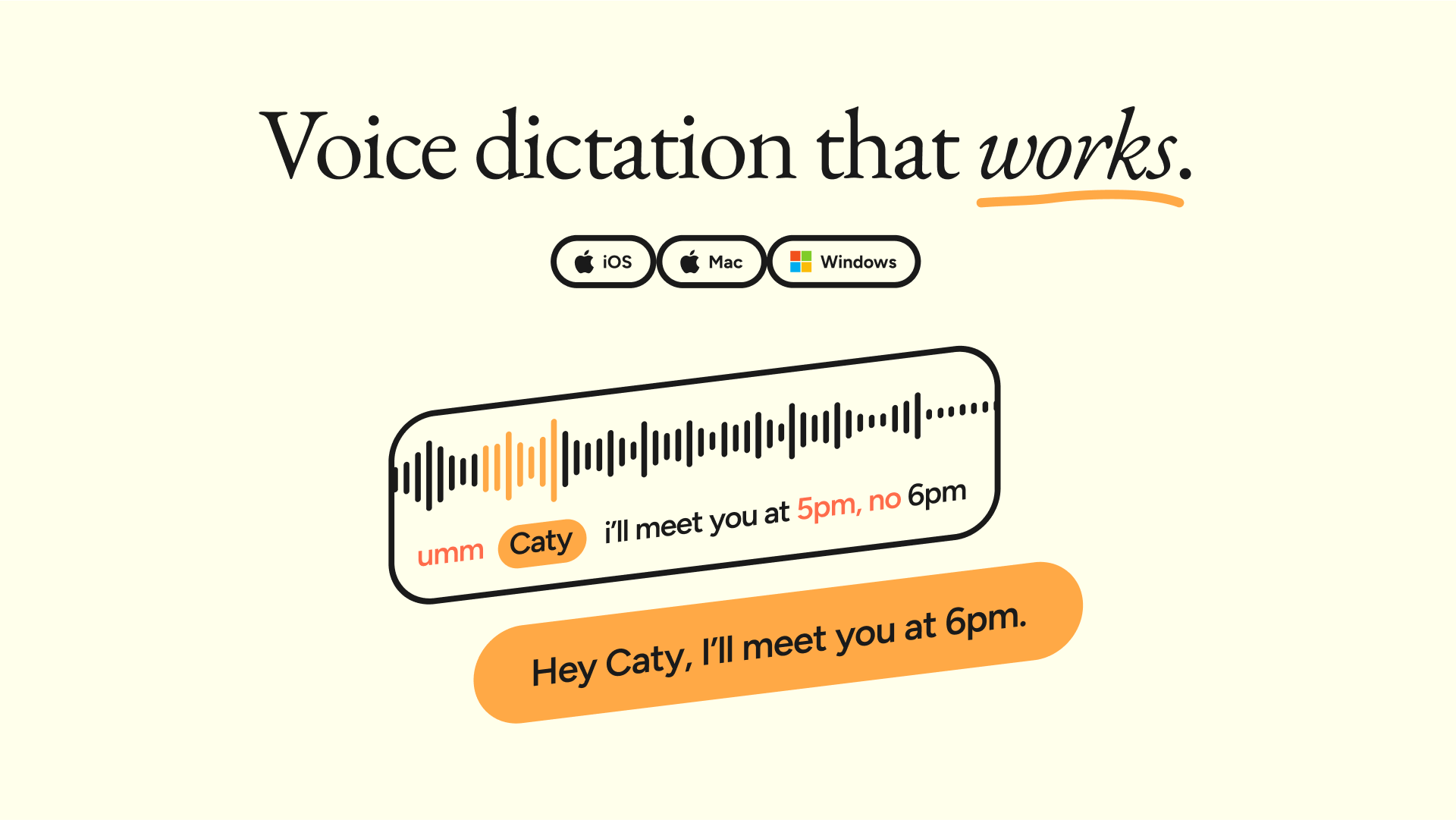

Typing slows you down. Wispr Flow turns natural speech into structured output across Gmail, Slack, Notion, and AI tools like ChatGPT or Claude. Work faster, stay responsive, and keep momentum. This isn’t old dictation software — it’s an AI-native productivity layer built for entrepreneurs, creators, and operators.

Sunday Special: Intelligent Insights

- Matt Berman (one of our FAVE AI YouTubers) had a great live show on Friday. Here are the parts we think you should absolutely listen to:

  - Mike Maples on what AI companies and ideas will succeed or fail, and what types of founders are most successful.

  - Ahmed El-Kishky (of the OpenAI team that just won the ICPC coding championship) on when AI will discover new knowledge and whether or not coding is the language of AGI.

  - Emad Mostaque on the last economy and the importance of a “verifier” on what AI can do (relevant for “continual learning”).

- Cory Doctorow wrote that “the AI econopocalypse is nigh”, and argues that AI is the “asbestos we are shoveling into the walls of our society” and that the only thing we can do about it is to puncture the AI bubble “as soon as possible.”

- Then again, as goes the AI bubble, so goes the rest of the economy (so says Deutsche Bank; Goldman Sachs has a longer, i.e., more bullish, view).

- Here are a few more YouTube videos we’re watching this weekend:

  - Anthropic Design Lead Meaghan Choi’s interview with Alex Albert on how they designed Claude Code to be so satisfying (as many still say, despite Codex being more competent atm, Claude Code feels better).

  - OpenAI Chief Scientist Jakub Pachocki and Chief Research Officer Mark Chen chat w/ A16Z about OpenAI’s new goal of creating a vibe researcher.

  - NVIDIA CEO Jensen Huang on Bg2 Pod, where he says he invested in OpenAI because he sees them as the next multi-trillion-dollar hyperscaler.

  - Emad (from above) on the Peter H. Diamandis pod, where he and the two hosts debate the AI bubble with the argument that we’re actually not in a bubble because of how useful AI actually is (and OpenAI’s GDPval paper provides further economic evidence to back that up).

A Cat’s Commentary

Who agrees with this? Let us know in “What’d you think of today’s email?” (Select “Additional Feedback” and leave a comment.)
