Welcome, humans.

Did you know there is not one, but multiple robot fight clubs in San Francisco? One such club, REK, operates from a warehouse where humanoid robots (each costing ~$100K) battle via pilots who control them via VR headsets.

Another, dubbed “UFB” (“ultimate fighting bots”) take place in downtown parking garages with hundreds of spectators watching robots box, sword-fight, and share their epic backstories. Nothing says “peak human achievement” quite like spending six figures to make robots beat the crap out of each other for our entertainment.

Speaking of entertaining public fights: Maybe Sam and Elon should settle their latest X feud (if you missed it, here’s the ultimate explainer) the old-fashioned way: another cage fight. Only this time, the robots can do it (so no crying chicken this time). Think about it: we'd finally get to see ChatGPT vs. Grok duke it out in person!

Here’s what happened in AI today:

- AI skeptic François Chollet halved his AGI timeline to 5 years.

- OpenAI added GPT-5 mode options and set 3K weekly message limits.

- Anthropic expanded Claude Sonnet 4 to 1M tokens with higher pricing.

- Figure's robot learned to fold laundry autonomously.

François Chollet, one of AI’s biggest skeptics, just cut his AGI timeline in half.

DEEP DIVE: Read our full breakdown on the website.

The creator of Keras and one of AI’s most influential thinkers, François Chollet, just shortened his AGI timeline from 10 years away to 5. But the reason why is what’s really interesting… and it’s not about scaling bigger models.

In a new talk with Dwarkesh Patel, Chollet revealed his optimism comes from a fundamental shift in AI capabilities. For years, he argued models were stuck in a “static” loop, just memorizing and reapplying templates.

Now, he says, we finally have AIs that show real “fluid intelligence” by adapting to novel problems at test time (a critical step toward true reasoning).

This is where his test, the new ARC-AGI-3 benchmark, comes in. ARC-AGI-3 is an “Interactive Reasoning Benchmark” that uses simple video games to measure an AI's ability to learn on the fly. The goal is to test “skill-acquisition efficiency”, or how quickly an AI can figure things out in a totally new environment, just like a human.

Here's what makes ARC-AGI-3 different:

- The benchmark is designed to be easy for humans (you should be able to pick it up in under a minute—try it yourself here!) but incredibly hard for current AI.

- Instead of static problems, AIs have to explore, plan, and act in about 100 unique game worlds.

- The AI gets dropped into a game with zero instructions, and it has to figure out the rules and goals entirely on its own through trial and error.

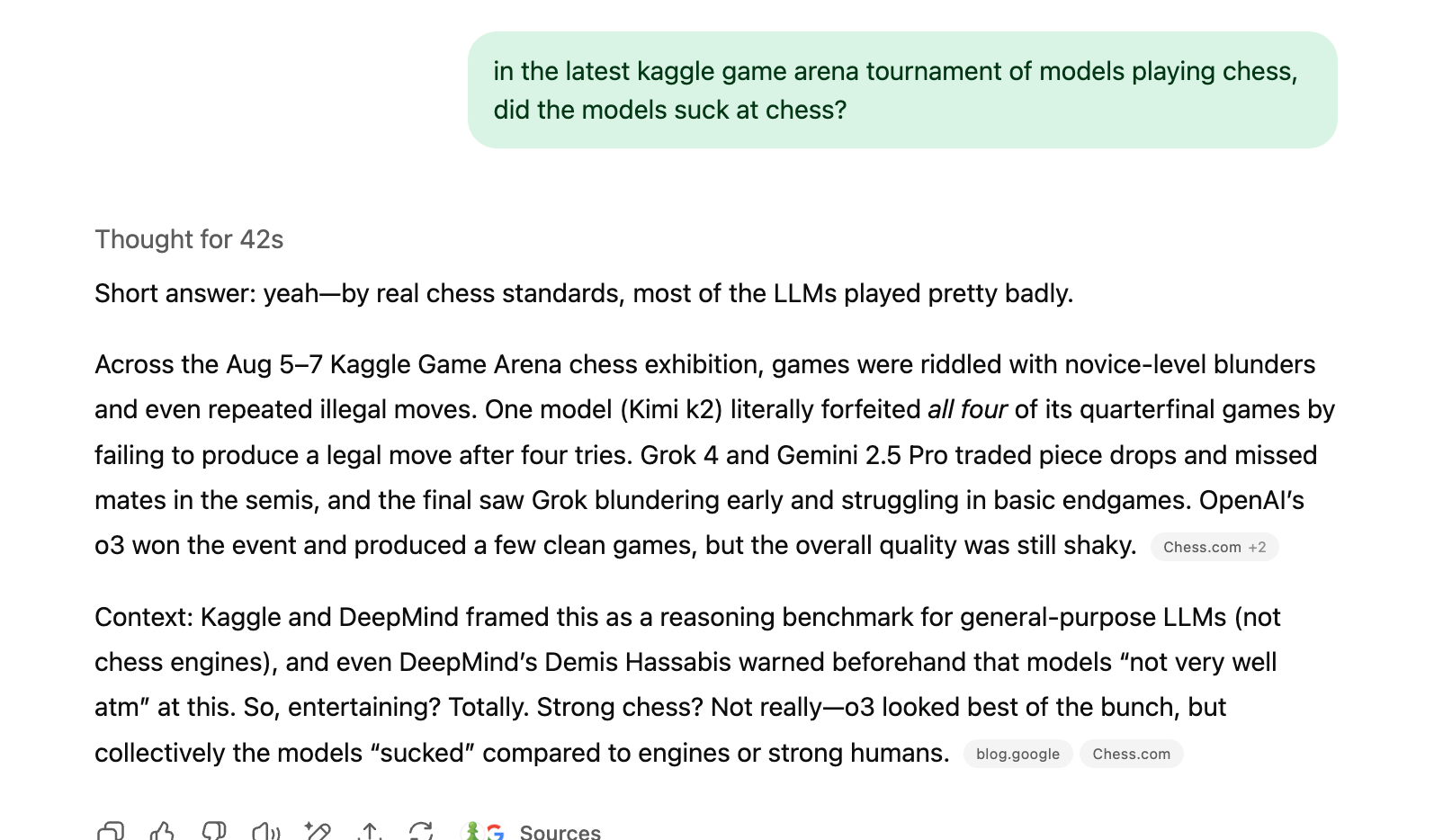

This reminds us of Google DeepMind’s Kaggle Game Arena, where you can watch AI models go head to head on games like chess and go (here’s the latest chess bracket, you can watch all the matches here, and see Chess World Champion Magnus Carlsen’s recap of the final match…although, from Magnus’ commentary, we got the sense the AI kinda sucked… so we asked GPT-5 to confirm!)

Why does it matter if AI are good at games? As Chollet says, “As long as we can come up with problems that humans can do and AI cannot, then we do not have AGI.”

So how do we close the gap? Chollet’s answer targets the biggest problem with AI today, one famously described by his host Dwarkesh Patel: today’s models are like a “perpetual intern on their first day.” They’re brilliant out of the box but never learn from experience.

Chollet’s proposed solution for this is basically a “GitHub for Intelligence”

Instead of just performing tasks, his theoretical AGI would follow a three-step loop to achieve true, compounding learning:

- Learn a new skill: An AI agent efficiently figures out how to solve a novel task.

- Decompose the solution: It then breaks that solution down into its core, reusable parts.

- Share with the network: It uploads these new reusable parts to a global library, making them instantly available to millions of other AI agents.

So the real game-changer isn't raw intellect, but collective learning. Chollet envisions a system where any skill learned by one agent becomes a permanent, instantly accessible building block for all others. While humans learn in isolation, this AGI would learn as a collective, compounding its knowledge at an incredible rate. Which as Dwarkesh said would basically be the singularity (where AI surpasses humans).

FROM OUR PARTNERS

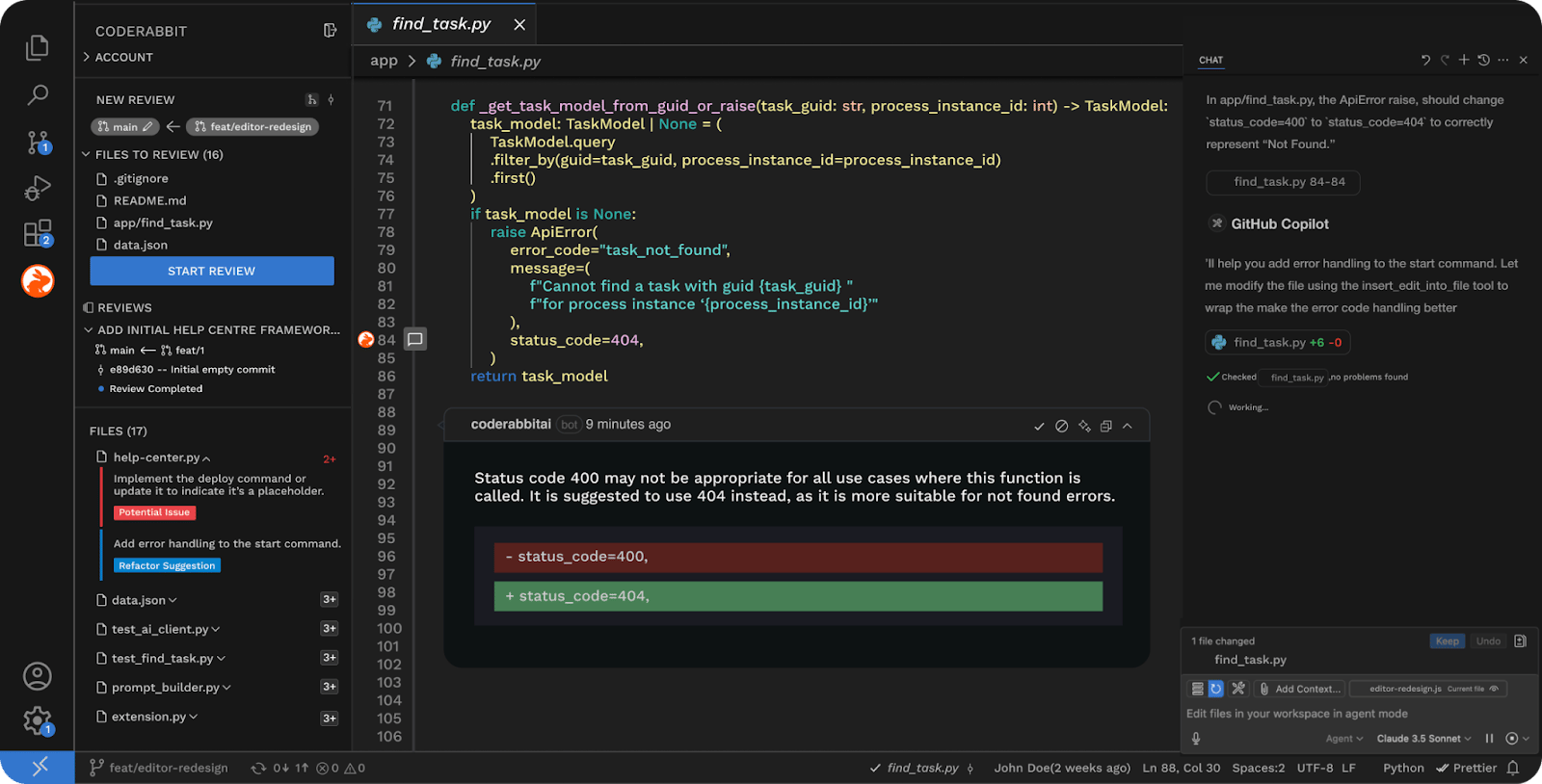

CodeRabbit’s Free Context-Aware AI Code Reviews in your IDE

Code Rabbit is the AI code review platform purpose built to speed up code reviews and improve code quality. Integrating seamlessly into git workflows and existing toolchains, it delivers context-aware reviews and supports all programming languages.

Now they are excited to deliver AI code reviews directly in VS Code, Cursor, and Windsurf–letting you and your team (vibe) code with confidence and review while keeping in flow.

Even better: code reviews in the IDE are free! That’s right: line-by-line reviews and one-click fixes, all in your IDE at no cost to you.

Install the extension and start vibe checking your code today!

Prompt Tip of the Day.

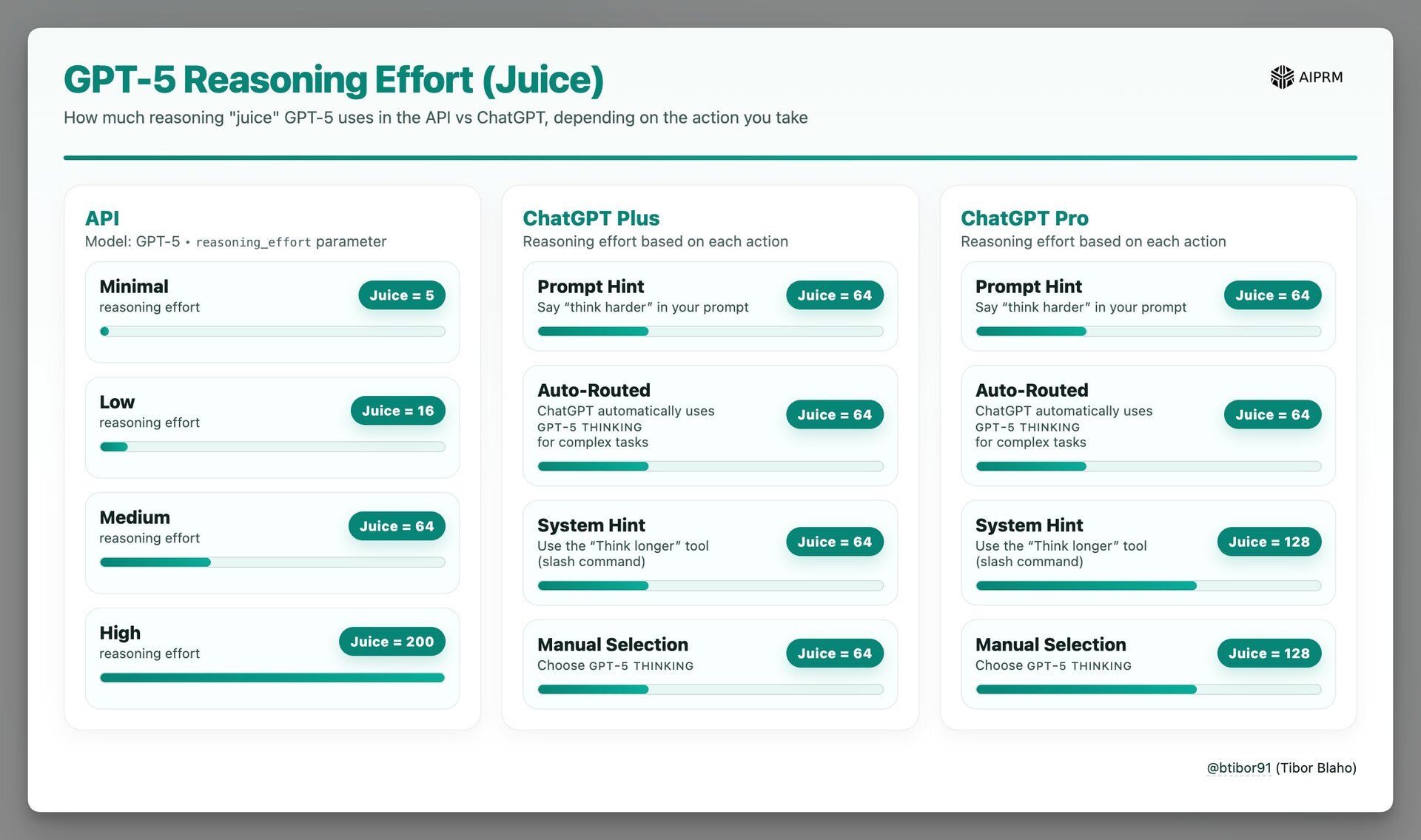

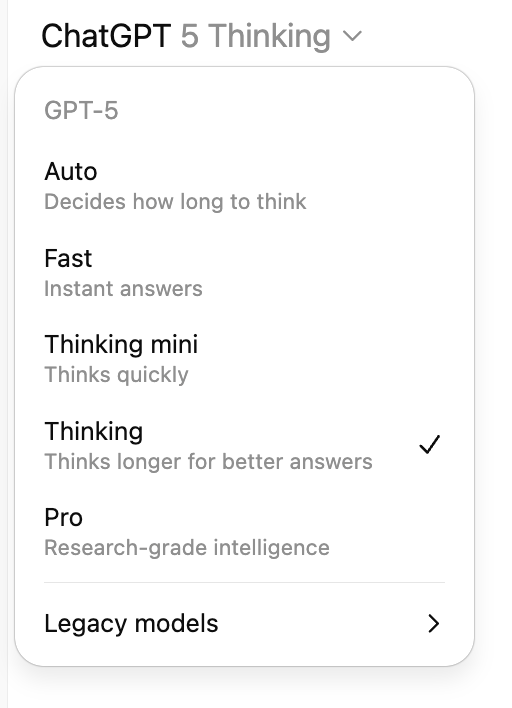

Want to unlock GPT-5's full reasoning power? Engineer Tibor Blaho just shared this handy infographic explaining how much “juice” (or “thinking power”, where higher juice number = more invisible “reasoning tokens”, and yes, that's literally what OpenAi calls it) you get when using the different thinking models and tiers.

Here's the breakdown: API users can dial up GPT-5's “reasoning juice” from 5 all the way up to 200, but ChatGPT Plus users are capped at just 64 juice—no matter what tricks you try. Even ChatGPT Pro users max out at 128 juice when manually selecting GPT-5 Thinking mode.

The wildest part? Whether you say “think harder” in your prompt, use the slash command, or manually select thinking mode in ChatGPT, you're still getting the same limited reasoning power.

What does this mean? If you need maximum reasoning for complex problems, the API's “high reasoning effort” setting gives you 3x more computational power than ChatGPT Pro and over 3x more than ChatGPT Plus.

Treats to Try.

*Asterisk = from our partners. Advertise in The Neuron here.

- *Incogni erases your sensitive data—like addresses and phone numbers—from the web. The internet remembers, but you can delete. Get 55% off with code NEURON.

- ChatGPT now lets you connect Gmail, Calendar, and Contacts to automatically reference them in chat via its Connectors feature.

- Cora Computer can now search through your entire email inbox to answer any question you have about your emails, like finding when your trip is scheduled or identifying which emails you tend to procrastinate on ($15 a month).

- Jan-v1 lets you search the web and conduct deep research locally on your computer, delivering answers with 91% accuracy as an open-source alternative to Perplexity Pro (use it with LM Studio).

- LFM2-VL runs vision and text processing locally on your device with 2x faster speed (again, use w/ LM Studio!).

- Mistral Medium 3.1 gives you better performance and smarter web search results when you chat with Le Chat or call it on the API.

Around the Horn.

Now you can choose between the different GPT-5 variants

- OpenAI updated ChatGPT with new GPT-5 mode options (Auto/Fast/Thinking), restored GPT-4o access, added model toggles for paid users, restricted GPT-4.5 to Pro subscribers, and set a new GPT-5 Thinking weekly rate limit of 3K messages a week.

- Anthropic expanded Claude Sonnet 4's context window to 1 million tokens but will charge higher rates ($6 input/$22.50 output per million tokens for prompts over 200K tokens); also, Simon Willison says you need to include “context-1m-2025-08-07 and be on tier 4, which means you have purchased at least $400 in API credits” to use this.

- Figure’s Helix robot learned to fold laundry on its own using the same AI system it previously used for warehouse tasks (video).

- Matt Berman did a great job recapping Sam Altman’s post about how emotionally attached people are becoming to their AI models.

FROM OUR PARTNERS

Inworld publicly releases its AI Runtime for massive-scale consumer applications

The AI Runtime driving top consumer AI apps from prototype to 1M users in < 30 days is now available publicly.

- Adaptive Graphs auto-scale workloads from 10 to 10M users. C++ speed, any model.

- Automated MLOps handles ops, telemetry, and optimizations automatically.

- Live Experiments run with one-click, no code changes.

Try Inworld Runtime. Easily integrates with your existing stack and all providers. Free through August.

Midweek Meme

A Cat’s Commentary.

.jpg)

.jpg)

.jpg)